The Information reported over the weekend that the rapid development of generative AI models appears to be hitting a bottleneck. Some experts say that simply increasing model parameters, training data, and computing power is becoming an ineffective way to boost model performance, and may even have negative consequences.

OpenAI's Orion, the company's next flagship model and the successor to GPT-4, is a case in point. Reportedly, Orion's performance gains over GPT-4 are modest, and in some areas, such as coding, it may not be an improvement at all.

Ilya Sutskever, co-founder of both Safe Superintelligence and OpenAI, has likewise said that the strategy of simply scaling up models to improve performance has hit its ceiling.

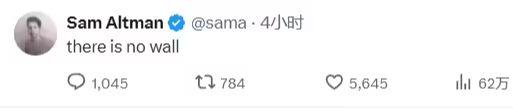

In response, OpenAI CEO Sam Altman posted on X that "there is no wall," implying that AI development faces no such bottleneck.

The report has sparked fears of an AI industry bubble. Cognitive scientist and AI skeptic Gary Marcus warned that once the industry recognizes these limitations, it could collapse entirely. He pointed out that the high valuations of AI companies such as OpenAI and Microsoft rest largely on the assumption that LLMs (large language models) will become AGI (artificial general intelligence) through continued development, an assumption he calls a fantasy.

If the industry doesn't find a way past these bottlenecks quickly, it could face a new "AI winter." Financial markets tend to be impatient, and once investors begin to doubt the profitability of the AI industry, the bubble could burst.