The strongest open-source Chinese-English bilingual model, Wudao·Aquila 34B, is here!

How strong is it? In a nutshell:

Its overall Chinese and English ability, logical reasoning, and more are far superior to Llama2-70B and all previous open-source models!

In reasoning, the dialogue model ranks second only to GPT4 on the IRD evaluation benchmark.

Not only is the model big and powerful, it also ships in one go with a complete "family bucket" of deluxe supporting tools.

The organization behind such a big move is a pioneer of China's open-source large-model camp: the Beijing Academy of Artificial Intelligence (BAAI), also known as Zhiyuan.

Looking at how Zhiyuan has open-sourced large models over the past few years, it is easy to see that it is leading a new trend:

As early as 2021, it made the world's largest corpus public. In 2022, it was the first to lay out the FlagOpen open-source technology system for large models, and it went on to launch star projects across the entire technology stack, such as the FlagEval evaluation system, the COIG dataset, and the BGE vector model.

This boldness comes from Zhiyuan's positioning as a non-commercial, non-profit, neutral research institution whose main focus is "sincere open-source co-creation."

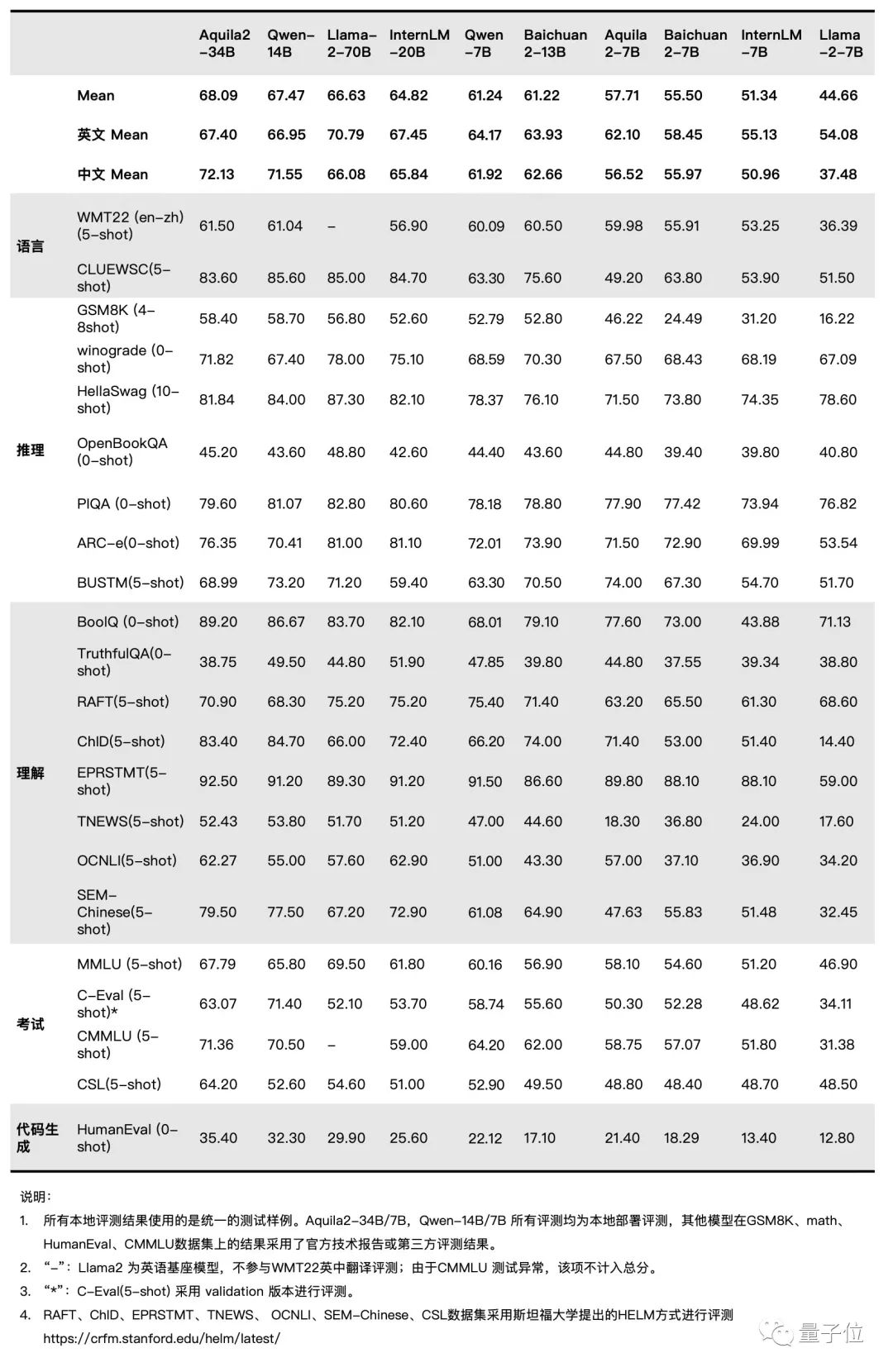

It is understood that the Aquila2-34B base model leads the comprehensive ranking of 22 evaluation benchmarks, including language, comprehension, reasoning, code, examinations and other evaluation dimensions.

Let’s take a look at this picture to get a feel for it:


As mentioned earlier, the Beijing Academy of Artificial Intelligence (Zhiyuan) has also carried open source through to the end, releasing a full range of open-source products in one go:

Comprehensive upgrade of the Aquila2 model series: Aquila2-34B/7B basic model, AquilaChat2-34B/7B conversation model, AquilaSQL "text-SQL language" model;

New version of the BGE semantic vector model: full coverage of the four major retrieval needs;

FlagScale efficient parallel training framework: Training throughput and GPU utilization lead the industry;

FlagAttention High-performance Attention operator set: Innovative support for long text training and Triton language.

Next, let’s continue to take a deeper look at this “strongest open source”.

01 Overview of the “strongest open source” capabilities

The Aquila2-34B mentioned above is one of the base models released this time under the "strongest open source" banner, alongside the smaller Aquila2-7B.

Their arrival has also brought considerable benefits to downstream models.

The most powerful open source dialogue model

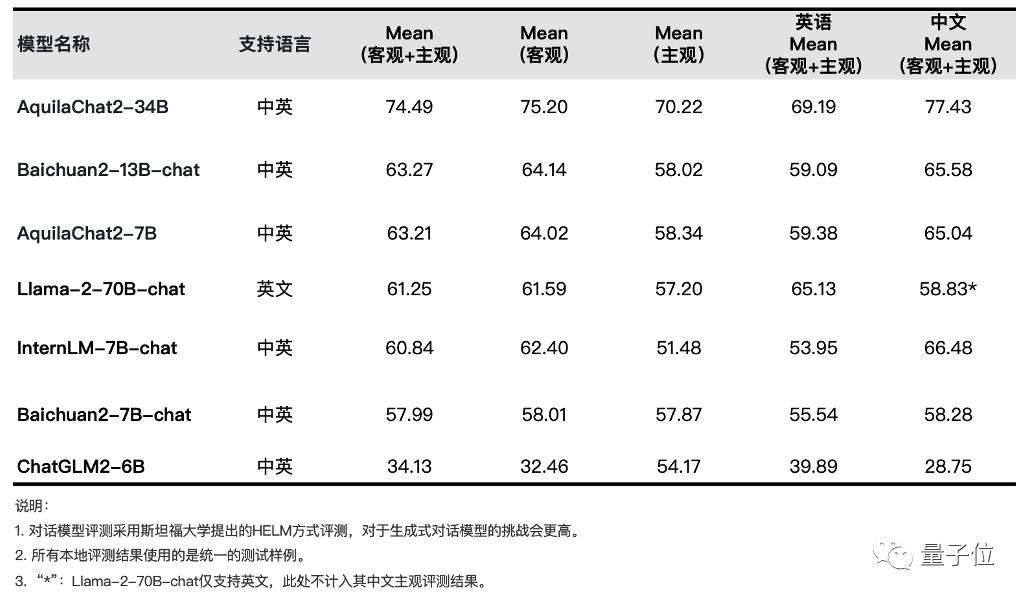

The AquilaChat2 dialogue model series was obtained through instruction fine-tuning:

AquilaChat2-34B: It is the most powerful open source Chinese-English bilingual dialogue model currently, and leads in all aspects of subjective + objective comprehensive evaluation;

AquilaChat2-7B: It also achieved the best overall performance among Chinese-English dialogue models of the same level.


Evaluation note: for generative dialogue models, the Zhiyuan team believes that models must be judged strictly on the answers they freely generate in response to the input question, because this is closest to how users actually use them. The evaluation therefore follows Stanford University's HELM [1], which places stricter demands on a model's in-context learning and instruction-following abilities. In the actual evaluation, some dialogue models' answers do not meet the instruction requirements and may be scored "0". For example, if the instruction requires the answer "A", a model that generates "B" or "the answer is A" is scored "0".

Other evaluation methods exist in the industry, such as having the dialogue model concatenate "question + answer", computing the probability of each concatenated text, and then checking whether the highest-probability answer matches the correct one. Under that method, the dialogue model generates no content and only computes option probabilities. Because it deviates greatly from real dialogue scenarios, it is not adopted for evaluating generative dialogue models.

[1] https://crfm.stanford.edu/helm/latest/
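To make the difference between the two scoring styles concrete, here is a minimal Python sketch. It is an illustration only, not HELM's or Zhiyuan's actual scoring code: the function names, the whitespace trimming, and the log-probability values are all assumptions made for this example.

```python
from typing import Dict


def strict_generation_score(generated: str, expected: str) -> int:
    """Score the freely generated text: 1 only on an exact match with the
    expected option. Trimming surrounding whitespace is an assumption here."""
    return 1 if generated.strip() == expected else 0


def option_probability_score(option_logprobs: Dict[str, float], expected: str) -> int:
    """Score by comparing option probabilities: the model generates nothing,
    and the option with the highest (hypothetical) log-probability wins."""
    best_option = max(option_logprobs, key=option_logprobs.get)
    return 1 if best_option == expected else 0


# Strict generation scoring: only the exact required answer earns a point.
print(strict_generation_score("A", "A"))                # exact match
print(strict_generation_score("B", "A"))                # wrong option
print(strict_generation_score("the answer is A", "A"))  # ignores the instruction

# Probability scoring: made-up log-probabilities for two options.
print(option_probability_score({"A": -0.2, "B": -1.7}, "A"))
```

Under the strict scheme, a model that knows the right option but wraps it in extra words still gets no credit, which is why this style is more demanding on instruction following.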

In addition, AquilaChat2-34B also performs very well in reasoning, which is very critical for large language models.

It places at the top of the IRD evaluation benchmark, surpassing models such as Llama2-70B and GPT3.5 and trailing only GPT4.


Judging from various achievements, whether it is the base model or the dialogue model, the Aquila2 series can be regarded as the strongest in the open source world.

Context window length up to 16K

For large language models, the ability to handle long text inputs and maintain contextual fluency during multiple rounds of conversations is the key to determining the quality of the user experience.

To tackle this long-standing pain point of large models, the Beijing Academy of Artificial Intelligence ran SFT on a dataset of 200,000 high-quality long-text conversations, extending the model's effective context window to 16K in one stroke.

And it is not only the length that has improved; quality has been optimized as well.

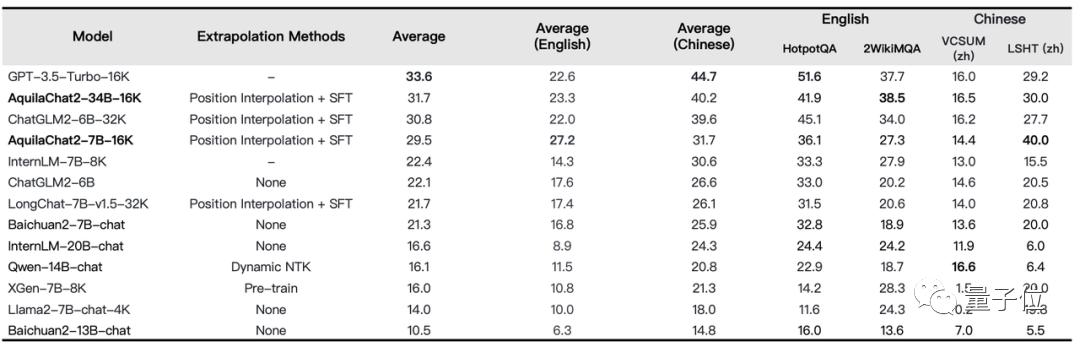

This is clearly visible, for example, in the results on four LongBench tasks covering Chinese and English long-text question answering and long-text summarization.

AquilaChat2-34B-16K is at the leading level of open source long text models and is close to the GPT-3.5 long text model.


In addition, the Zhiyuan team conducted a visual analysis of the attention distribution of multiple language models when processing ultra-long texts, and found that all language models have a fixed relative position bottleneck that is significantly smaller than the context window length.

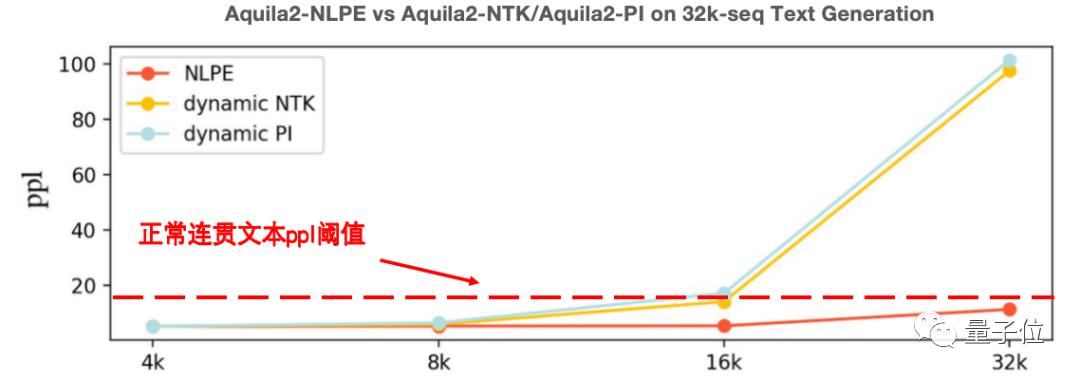

To this end, the Zhiyuan team innovatively proposed the NLPE (Non-Linearized Position Embedding) method. Based on the RoPE method, it improves the model's extension capability by adjusting the relative position encoding and constraining the maximum relative length.

Text continuation experiments in multiple domains, such as code, Chinese and English few-shot learning, and e-books, show that NLPE can extend the 4K Aquila2-34B model to a length of 32K, and that the continued text is much more coherent than with Dynamic-NTK, position interpolation, and other methods.


Moreover, the instruction following capability test on HotpotQA, 2WikiMultihopQA and other datasets with lengths of 5K to 15K showed that the accuracy of AquilaChat2-7B (2K) with NLPE extension was 17.2%, while the accuracy of AquilaChat2-7B with Dynamic-NTK extension was only 0.4%.


02 Handling all kinds of real application scenarios

Good benchmark results are only one criterion for judging a large model. More important is the bottom line: it has to be genuinely useful.

That comes down to the model's generalization ability, meaning it can readily handle problems it has never seen before.

To this end, the Wudao·Aquila team verified the generalization ability of the Aquila2 model in three real application scenarios.

Building a powerful agent in Minecraft

The game "Minecraft" is a good proving ground for AI technology.

It has infinitely generated complex worlds and a large number of open tasks, providing a rich interactive interface for intelligent agents.

Building on this, a team from Zhiyuan and Peking University proposed Plan4MC, a method for efficiently solving multiple Minecraft tasks without expert data.

Plan4MC can use reinforcement learning with intrinsic rewards to train the basic skills of the agent, allowing the agent to leverage the reasoning capabilities of the large language model AquilaChat2 for task planning.

For example, in the video below, the effect of an intelligent agent using AquilaChat2 to automatically complete multiple rounds of conversational interactions is demonstrated.

The game’s “current state of the environment” and “tasks to be completed” information is fed into the AquilaChat2 model, and AquilaChat2 feeds back decision-making information such as “what skill to use next” to the character, ultimately completing the task set in Minecraft of “cutting down trees and making a workbench nearby.”
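The interaction loop described above can be sketched roughly as follows. This is a toy illustration, not the Plan4MC code: the skill table, the prompt format, and `stub_planner` (a rule-based stand-in for the AquilaChat2 call) are all hypothetical names invented for this example.

```python
from typing import Callable, List, Set

# Hypothetical skill table: skill name -> (items required, item produced).
SKILLS = {
    "harvest_log": (set(), "log"),
    "craft_planks": ({"log"}, "planks"),
    "craft_crafting_table": ({"planks"}, "crafting_table"),
}


def build_prompt(inventory: Set[str], task: str) -> str:
    """Pack the current environment state and the task into one text prompt."""
    return f"Inventory: {sorted(inventory)}\nTask: {task}\nWhich skill should be used next?"


def stub_planner(prompt: str) -> str:
    """Rule-based stand-in for the language model: reads the state line of
    the prompt and answers with the name of the next skill."""
    state_line = prompt.splitlines()[0]
    if "planks" in state_line:
        return "craft_crafting_table"
    if "log" in state_line:
        return "craft_planks"
    return "harvest_log"


def run_episode(planner: Callable[[str], str], task: str,
                goal_item: str, max_steps: int = 10) -> List[str]:
    """Ask the planner for one skill per round until the goal item exists."""
    inventory: Set[str] = set()
    executed: List[str] = []
    for _ in range(max_steps):
        if goal_item in inventory:
            break
        skill = planner(build_prompt(inventory, task))
        required, produced = SKILLS[skill]
        if not required <= inventory:
            continue  # precondition not met: query the planner again
        inventory.add(produced)  # toy model: skills always succeed
        executed.append(skill)
    return executed


plan = run_episode(stub_planner, "cut down a tree and craft a workbench", "crafting_table")
print(plan)
```

In a real setup the planner callable would wrap a call to the dialogue model, and each skill would be a policy trained with reinforcement learning rather than a dictionary entry.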

Aquila2 + BGE2 linked to a vector database

Vector databases have become popular in the large-model community in recent years, but their capabilities are still somewhat limited when facing complex problems that require in-depth understanding.

To this end, Zhiyuan combined Aquila2 with its self-developed open-source semantic vector model BGE2, unlocking complex retrieval tasks that cannot be solved by retrieval methods based only on traditional vector databases.

For example, in the example below, we can clearly see that it becomes very smooth when handling tasks such as "retrieve papers by a certain author on a certain topic" and "generate summary texts of multiple papers on a topic".

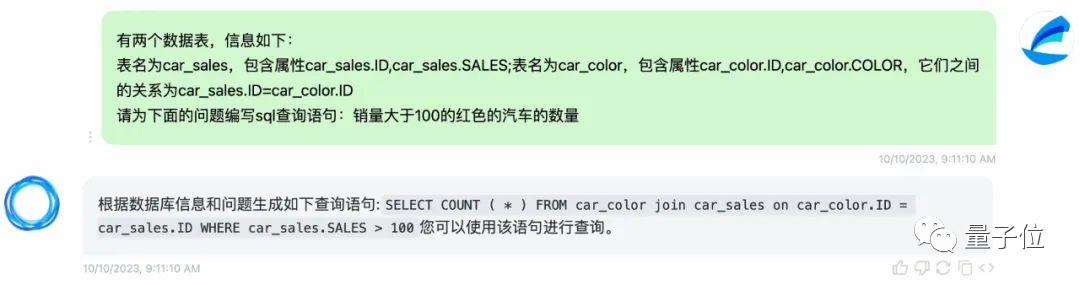
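As a rough illustration of why a request like "papers by a certain author on a certain topic" needs more than plain vector search, the sketch below combines a structured author filter with similarity ranking. It is not the Aquila2 + BGE2 pipeline: the corpus, the 3-dimensional vectors, and the `search` function are made up for this example, and the step where a language model turns the natural-language request into an author filter plus a topic query is assumed rather than shown.

```python
import math
from typing import List, Sequence

# Toy corpus of (title, author, embedding). The vectors are invented;
# a real system would compute them with an embedding model.
PAPERS = [
    ("Paper A", "Author X", [0.9, 0.1, 0.0]),
    ("Paper B", "Author X", [0.1, 0.9, 0.0]),
    ("Paper C", "Author Y", [0.8, 0.2, 0.1]),
]


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def search(author: str, topic_vec: Sequence[float], top_k: int = 1) -> List[str]:
    """Keep only the author's papers, then rank them by topic similarity."""
    scored = [
        (cosine(vec, topic_vec), title)
        for title, paper_author, vec in PAPERS
        if paper_author == author
    ]
    return [title for _, title in sorted(scored, reverse=True)[:top_k]]


# Assumed output of the language model step: an author plus a topic embedding.
print(search("Author X", [1.0, 0.0, 0.0]))
```

Similarity alone would also surface "Paper C" by a different author, which is the kind of mismatch the structured filter removes.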

Best "text-to-SQL" generation model

Many users find SQL a headache when handling tasks such as database queries.

Wouldn't it be great if we could just use the plain language we speak every day?

That convenience is now available: AquilaSQL.

In real application scenarios, users can also build on AquilaSQL: connect it to a local knowledge base to generate SQL for local queries, or further improve the model's data analysis so that it returns not only query results but also analysis conclusions, charts, and more.

For example, to handle the following complex query task, you only need to state it in natural language:

Filter cars whose sales volume is greater than 100 and whose color is red from two data tables containing car sales (car_sales) and car color (car_color).
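For readers who want to see what such a request looks like as SQL, here is a small, self-contained Python sketch using the standard `sqlite3` module. It is not AquilaSQL output: the article names only the two tables, so the column names (`car_id`, `model`, `sales`, `color`), the join key, and the sample rows are assumptions made for illustration.

```python
import sqlite3

# Hypothetical schema and sample data; only the table names come from the text.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE car_sales (car_id INTEGER PRIMARY KEY, model TEXT, sales INTEGER);
    CREATE TABLE car_color (car_id INTEGER, color TEXT);
    INSERT INTO car_sales VALUES (1, 'Alpha', 150), (2, 'Beta', 80), (3, 'Gamma', 300);
    INSERT INTO car_color VALUES (1, 'red'), (2, 'red'), (3, 'blue');
""")

# The kind of query a text-to-SQL model could produce for the request:
# join the two tables, then keep red cars with sales above 100.
QUERY = """
    SELECT s.model, s.sales
    FROM car_sales AS s
    JOIN car_color AS c ON c.car_id = s.car_id
    WHERE s.sales > 100 AND c.color = 'red'
    ORDER BY s.model
"""

rows = conn.execute(QUERY).fetchall()
print(rows)
```

With this sample data, only the car that is both red and above the sales threshold survives the filter; the red car with low sales and the high-selling blue car are both excluded.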

And AquilaSQL's "performance" is also very impressive.

After continued pre-training on an SQL corpus and two-stage SFT, it surpassed the SOTA model on CSpider, the text-to-SQL generation leaderboard, with an accuracy of 67.3%.

The accuracy of the GPT4 model without SQL corpus fine-tuning is only 30.8%.

03 A whole family of open-source releases

As mentioned earlier, Zhiyuan has always been committed to open source.

With this large-model upgrade, Zhiyuan has also unreservedly open-sourced a series of star projects covering algorithms, data, tools, and evaluation.

It is understood that the Aquila2 series models are fully released under a commercial license agreement, allowing the public to use them widely in both academic research and commercial applications.

Next, let's take a quick look at these open source packages.

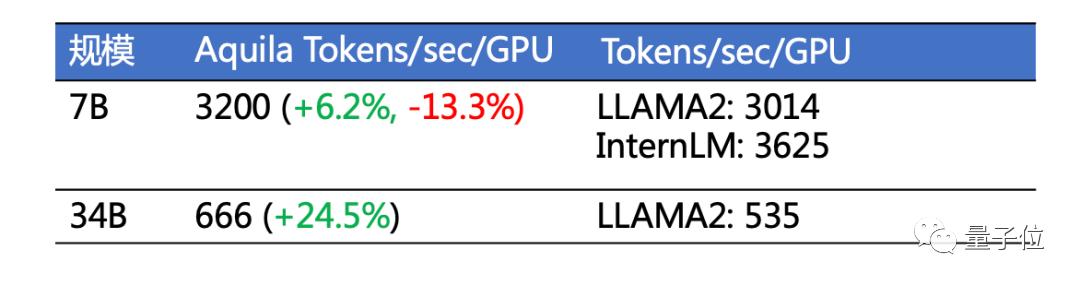

Efficient parallel training framework FlagScale

FlagScale is an efficient parallel training framework used by Aquila2-34B, which can provide one-stop training functions for large language models.

The Zhiyuan team shared the training configuration, optimization scheme and hyperparameters of the Aquila2 model with large model developers through the FlagScale project, marking the first time in China that the training code and hyperparameters were fully open sourced.

FlagScale extends Megatron-LM with a series of enhancements, including re-sharding of distributed optimizer state, precise localization of problematic training data, and conversion of parameters to Hugging Face format.

After actual testing, Aquila2 training throughput and GPU utilization have reached industry-leading levels.


It is understood that FlagScale will stay in sync with the latest code of the upstream Megatron-LM project, add more customized features, integrate the latest distributed training and inference techniques and mainstream large models, and support heterogeneous AI hardware. The goal is a general, convenient, and efficient distributed training and inference framework for large models that can serve training tasks of different scales and needs.

FlagAttention high-performance Attention open source operator set

FlagAttention is the first high-performance Attention open source operator set developed in the Triton language that supports long text large model training. It expands the Memory Efficient Attention operator of the Flash Attention series to meet the needs of large model training.

The piecewise attention operator, PiecewiseAttention, has been implemented.

PiecewiseAttention mainly addresses the extrapolation problem of Transformer models that use rotary position embedding (RoFormer). Its characteristics can be summarized as follows:

Universality: It is universal for models that use piecewise calculation of Attention and can be easily migrated to large language models other than Aquila.

Ease of use: FlagAttention is implemented in the Triton language and provides a PyTorch interface. Building and installing it is more convenient than for Flash Attention, which is developed in CUDA C.

Scalability: Also thanks to the Triton language, the modification and extension threshold of the FlagAttention algorithm itself is relatively low, and developers can easily develop more new functions based on it.

In the future, the FlagAttention project will continue to target large-model research needs, support Attention operators with other functional extensions, further optimize operator performance, and adapt to more heterogeneous AI hardware.

BGE2 Next-generation semantic vector model

The new generation of BGE semantic vector model will also be open sourced simultaneously with Aquila2.

The BGE-LLM Embedder model in BGE2 integrates four major capabilities: "knowledge retrieval", "memory retrieval", "example retrieval", and "tool retrieval".

For the first time, it achieves comprehensive coverage of the main retrieval requirements of a large language model by a single semantic vector model.

Combined with specific usage scenarios, BGE-LLM Embedder will significantly improve the performance of large language models in important areas such as processing knowledge-intensive tasks, long-term memory, instruction following, and tool use.

…

So are you attracted by such a thorough "strongest open source"?

04 One More Thing

Aquila2 model open-source addresses:

https://github.com/FlagAI-Open/Aquila2

https://model.baai.ac.cn/

https://huggingface.co/BAAI

AquilaSQL open source repository address: https://github.com/FlagAI-Open/FlagAI/tree/master/examples/Aquila/Aquila-sql

FlagAttention open source code repository: https://github.com/FlagOpen/FlagAttention

BGE2 open-source addresses:

paper: https://arxiv.org/pdf/2310.07554.pdf

model: https://huggingface.co/BAAI/llm-embedder

repo: https://github.com/FlagOpen/FlagEmbedding/tree/master/FlagEmbedding/llm_embedder

Llama 2 throughput estimation formula: total tokens / (total GPU hours × 3600). According to the paper "Llama 2: Open Foundation and Fine-Tuned Chat Models": 1) for 7B, total tokens are 2.0T and total GPU hours are 184,320, which gives 3014 tokens/sec/GPU; 2) for 34B, total tokens are 2.0T and total GPU hours are 1,038,336, which gives 535 tokens/sec/GPU.
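The arithmetic in this note can be checked with a few lines of Python. The function name is our own; the token counts and GPU hours are the ones quoted above.

```python
def tokens_per_second_per_gpu(total_tokens: float, total_gpu_hours: float) -> float:
    """Estimated throughput: total tokens / (total GPU hours * 3600 seconds)."""
    return total_tokens / (total_gpu_hours * 3600)


llama2_7b = tokens_per_second_per_gpu(2.0e12, 184_320)
llama2_34b = tokens_per_second_per_gpu(2.0e12, 1_038_336)

# Truncate to whole tokens, matching the figures quoted in the note.
print(int(llama2_7b), int(llama2_34b))
```

Both results land within a fraction of a token of the quoted 3014 and 535 tokens/sec/GPU.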