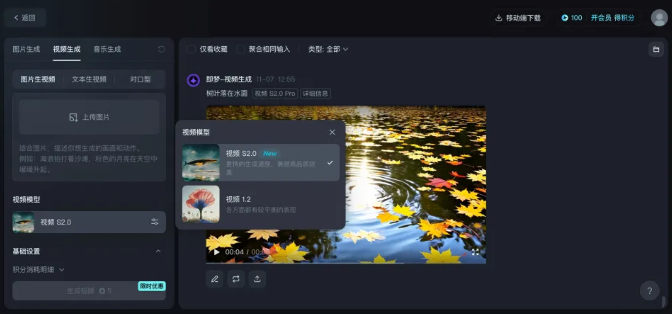

Recently, Dream AI announced that, effective immediately, Seaweed, ByteDance's in-house video generation model, is officially open to platform users. After logging in, users can try it by selecting "Video S2.0" as the video model under the "Video Generation" function.

The Seaweed video generation model is part of the Doubao ("beanbag") model family. It offers professional-grade lighting, composition, and color harmony, producing visuals that are both attractive and realistic. Built on the DiT architecture, the model can also render large-scale motion smoothly and naturally.

Tests show that the model takes only 60 seconds to generate a high-quality 5-second AI video, well ahead of the 3 to 5 minutes that represents the current leading level in the domestic industry.

Dream AI officials revealed that the Pro versions of the Seaweed and Pixeldance video generation models will also be opened for use in the near future. The Pro models can produce natural, coherent multi-shot actions and complex interactions among multiple subjects. They also address the consistency problem in multi-shot switching, keeping the subject, style, and atmosphere consistent across shot changes, and they support the aspect ratios of a range of devices, including movie screens, TVs, computers, and mobile phones. This lets them better serve professional creators and artists in design, film and television, animation, and other content scenarios, helping bring imagination and stories to life.

The person in charge of the platform said that AI can interact deeply with creators and create alongside them, bringing many surprises and much inspiration. By opening up the Doubao video generation model and continuously upgrading the platform's AI capabilities, Dream AI hopes to become the user's most intimate and intelligent creative partner.