Nov. 9 (Bloomberg) -- Lilian Weng, another of OpenAI's lead safety researchers, announced Friday that she is leaving the startup. Weng had served as vice president of research and safety since August, and before that as head of OpenAI's Safety Systems team.

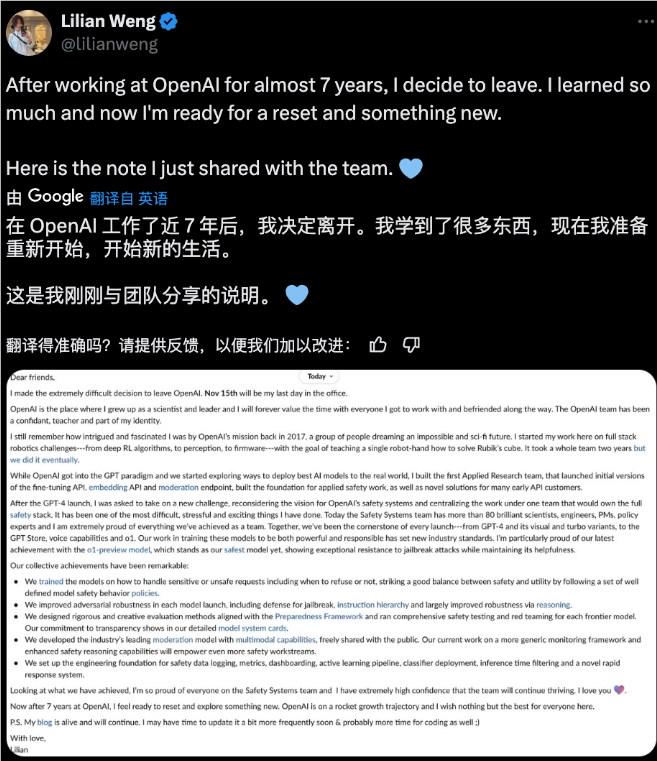

In a post on X, Ms. Weng said, "After 7 years at OpenAI, I feel ready to reboot and explore something new."

Ms. Weng said her last day would be Nov. 15, but did not specify where she would go next.

"I made the extremely difficult decision to leave OpenAI," Weng said in the post. "Looking at what we've accomplished, I'm proud of everyone on the Safety Systems team, and I'm very confident that the team will continue to thrive."

Weng's departure is the latest in a series of exits by AI safety researchers, policy researchers and other executives from OpenAI over the last year, several of whom have accused the company of prioritizing commercial products over AI safety.

Weng follows Ilya Sutskever and Jan Leike, the leaders of OpenAI's now-defunct Superalignment team, which sought to develop ways to control superintelligent AI systems. Both also left the startup this year to pursue AI safety work elsewhere.

According to Weng's LinkedIn profile, she first joined OpenAI in 2018 and worked on the startup's robotics team, which ultimately built a robotic hand that could solve a Rubik's Cube, a task that took two years to complete, according to her post.

As OpenAI began to focus more on the GPT paradigm, so did Weng. In 2021, she moved on to help build the startup's applied AI research team. In 2023, after the release of GPT-4, Weng was tasked with creating a dedicated team to build safety systems for the startup. Today, OpenAI's Safety Systems unit employs more than 80 scientists, researchers, and policy experts, according to Weng's post.

Many AI safety researchers have raised concerns about OpenAI's commitment to safety as it tries to build increasingly powerful AI systems. Miles Brundage, a longtime policy researcher, left the startup in October and announced that OpenAI was disbanding its AGI readiness team, which he had advised.

On the same day, the New York Times reported on former OpenAI researcher Suchir Balaji, who said he left OpenAI because he felt the startup's technology was doing more harm than good to society.

OpenAI told TechCrunch that its executives and safety researchers are working on a transition to replace Weng.

"We are grateful for Lilian's contributions to groundbreaking safety research and the establishment of rigorous technical safeguards," an OpenAI spokesperson said in an emailed statement. "We are confident that the Safety Systems team will continue to play a critical role in ensuring that our systems are safe and secure for the hundreds of millions of people around the world."

Other executives who have left OpenAI in recent months include CTO Mira Murati, chief research officer Bob McGrew, and VP of research Barret Zoph. In August, prominent researcher Andrej Karpathy and co-founder John Schulman also announced they would be leaving the startup. Some of these departing researchers, including Leike and Schulman, left to join OpenAI competitor Anthropic, while others went on to start their own ventures.