Meta has issued a press release on the progress of its FAIR (Fundamental AI Research) team's research into tactile perception for robots. The research aims to let robots better understand and manipulate external objects through touch.

Meta said the key to building capable AI robots is enabling the robot's sensors to perceive and understand the physical world, while an "AI brain" precisely controls how the robot responds to it. The team is currently developing tactile sensing capabilities that let a robot detect the material and tactile properties of the objects it interacts with, so that the AI can determine how the robot should handle them (e.g., picking up an egg).

According to materials published by Meta, the company has announced three projects: Meta Sparsh, Digit 360, and Meta Digit Plexus. Meta Sparsh is an AI-based tactile encoder that relies on self-supervised learning to achieve tactile perception across different scenarios: after learning the "sense of touch" of one object, the robot's AI brain can flexibly "perceive" the properties of related objects in a variety of settings.

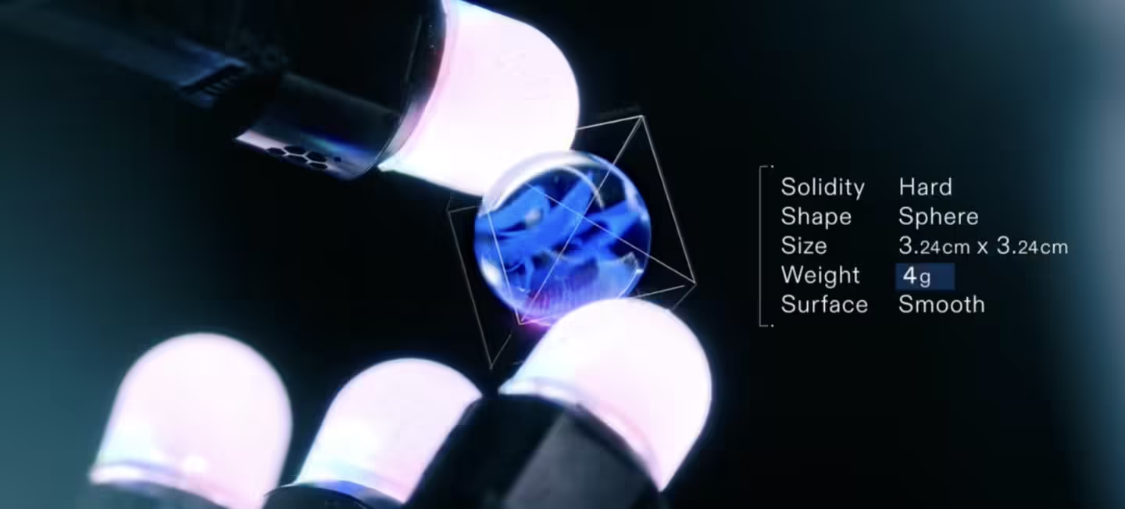

Digit 360 is a high-precision tactile sensor designed to be mounted mainly on a robot's fingers. Meta says it offers multimodal sensing that captures subtle tactile changes, mimicking the human sense of touch and supporting several sensing modalities such as vibration and temperature.

Meta Digit Plexus is an open platform that, according to Meta, can integrate a wide range of sensors. This lets robots sense their surroundings more comprehensively and respond in real time by connecting those sensors to the AI brain through a unified standard.

In addition, Meta has developed the PARTNR benchmark, a framework for evaluating human-robot collaboration that tests how well robotic AI systems plan and reason in real-life scenarios. The benchmark includes 100,000 natural-language tasks that simulate a variety of household scenarios, helping developers test a robot's ability to understand and carry out natural-language instructions.

Meta has now made these technologies and data available to researchers, including papers, open-source code, and models, to encourage the wider research community to take part in tactile sensing research.