Cohere recently announced two new open-weight AI models from its Aya project, which aims to close the language gap in foundation models. The two models, Aya Expanse 8B and Aya Expanse 32B, are now available on Hugging Face. According to Cohere, they deliver significant performance improvements across 23 languages.

In a blog post, Cohere said that the 8B-parameter model makes breakthroughs more accessible to researchers around the world, while the 32B-parameter model provides industry-leading multilingual capabilities.

The goal of the Aya project is to extend access to foundation models to more languages beyond English. Cohere's research arm, Cohere for AI, launched the Aya initiative last year, and in February it released Aya 101, a large language model (LLM) that covers 101 languages. Cohere has also released the Aya dataset to help train models on other languages.

Aya Expanse follows many of the core methods used to build Aya 101. Cohere says the improvements in Aya Expanse come from years of rethinking the core building blocks of machine learning breakthroughs. Its research has focused on closing the language gap, with key advances in data arbitrage, preference training for general performance and safety, and model merging.

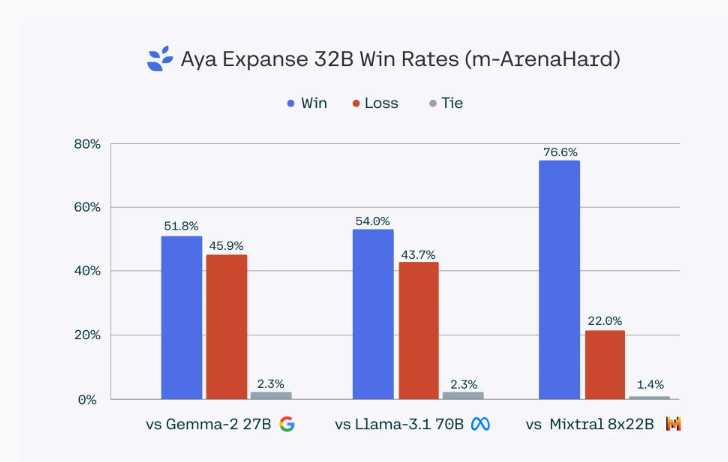

Cohere said that in several benchmark tests, the two Aya Expanse models outperformed similarly sized AI models from companies such as Google, Mistral, and Meta.

Aya Expanse 32B outperformed Gemma 2 27B, Mixtral 8x22B, and even the much larger Llama 3.1 70B on multilingual benchmarks, while the smaller 8B model likewise outperformed Gemma 2 9B, Llama 3.1 8B, and Ministral 8B. Win rates ranged from 60.4% to 70.6%.

To avoid generating gibberish, Cohere uses a data sampling method called data arbitrage. Cohere says this approach trains models more effectively, especially for low-resource languages. Cohere also focuses on steering the models toward "global preferences" that account for the perspectives of different cultures and languages, which in turn improves the models' performance and safety.
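
For readers curious what a sampling scheme of this kind might look like, here is a minimal Python sketch. It is an illustration under stated assumptions, not Cohere's implementation: it assumes that "data arbitrage" means collecting candidate completions from several teacher models and keeping the one a quality scorer rates highest for each prompt. The names `arbitrage_sample`, `toy_teacher_a`, `toy_teacher_b`, and `toy_score` are all hypothetical, and the scorer is a crude stand-in for whatever quality signal a real pipeline would use.

```python
from typing import Callable, Dict, List

Teacher = Callable[[str], str]        # prompt -> completion
Scorer = Callable[[str, str], float]  # (prompt, completion) -> quality score


def arbitrage_sample(prompts: List[str],
                     teachers: Dict[str, Teacher],
                     score: Scorer) -> List[dict]:
    """For each prompt, keep the completion from the best-scoring teacher."""
    selected = []
    for prompt in prompts:
        # Ask every teacher in the pool for a candidate completion.
        candidates = {name: teacher(prompt) for name, teacher in teachers.items()}
        # Pick the teacher whose completion the scorer rates highest.
        best = max(candidates, key=lambda name: score(prompt, candidates[name]))
        selected.append({"prompt": prompt,
                         "teacher": best,
                         "completion": candidates[best]})
    return selected


# Hypothetical stand-ins for real models (assumptions for illustration only).
def toy_teacher_a(prompt: str) -> str:
    return "zz## @@ 123"  # imitates a low-quality, gibberish-like output


def toy_teacher_b(prompt: str) -> str:
    return "Answer to: " + prompt


def toy_score(prompt: str, completion: str) -> float:
    """Toy quality proxy: fraction of characters that are letters or spaces."""
    if not completion:
        return 0.0
    good = sum(ch.isalpha() or ch.isspace() for ch in completion)
    return good / len(completion)


if __name__ == "__main__":
    pool = {"teacher_a": toy_teacher_a, "teacher_b": toy_teacher_b}
    for row in arbitrage_sample(["Habari yako?", "Nasılsın?"], pool, toy_score):
        print(row["teacher"], "->", row["completion"])
```

In this toy setup the gibberish-like candidate always loses, so only the cleaner completions would reach the training set. A real system would presumably swap in actual models and a much stronger scorer.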

Cohere's Aya initiative seeks to ensure that research on LLMs also serves languages other than English. Although many LLMs are eventually released in other languages, finding enough data to train them is often difficult, especially for low-resource languages. That makes Cohere's efforts particularly important in helping to build multilingual AI models.

Official blog: https://cohere.com/blog/aya-expanse-connecting-our-world