Midjourney and Stable Diffusion are both popular AI image generation tools that produce high-quality images from text descriptions. Both are text-to-image models built on deep learning, but each is based on a different underlying large model.

A more recently released model, however, is more powerful than both: it generates more realistic images, with details that are more true to life. That model is FLUX!

What is Flux?

Flux AI is one of the newest text-to-image models, from Black Forest Labs, a team of original Stable Diffusion developers who left to build a new large model for AI image generation. Flux AI models are known for their superior visual quality, precise prompt adherence, diverse styles, and ability to generate complex scenes. Flux comes in three versions, FLUX.1 [pro], FLUX.1 [dev] and FLUX.1 [schnell], each designed for different scenarios and needs.

FLUX.1 Pro

A closed-source model designed for commercial use, offering state-of-the-art image generation performance.

FLUX.1 Dev

An open-weight, guidance-distilled model for non-commercial applications.

FLUX.1 Schnell

The fastest version, designed for local development and personal use.

Flux AI models use a hybrid architecture of multimodal and parallel diffusion transformer blocks, scaled up to 12 billion parameters. They are trained with flow matching, a general and conceptually simple method for training generative models that includes diffusion as a special case.
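Flow matching is easiest to see in a toy setting. The sketch below assumes the rectified-flow convention (data at t = 0, noise at t = 1, a straight line between them), which matches how the FLUX.1 model cards describe the models ("rectified flow transformer"). The one-point "dataset" and its closed-form velocity field are illustrative assumptions standing in for the learned 12B network.

```python
import random


def interpolate(x0: float, noise: float, t: float) -> float:
    """Rectified-flow forward path: a straight line from data (t=0) to noise (t=1)."""
    return (1.0 - t) * x0 + t * noise


def target_velocity(x0: float, noise: float) -> float:
    """Training target: d x_t / d t along the straight path, constant in t."""
    return noise - x0


def ideal_velocity(x_t: float, t: float, data_point: float) -> float:
    """Toy assumption: the whole dataset is a single point `data_point`.
    From x_t = (1 - t) * a + t * eps, the velocity eps - a equals (x_t - a) / t."""
    return (x_t - data_point) / t


def sample_euler(noise: float, data_point: float, steps: int) -> float:
    """Integrate dx/dt = v from t=1 (pure noise) down to t=0 (data) with Euler steps."""
    x, t = noise, 1.0
    dt = 1.0 / steps
    for _ in range(steps):
        x = x - dt * ideal_velocity(x, t, data_point)
        t = t - dt
    return x


random.seed(0)
eps = random.gauss(0.0, 1.0)
print(sample_euler(eps, 2.0, steps=4))  # lands on the data point up to rounding error
```

Because every path in this toy is exactly straight, each Euler step stays on the line and 4 steps recover the data point. Real learned velocity fields are only approximately straight; FLUX.1 [schnell]'s 4-step sampling additionally relies on distillation, so this toy shows the training target, not how schnell was made fast.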

Official website: https://blackforestlabs.ai/

Here is a comparison of the Flux, Midjourney and Stable Diffusion models:

Image quality

Flux: Excels on its own, without additional plug-ins, at generating high-resolution, detail-rich images, especially of complex scenes and human anatomy.

Midjourney: Known for its artistic style and high-quality output, with particular strength in artistry and stylistic diversity.

Stable Diffusion: Generates realistic images, suiting projects that require realistic output.

Speed and efficiency

Flux: Provides fast image generation, especially the schnell variant, which suits workflows that need rapid prototyping and iterative design. In most cases, no additional style models need to be downloaded to produce images in a variety of styles.

Midjourney: No explicit speed figures are given; as a commercial service it runs on cloud servers, so there may be a queue.

Stable Diffusion: Slower generation, but provides more control in the image optimization process.

Handling complex scenes

Flux: Excels at complex compositions, thanks to its advanced architecture. It is especially strong with text: given a sufficiently precise prompt, Flux can render text directly in the image and produce poster-quality designs.

Midjourney: Capable of handling complex scenes, but in some cases more iterations may be needed to achieve the desired result.

Stable Diffusion: There may be some limitations in handling complex scenes.

Human Anatomy Rendering

Flux: Excellent at rendering human anatomy, especially hands, which are reproduced more completely.

Midjourney: No specific claims, but it usually produces artistic images of people, which makes an accurate depiction hard to specify.

Stable Diffusion: May have difficulty depicting human features accurately. Additional plug-ins or post-processing are needed to correct this, with results ranging from barely acceptable to largely successful.

Flexibility and integration

Flux: Offers several variants for different usage scenarios and needs, including open-source and specialized models.

Midjourney: Being a commercial tool, there may be some limitations in terms of customization.

Stable Diffusion: An open-source, community-driven model offering a wealth of customization and integration options.

Open Source and Business Models

Flux: Provides open-source models to encourage community involvement and innovation.

Midjourney: A commercial model providing professional image generation services.

Stable Diffusion: An open-source model with active community support and continuous improvement.

Application scenarios

Flux: Ideal for projects that require high detail and accurate representation of complex scenes.

Midjourney: Suitable for artistic creation and design, especially in areas requiring artistic style and creative expression.

Stable Diffusion: Suited to realistic output where control over the final image is critical.

To summarize, Flux offers:

(1) More detail and better overall image quality.

(2) More complete and accurate rendering of text in images.

(3) Characters' hands that are complete and realistic, and rarely go wrong.

(4) Many built-in styles, without relying on additional models.

(5) No need for negative prompts: positive prompts alone produce accurate output.

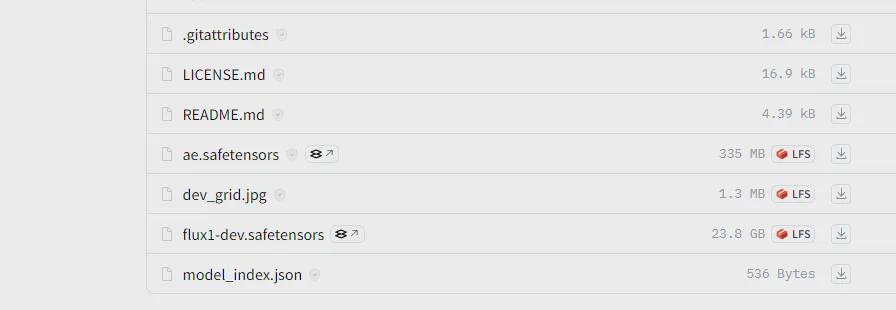

As the comparison above shows, Flux is stronger largely because it has more parameters than the other two. The largest Stable Diffusion 3 model has 8B parameters (about 8 billion), while FLUX.1 starts at 12B, a full 12 billion, or 1.5 times as many. A single model file is about 23 GB, which gives a sense of just how powerful Flux is.

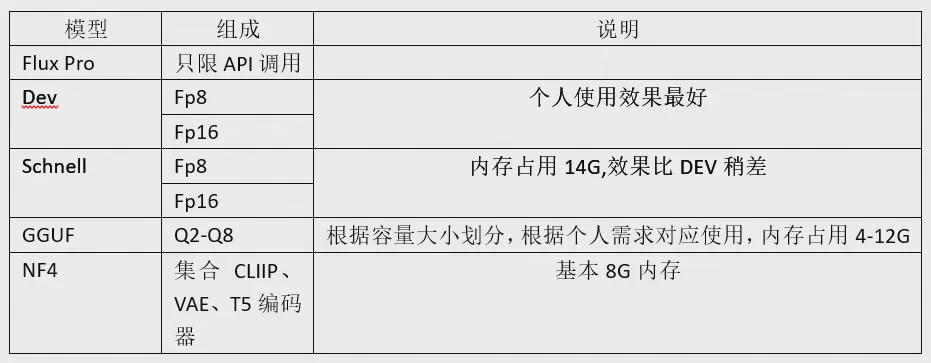
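The file size follows from simple arithmetic: parameter count times bytes per parameter. The sketch below assumes raw weights only (it ignores quantization scale overhead, the text encoders, the VAE and runtime activations), so treat its numbers as rough estimates.

```python
# Approximate bytes per parameter for common storage formats (assumption: raw weights only).
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16/bf16": 2.0,
    "fp8": 1.0,
    "4-bit": 0.5,
}

FLUX_PARAMS = 12e9  # from the article: FLUX.1 has 12 billion parameters
SD3_PARAMS = 8e9    # from the article: the largest SD3 model has 8 billion


def weights_gb(num_params: float, fmt: str) -> float:
    """Size of the raw weights in decimal gigabytes (1 GB = 10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


def gb_to_gib(gb: float) -> float:
    """Convert decimal GB to binary GiB (the unit Windows Explorer labels 'GB')."""
    return gb * 1e9 / 2**30


print(FLUX_PARAMS / SD3_PARAMS)  # ratio of parameter counts
for fmt in BYTES_PER_PARAM:
    gb = weights_gb(FLUX_PARAMS, fmt)
    print(f"{fmt:>10}: {gb:6.1f} GB ({gb_to_gib(gb):.1f} GiB)")
```

At 16-bit precision, 12B parameters come to 24 GB (about 22.4 GiB), in line with the roughly 23 GB file; halving the bytes per parameter (fp8) halves the size, which is why the fp8 variants are popular on smaller machines.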

Differences between the Flux models: there are three main models, as follows:

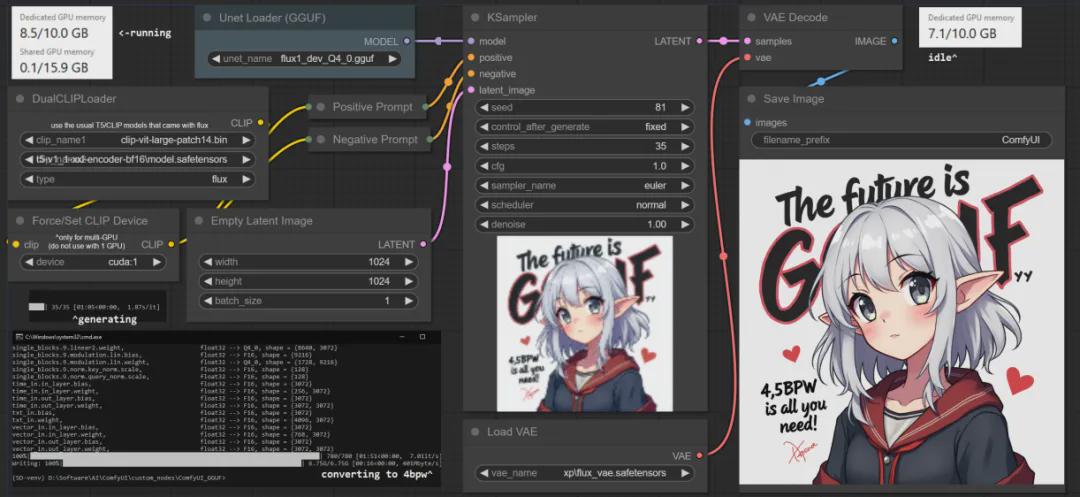

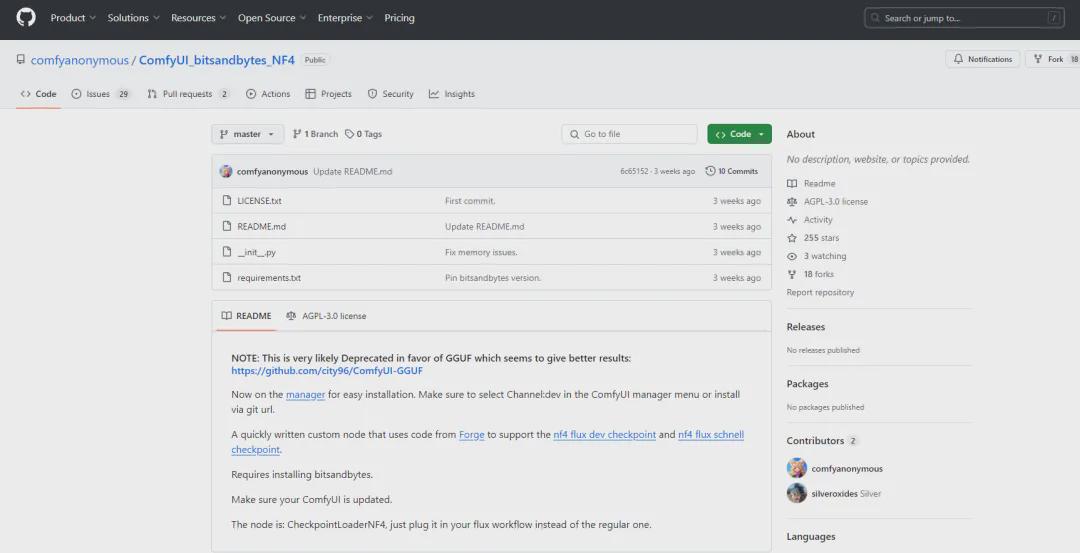

*Note: To use GGUF or NF4 models, additional plug-ins must be installed:

GGUF node: https://github.com/city96/ComfyUI-GGUF

NF4 node: https://github.com/comfyanonymous/ComfyUI_bitsandbytes_NF4
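GGUF and NF4 models shrink Flux by storing each weight in fewer bits, with a shared scale per small block of weights. The sketch below mimics the idea behind GGUF's 8-bit Q8_0 format (blocks of 32 weights, scale = max|w| / 127). It is a simplified pure-Python illustration, not the file layout the ComfyUI-GGUF node reads: the real format stores the scale as fp16, and NF4 uses a different, non-uniform 4-bit codebook.

```python
from typing import List, Tuple

BLOCK_SIZE = 32  # weights per block (assumption mirroring ggml's Q8_0)


def quantize_blocks(weights: List[float], block_size: int = BLOCK_SIZE) -> List[Tuple[float, List[int]]]:
    """Split weights into blocks; store one float scale and int8-range values per block."""
    blocks = []
    for start in range(0, len(weights), block_size):
        block = weights[start:start + block_size]
        amax = max(abs(w) for w in block)
        scale = amax / 127.0
        inv = 1.0 / scale if scale > 0 else 0.0
        # Python's round() rounds half to even; ggml rounds half away from zero.
        blocks.append((scale, [round(w * inv) for w in block]))
    return blocks


def dequantize_blocks(blocks: List[Tuple[float, List[int]]]) -> List[float]:
    """Reconstruct approximate weights as scale * integer value."""
    out: List[float] = []
    for scale, values in blocks:
        out.extend(scale * v for v in values)
    return out


def bits_per_weight(block_size: int = BLOCK_SIZE, scale_bits: int = 16, value_bits: int = 8) -> float:
    """Storage cost per weight including the amortized per-block scale."""
    return value_bits + scale_bits / block_size
```

With a 16-bit scale shared by 32 weights, the cost is 8.5 bits per weight instead of 16, at the price of a reconstruction error of at most half a scale step per weight.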

How do I deploy and install Flux?

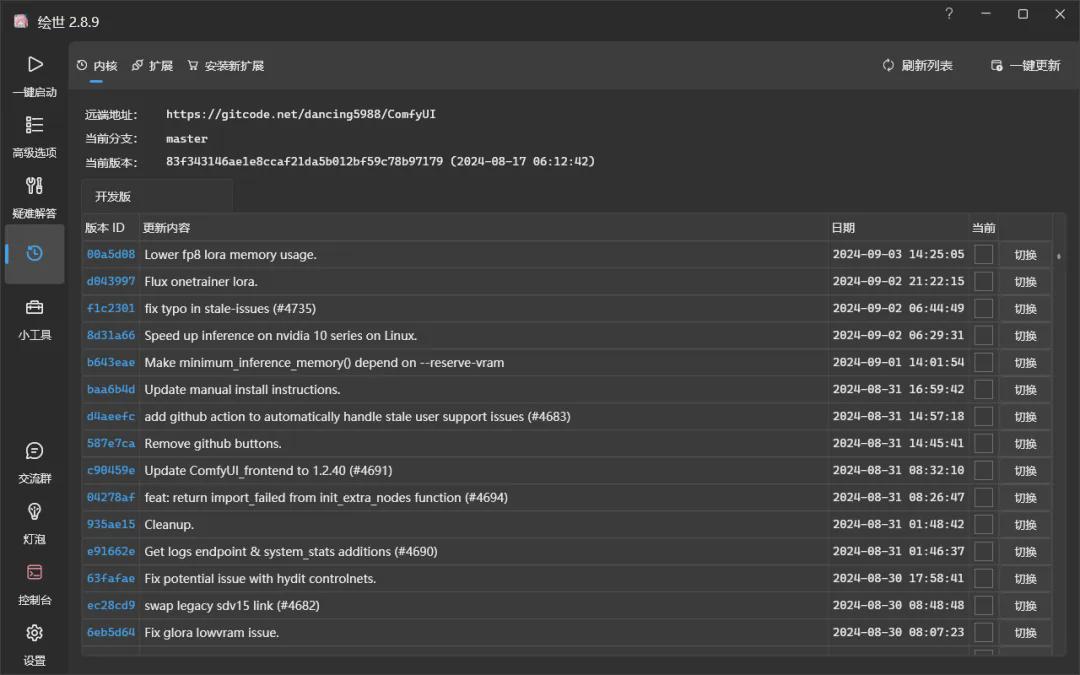

1. Make sure ComfyUI is up to date. (This article uses Akiba's launcher.)

In the launcher, open the Version page, select Kernel and Extensions at the top, and click the one-click update button in the upper-right corner to update to the latest version. Flux was released at the beginning of August 2024, so make sure ComfyUI is updated to a version from after August 1.

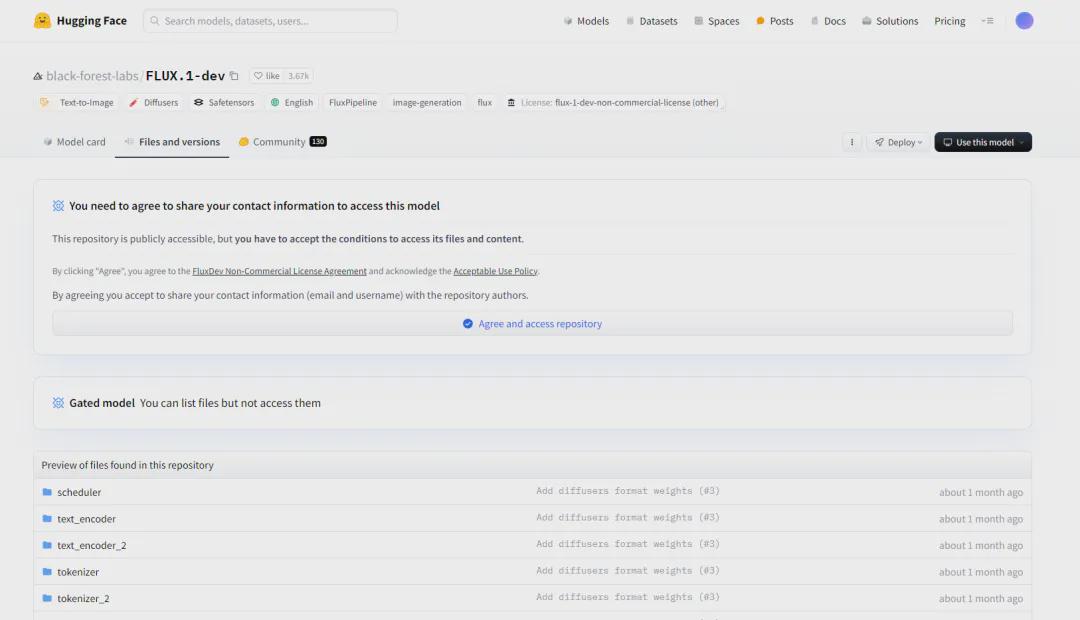

2. Go to the official Hugging Face page: https://huggingface.co/black-forest-labs/FLUX.1-dev

Download ae.safetensors (the VAE) and flux1-dev.safetensors (the UNet).

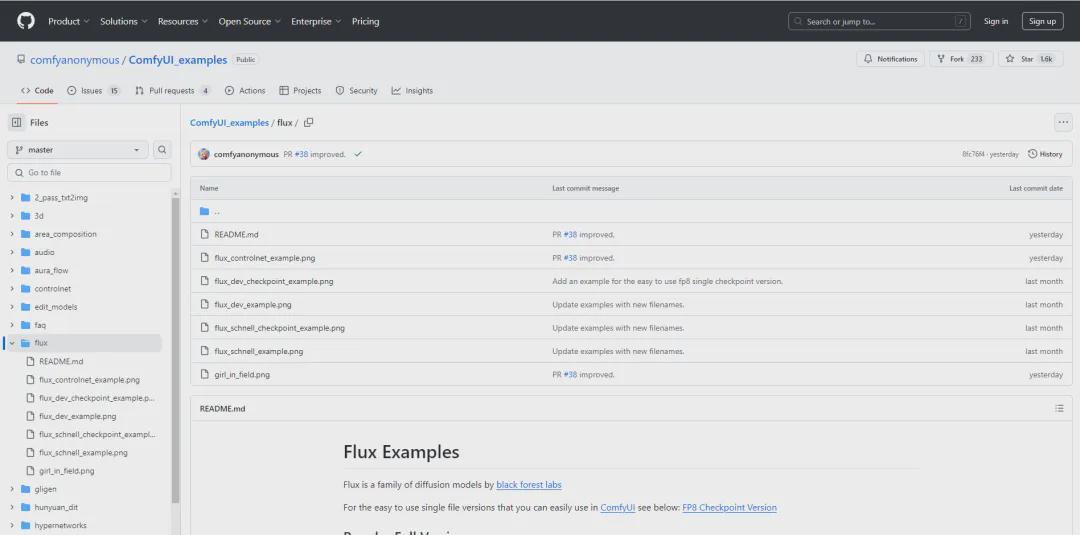

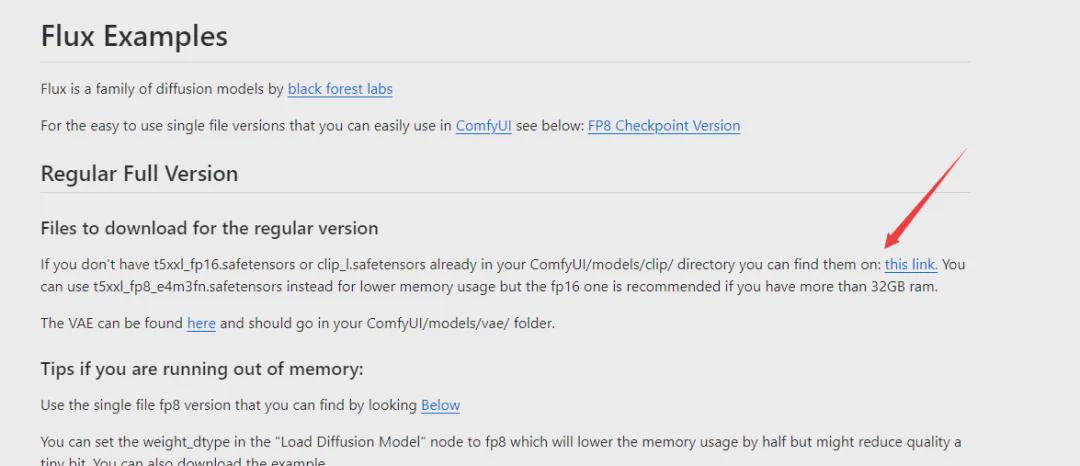

3. Download the CLIP (text encoder) files: go to ComfyUI's examples on GitHub and find the Flux page: https://github.com/comfyanonymous/ComfyUI_examples/tree/master/flux

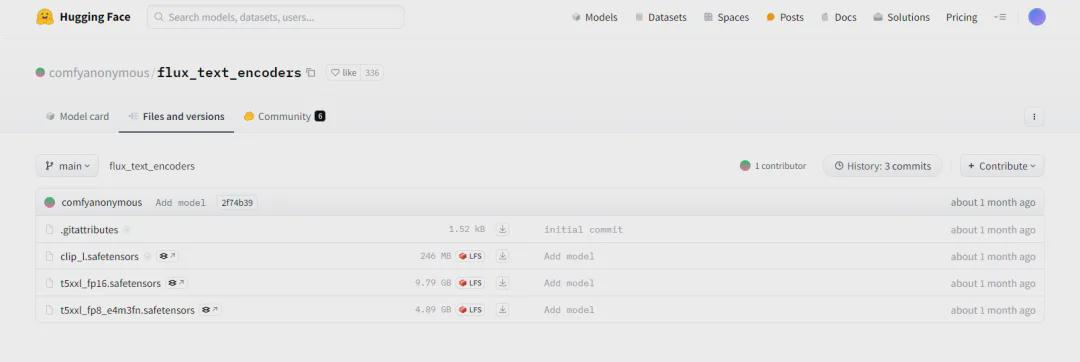

Follow the link from that page and download clip_l.safetensors, t5xxl_fp16.safetensors and t5xxl_fp8_e4m3fn.safetensors (the fp8 T5 file is a smaller alternative to the fp16 one for machines with less memory).

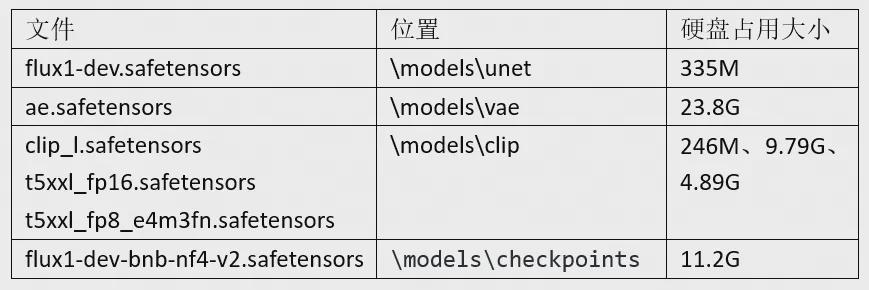
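If you prefer to script the downloads, Hugging Face serves raw files at `https://huggingface.co/<repo>/resolve/<revision>/<file>`. The sketch below only builds those URLs. The repository hosting the text encoders (`comfyanonymous/flux_text_encoders`) is an assumption based on where the ComfyUI Flux example page links, and FLUX.1-dev is a gated repository, so you must accept its license and log in before the downloads themselves will succeed.

```python
from urllib.parse import quote

# Hypothetical manifest: file name -> Hugging Face repository hosting it (assumption).
FILES = {
    "flux1-dev.safetensors": "black-forest-labs/FLUX.1-dev",
    "ae.safetensors": "black-forest-labs/FLUX.1-dev",
    "clip_l.safetensors": "comfyanonymous/flux_text_encoders",
    "t5xxl_fp16.safetensors": "comfyanonymous/flux_text_encoders",
    "t5xxl_fp8_e4m3fn.safetensors": "comfyanonymous/flux_text_encoders",
}


def resolve_url(repo_id: str, filename: str, revision: str = "main") -> str:
    """Direct-download URL for one file in a Hugging Face model repository."""
    return f"https://huggingface.co/{repo_id}/resolve/{quote(revision, safe='')}/{quote(filename)}"


def download_plan(files: dict = FILES) -> list:
    """List (file name, URL) pairs to feed into a browser or download manager."""
    return [(name, resolve_url(repo, name)) for name, repo in files.items()]


for name, url in download_plan():
    print(name, url)
```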

4. Where to place the files:

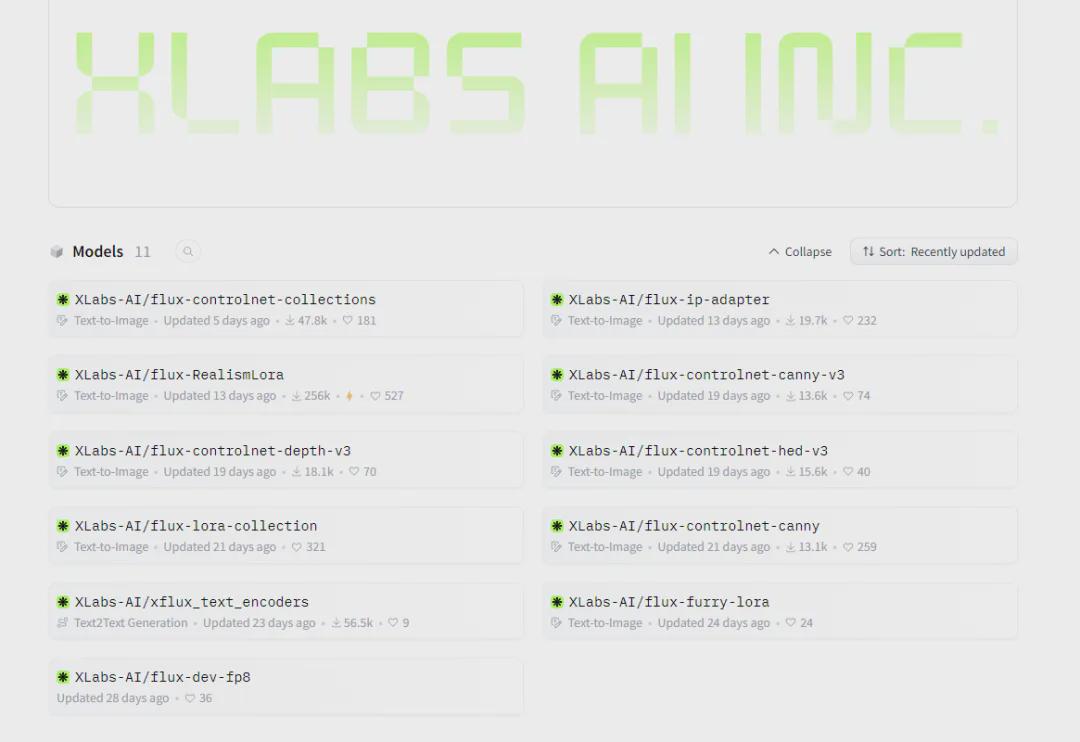

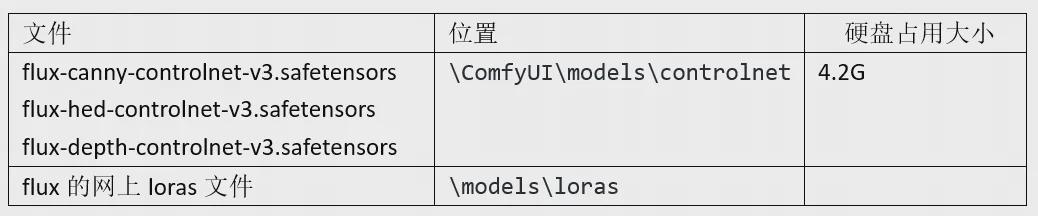
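A small script can confirm the files landed in the right folders before you start ComfyUI. The sketch assumes the layout from the ComfyUI Flux example page (UNet in `models/unet`, text encoders in `models/clip`, VAE in `models/vae`) and that newer ComfyUI builds also read `models/diffusion_models` and `models/text_encoders`; adjust the `EXPECTED` table if your screenshots differ.

```python
from pathlib import Path
from typing import Dict, List, Tuple

# File name -> folders (relative to the ComfyUI root) where ComfyUI should find it (assumption).
EXPECTED: Dict[str, Tuple[str, ...]] = {
    "flux1-dev.safetensors": ("models/unet", "models/diffusion_models"),
    "ae.safetensors": ("models/vae",),
    "clip_l.safetensors": ("models/clip", "models/text_encoders"),
    "t5xxl_fp16.safetensors": ("models/clip", "models/text_encoders"),
}


def find_misplaced(comfy_root: Path, expected: Dict[str, Tuple[str, ...]] = EXPECTED) -> List[str]:
    """Return one message per expected file that is missing from all of its accepted folders."""
    problems = []
    for filename, folders in expected.items():
        if not any((comfy_root / folder / filename).is_file() for folder in folders):
            problems.append(f"{filename}: expected in one of {', '.join(folders)}")
    return problems
```

For example, `for msg in find_misplaced(Path("ComfyUI")): print(msg)` prints nothing once everything is in place.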

5. Other resources, including ControlNet and LoRA models, are available on the XLabs-AI Hugging Face page at https://huggingface.co/XLabs-AI.

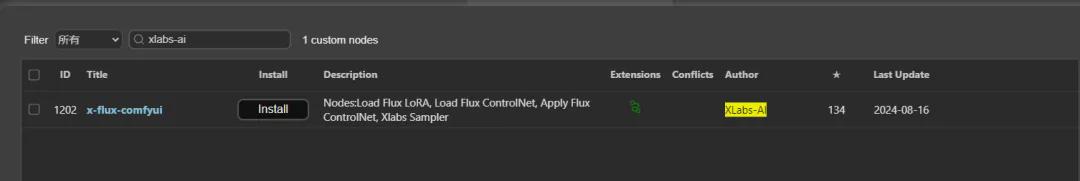

*To use Flux ControlNet or IP-Adapter models, install the XLabs-AI plug-in in ComfyUI: open the node manager in ComfyUI Manager, search for the plug-in, install it, and restart ComfyUI.

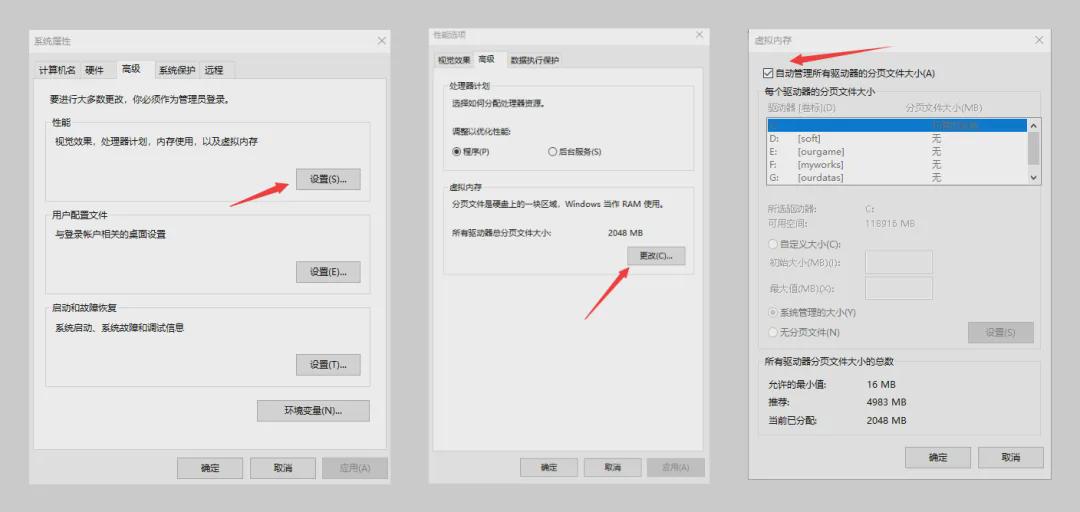

6. System memory settings

Running these models takes a lot of memory, so it is recommended to enable the system's virtual memory: System Settings > Advanced > Performance Settings > Advanced > Virtual Memory, then check the box for automatic management.

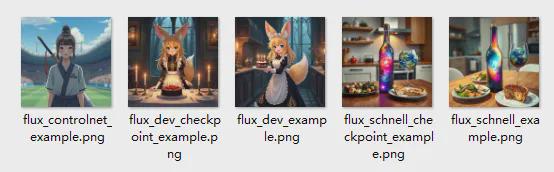
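To judge whether virtual memory is likely to be needed, you can compare the size of the model files you plan to load with your physical RAM. The sketch below is a rough heuristic under stated assumptions: weights in memory take about as much space as their files at the same precision, and a fixed fraction of RAM (80% here, an arbitrary choice) is available after the OS and other programs.

```python
from pathlib import Path
from typing import Iterable


def total_size_gb(paths: Iterable[Path]) -> float:
    """Sum the on-disk size of the model files you plan to load, in GB (10**9 bytes)."""
    return sum(Path(p).stat().st_size for p in paths) / 1e9


def likely_needs_virtual_memory(model_gb: float, ram_gb: float, usable_fraction: float = 0.8) -> bool:
    """Rough heuristic (assumption): flag when the model files alone exceed the
    share of RAM left over for ComfyUI, modelled as a fixed fraction."""
    return model_gb > ram_gb * usable_fraction
```

For example, pass the UNet, T5, CLIP and VAE files from your `models` folders to `total_size_gb`, then call `likely_needs_virtual_memory(size, 32.0)` on a 32 GB machine. The author's own 32 GB system reached 85% to 98% RAM usage with the fp8 schnell model, so the heuristic errs on the optimistic side.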

How do I get started quickly?

Official workflows are provided: just drag an example image from the official GitHub page into ComfyUI. Each image's file name indicates what it is used for.
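Dragging an image in works because ComfyUI saves the workflow as JSON inside the PNG's text metadata, under keys such as `workflow` and `prompt`. The sketch below reads those entries with only the standard library. It assumes the JSON sits in uncompressed `tEXt` chunks (compressed `zTXt`/`iTXt` chunks are skipped), and `read_png_text` and `load_workflow` are hypothetical helper names.

```python
import json
import struct
import zlib
from typing import Dict

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def read_png_text(data: bytes) -> Dict[str, str]:
    """Collect keyword -> text pairs from the tEXt chunks of a PNG file."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    texts: Dict[str, str] = {}
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # Each chunk: 4-byte length, 4-byte type, payload, 4-byte CRC over type + payload.
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        (crc,) = struct.unpack(">I", data[pos + 8 + length:pos + 12 + length])
        if zlib.crc32(ctype + body) != crc:
            raise ValueError(f"bad CRC in {ctype!r} chunk")
        if ctype == b"tEXt":
            keyword, _, text = body.partition(b"\x00")
            texts[keyword.decode("latin-1")] = text.decode("latin-1")
        elif ctype == b"IEND":
            break
        pos += 12 + length
    return texts


def load_workflow(path: str) -> dict:
    """Return the UI workflow JSON embedded in a ComfyUI-generated PNG."""
    with open(path, "rb") as f:
        return json.loads(read_png_text(f.read())["workflow"])
```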

Official example workflows

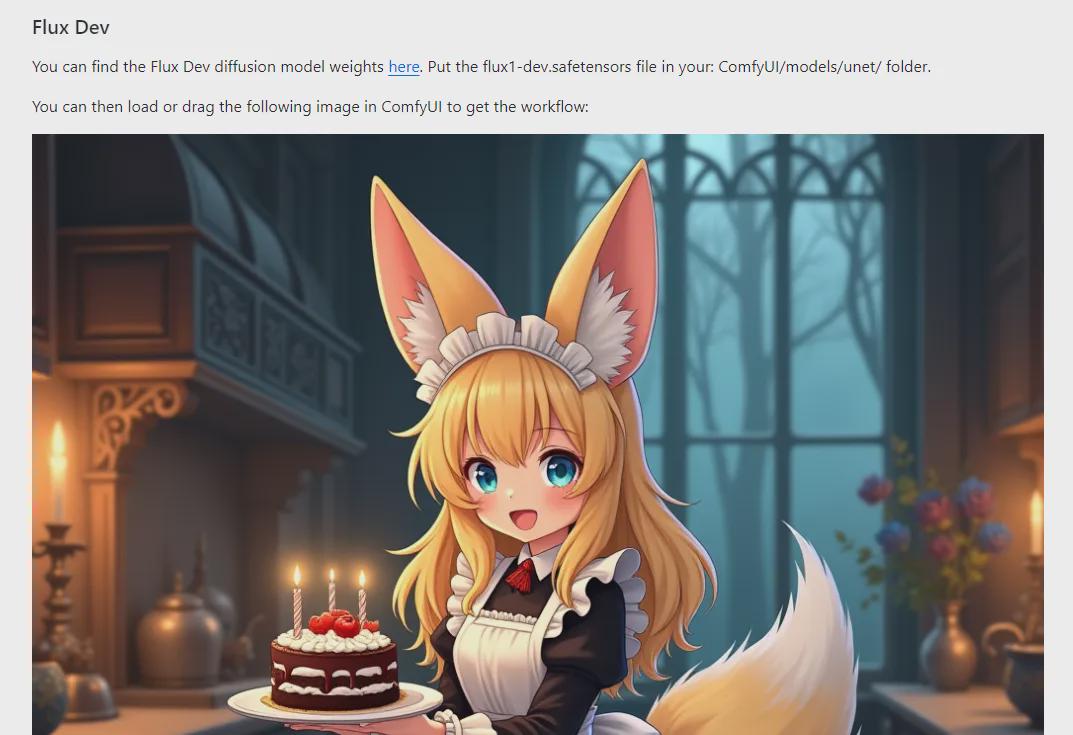

Taking the flux_dev_example workflow as an example, drag and drop the image into ComfyUI:

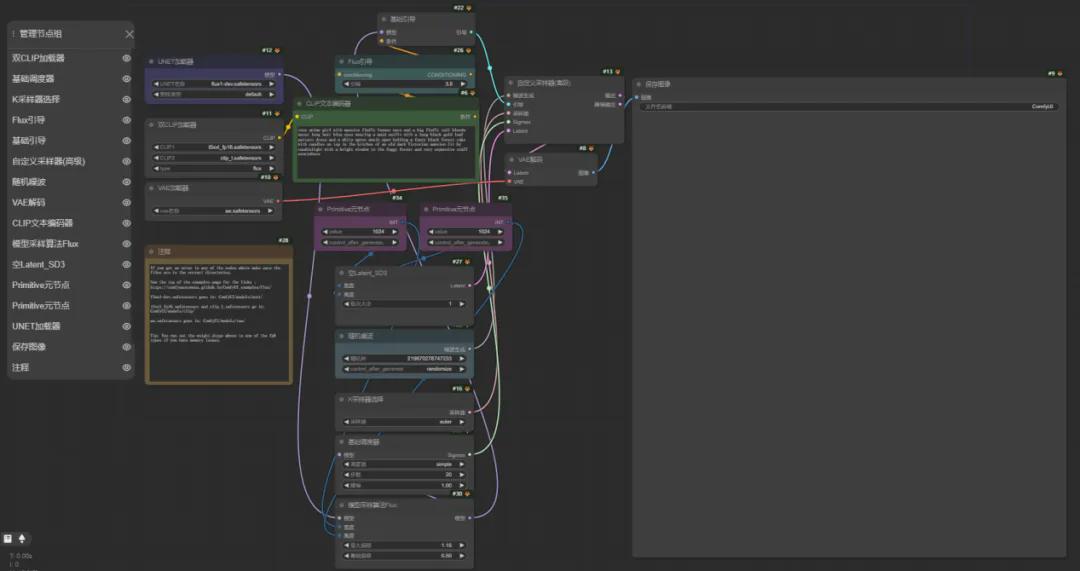

Get the following workflow:

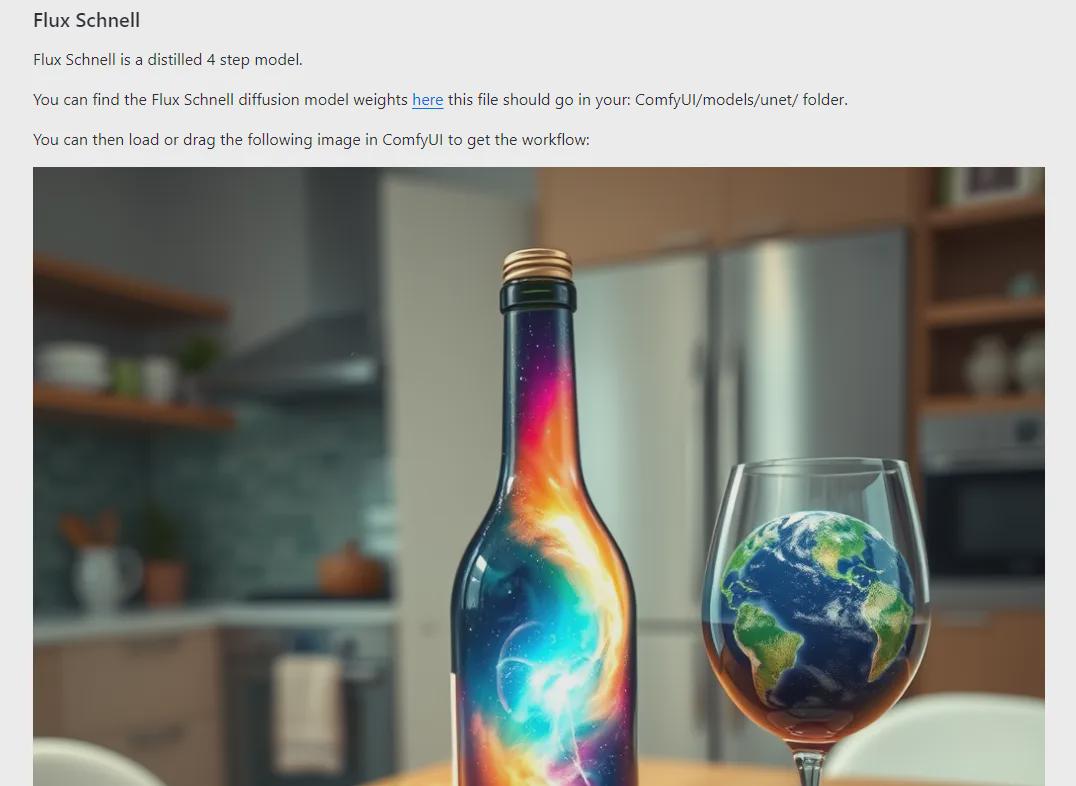

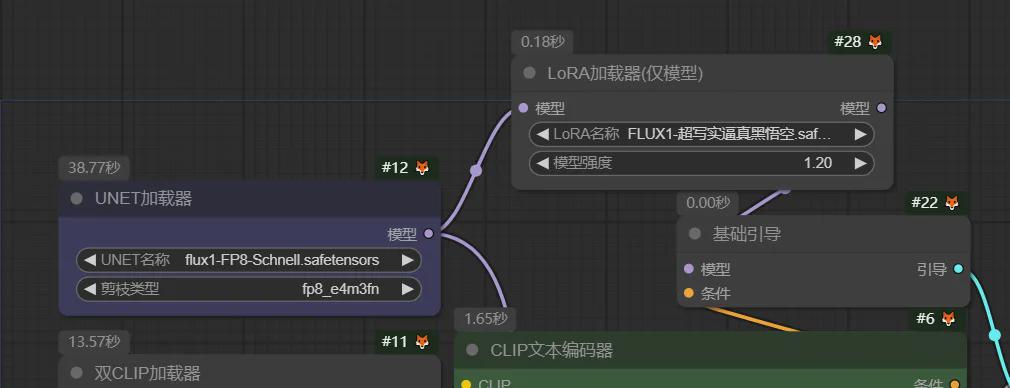

Dragging in flux_schnell_example gives the following:

Structure breakdown:

flux_schnell_example is the simplest workflow: it produces an image in just 4 steps.

Starting simple, try the following prompt in the flux_schnell_example workflow:

A girl wearing a school uniform, holding chalk to write happy words on the blackboard, real photography, school classroom, half body composition, movie lighting, rich details, Japanese low saturation
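Besides typing the prompt into the UI, a running ComfyUI server accepts jobs over HTTP: a workflow exported in API format (a dict of node id to `class_type` and `inputs`) is POSTed as `{"prompt": ...}` to the `/prompt` endpoint, as in ComfyUI's own script examples. The sketch assumes the default address `http://127.0.0.1:8188`, that you know the id of your positive `CLIPTextEncode` node, and that every node with a `steps` input should get the new step count; it only builds the request.

```python
import copy
import json
import urllib.request
from typing import Any, Dict

Graph = Dict[str, Dict[str, Any]]  # API-format workflow: node id -> {"class_type", "inputs"}


def with_prompt_and_steps(graph: Graph, text_node_id: str, text: str, steps: int) -> Graph:
    """Return a copy of the workflow with a new prompt text and sampling step count."""
    new_graph = copy.deepcopy(graph)
    new_graph[text_node_id]["inputs"]["text"] = text
    for node in new_graph.values():
        if "steps" in node.get("inputs", {}):
            node["inputs"]["steps"] = steps
    return new_graph


def build_queue_request(graph: Graph, server: str = "http://127.0.0.1:8188") -> urllib.request.Request:
    """Build (but do not send) the POST request that queues the workflow."""
    body = json.dumps({"prompt": graph}).encode("utf-8")
    return urllib.request.Request(
        f"{server}/prompt",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

To actually queue the job, load your exported API-format JSON (for example with `json.load`), call `with_prompt_and_steps(graph, node_id, prompt, 4)`, and pass the result of `build_queue_request` to `urllib.request.urlopen`. The node id depends on your exported file.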

Results:

(1) The words on the blackboard are rendered accurately.

(2) The figure's hands have a normal structure.

(3) The texture matches the realistic photographic style requested in the prompt.

(4) Images are generated quickly, with almost no waiting, and match the prompt.

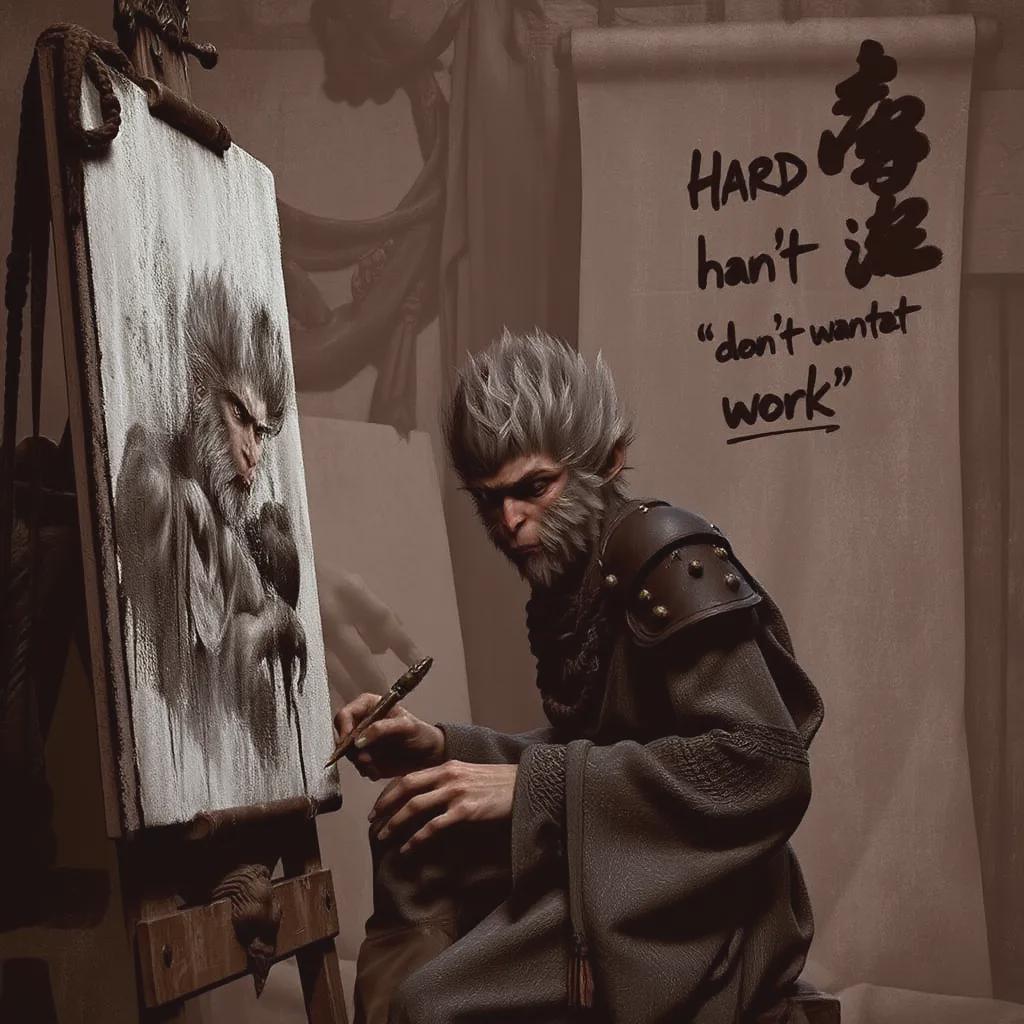

Next, we inserted a LoRA loader between the model loader and the rest of the workflow, downloaded a Black Myth: Wukong LoRA from the internet, and loaded it (the LoRA is from the liblib.art platform, by AI Game Classroom Bear).

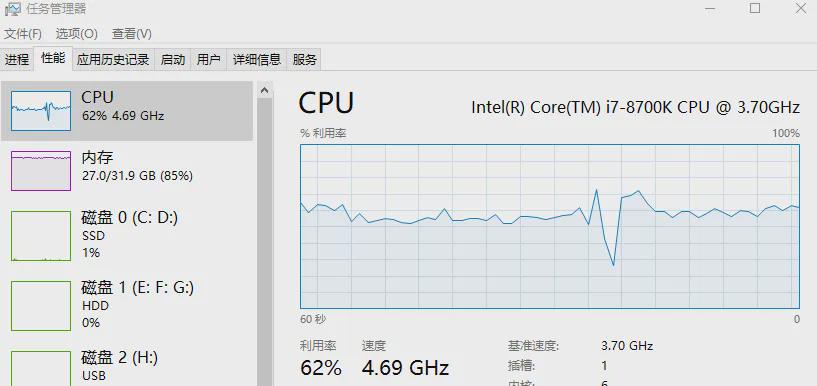
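In API-format terms, inserting a LoRA loader means adding one node and rewiring everything that consumed the model loader's output so that it consumes the LoRA node's output instead. The sketch assumes ComfyUI's `LoraLoaderModelOnly` node (inputs `model`, `lora_name`, `strength_model`), a model loader whose MODEL output is at index 0, and numeric node ids; the LoRA file name you pass in is hypothetical.

```python
import copy
from typing import Any, Dict

Graph = Dict[str, Dict[str, Any]]  # API-format workflow: node id -> {"class_type", "inputs"}


def insert_lora(graph: Graph, model_node_id: str, lora_name: str, strength: float = 1.0) -> Graph:
    """Return a copy of the workflow with a LoraLoaderModelOnly node after the model loader."""
    new_graph = copy.deepcopy(graph)
    lora_id = str(max(int(k) for k in new_graph) + 1)  # assumes numeric node ids
    old_link = [model_node_id, 0]  # link format: [source node id, output index]
    # Rewire every consumer of the loader's MODEL output to read from the LoRA node.
    for node in new_graph.values():
        inputs = node.get("inputs", {})
        for name, value in inputs.items():
            if value == old_link:
                inputs[name] = [lora_id, 0]
    # Add the LoRA node last so it keeps reading from the original loader.
    new_graph[lora_id] = {
        "class_type": "LoraLoaderModelOnly",
        "inputs": {"model": old_link, "lora_name": lora_name, "strength_model": strength},
    }
    return new_graph
```

Rewiring every consumer, rather than only the sampler, keeps workflows with extra model-processing nodes (such as the Flux dev example's guider) consistent.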

The model used is fp8 schnell, with the trigger words provided by the LoRA author. After the queue was started, RAM usage on the author's computer (32 GB of RAM) climbed to 85% to 98%; the graphics card is an NVIDIA 2080.

Enter the prompt:

Wukong, in the painting studio, painting, sketching, using a paintbrush, writing, working hard, (big text says "don't want to work")

Result:

With a simple description and no negative prompt, the character's hands, the text, and other details come out well after adding the LoRA.

Overall, apart from the full-size models, which need a powerful computer, the smaller Flux models also perform well and are good enough for everyday media work, concept drafts, and similar uses.

Beyond simply adding a LoRA, if you are familiar with ComfyUI you can add upscaling nodes to increase detail, or use advanced tools such as ControlNet, to get more and better results.

Download links for the models and workflows mentioned in this article:

Link: https://pan.baidu.com/s/1LSO-JUa26fdL7_dpT5jnRQ

Extract code: PKV7