In a blog post on October 24, Google announced the launch of the SAIF Risk Assessment tool, designed to help AI developers and organizations evaluate their security posture, identify potential risks, and implement stronger security measures.

Introduction to SAIF

Google released the Secure AI Framework (SAIF) last year to help users deploy AI models safely and responsibly. SAIF not only shares best practices but also gives the industry a framework for building AI systems that are secure by design.

To promote this framework, Google formed the Coalition for Secure AI (CoSAI) with industry partners to advance critical AI security measures.

SAIF Risk Assessment Tool

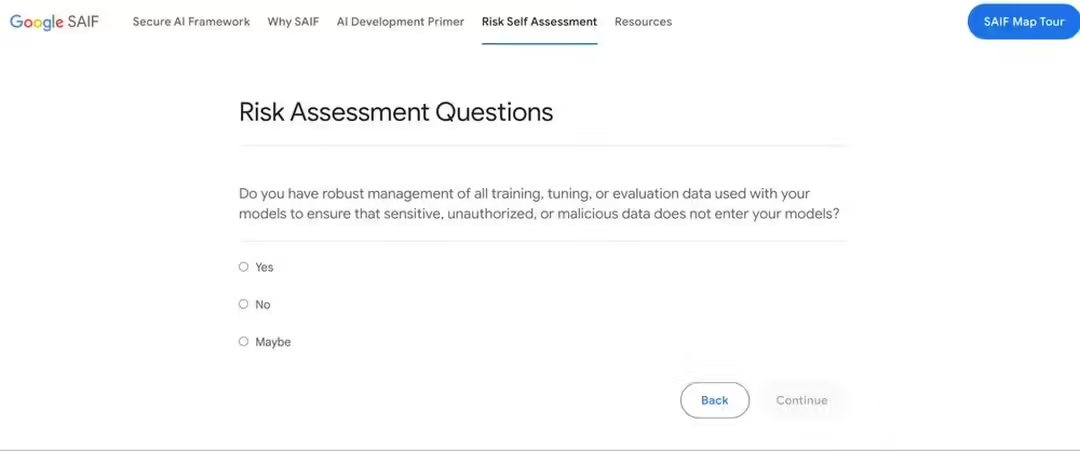

Google has now made the SAIF Risk Assessment tool available on its new website, SAIF.Google. The tool runs as a questionnaire: users answer a series of questions, and it generates a customized checklist that guides them in securing their AI systems.

The questionnaire covers a number of topics, including:

- Training, tuning, and evaluation of AI systems

- Access control to models and datasets

- Protection against attacks and adversarial inputs

- Secure design and coding frameworks for generative AI

As soon as the user finishes answering the questions, the tool generates a report highlighting specific risks to their AI systems, such as data poisoning, prompt injection, and model source tampering.

Each risk is accompanied by a detailed explanation and suggested mitigations. An interactive SAIF Risk Map also lets users explore how different security risks are introduced and mitigated during AI development.
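
To make the questionnaire-to-report flow concrete, here is a minimal Python sketch of how such a mapping could work. It is not Google's implementation: the question keys, the `Rule` type, the `build_report` function, and the mitigation wording are all hypothetical and chosen only for illustration. Only the three risk names come from the article.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Links one yes/no question to the risk it reveals (hypothetical model)."""
    question_key: str   # hypothetical identifier for a questionnaire item
    risky_answer: bool  # the answer that flags the risk
    risk: str           # risk name as mentioned in the article
    mitigation: str     # illustrative placeholder, not SAIF's actual wording


# Assumed rules: the real tool's questions and mitigations are not
# described in the article.
RULES = [
    Rule("validates_training_data", False, "data poisoning",
         "Track and verify the sources of training and tuning data."),
    Rule("accepts_untrusted_input", True, "prompt injection",
         "Validate and filter model inputs and outputs."),
    Rule("verifies_model_artifacts", False, "model source tampering",
         "Restrict write access to model artifacts and verify their integrity."),
]


def build_report(answers: dict[str, bool]) -> list[dict[str, str]]:
    """Return one entry per risk whose question received the risky answer."""
    report = []
    for rule in RULES:
        # Unanswered questions are skipped rather than assumed risky.
        if answers.get(rule.question_key) == rule.risky_answer:
            report.append({"risk": rule.risk, "mitigation": rule.mitigation})
    return report


if __name__ == "__main__":
    answers = {
        "validates_training_data": False,
        "accepts_untrusted_input": True,
        "verifies_model_artifacts": True,
    }
    for entry in build_report(answers):
        print(f"{entry['risk']}: {entry['mitigation']}")
```

Keeping the rules in a plain data table, separate from the reporting logic, means new questions or risks could be added without touching `build_report`. That is a design choice of this sketch, not a claim about how the SAIF tool is built.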