Megan Garcia, the mother of a 14-year-old who died by suicide, has filed a lawsuit in the United States against the chatbot platform Character.AI, its founders Noam Shazeer and Daniel De Freitas, and Google. The allegations include wrongful death, negligence, deceptive trade practices and product liability. The complaint alleges that the Character.AI platform is "unreasonably dangerous" and lacked safety precautions while being marketed to children.

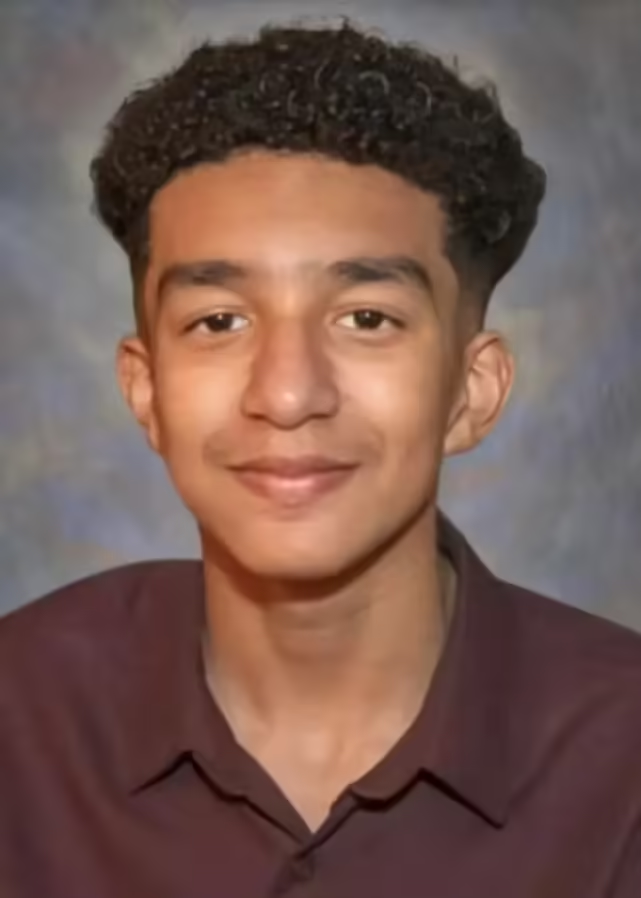

According to the complaint, 14-year-old Sewell Setzer III began using Character.AI last year and interacted with chatbots modeled after Game of Thrones characters, including Daenerys Targaryen. Setzer chatted with the bots for months and died by suicide on February 28, 2024, "seconds after" his last interaction.

The complaint accuses Character.AI of offering "anthropomorphic" artificial intelligence characters and chatbots that provide "unlicensed psychotherapy." The platform hosts mental health-focused chatbots with names like "Therapist" and "Are you lonely," which Setzer interacted with.

Garcia's attorney quoted a previous interview with Shazeer in which he said that he and De Freitas left Google to start their own company because of the "brand risk of launching anything interesting in a big company" and his desire to "maximize the acceleration" of this technology's development. The suit also mentions that they left after Google decided not to launch Meena, the large language model they had developed. Google, for its part, acquired the leadership team of Character.AI in August of this year.

Character.AI's website and mobile apps feature hundreds of customized chatbots. Many of them are based on popular characters from TV shows, movies and video games.

Character.AI has now announced some changes to the platform. In an email to The Verge, the company's head of communications, Chelsea Harrison, said, "We are heartbroken by the loss of one of our users and offer our deepest condolences to the family."

Some of the changes include:

- Model changes for minors (under 18) are designed to reduce the likelihood of encountering sensitive or suggestive content.

- The platform is improving detection of, response to and intervention on user input that violates its terms or community guidelines.

- A revised disclaimer appears in every chat, reminding users that the AI is not a real person.

- Users are notified when they have spent an hour in a session on the platform and can decide for themselves how to proceed.

Harrison said, "As a company, we take the safety of our users very seriously, and our Trust and Safety team has implemented a number of new safety measures over the past six months, including a pop-up window directing users to the National Suicide Prevention Lifeline when they use words like self-harm or suicidal thoughts."