On October 22 local time, Anthropic launched an upgraded version of Claude 3.5 Sonnet and a new model, Claude 3.5 Haiku. The upgraded Claude 3.5 Sonnet is not only stronger at programming but also introduces a new capability, computer use, which lets it operate a computer much as a person does. Following user instructions, it can move the cursor around the screen, click on relevant locations, and enter text through a virtual keyboard, simulating the way people interact with their own computers.
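For readers curious how such an agent works in practice: Anthropic describes computer use as a loop in which the model looks at screenshots and requests an action (move the mouse, click, type), while the developer's own code carries that action out and reports back. The sketch below is a minimal, hypothetical Python illustration of that client-side step only. The `VirtualScreen` class, the `execute_action` function, and the exact shape of the action dictionaries are assumptions loosely modeled on the beta tool's action names (such as `mouse_move`, `left_click` and `type`). It does not call Anthropic's API, and it records actions on a simulated screen rather than driving a real mouse or keyboard.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualScreen:
    """Hypothetical stand-in for a real desktop: it only records state."""
    width: int = 1024
    height: int = 768
    cursor: tuple[int, int] = (0, 0)
    clicks: list[tuple[int, int]] = field(default_factory=list)
    typed: str = ""


def execute_action(screen: VirtualScreen, action: dict) -> str:
    """Apply one model-requested action (hypothetical format) to the screen."""
    kind = action["action"]
    if kind == "mouse_move":
        x, y = action["coordinate"]
        # Reject coordinates that fall outside the simulated display.
        if not (0 <= x < screen.width and 0 <= y < screen.height):
            raise ValueError(f"coordinate {(x, y)} is off-screen")
        screen.cursor = (x, y)
        return f"moved to {x},{y}"
    if kind == "left_click":
        # Click wherever the cursor currently sits.
        screen.clicks.append(screen.cursor)
        return f"clicked at {screen.cursor[0]},{screen.cursor[1]}"
    if kind == "type":
        # Append the text as if it were entered on a virtual keyboard.
        screen.typed += action["text"]
        return f"typed {len(action['text'])} characters"
    raise ValueError(f"unsupported action: {kind}")


# Example: a short, made-up action sequence like one a model might request
# while searching for a sunrise viewpoint.
screen = VirtualScreen()
plan = [
    {"action": "mouse_move", "coordinate": [512, 40]},
    {"action": "left_click"},
    {"action": "type", "text": "Golden Gate Bridge sunrise viewpoint"},
]
for step in plan:
    print(execute_action(screen, step))
```

In a real integration, each result (typically alongside a fresh screenshot) would be sent back to the model so it can decide on the next action. Keeping execution in the developer's code is also what makes it possible to sandbox or veto risky actions.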

The upgraded Claude 3.5 Sonnet is available now, and computer use is open as a public beta.

The upgraded Claude 3.5 Sonnet shows significant improvements across the board, most notably in coding, where its capabilities are industry-leading.

Jared Kaplan, Anthropic's Chief Scientific Officer, said, "I think we're going to enter a new era where models can use all the same tools to accomplish tasks as people."

The release of the upgraded Claude 3.5 Sonnet marks a significant step forward for Anthropic's commercial AI models. The model is designed to go beyond the traditional chat box and become a true "AI agent".

An "AI agent" is an AI model that can use software and perform other computer tasks the way a human does. Some AI agents, like Cognition AI's Devin, specialize in programming. Anthropic, by contrast, positions its agent as general-purpose, claiming it can browse the web and use any website or application. Users can apply it however they see fit, whether to technical tasks like programming or to simple everyday tasks like travel planning.

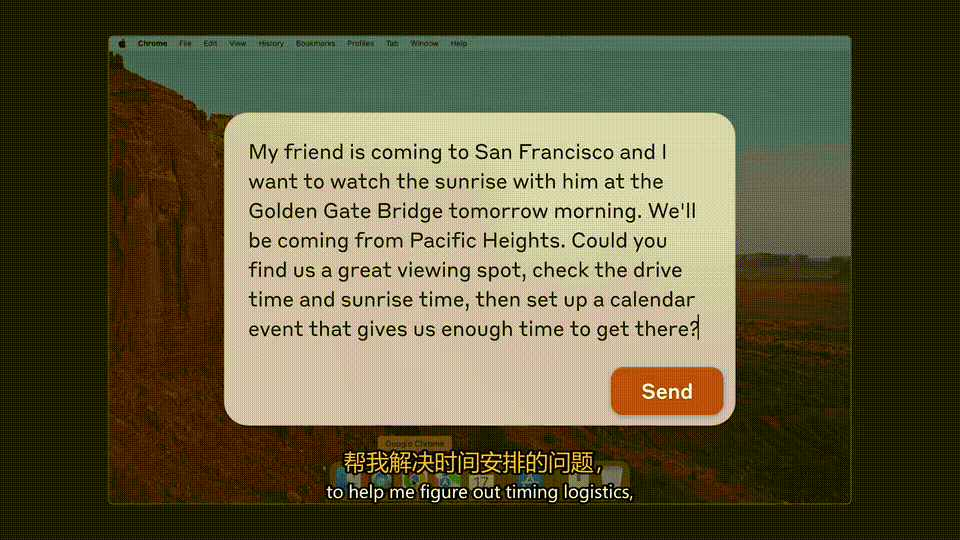

In a demo described by Wired, Claude was asked to plan a trip with a friend to see the Golden Gate Bridge at sunrise. The AI opened a web browser, searched Google for a good viewpoint and other details, and added the itinerary to a calendar application. While impressive, Wired notes that Claude overlooked other useful details, such as how to get there.

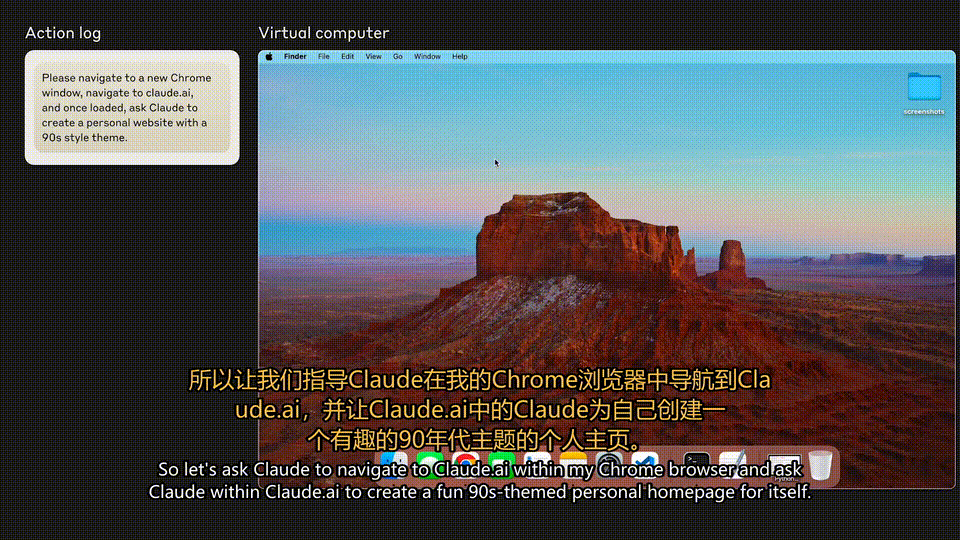

In another demo, Claude was asked to create a simple website. It used Microsoft's Visual Studio Code to do so, even starting a local server to test the site it had just built. A small error crept in along the way, but Claude fixed the code when prompted.

As promising as these AI models seem, they still have reliability issues, especially when it comes to writing code, and Anthropic's model is no exception. According to TechCrunch, even in simple tests such as booking flights and modifying reservations, Claude 3.5 Sonnet completed fewer than half of the tasks.

Beyond their technical imperfections, AI agents pose obvious security risks. Whether users are willing to let these unstable and sometimes unpredictable technologies access files on their PCs and use their web browsers remains an open question.

Anthropic argues that gradually releasing this limited, relatively safer model will help improve the safety of AI agents. In a statement, the company wrote: "Rather than waiting for more powerful models to become available, we believe it is better to give existing, safer models access to computers so that we can begin to observe and respond to potential problems, and incrementally enhance security measures as we increase the level of use."