As artificial intelligence (AI) technology continues to evolve, a set of related concepts and acronyms keeps appearing in articles: Prompt Engineering, Agents, knowledge bases, vector databases, RAG, knowledge graphs, and so on. Each of these plays a role in the development of large AI models, and they work together in various combinations to move AI technology forward. In this article, we walk through these and other terms that frequently appear alongside large models, and explain in plain language what each one means.

To make them easier to understand, let us first sort these terms into two groups. The first group consists of techniques for optimizing large models: Prompt Engineering, RAG, Fine-Tuning, Function Calling, and Agent.

The second group consists of general AI concepts: knowledge bases, vector databases, knowledge graphs, and AGI.

To start, here is a simple analogy diagram that gives a rough sense of how the techniques in the first group are positioned relative to one another:

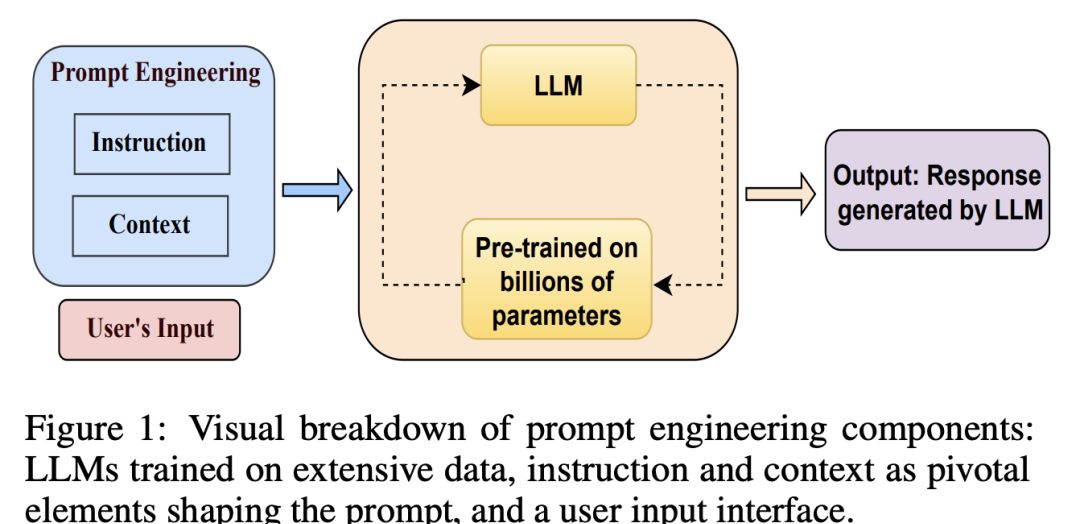

Prompt Engineering

The pure-prompt architecture is the most basic and intuitive way to interact with a large language model, and it is also the quickest way to get a task done. A well-crafted prompt can bring out the best in a model. As the previous article noted, prompting is the common foundation for both main paths to improving a model's output: fine-tuning and RAG. OpenAI's official documentation also provides a guide to prompt optimization, which the previous article covers in more detail.

Put another way, a prompt is guidance that draws out behavior the model already has. While learning from massive amounts of data, the model picks up many internal patterns, and these patterns make in-context learning possible. A good prompt activates the right patterns and steers the model toward a better answer.
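To make in-context learning concrete, here is a minimal sketch of a few-shot prompt in Python. The function name `build_few_shot_prompt` and the `Input:`/`Output:` layout are illustrative assumptions, not an official format. The resulting string would be sent to whichever model API you use, and no model weights change along the way.

```python
# A minimal few-shot prompt builder: the labeled examples act as in-context
# demonstrations that nudge the model toward the desired pattern.
from typing import List, Tuple


def build_few_shot_prompt(instruction: str,
                          examples: List[Tuple[str, str]],
                          query: str) -> str:
    """Assemble an instruction, labeled demonstrations, and the new input."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
        lines.append("")
    # The final input has no output, so the model is expected to complete it.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each input as positive or negative.",
    [("The battery lasts all day.", "positive"),
     ("The screen cracked within a week.", "negative")],
    "Setup took five minutes and everything just worked.",
)
print(prompt)
```

Changing the examples changes which patterns the model picks up, and that is exactly the lever prompt engineering pulls.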

In terms of interaction, this is the most human-friendly way to use a large language model. It is like having a conversation with a person: you say something and the AI responds. It is simple and direct, and it requires no weight updates or other complex processing of the model.

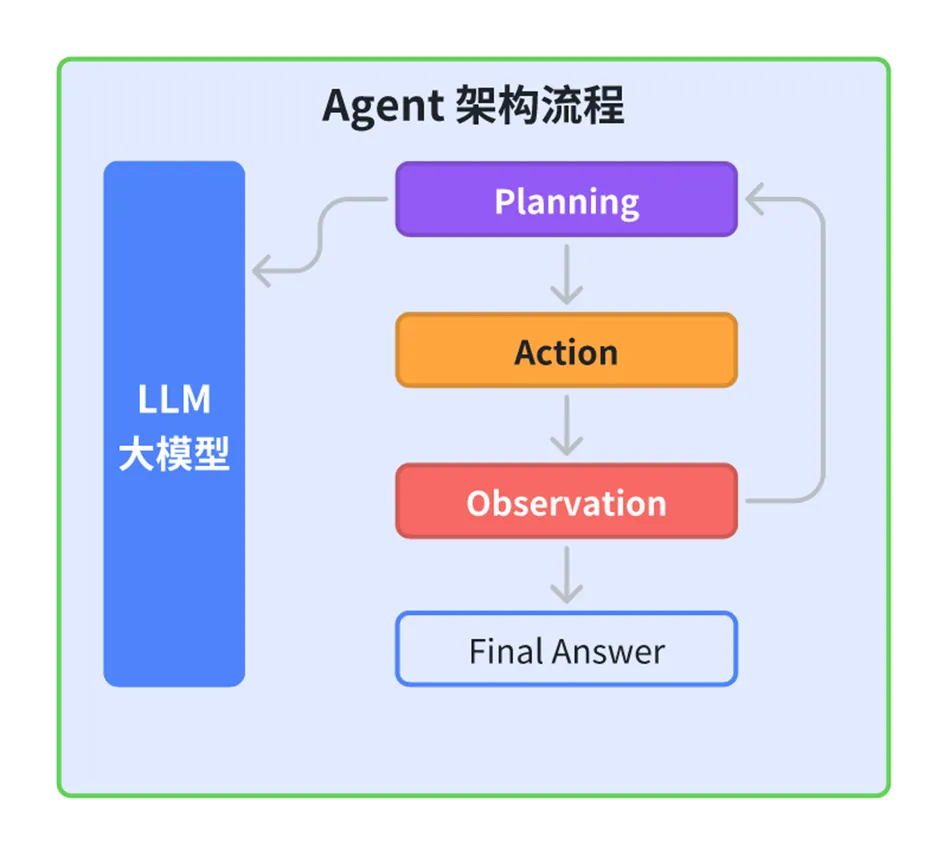

Agent

In one sentence: Agent = reasoning by a large model + tools that act on that reasoning to trigger the next actions and interactions.

In the era of large AI models, an Agent can be understood abstractly as a product or capability that interacts with its environment based on the results of its reasoning. It is sometimes called an "intelligent agent" or an "intelligent subject".

An Agent builds on the reasoning ability of a large language model. The model plans the task, the Agent carries out the plan by calling tools (Action), and it observes the result of each step (Observation). This loop ensures that the task is actually carried through to completion.
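The reason, act, observe loop described above can be sketched in a few lines of Python. This is a toy sketch under stated assumptions: `fake_llm` is a hypothetical, hard-coded stand-in for a real model call, and `get_weather` is a made-up tool backed by a fixed dictionary. A real Agent would send the task and the observations gathered so far to an LLM and parse its reply.

```python
# A minimal agent loop: reason (plan) -> act (call a tool) -> observe (record result).
from typing import Callable, Dict, List, Tuple

# Hypothetical tools the agent may use; the data is made up.
TOOLS: Dict[str, Callable[[str], str]] = {
    "get_weather": lambda city: {"Paris": "rainy", "Cairo": "sunny"}.get(city, "unknown"),
}


def fake_llm(task: str, observations: List[str]) -> Tuple[str, str]:
    """Hypothetical model stub: returns ("action", "tool:arg") or ("final", answer).
    A real model would read `task`; this stub ignores it and follows a fixed plan."""
    if not observations:
        # Reasoning step: the task needs weather data, so plan a tool call.
        return "action", "get_weather:Paris"
    weather = observations[-1]
    advice = "take an umbrella" if weather == "rainy" else "no umbrella needed"
    return "final", f"It is {weather} in Paris, so {advice}."


def run_agent(task: str, max_steps: int = 5) -> str:
    observations: List[str] = []
    for _ in range(max_steps):
        kind, content = fake_llm(task, observations)
        if kind == "final":
            return content
        tool_name, arg = content.split(":", 1)
        # Action: execute the chosen tool. Observation: keep its result for the next step.
        observations.append(TOOLS[tool_name](arg))
    return "Stopped: step limit reached."


print(run_agent("Do I need an umbrella in Paris today?"))
```

The `max_steps` cap is a common safeguard: it keeps a confused model from looping forever.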

An example to make it easier to understand:

Imagine a robot housekeeper. This robot understands your commands, such as "Please clean the living room", and is able to perform this task. The robot housekeeper is an agent that autonomously senses the environment (e.g., recognizes which areas are the living room), makes decisions (e.g., determines the order and method of cleaning), and performs the task (e.g., uses the vacuum cleaner to clean). In this analogy, a robotic housekeeper is an entity that can act autonomously and make complex decisions.

Function Calling

Function Calling usually refers to a model's ability to call specific functions, which may be built-in or user-defined. While performing a task, the model analyzes the problem and decides when and how to call these functions. For example, a language model answering a math question may call a calculation function to arrive at the answer. The Function Calling mechanism lets a model use external tools or internal functionality to handle particular tasks better.

The Function Calling mechanism consists of the following key components:

Function Definition: Pre-define the function that can be called, including the name, parameter type and return value type.

Function Call Request: A call request from the user or the system, containing the name of the function and the required parameters.

Function executor: the component that actually executes the function, which may be an external API or a local logic processor.

Result Return: after the function executes, its result is returned to the model (for example, ChatGPT) so that the conversation can continue.
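The four components above can be sketched in Python as follows. This is a minimal illustration under assumed names: `FUNCTION_SPECS`, `IMPLEMENTATIONS`, and `execute_call` are hypothetical. The spec layout is a simplified stand-in, not the exact JSON Schema format that production chat APIs expect. The call request is a hard-coded JSON string standing in for what a model would emit.

```python
import json
from typing import Any, Callable, Dict

# 1. Function definition: name, parameter types, and return type (simplified layout).
FUNCTION_SPECS = {
    "add_numbers": {
        "description": "Add two numbers.",
        "parameters": {"a": "number", "b": "number"},
        "returns": "number",
    }
}

# 3. Function executor: the local implementations behind each definition.
IMPLEMENTATIONS: Dict[str, Callable[..., Any]] = {
    "add_numbers": lambda a, b: a + b,
}


def execute_call(request_json: str) -> str:
    """Parse a call request, run the matching function, and return a JSON result."""
    # 2. Function call request: a function name plus arguments, e.g. emitted by the model.
    request = json.loads(request_json)
    name, args = request["name"], request["arguments"]
    if name not in FUNCTION_SPECS:
        return json.dumps({"error": f"unknown function {name}"})
    missing = set(FUNCTION_SPECS[name]["parameters"]) - set(args)
    if missing:
        return json.dumps({"error": f"missing arguments {sorted(missing)}"})
    result = IMPLEMENTATIONS[name](**args)
    # 4. Result return: this JSON would be appended to the conversation
    #    so the model can continue and phrase the final answer.
    return json.dumps({"name": name, "result": result})


model_request = '{"name": "add_numbers", "arguments": {"a": 17, "b": 25}}'
print(execute_call(model_request))
```

The model only proposes the call. Validation and execution stay in the application's code, so an unknown or malformed request returns an error instead of running arbitrary code.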

An example to understand:

Imagine you are using a smartphone. When you want to take a picture, you open the camera app, which provides the picture-taking function. You "call" this function by tapping the camera icon, and then you can take a picture, edit it, and so on. In this analogy, the smartphone is the large model, your decision to take a picture is the model's conclusion, the camera app is the predefined function, and opening the app and using it is the Function Call made on the basis of the model's analysis.

An LLM's Function Calling mechanism greatly extends what it can do. Because the model can call external functions dynamically during a conversation, it can provide real-time, personalized, and interactive services. This mechanism improves the user experience and also gives developers powerful tools for building smarter, more robust dialog systems and applications.

Function Calling VS Agent

Function Calling is like calling a specific function or tool to help you accomplish a specific task, whereas an Agent is more like an individual who can think and act independently, and it can accomplish a series of complex tasks without direct human guidance.

In more advanced applications, Function Calling can be regarded as one specific way an AI Agent takes action: a strategy the agent uses to draw on external resources or services while executing a task. For example, during a dialog or while solving a problem, an AI Agent with Function Calling capability can initiate a function call to obtain additional information or perform a specific operation, and so serve the user's needs better.

function_call refers more narrowly to the specific behavior in which the model directly generates a call to a function. It is a single step or operation within the model's execution flow.

An agent is a broader concept that represents a whole system with intelligent behavior, including perception, reasoning, decision-making, and execution, rather than just initiating a function call.

To summarize, function_call is an important part of building an efficient and intelligent Agent, which is used to realize the Agent's operation interface and interaction capability to the external world, while the Agent is a complete entity that contains more complex logic and life cycle management.

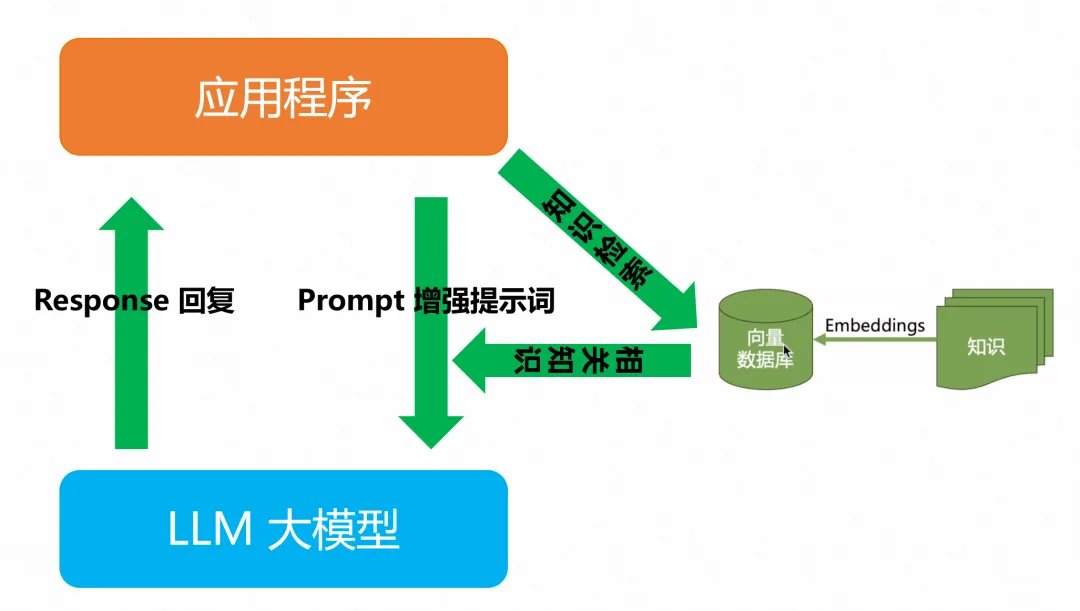

RAG (Retrieval-Augmented Generation)

In a nutshell, RAG gives a large model knowledge that was not in its original training data.

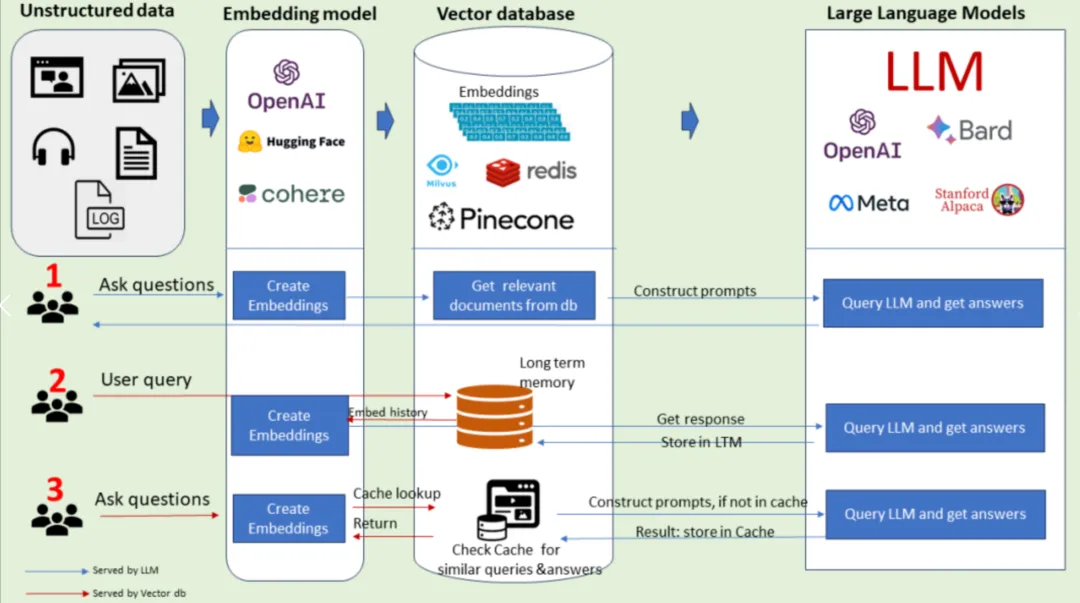

RAG (Retrieval-Augmented Generation) is a knowledge-augmentation approach that combines retrieval and generation to meet the challenge of querying and generating complex, changing information. In the era of large models, RAG brings in external data sources that were not used in training. Examples include data newer than the training cutoff date and internal data that is not publicly available. This gives large models access to more accurate and up-to-date information and can significantly improve the quality of information queries and generated answers.

The RAG architecture combines embeddings with vector database technology. An embedding converts text into a vector, and these vectors are stored in a vector database to support similarity calculations and fast lookups. When user input arrives, it is converted into a vector as well. The system then finds the most similar vectors in the database, which correspond to the most relevant pieces of knowledge, and passes them to the large model so it can generate a more accurate and comprehensive answer.
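Here is a deliberately tiny sketch of that flow in Python. It makes two loud assumptions. First, `embed` is a word-count "embedding" standing in for a real embedding model. Second, a plain list of `(chunk, vector)` pairs stands in for the vector database. The sketch does show the core RAG steps: embed the question, find the most similar stored chunk by cosine similarity, and prepend that chunk to the prompt.

```python
import math
import re
from collections import Counter
from typing import Dict


def embed(text: str) -> Dict[str, float]:
    """Toy 'embedding': word counts. A real system would call an embedding model."""
    return dict(Counter(re.findall(r"[a-z]+", text.lower())))


def cosine(u: Dict[str, float], v: Dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(u[w] * v.get(w, 0.0) for w in u)
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


knowledge = [
    "The refund window for online orders is 30 days.",
    "Our office is closed on public holidays.",
    "Shipping to Europe takes five to seven business days.",
]
# Stand-in for a vector database: each chunk stored next to its vector.
store = [(chunk, embed(chunk)) for chunk in knowledge]

question = "How many days is the refund window?"
q_vec = embed(question)
best_chunk = max(store, key=lambda item: cosine(q_vec, item[1]))[0]

# Augment the prompt with the retrieved knowledge before calling the model.
prompt = f"Answer using this context:\n{best_chunk}\n\nQuestion: {question}"
print(prompt)
```

Real embeddings capture meaning rather than shared words, so "return period" could match "refund window". That semantic matching is why production systems use learned embedding models instead of word counts.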

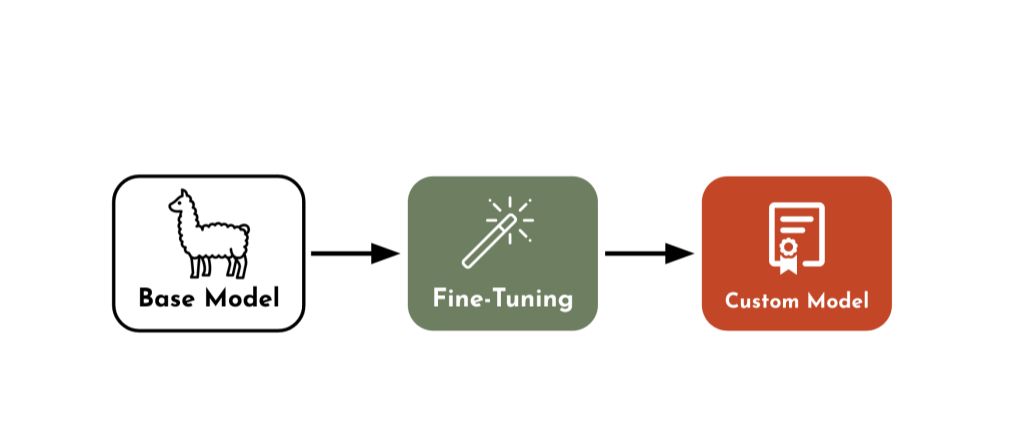

Fine-Tuning: Deep Learning and Long-Term Memory

Fine-tuning is a technique for optimizing a pre-trained model with domain-specific data to improve its performance on specific tasks. It further trains a large pre-trained model on domain-specific datasets so that the model adapts better to, and performs better at, tasks in that domain.
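Fine-tuning a real LLM involves frameworks and billions of parameters, but the core idea fits in a toy numeric analogy. The sketch below is not how any particular LLM is fine-tuned. It takes a one-variable linear model whose "pre-trained" weights (assumed values) came from general data, and it continues gradient descent on a small domain dataset that follows a different pattern. The function names are illustrative.

```python
# Toy analogy for fine-tuning: start from "pre-trained" parameters and keep
# training (gradient descent on mean squared error) using domain-specific data.
from typing import List, Tuple


def mse(w: float, b: float, data: List[Tuple[float, float]]) -> float:
    """Mean squared error of the model y = w * x + b on the data."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def fine_tune(w: float, b: float, data: List[Tuple[float, float]],
              lr: float = 0.05, epochs: int = 200) -> Tuple[float, float]:
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# "Pre-trained" weights learned on general data (assumed values).
pretrained_w, pretrained_b = 2.0, 0.0
# Domain data follows a different pattern: y = 3x + 1.
domain_data = [(x, 3 * x + 1) for x in [0.0, 0.5, 1.0, 1.5, 2.0]]

before = mse(pretrained_w, pretrained_b, domain_data)
w, b = fine_tune(pretrained_w, pretrained_b, domain_data)
after = mse(w, b, domain_data)
print(f"domain loss before: {before:.3f}, after: {after:.5f}")
```

Unlike RAG, the new knowledge ends up inside the parameters themselves. That is why the section heading speaks of "long-term memory".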

Core reasons for Fine-tuning

Customized functionality: the core reason for fine-tuning is to give large models more customized capabilities. General-purpose large models, while powerful, may not perform well in specific domains. Fine-tuning helps the model adapt to the needs and characteristics of a specific domain.

Domain knowledge learning: by fine-tuning on domain-specific datasets, a large model can learn the knowledge and language patterns of the domain, which helps it perform better on specific tasks.

RAG VS Fine-tuning

Knowledge acquisition and fusion: RAG gives language models strong knowledge retrieval and fusion capabilities. It overcomes a key limitation of traditional fine-tuning, where knowledge is frozen at training time, and it can substantially improve both output quality and knowledge coverage.

Hallucination reduction: a RAG system grounds its responses in retrieved evidence, so it tends to be less prone to hallucination. Fine-tuning can also reduce a model's tendency to hallucinate to some extent, but it does not eliminate that risk.

Knowledge Base

Vertical industries and enterprises often need to build a knowledge base tailored to their own business, whether for an industry domain or for internal use. Through RAG, fine-tuning, and other techniques, we can turn a general-purpose model into an "industry expert" with a deep understanding of a specific industry or organization, so that it serves specific business needs better.

The technical architecture of the knowledge base is divided into two parts:

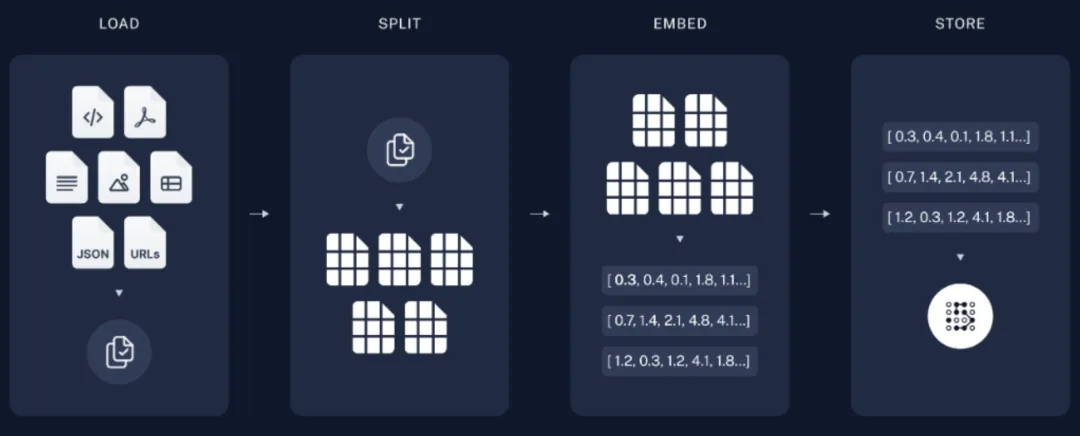

1. Offline knowledge data vectorization:

Load: First, the system reads and loads the data or knowledge base through Document Loaders. This may include text files, PDF, Word documents and other formats.

Split: a text splitter breaks large documents into smaller blocks or paragraphs (chunks), which makes each piece of data easier to process and vectorize.

Vectorize: each chunk is converted into a numeric vector by an embedding algorithm (e.g., Word2Vec or BERT). These vectors capture the semantic information of the text.

Storage: these vectorized data blocks are stored into a vector database (VectorDB). Such databases are specialized for storing and retrieving vectorized data, allowing efficient similarity searches.
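The four offline steps (load, split, vectorize, store) can be sketched in plain Python. Several parts are assumptions made to keep the sketch self-contained. "Loading" is just an in-memory string rather than a real PDF or Word loader. `split_text` uses fixed-size character windows with overlap, which is one common splitting strategy among several. `vectorize` is a hashed bag-of-words stand-in for a real embedding model such as BERT. A Python list stands in for the vector database. All function names are hypothetical.

```python
import hashlib
import math
from typing import Dict, List


def split_text(text: str, chunk_size: int = 40, overlap: int = 10) -> List[str]:
    """Split: fixed-size character windows that overlap, so that a sentence
    cut at a boundary still appears whole-ish in a neighboring chunk."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap
    chunks, start = [], 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break
        start += step
    return chunks


def vectorize(chunk: str, dim: int = 16) -> List[float]:
    """Vectorize: hash each word into one of `dim` buckets, then L2-normalize.
    A stand-in for a real embedding model."""
    vec = [0.0] * dim
    for word in chunk.lower().split():
        # md5 gives a hash that is stable across runs, unlike Python's built-in hash().
        bucket = int(hashlib.md5(word.encode()).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


# Store: a list of records stands in for a vector database.
vector_store: List[Dict[str, object]] = []


def ingest(document: str) -> int:
    """Split and vectorize one document, store each chunk, and return the store size."""
    for chunk in split_text(document):
        vector_store.append({"id": len(vector_store), "text": chunk, "vector": vectorize(chunk)})
    return len(vector_store)


# Load: here the document is an in-memory string.
doc = ("Employees may work remotely two days per week. "
       "Remote days must be approved by a manager in advance.")
print(ingest(doc), "chunks stored")
```

The overlap is a trade-off. A larger overlap lowers the chance that a relevant sentence is split across chunks, at the cost of storing more redundant text.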

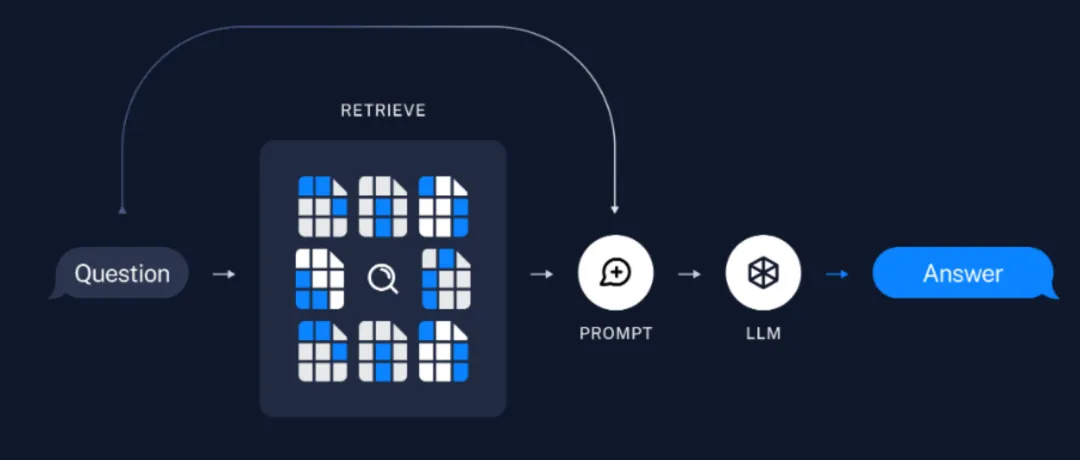

2. Online knowledge retrieval and answer generation:

Retrieval: When a user asks a question, the system uses a retriever to retrieve the relevant data chunks (Chunks) from the vector database based on the user input. The retrieval process may involve calculating the similarity between the user input and the vectors in the database and finding the few most relevant data chunks.

Generation: The retrieved data chunks, along with the user's questions, are provided as input to the large language model. The language model generates answers based on this information. This process will involve understanding and integrating the retrieved knowledge and then answering the question in natural language.
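The online half can be sketched as top-k retrieval followed by prompt assembly. To keep the example self-contained, the chunk vectors and the query vector are assumed, hand-picked 3-dimensional values rather than real embeddings. `retrieve` and `build_prompt` are hypothetical helper names, and the prompt wording is only one reasonable choice.

```python
import math
from typing import List, Tuple


def cosine(u: List[float], v: List[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def retrieve(query_vec: List[float], chunks: List[Tuple[str, List[float]]],
             k: int = 2) -> List[str]:
    """Retrieval: rank stored chunks by similarity to the query and keep the top k."""
    scored = sorted(chunks, key=lambda c: cosine(query_vec, c[1]), reverse=True)
    return [text for text, _ in scored[:k]]


def build_prompt(question: str, context_chunks: List[str]) -> str:
    """Generation input: the retrieved chunks plus the user's question."""
    context = "\n".join(f"- {c}" for c in context_chunks)
    return ("Answer the question using only the context below. "
            "If the context is insufficient, say so.\n\n"
            f"Context:\n{context}\n\nQuestion: {question}")


# Assumed toy vectors standing in for real embeddings.
chunks = [
    ("Remote work is allowed two days per week.", [0.9, 0.1, 0.0]),
    ("Remote days need manager approval.", [0.7, 0.4, 0.1]),
    ("The cafeteria opens at 8 a.m.", [0.0, 0.2, 0.9]),
]
query_vec = [0.85, 0.2, 0.05]  # assumed embedding of the question
top = retrieve(query_vec, chunks, k=2)
prompt = build_prompt("How often can I work from home?", top)
print(prompt)
```

The resulting `prompt` is what the large language model receives. Telling the model to answer only from the context is a common way to keep it grounded in the retrieved knowledge.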

Vector Database

A vector database is a system specifically designed to store and query vector data. Vector data here usually refers to the form of numerical vectors into which raw data (e.g., text, speech, images, etc.) is converted by some algorithm.

A knowledge base is often stored in a vector database. Vector databases use a vector space model to store and retrieve high-dimensional data efficiently, which gives large AI models and Agents strong data support.

Differences between vector databases and traditional databases:

Traditional databases mainly deal with structured data, such as tabular data stored in relational databases. Vector databases, on the other hand, are more adept at handling unstructured data that has no fixed format or structure, such as text documents, image files, and audio recordings.

Application scenarios for vector databases:

In the field of machine learning and deep learning, raw data often needs to be converted into vector form for further processing and analysis. Vector databases are very useful in this regard as they can efficiently process these vectorized data.

Advantages of vector databases:

- Efficient storage: vector databases optimize their storage mechanisms to store large amounts of high-dimensional vector data efficiently.

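- Efficient indexing: vector databases use specialized index structures to locate and retrieve vectors quickly. Examples include tree-based structures such as KD-trees and ball trees, and, for high-dimensional embeddings, approximate nearest-neighbor indexes such as HNSW graphs and inverted-file (IVF) indexes.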

- Efficient Search: Vector databases are able to perform fast similarity searches to find data points that are most similar to the query vector, which is important for applications such as recommender systems, image recognition, and natural language processing.
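A common indexing idea behind fast similarity search is the inverted-file (IVF) index. Vectors are grouped into buckets around centroids, and a query scans only the few buckets nearest to it instead of the whole collection. The sketch below is a simplified illustration, not any library's API. The `IVFIndex` class is hypothetical, and its centroids are supplied by hand, whereas real systems typically learn them with k-means.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def l2(a: Vector, b: Vector) -> float:
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


class IVFIndex:
    """Simplified inverted-file index: each vector goes into the bucket of its
    nearest centroid, and a query scans only the `nprobe` closest buckets."""

    def __init__(self, centroids: List[Vector]) -> None:
        # Assumption: centroids are given, not trained.
        self.centroids = centroids
        self.buckets: Dict[int, List[Tuple[str, Vector]]] = {i: [] for i in range(len(centroids))}

    def _nearest_centroids(self, vec: Vector, n: int) -> List[int]:
        order = sorted(range(len(self.centroids)), key=lambda i: l2(vec, self.centroids[i]))
        return order[:n]

    def add(self, item_id: str, vec: Vector) -> None:
        bucket = self._nearest_centroids(vec, 1)[0]
        self.buckets[bucket].append((item_id, vec))

    def search(self, query: Vector, k: int = 1, nprobe: int = 1) -> List[Tuple[str, float]]:
        candidates: List[Tuple[str, Vector]] = []
        for b in self._nearest_centroids(query, nprobe):
            candidates.extend(self.buckets[b])
        # Exact distances are computed only for the candidates, not the whole collection.
        scored = sorted(((item_id, l2(query, v)) for item_id, v in candidates), key=lambda t: t[1])
        return scored[:k]


index = IVFIndex(centroids=[[0.0, 0.0], [10.0, 10.0]])
for item_id, vec in [("cat", [0.5, 1.0]), ("dog", [1.0, 0.2]),
                     ("car", [9.5, 10.5]), ("bus", [10.2, 9.1])]:
    index.add(item_id, vec)

print(index.search([9.0, 9.8], k=1, nprobe=1))
```

The search is approximate. If the true nearest neighbor sits in a bucket that was not probed, it is missed, so raising `nprobe` trades speed for recall.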

Ability to handle complex data:

Vector databases excel in handling complex data containing numerical features, text embedding or image embedding. These data, after vectorization, can be searched using the search capabilities of vector databases for more accurate matching and retrieval.

Knowledge Graph

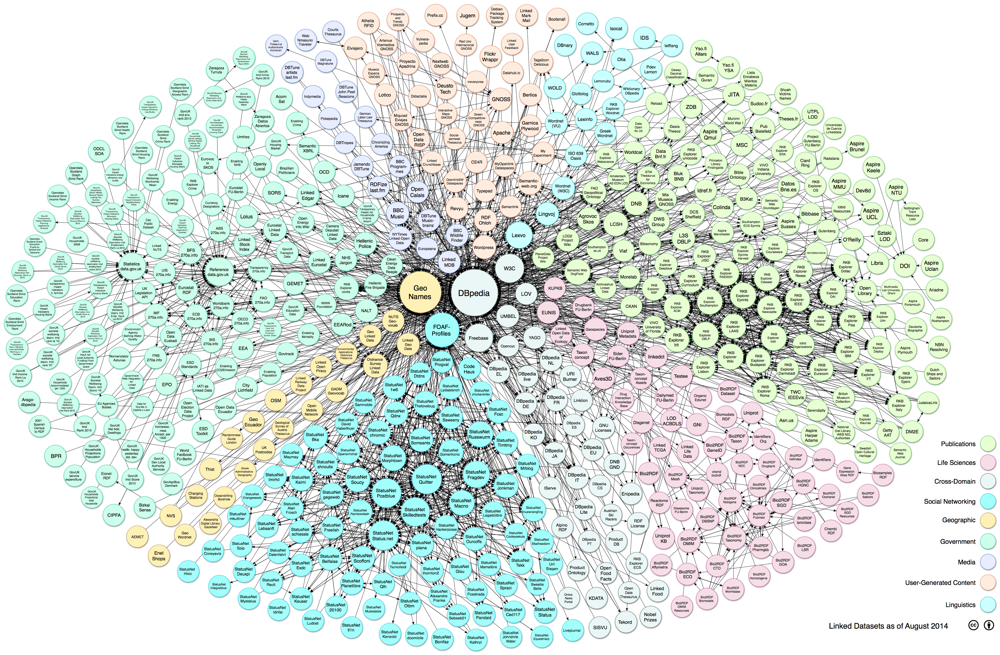

A knowledge graph is essentially a kind of semantic network: a knowledge base with a directed graph structure, where the nodes represent entities or concepts and the edges represent the semantic relationships between them.

Definition of Knowledge Graph:

A knowledge graph is a special type of database that uses a graph structure to store and represent knowledge. Such databases are based on entities (e.g., people, places, organizations, etc.) and the relationships between them.

The process of knowledge graph construction:

Semantic extraction techniques identify entities and their relationships in text, and this information is then organized into a graph structure.

Entities have specific attributes, e.g. a person may have attributes such as name, age, etc., whereas relationships describe connections between entities, e.g. "resides in", "belongs to", etc.
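The entity, attribute, and relationship model above can be sketched as a small triple store in Python. The `KnowledgeGraph` class and its method names are hypothetical. The sketch skips the semantic-extraction step and enters the made-up triples by hand, whereas a real pipeline would extract them from text. Pattern queries use `None` as a wildcard, loosely in the spirit of graph query languages.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Set, Tuple

Triple = Tuple[str, str, str]  # (head entity, relation, tail entity)


class KnowledgeGraph:
    """Directed graph: nodes are entities with attributes; edges are labeled relations."""

    def __init__(self) -> None:
        self.attributes: Dict[str, Dict[str, object]] = defaultdict(dict)
        self.triples: Set[Triple] = set()

    def add_entity(self, name: str, **attrs: object) -> None:
        self.attributes[name].update(attrs)

    def add_relation(self, head: str, relation: str, tail: str) -> None:
        self.triples.add((head, relation, tail))

    def query(self, head: Optional[str] = None, relation: Optional[str] = None,
              tail: Optional[str] = None) -> List[Triple]:
        """Return the triples matching the pattern, sorted; None acts as a wildcard."""
        return sorted(t for t in self.triples
                      if (head is None or t[0] == head)
                      and (relation is None or t[1] == relation)
                      and (tail is None or t[2] == tail))


kg = KnowledgeGraph()
kg.add_entity("Alice", age=34)
kg.add_entity("Berlin", type="city")
kg.add_entity("Acme Corp", type="organization")
kg.add_relation("Alice", "resides_in", "Berlin")
kg.add_relation("Alice", "belongs_to", "Acme Corp")
kg.add_relation("Acme Corp", "located_in", "Berlin")

print(kg.query(head="Alice"))
print(kg.query(relation="located_in"))
```

Production systems usually store such triples in a graph database or an RDF store, but the underlying model of nodes, attributes, and labeled directed edges is the same.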

Data modeling for knowledge graphs:

Knowledge graphs use a structured data model, which means that data is stored in a fixed format that is easy to manage and query. Each entity has its attributes and relationships with other entities.

The value of knowledge graphs:

Knowledge graphs can reveal how a field of knowledge develops. Through data mining, information processing, and graph visualization, they show complex connections between different entities. They capture not only a static knowledge structure but also how knowledge evolves over time. They are widely used in intelligent recommendation, natural language processing, machine learning, and other fields. In search engines in particular, they can improve search accuracy and give users more relevant results. Outside the core AI field, they are also widely used in risk control and healthcare.
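One way a knowledge graph "shows complex connections between entities" is by finding a chain of relations linking two of them. Below is a minimal breadth-first search over directed triples. The triples are made up for illustration and `find_path` is a hypothetical helper. Because the search follows edge direction, a path from A to B does not imply a path from B to A.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Triple = Tuple[str, str, str]  # (head, relation, tail)

# Made-up triples for illustration only.
TRIPLES: List[Triple] = [
    ("Aspirin", "treats", "Headache"),
    ("Headache", "symptom_of", "Migraine"),
    ("Migraine", "studied_by", "Dr. Lee"),
    ("Dr. Lee", "works_at", "City Hospital"),
]


def find_path(triples: List[Triple], start: str, goal: str) -> Optional[List[Triple]]:
    """Breadth-first search along directed edges; returns the shortest chain of
    triples linking start to goal, or None if no such chain exists."""
    adjacency: Dict[str, List[Tuple[str, str]]] = {}
    for head, rel, tail in triples:
        adjacency.setdefault(head, []).append((rel, tail))
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for rel, nxt in adjacency.get(node, []):
            if nxt not in visited:
                visited.add(nxt)
                queue.append((nxt, path + [(node, rel, nxt)]))
    return None


for head, rel, tail in find_path(TRIPLES, "Aspirin", "City Hospital") or []:
    print(f"{head} -[{rel}]-> {tail}")
```

Breadth-first search returns a path with the fewest hops, which is often what "how are these two entities related?" queries want.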

AGI

AGI stands for "Artificial General Intelligence", the ultimate vision of AI development. It refers to an AI system with a broad range of cognitive capabilities that can behave intelligently across many domains and tasks, as humans do, including but not limited to understanding, reasoning, learning, planning, communication, and perception.

Unlike the narrow AI (Artificial Narrow Intelligence) that is common today, AGI would be able to handle a wide range of different problems, not just the specific tasks an algorithm was designed for. Narrow AI systems often excel in specific domains, such as speech recognition, image recognition, or board games, but they lack cross-domain generalization and adaptability.

Key features of AGI include:

- Self-learning: AGI is able to learn from experience and continuously improve its performance.

- Cross-domain capability: AGI is able to deal with many different types of problems, not just a single domain.

- Understanding Complex Concepts: AGI is able to understand and work with abstract concepts, metaphors and complex logic.

- Self-awareness: although this is controversial in academic circles, some views suggest that AGI may develop some form of self-awareness or self-reflective ability.

Currently, AGI remains a frontier area of AI research that has yet to be realized. Many researchers are exploring how to build such systems, but there are many technical and ethical challenges.