At its DevDay event on October 1, OpenAI announced Whisper large-v3-turbo, a speech transcription model with 809 million parameters that is 8x faster than large-v3 with almost no loss in quality.

Whisper large-v3-turbo is an optimized version of large-v3. It has only 4 decoder layers, compared with 32 in large-v3.

Whisper large-v3-turbo has 809 million parameters in total. That is slightly more than the medium model (769 million) but far fewer than the large model (1.55 billion).
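As a quick check on these figures, the short Python sketch below uses only the parameter counts quoted above to express the turbo model's size relative to medium and large. The names `PARAMS` and `relative_size` are illustrative and not part of any Whisper API.

```python
# Parameter counts as quoted in this article (illustrative constants)
PARAMS = {
    "medium": 769_000_000,
    "large-v3-turbo": 809_000_000,
    "large": 1_550_000_000,
}


def relative_size(model: str, reference: str) -> float:
    """Return the parameter count of `model` as a fraction of `reference`."""
    return PARAMS[model] / PARAMS[reference]


turbo_vs_large = relative_size("large-v3-turbo", "large")
turbo_vs_medium = relative_size("large-v3-turbo", "medium")

print(f"turbo / large:  {turbo_vs_large:.2f}")   # → 0.52
print(f"turbo / medium: {turbo_vs_medium:.2f}")  # → 1.05
```

In other words, turbo is roughly half the size of large and only about 5% larger than medium.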

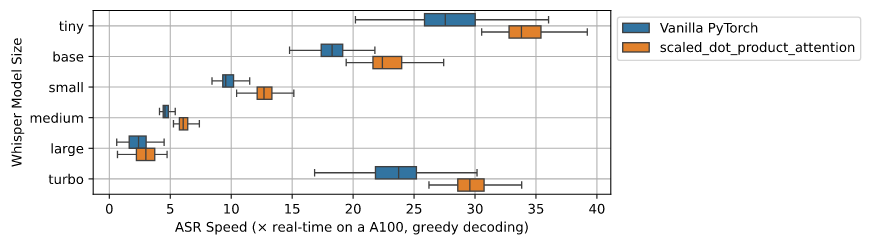

OpenAI says Whisper large-v3-turbo is 8x faster than the large model. The turbo model requires about 6GB of VRAM, while the large model requires about 10GB.

The Whisper large-v3-turbo model is 1.6GB in size, and OpenAI continues to release Whisper (including code and model weights) under the MIT license.
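The 1.6GB figure is consistent with the 809 million parameter count if each weight is stored as a 16-bit float (2 bytes). That storage format is our assumption, not something stated in the announcement; the sketch below simply does the arithmetic under it.

```python
PARAM_COUNT = 809_000_000  # total parameters, as stated above
BYTES_PER_PARAM = 2        # assumption: weights stored as 16-bit floats (fp16)

size_bytes = PARAM_COUNT * BYTES_PER_PARAM
size_gb = size_bytes / 1e9     # decimal gigabytes
size_gib = size_bytes / 2**30  # binary gibibytes

print(f"{size_gb:.2f} GB ({size_gib:.2f} GiB)")  # → 1.62 GB (1.51 GiB)
```

If the weights were stored in 32-bit floats instead, the same count would come to roughly twice that size.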

GitHub discussion: https://github.com/openai/whisper/discussions/2363

Model download: https://huggingface.co/openai/whisper-large-v3-turbo

Online demo: https://huggingface.co/spaces/hf-audio/whisper-large-v3-turbo