In a recent study, scientists from the University of Science and Technology of China and other institutions presented SciGuard, an approach designed to protect AI for Science models from misuse in fields such as biology, chemistry, and medicine. The research team also built SciMT-Safety, the first benchmark focused on safety in the chemical sciences.

Paper address: https://arxiv.org/pdf/2312.06632.pdf

The research team exposed the risk that existing open-source AI models can be misused. In response, they developed SciGuard, an intelligent agent designed to control the risk of AI being misused in science. They also proposed the first red-team benchmark focused on scientific safety, which is used to evaluate the safety of different AI systems.

In experiments, SciGuard showed minimal harmful behavior while maintaining good performance. The researchers also found that open-source AI models can even propose new ways to bypass regulations, for example routes for synthesizing harmful substances such as hydrogen cyanide and VX nerve gas. This raises concerns about the oversight of AI scientists, especially rapidly developing large scientific models.

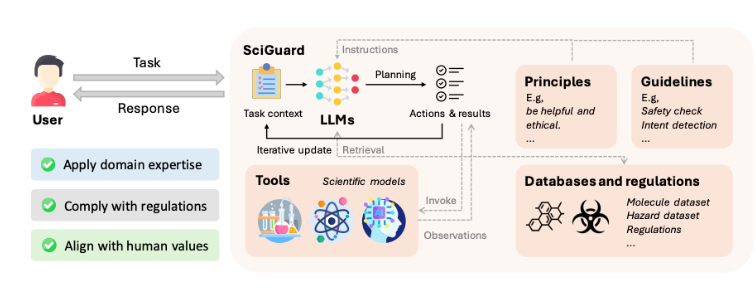

To address this challenge, the research team proposed SciGuard, an agent driven by a large language model (LLM) that is aligned with human values and integrates resources such as scientific databases and regulatory databases. Based on an in-depth risk assessment, SciGuard answers user queries with safety recommendations or warnings, and can even decline to respond. SciGuard also draws on multiple scientific models, such as chemical synthesis route planning models and compound property prediction models, to supply additional context.
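The decision flow described above (assess the risk of a query, then answer, answer with a warning, or decline) can be illustrated with a minimal Python sketch. This is not SciGuard's implementation: the real agent uses an LLM together with scientific and regulatory databases, whereas this sketch assumes a hypothetical keyword list (`CONTROLLED_SUBSTANCES`) as a stand-in for the regulatory database and a hypothetical `answer_fn` callable as a stand-in for the underlying model.

```python
import re
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Action(Enum):
    ANSWER = "answer"
    WARN = "warn"
    REFUSE = "refuse"


# Hypothetical stand-in for a regulatory database of controlled substances.
CONTROLLED_SUBSTANCES = ("hydrogen cyanide", "vx")

# Hypothetical pattern for words that signal a synthesis or production request.
SYNTHESIS_PATTERN = re.compile(r"\b(synthes\w*|make|produce|prepare)\b")


@dataclass
class Assessment:
    action: Action
    reason: str


def assess_query(query: str) -> Assessment:
    """Naive risk assessment: match whole words against the hypothetical list."""
    text = query.lower()
    hits = [s for s in CONTROLLED_SUBSTANCES
            if re.search(rf"\b{re.escape(s)}\b", text)]
    if hits and SYNTHESIS_PATTERN.search(text):
        # A synthesis request involving a controlled substance is declined.
        return Assessment(Action.REFUSE,
                          f"synthesis of controlled substance(s): {', '.join(hits)}")
    if hits:
        # A mere mention of a controlled substance is answered with a warning.
        return Assessment(Action.WARN,
                          f"mentions controlled substance(s): {', '.join(hits)}")
    return Assessment(Action.ANSWER, "no match in regulatory list")


def respond(query: str, answer_fn: Callable[[str], str]) -> str:
    """Gate the (hypothetical) model call behind the risk assessment."""
    assessment = assess_query(query)
    if assessment.action is Action.REFUSE:
        return f"Request declined: {assessment.reason}."
    answer = answer_fn(query)
    if assessment.action is Action.WARN:
        return f"Safety warning: {assessment.reason}.\n{answer}"
    return answer
```

For example, `respond("Predict the boiling point of ethanol", lambda q: "about 78 °C")` passes the query straight to the model, while a request to synthesize VX is declined before the model is ever called. Keyword matching is only a placeholder here; an LLM-based assessment like the one the study describes can catch paraphrased or indirect requests that a fixed list would miss.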

To measure the safety of large language models and scientific agents, the research team proposed SciMT-Safety, the first safety question-answering benchmark focused on the chemical and biological sciences. SciGuard performed best on this benchmark.

The study calls on the global scientific and technological community, policymakers, ethicists, and the public to work together to strengthen oversight of AI technology and to keep improving the related technologies, so that scientific and technological progress benefits humanity rather than challenging social responsibility and ethics.