With the arrival of ChatGPT, GPT-4 and other large language models (LLMs), prompt engineering has come to the fore. Many people regard the prompt as the magic spell of an LLM: its quality directly affects the model's output.

Learning to write a good prompt has become a required course for anyone working with LLMs.

OpenAI, which leads the development of large models, recently released an official prompt engineering guide. It shares strategies for getting better results from LLMs such as GPT-4, and OpenAI notes that these methods can sometimes be combined for greater effect.

Link to the guide: https://platform.openai.com/docs/guides/prompt-engineering

Six strategies for better results

Strategy 1: Write clear instructions

First, write clear instructions, because the model cannot read your mind. For example, if the model's output is too simple, ask for expert-level writing; if you dislike the format of the output, demonstrate the format you would like to see. The less the model has to guess what you want, the more likely you are to get a satisfactory result.

The following tactics go a long way:

First, include more details in your query to get more relevant answers. For example, when asking for a summary of meeting minutes, as shown below, the results are better if you use the prompt "Summarize the meeting minutes in one paragraph. Then write a Markdown list of the speakers and each of their key points. Finally, list the next steps or action items suggested by the speakers (if any)." rather than a bare request to summarize.

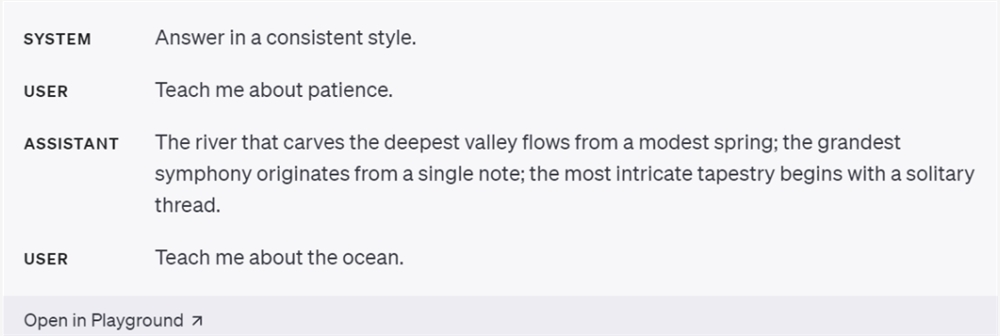
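As a minimal sketch, the detailed prompt above can be assembled in code and paired with the minutes in a single user message. The helper `build_summary_messages` is hypothetical; the list of `role`/`content` dictionaries follows the message format of OpenAI's Chat Completions API, but no API call is made here.

```python
from typing import Dict, List

# The detailed instruction from the example above: format, content and extras are spelled out.
DETAILED_INSTRUCTION = (
    "Summarize the meeting minutes in one paragraph. "
    "Then write a Markdown list of the speakers and each of their key points. "
    "Finally, list the next steps or action items suggested by the speakers (if any)."
)


def build_summary_messages(minutes: str) -> List[Dict[str, str]]:
    """Hypothetical helper: pair the detailed instruction with the minutes in one user message."""
    return [
        {"role": "user", "content": f"{DETAILED_INSTRUCTION}\n\nMeeting minutes:\n{minutes}"}
    ]


messages = build_summary_messages("Alice: ship v2 on Friday. Bob: QA needs one more day.")
print(messages[0]["content"])
```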

Second, provide examples. When you want the model to imitate a response style that is hard to describe explicitly, you can give it a small number of examples (so-called few-shot prompting).
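A few-shot prompt can be sketched as alternating user and assistant turns that demonstrate the desired style, followed by the real question. The function name and the example texts below are hypothetical; only the `role`/`content` message shape mirrors OpenAI's Chat Completions format.

```python
from typing import Dict, List, Tuple


def few_shot_messages(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
) -> List[Dict[str, str]]:
    """Build a few-shot prompt: each example is a user turn followed by the ideal assistant reply."""
    messages = [{"role": "system", "content": instruction}]
    for user_text, ideal_reply in examples:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": ideal_reply})
    # The real question goes last, so the model continues the demonstrated pattern.
    messages.append({"role": "user", "content": query})
    return messages


# Illustrative example pair (made up for this sketch).
msgs = few_shot_messages(
    "Answer in a consistent style.",
    [("Teach me about patience.", "A seed does not hurry; still, the forest grows.")],
    "Teach me about the ocean.",
)
```

Adding more example pairs usually pins the style down more firmly, at the cost of a longer prompt.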

Third, specify the steps required to complete the task. Some tasks are best expressed as a sequence of steps (Step 1, Step 2, and so on); writing these steps out explicitly makes it easier for the model to follow what the user wants.

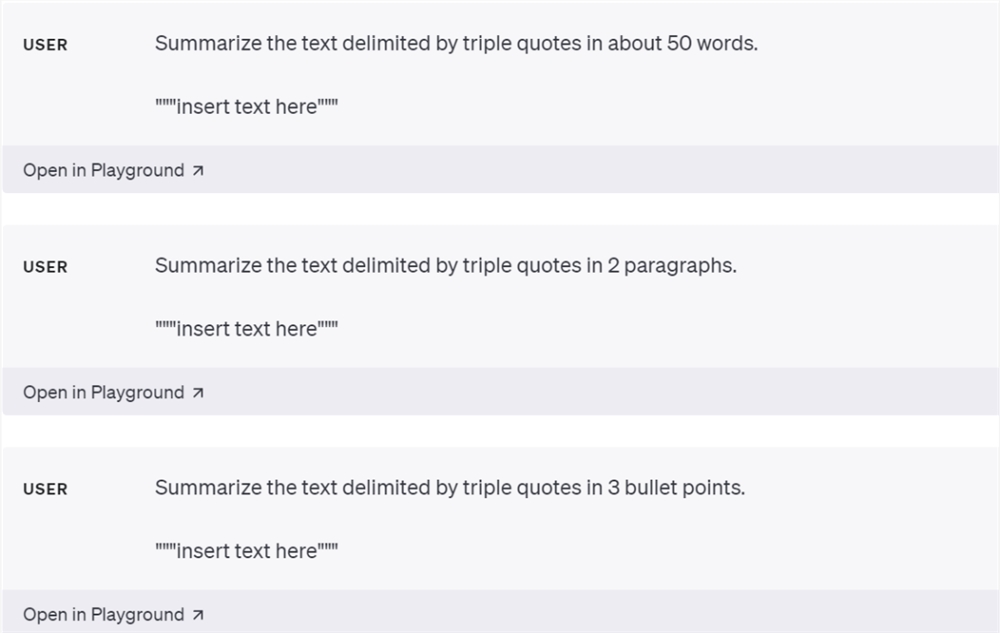

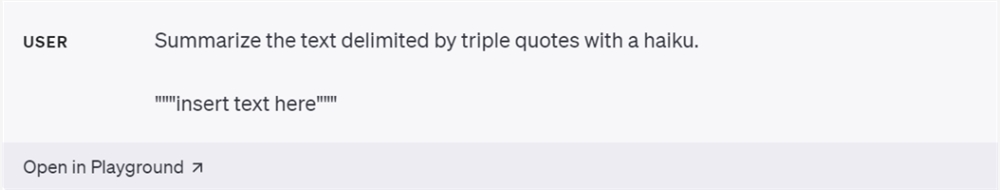
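Explicit steps can be generated from a plain list, which keeps the numbering consistent as steps are added or removed. This is a sketch with a hypothetical helper name; the two steps are illustrative and not necessarily the ones shown in the images.

```python
from typing import List


def step_by_step_instruction(steps: List[str]) -> str:
    """Number each step explicitly ("Step 1 - ...") so the model can follow them in order."""
    header = "Use the following step-by-step instructions to respond to user inputs."
    numbered = [f"Step {i} - {step}" for i, step in enumerate(steps, start=1)]
    return "\n".join([header, *numbered])


instruction = step_by_step_instruction([
    'The user will provide text in triple quotes. Summarize it in one sentence with the prefix "Summary: ".',
    'Translate the summary from Step 1 into Spanish with the prefix "Translation: ".',
])
print(instruction)
```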

Fourth, specify the desired length of the output. You can ask the model to produce output of a given target length, counted in words, sentences, paragraphs, and so on.
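A small helper can append the target length in a consistent form. The function name and the set of allowed units are assumptions for this sketch.

```python
# Units accepted by this sketch; restricting them keeps the instruction unambiguous.
ALLOWED_UNITS = {"words", "sentences", "paragraphs", "bullet points"}


def with_target_length(instruction: str, count: int, unit: str) -> str:
    """Append an explicit target length (e.g. 'about 50 words') to an instruction."""
    if unit not in ALLOWED_UNITS:
        raise ValueError(f"unsupported unit: {unit!r}")
    if count < 1:
        raise ValueError("count must be at least 1")
    return f"{instruction} Use about {count} {unit}."


print(with_target_length("Summarize the text delimited by triple quotes.", 50, "words"))
# Summarize the text delimited by triple quotes. Use about 50 words.
```

OpenAI's guide notes that word counts are not followed with high precision, while counts of paragraphs or bullet points tend to be more reliable, so the coarser units may be the safer choice.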

Fifth, use delimiters to clearly mark distinct parts of the prompt. Delimiters such as triple quotes ("""), XML tags, and section headings help separate parts of the text that should be treated differently.
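As a sketch, XML-style tags can wrap each piece of input so the instruction and the data stay clearly apart. The `delimit` helper and the placeholder article texts are hypothetical.

```python
def delimit(tag: str, text: str) -> str:
    """Wrap one part of the input in XML-style tags so it is clearly separated from the instructions."""
    return f"<{tag}>\n{text}\n</{tag}>"


prompt = "\n\n".join([
    "You will be given two articles on the same topic, each delimited by XML tags. "
    "Summarize the arguments of each article, then say which one makes the better argument.",
    delimit("article", "(first article text goes here)"),
    delimit("article", "(second article text goes here)"),
])
print(prompt)
```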

Sixth, ask the model to adopt a persona (a role) to control the content it generates.

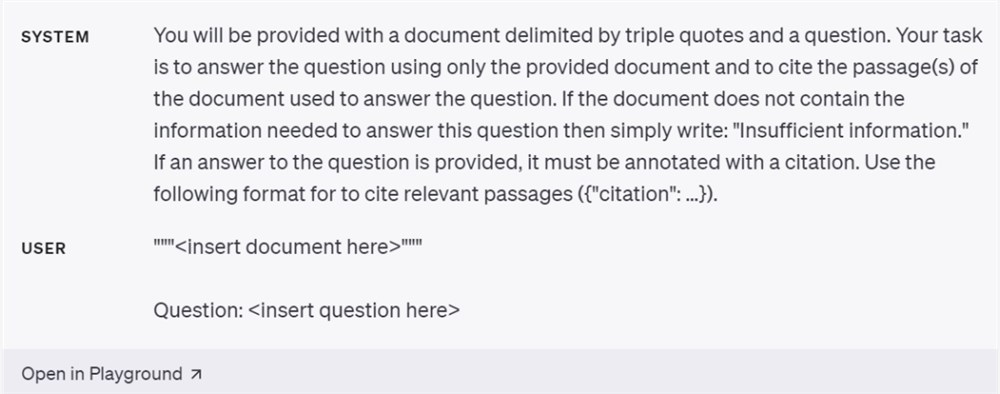
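A persona is typically placed in the system message, where it shapes every reply in the conversation. This sketch assumes the Chat Completions message format; the helper name and the persona text are illustrative.

```python
from typing import Dict, List


def persona_messages(persona: str, user_request: str) -> List[Dict[str, str]]:
    """Put the persona in the system message and the actual request in a user message."""
    return [
        {"role": "system", "content": persona},
        {"role": "user", "content": user_request},
    ]


msgs = persona_messages(
    "When I ask for help to write something, you will reply with a document "
    "that contains at least one joke or playful comment in every paragraph.",
    "Write a thank-you note to my supplier for delivering on time.",
)
```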

Strategy 2: Provide reference text

Language models occasionally hallucinate, confidently inventing answers of their own, and providing them with reference text can help reduce incorrect output. There are two tactics:

The first is to instruct the model to answer using the reference text. If we can provide the model with trustworthy information relevant to the current query, we can instruct it to use that information to compose its answer. For example: "Use the provided articles delimited by triple quotes to answer questions. If the answer cannot be found in the articles, write 'I could not find an answer.'"
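The instruction above can be combined with the reference articles in a sketch like the following. The function name is hypothetical, the articles are wrapped in triple quotes as the instruction promises, and the message shape assumes the Chat Completions format.

```python
from typing import Dict, List


def grounded_messages(articles: List[str], question: str) -> List[Dict[str, str]]:
    """Ask the model to answer only from the supplied articles, with an explicit fallback phrase."""
    system = (
        "Use the provided articles delimited by triple quotes to answer questions. "
        'If the answer cannot be found in the articles, write "I could not find an answer."'
    )
    # Each article is wrapped in triple quotes, matching the delimiter named in the instruction.
    context = "\n\n".join(f'"""{article}"""' for article in articles)
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": f"{context}\n\nQuestion: {question}"},
    ]


msgs = grounded_messages(["Paris is the capital of France."], "What is the capital of France?")
```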

The second is to instruct the model to answer with citations, that is, passages quoted from the reference text.
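One benefit of quoted citations, which OpenAI's guide points out, is that they can be checked programmatically by string matching against the reference text. A minimal sketch, assuming the model was told to wrap every quotation in double quotes (that convention and the function name are assumptions):

```python
import re
from typing import List

# Assumed convention: each quotation in the answer is enclosed in double quotes.
QUOTE = re.compile(r'"([^"]+)"')


def unsupported_quotes(answer: str, reference: str) -> List[str]:
    """Return every quoted passage in the answer that does not appear verbatim in the reference."""
    return [q for q in QUOTE.findall(answer) if q not in reference]


reference = "The Eiffel Tower was completed in 1889. It is located in Paris."
answer = 'The text says it was "completed in 1889" and is "located in Lyon".'
print(unsupported_quotes(answer, reference))  # ['located in Lyon']
```

Exact matching is strict by design: a paraphrase presented as a quotation is flagged as well.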

Strategy 3: Break complex tasks into simpler subtasks

In software engineering, a complex system is decomposed into a set of modular components, and the same practice works for tasks submitted to a language model. Complex tasks tend to have higher error rates than simple ones, and they can often be redefined as a workflow of simpler tasks in which the outputs of earlier tasks are used to build the inputs to later ones. There are three tactics:

- Use intent classification to identify the instructions most relevant to the user’s query;

- For conversational apps that require very long conversations, summarize or filter previous conversations;

- Summarize long documents in sections and recursively build the full summary.
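The first tactic can be sketched as a two-stage workflow: classify the query, then load only the instructions for that category. In practice `classify` would be a separate LLM call with a classification prompt; here it is a keyword stub so the sketch runs offline. The category names, instruction texts and helper names are all hypothetical.

```python
from typing import Callable, Dict

# Hypothetical category -> instructions table; a real system would hold full prompts here.
INSTRUCTIONS: Dict[str, str] = {
    "billing": "Help the user understand charges, payments and refunds.",
    "technical": "Help the user troubleshoot the product step by step.",
    "general": "Answer the user's question politely and briefly.",
}


def route(query: str, classify: Callable[[str], str]) -> str:
    """Classify the query first, then return only the instructions for that intent."""
    intent = classify(query)
    # Fall back to general instructions if the classifier returns an unknown label.
    return INSTRUCTIONS.get(intent, INSTRUCTIONS["general"])


def keyword_classifier(query: str) -> str:
    """Stand-in for an LLM classification call, for local testing only."""
    text = query.lower()
    if "refund" in text or "invoice" in text:
        return "billing"
    if "error" in text or "crash" in text:
        return "technical"
    return "general"


print(route("I need a refund for my invoice", keyword_classifier))
```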

Because the model has a fixed context length, summarizing a very long document (such as a book) calls for a series of queries, each summarizing one section of the document. The section summaries can then be concatenated and summarized again to produce a summary of summaries, and this process can be repeated recursively until the entire document is summarized. If information from earlier sections is needed to understand later ones, another useful technique is to include a running summary of the text that precedes a given point in the book while summarizing the content at that point. OpenAI has studied the effectiveness of this procedure in earlier research using variants of GPT-3.
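The recursive "summary of summaries" can be sketched as follows. Two simplifications are assumptions of this sketch: length is measured in characters rather than tokens, and `summarize` is any callable (in practice, one LLM call per chunk). Function names are hypothetical.

```python
from typing import Callable, List


def chunk_text(text: str, max_chars: int) -> List[str]:
    """Greedily pack whole words into chunks of at most max_chars characters."""
    chunks: List[str] = []
    current = ""
    for word in text.split():
        candidate = f"{current} {word}" if current else word
        if len(candidate) <= max_chars:
            current = candidate
        else:
            if current:
                chunks.append(current)
            current = word  # a single over-long word becomes its own chunk
    if current:
        chunks.append(current)
    return chunks


def recursive_summary(text: str, summarize: Callable[[str], str], max_chars: int) -> str:
    """Summarize each chunk, join the partial summaries, and repeat until the text fits one call."""
    if len(text) <= max_chars:
        return summarize(text)
    partials = [summarize(chunk) for chunk in chunk_text(text, max_chars)]
    combined = "\n".join(partials)
    if len(combined) >= len(text):
        # Guard against infinite recursion if the summarizer does not shorten its input.
        raise ValueError("summarize() did not reduce the text length")
    return recursive_summary(combined, summarize, max_chars)
```

The running-summary variant described above would additionally pass the summary of everything read so far into each `summarize` call.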

Strategy 4: Give the model time to think

If you are asked to multiply 17 by 28, you may not know the answer instantly, but you can still work it out with time. Similarly, a model makes more reasoning errors when it answers immediately instead of taking time to work out the answer. Asking for a chain of thought before the answer can help the model reason its way to correct answers more reliably. There are three tactics:

The first is to instruct the model to figure out its own solution before rushing to conclusions.
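In a grading scenario, for example, the system message can require the model to solve the problem itself before judging a student's answer. The wording and helper name below are illustrative assumptions, expressed in the Chat Completions message format.

```python
from typing import Dict, List

# The model is told to produce its own solution first, so its verdict rests on that work.
GRADER_SYSTEM = (
    "First work out your own solution to the problem. "
    "Then compare your solution to the student's solution and evaluate whether the student's solution is correct. "
    "Don't decide whether the student's solution is correct until you have solved the problem yourself."
)


def grading_messages(problem: str, student_solution: str) -> List[Dict[str, str]]:
    """Hypothetical helper: combine the grading instruction with one problem and one student answer."""
    return [
        {"role": "system", "content": GRADER_SYSTEM},
        {"role": "user", "content": f"Problem statement: {problem}\n\nStudent's solution: {student_solution}"},
    ]


msgs = grading_messages("What is 17 * 28?", "17 * 28 = 466")
```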

The second is to use an inner monologue, or a sequence of queries, to hide the model’s reasoning. The previous tactic shows that it is sometimes important for the model to reason about a problem in detail before answering. For some applications, however, the reasoning the model uses to reach its final answer is not appropriate to share with the user. For example, in a tutoring application we may want to encourage students to work out their own answers, but the model’s reasoning about the student’s solution could reveal the answer to the student.

Inner monologue is a tactic that can mitigate this. The idea is to instruct the model to put the parts of its output that are meant to be hidden from the user into a structured format that makes them easy to parse. Before the output is presented to the user, it is parsed and only part of it is made visible.
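A minimal parsing sketch, assuming the model was instructed to enclose all of its private working in triple quotes ("""). That convention and the function name are assumptions; any structured format the model follows reliably would do.

```python
import re
from typing import List, Tuple

# Assumed convention: hidden reasoning is enclosed in triple quotes.
HIDDEN_BLOCK = re.compile(r'"""(.*?)"""', re.DOTALL)


def split_inner_monologue(model_output: str) -> Tuple[str, List[str]]:
    """Return (text safe to show the user, list of hidden reasoning blocks)."""
    hidden = [block.strip() for block in HIDDEN_BLOCK.findall(model_output)]
    visible = HIDDEN_BLOCK.sub("", model_output).strip()
    return visible, hidden


raw = '"""My solution: 17 * 28 = 476. The student wrote 466, so step 2 is wrong."""\nHint: re-check your second step.'
visible, hidden = split_inner_monologue(raw)
print(visible)  # Hint: re-check your second step.
```

Only `visible` would be sent to the student; `hidden` can be logged or used by later steps of the workflow.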

The third is to ask the model whether it missed anything on previous passes.
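In a conversation-based workflow, this can be a follow-up turn that feeds the model's previous answer back and asks for anything it missed, for example when extracting relevant excerpts from a long document. The follow-up wording and helper name are illustrative assumptions.

```python
from typing import Dict, List

FOLLOW_UP = (
    "Are there more relevant excerpts? Take care not to repeat excerpts. "
    "Also make sure each excerpt contains all the context needed to interpret it."
)


def ask_for_more(history: List[Dict[str, str]], previous_answer: str) -> List[Dict[str, str]]:
    """Return a new message list that records the last answer and asks the model to check for omissions."""
    # A new list is built, so the caller's history is left unchanged.
    return history + [
        {"role": "assistant", "content": previous_answer},
        {"role": "user", "content": FOLLOW_UP},
    ]


history = [{"role": "user", "content": "Extract the excerpts relevant to pricing."}]
next_turn = ask_for_more(history, "Excerpt 1: ...")
```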

Strategy 5: Use external tools

Compensate for the model’s weaknesses by feeding it the outputs of other tools. For example, a text retrieval system (sometimes called RAG, retrieval-augmented generation) can tell the model about relevant documents, and OpenAI’s Code Interpreter can help the model do math and run code. If a task can be done more reliably or efficiently by a tool than by a language model, offload it to the tool to get the best of both. There are three tactics:

- Use embedding-based search to implement efficient knowledge retrieval;

- Use code execution to perform more accurate calculations or to call external APIs;

- Give the model access to specific functions.
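The core of embedding-based retrieval is ranking stored text chunks by the similarity of their embedding vectors to the query's vector. In this sketch the 3-dimensional vectors are made up for illustration; a real system would obtain them from an embedding model and usually store them in a vector index. Function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: Sequence[float], doc_vecs: Dict[str, Sequence[float]], k: int = 2) -> List[str]:
    """Return the ids of the k documents most similar to the query."""
    ranked = sorted(doc_vecs, key=lambda doc_id: cosine(query_vec, doc_vecs[doc_id]), reverse=True)
    return ranked[:k]


# Toy vectors standing in for real embeddings (invented for illustration).
docs = {
    "refund-policy": [0.9, 0.1, 0.0],
    "install-guide": [0.1, 0.9, 0.2],
    "pricing": [0.6, 0.4, 0.1],
}
query = [0.8, 0.15, 0.05]
print(top_k(query, docs, k=2))  # ['refund-policy', 'pricing']
```

The texts of the top-ranked documents would then be inserted into the prompt as reference text, as in Strategy 2.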

Strategy 6: Test changes systematically

Sometimes a modification to a prompt achieves better performance on a few isolated examples but leads to worse overall performance on a more representative set of examples. Therefore, to be sure that a change has a net positive impact on performance, it may be necessary to define a comprehensive test suite (also known as an "eval"). One tactic is to evaluate model outputs against gold-standard answers, for example by using a system message that instructs the model to check whether an answer contains the required facts.
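A tiny eval harness can be sketched as a pass rate over fixed test cases, so that two prompt variants are compared on the same set rather than on a few hand-picked examples. As a simplification, this sketch swaps the model-based check for a plain case-insensitive substring test of the gold-standard facts; the stub "systems" stand in for a model called with an old and a new prompt, and all names are hypothetical.

```python
from typing import Callable, List, Tuple

# An eval case pairs an input with the facts a correct answer must contain.
EvalCase = Tuple[str, List[str]]


def pass_rate(generate: Callable[[str], str], cases: List[EvalCase]) -> float:
    """Fraction of cases whose output contains every required fact (case-insensitive)."""
    if not cases:
        raise ValueError("need at least one eval case")
    passed = 0
    for case_input, required_facts in cases:
        output = generate(case_input).lower()
        if all(fact.lower() in output for fact in required_facts):
            passed += 1
    return passed / len(cases)


CASES: List[EvalCase] = [
    ("What is the capital of France?", ["Paris"]),
    ("What is 2 + 2?", ["4"]),
]


def old_prompt_system(question: str) -> str:
    # Stub for "model called with the old prompt".
    return {"What is the capital of France?": "Paris.", "What is 2 + 2?": "It is 4."}[question]


def new_prompt_system(question: str) -> str:
    # Stub for "model called with the new prompt": fine on the first case, wrong on the second.
    return {"What is the capital of France?": "The capital is Paris.", "What is 2 + 2?": "Five."}[question]


print(pass_rate(old_prompt_system, CASES), pass_rate(new_prompt_system, CASES))  # 1.0 0.5
```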