In a blog post on September 25, Meta officially launched the Llama 3.2 family of AI models. The models are open and customizable, so developers can tailor them to their own edge AI and vision applications.

Llama 3.2 includes multimodal vision models and lightweight models. They represent Meta's latest advances in large language models (LLMs), offering greater capability and broader applicability across a variety of use cases.

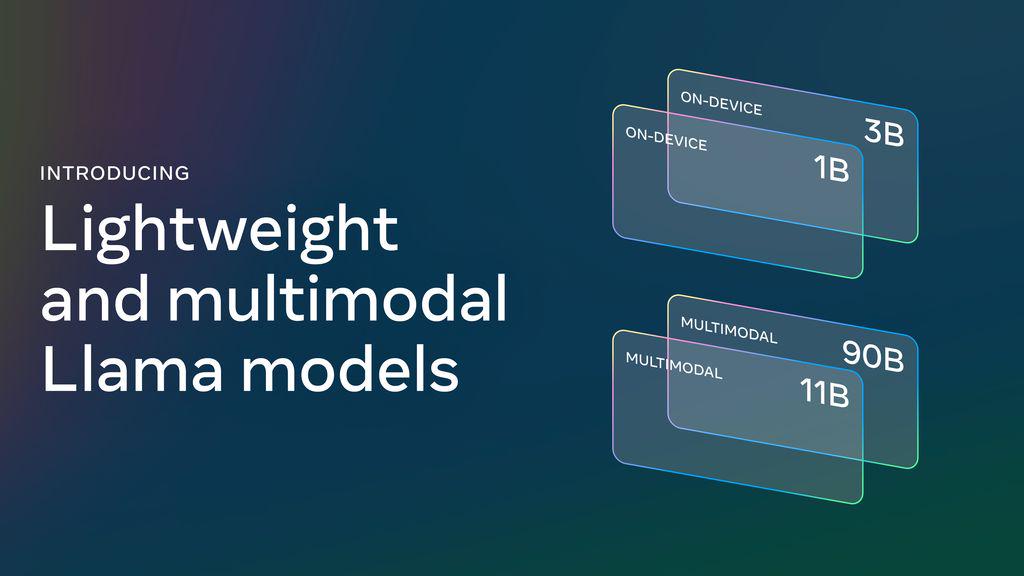

The lineup includes small and medium-sized vision LLMs (11B and 90B) and lightweight text-only models (1B and 3B) suitable for edge and mobile devices, each available in pre-trained and instruction-tuned versions.

The four models are profiled below:

- Llama 3.2 90B Vision (text + image input): Meta's most advanced model, ideal for enterprise-class applications. It excels at general knowledge, long-form text generation, multilingual translation, coding, math, and advanced reasoning. It also introduces image reasoning capabilities for image understanding and visual reasoning tasks. Use cases: image captioning, image-text retrieval, visual grounding, visual question answering and visual reasoning, and document visual question answering.

- Llama 3.2 11B Vision (text + image input): Ideal for content creation, conversational AI, language understanding, and enterprise applications that require visual reasoning. It excels at text summarization, sentiment analysis, code generation, and instruction following, and adds image reasoning capabilities. Its use cases mirror those of the 90B version: image captioning, image-text retrieval, visual grounding, visual question answering and visual reasoning, and document visual question answering.

- Llama 3.2 3B (text input): Designed for applications that require low-latency inference with limited computational resources. It excels at text summarization, classification, and language translation. Use cases: mobile AI writing assistants and customer service applications.

- Llama 3.2 1B (text input): The lightest model in the Llama 3.2 family, well suited to retrieval and summarization on edge devices and in mobile applications. Use cases: personal information management and multilingual knowledge retrieval.
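To make the lineup easier to compare, the profiles above can be captured in a small lookup table. The following is only an illustrative sketch: the `ModelProfile` class and the `smallest_model` helper are hypothetical names invented for this example and are not part of any Meta library. The only data used is the parameter count and input type listed for each model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    """Hypothetical record for one Llama 3.2 model (illustrative only)."""
    name: str
    params_billions: int
    accepts_images: bool


# Values come from the profiles above; the structure is an assumption.
LLAMA_3_2 = [
    ModelProfile("Llama 3.2 90B Vision", 90, True),
    ModelProfile("Llama 3.2 11B Vision", 11, True),
    ModelProfile("Llama 3.2 3B", 3, False),
    ModelProfile("Llama 3.2 1B", 1, False),
]


def smallest_model(needs_images: bool) -> ModelProfile:
    """Return the smallest model that accepts the required input type."""
    # Vision models also accept text, so they stay candidates for text-only work.
    candidates = [m for m in LLAMA_3_2 if m.accepts_images or not needs_images]
    return min(candidates, key=lambda m: m.params_billions)


print(smallest_model(needs_images=True).name)
print(smallest_model(needs_images=False).name)
```

Choosing by parameter count alone is a simplification. In practice the choice would also depend on latency, memory, and quality requirements.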

The Llama 3.2 1B and 3B models support a context length of 128K tokens and lead their class for on-device use cases that run locally at the edge, such as summarization, instruction following, and rewriting. These models are enabled for Qualcomm and MediaTek hardware from day one and are optimized for Arm processors.

The Llama 3.2 11B and 90B vision models can serve as drop-in replacements for their corresponding text models, while outperforming closed models such as Claude 3 Haiku on image understanding tasks.

Unlike other open-source multimodal models, both the pre-trained and aligned models can be fine-tuned for custom applications using torchtune and deployed locally using torchchat. Developers can also try the models through Meta's intelligent assistant, Meta AI.

Meta is also sharing the first official Llama Stack distributions, which greatly simplify how developers work with Llama models across different environments, including single-node, on-premises, cloud, and on-device. The distributions enable turnkey deployment of retrieval-augmented generation (RAG) and tool-enabled applications with integrated safety.

Meta has been working closely with partners such as AWS, Databricks, Dell Technologies, Fireworks, Infosys, and Together AI to build Llama Stack distributions for their downstream enterprise customers. On-device distribution is via PyTorch ExecuTorch, and single-node distribution is via Ollama.