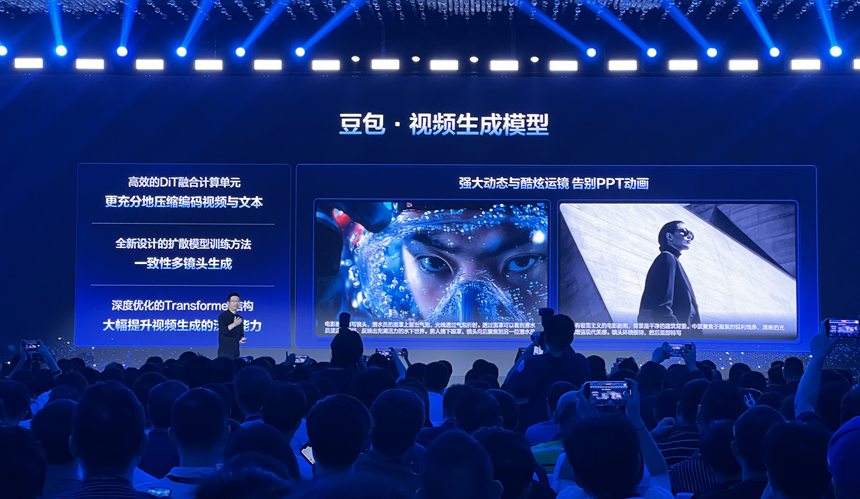

Doubao video generation model launch event. Photo by Jiang Jialing

SHENZHEN, Sept. 24 (People's Daily Online, Jiang Jialing) -- ByteDance's Volcano Engine held an AI innovation tour event in Shenzhen, where it released two large models, Doubao Video Generation-PixelDance and Doubao Video Generation-Seaweed, and opened invitation-based testing to the enterprise market.

According to the company, most earlier video generation models could only follow simple instructions. The new Doubao video generation models let a video switch freely between large-scale motion and camera movement, offering multi-camera language capabilities such as zoom, orbit, and target tracking. With professional-grade lighting and color grading, the resulting visuals are more aesthetically pleasing and realistic.

Some creators who tried the new Doubao video generation models said the generated videos not only follow complex instructions, allowing different characters to interact across multiple action commands, but also keep character appearance, clothing details, and even headwear consistent across different camera movements, coming close to the effect of real filming.

In addition, the new Doubao video generation models support a variety of styles, including 3D animation, 2D animation, Chinese painting, black and white, and thick-paint illustration, and adapt to the aspect ratios of film, TV, computer, and mobile phone screens. This makes them suitable not only for corporate scenarios such as e-commerce marketing, animation education, urban culture and tourism, and micro-dramas, but also as creative aids for professional creators and artists.

The new Doubao video generation models are currently in small-scale testing in the internal beta of Dream AI and will gradually be opened to all users.

"There are still many difficult hurdles in video generation that need to be overcome. The two Doubao models will continue to evolve, explore more possibilities for solving key problems, and accelerate the expansion of AI video creation and its practical application," said Tan Dai, president of Volcano Engine.

At the same event, Volcano Engine also released the Doubao Music Model and the Doubao Simultaneous Interpretation Model, extending the Doubao model family across language, speech, image, video, and other modalities to meet the needs of business scenarios in different industries and fields.