Riffusion is a free, open-source library for real-time music and audio generation based on Stable Diffusion. The user only needs to enter a text description of the music, and the AI generates music in the corresponding style. The open-source project was launched by Seth Forsgren and Hayk Martiros. Riffusion works by fine-tuning Stable Diffusion, a text-to-image model, to generate spectrograms. A spectrogram is a visual representation of audio that shows the amplitude of different frequencies over time. Riffusion then converts the generated high-fidelity spectrogram images into audio.
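To make the idea of a spectrogram concrete, here is a minimal sketch in plain Python (standard library only) that computes a magnitude spectrogram of a pure tone and maps it to 0-255 grayscale pixel values, the kind of image a text-to-image model can learn to produce. This is an illustration, not Riffusion's code: the helper names (`sine_wave`, `hann`, `spectrogram`, `to_pixels`), the frame size, the hop length, and the decibel scaling are all assumptions chosen for readability. Riffusion's real pipeline uses its own parameters and scaling. The reverse step, turning an image back into audio, must also estimate the phase information that a magnitude spectrogram discards, and this sketch does not cover it.

```python
import cmath
import math

# Illustrative sketch only; not Riffusion's implementation.

def sine_wave(freq_hz, sample_rate, n_samples):
    """Generate a pure tone as a list of float samples."""
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate) for n in range(n_samples)]

def hann(n):
    """Periodic Hann window, used to reduce spectral leakage in each frame."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]

def dft_magnitudes(frame):
    """Magnitudes of the non-negative-frequency bins of a naive DFT."""
    n = len(frame)
    mags = []
    for k in range(n // 2 + 1):
        s = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame))
        mags.append(abs(s))
    return mags

def spectrogram(samples, frame_size, hop):
    """Short-time Fourier transform magnitudes, one column per time frame."""
    window = hann(frame_size)
    columns = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = [x * w for x, w in zip(samples[start:start + frame_size], window)]
        columns.append(dft_magnitudes(frame))
    return columns  # columns[t][k] is the amplitude of frequency bin k at time step t

def to_pixels(spec, floor_db=-80.0):
    """Map magnitudes to 0-255 grayscale values on a decibel scale (hypothetical scaling)."""
    peak = max(max(col) for col in spec) or 1.0
    pixels = []
    for col in spec:
        row = []
        for m in col:
            db = max(20 * math.log10(max(m / peak, 1e-12)), floor_db)
            row.append(round(255 * (db - floor_db) / -floor_db))
        pixels.append(row)
    return pixels

spec = spectrogram(sine_wave(1000, 8000, 512), frame_size=64, hop=32)
image = to_pixels(spec)
print(len(spec), "time frames x", len(spec[0]), "frequency bins")
print("brightest bin in first frame:", max(range(len(spec[0])), key=lambda k: spec[0][k]))
```

With an 8 kHz sample rate and 64-sample frames, each frequency bin spans 125 Hz, so the 1000 Hz tone shows up as a bright horizontal line at bin 8 across all 15 time frames. A music spectrogram is the same kind of grid, just with many frequencies changing over time.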

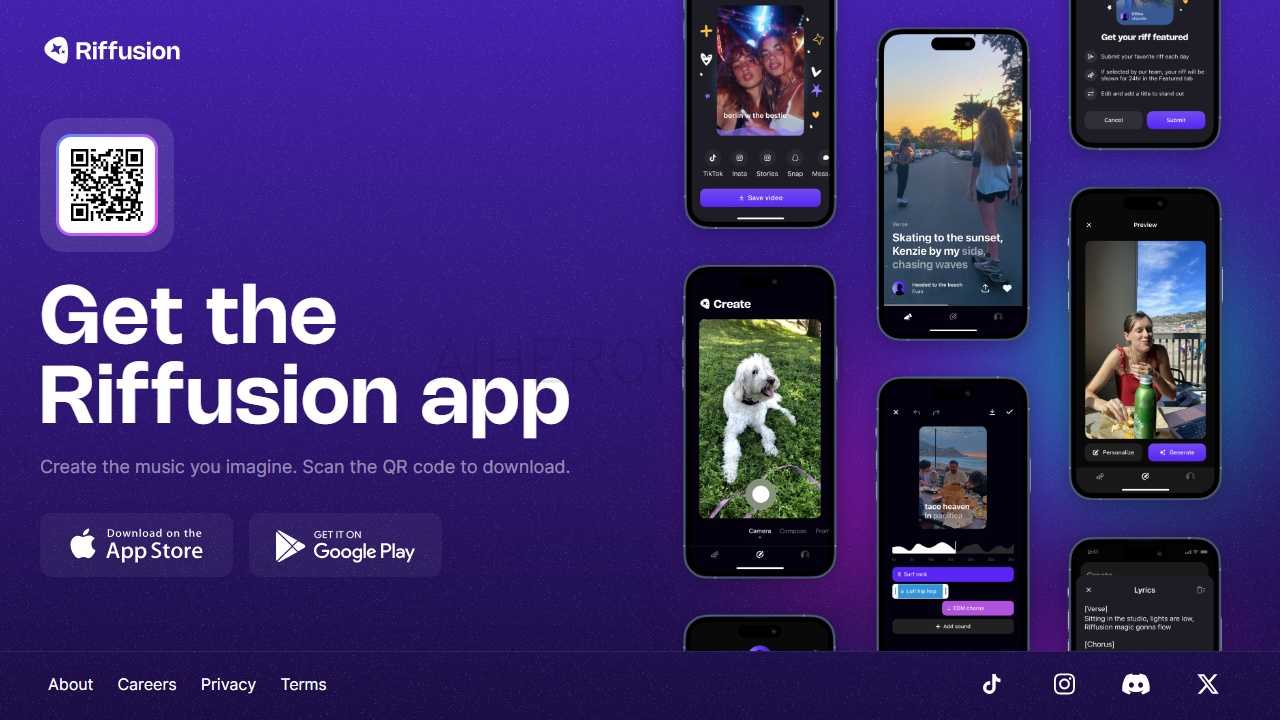

Riffusion Features

- Generate music from text prompts: Users enter a text prompt, and Riffusion generates a corresponding spectrogram image that is then converted into music.

- Real-time music generation: Riffusion generates music quickly, meeting users' need for instant creation.

- A new way to create music: By representing sound as images (spectrograms), Riffusion offers a new approach to music creation.

Official website: https://www.riffusion.com