I realized that there are many people who have never been exposed to AI painting. To help you quickly understand AI painting, get through the door as soon as possible, and start your AI painting journey, I feel it is well worth writing a getting-started article for newbies.

Of course, it's not just for beginners. It is also for people who fall into the following categories:

- Your computer's configuration is not sufficient to set up an SD environment locally.

- You are a content creator who is not too concerned about the tool itself or its technical capabilities, and who focuses on creativity and content expression: being able to generate the picture you want is enough.

For these types of people, I generally recommend a newbie-friendly AI drawing tool: the LiblibAI website. Just register and you can use its online SD drawing tool. The key point is that the interface of this online SD drawing tool is almost exactly the same as the SD Web UI, which helps beginners pick up SD-related skills.

- LiblibAI website: https://www.liblib.art/

The site can be used after registering. Currently there are 300 free points available every day, and generating a picture generally consumes between 2 and 8 points.
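To get a feel for what the daily allowance means in practice, here is a small back-of-the-envelope sketch. It only uses the numbers quoted above (300 points, 2 to 8 points per picture); the function name `images_per_day` is made up for illustration, and the actual cost of a given picture depends on the site's pricing rules, which are not covered here.

```python
# Rough estimate of how many pictures the free daily points can pay for.
# The numbers come from the article; the helper name is hypothetical.
DAILY_FREE_POINTS = 300
MIN_COST_PER_IMAGE = 2
MAX_COST_PER_IMAGE = 8


def images_per_day(points: int, cost_per_image: int) -> int:
    """Number of whole pictures that fit into the given point budget."""
    return points // cost_per_image


worst_case = images_per_day(DAILY_FREE_POINTS, MAX_COST_PER_IMAGE)
best_case = images_per_day(DAILY_FREE_POINTS, MIN_COST_PER_IMAGE)
print(f"Between {worst_case} and {best_case} pictures per day")
# prints "Between 37 and 150 pictures per day"
```

In other words, even at the highest cost the free points cover a few dozen pictures a day, which is plenty for practice.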

The LiblibAI website itself is not introduced here; this article focuses on helping newcomers get started quickly and produce their first picture. Without further ado, let's get straight into it.

I. Quick start

Enter the address of the LiblibAI website into your browser and click on the "Online Graphics" button on the homepage.

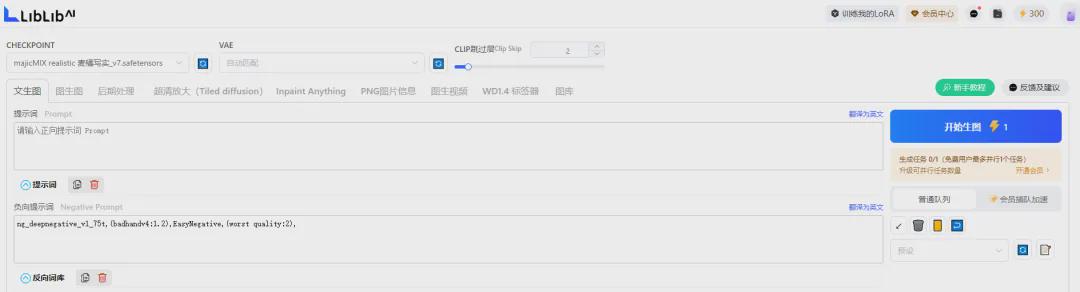

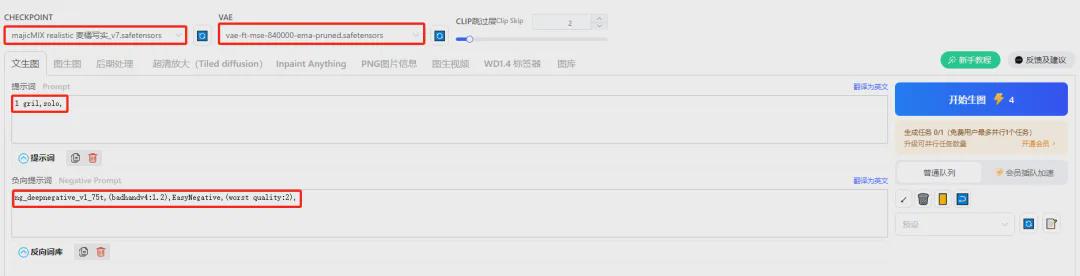

This takes you to the main screen of LiblibAI drawing.

You can first follow my interface parameter settings below to produce a picture and see the effect. To make the demonstration easier, I have divided the parameter settings into several parts.

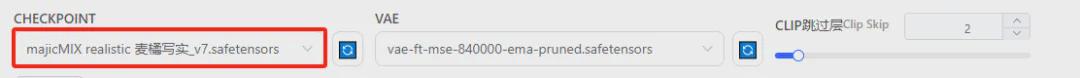

(1) Large model and prompts

Description of relevant parameter settings:

- CHECKPOINT (large model): majicMIX realistic mai orange realistic_v7.safetensors

- VAE (VAE model): vae-ft-mse-840000-ema-pruned.safetensors

- Clip Skip (CLIP skip layers): 2 (default value, generally left unchanged)

- Prompt (positive prompt): 1girl, solo

- Negative prompt: ng_deepnegative_v1_75t, (badhandv4:1.2), EasyNegative, (worst quality:2)

(2) Parameter settings

Description of relevant parameter settings:

- Sampling method: Euler a

- Sampling Steps: 30

- Width: 768

- Height: 512

- Number of images: 2

- Prompt guidance scale (CFG Scale): 7 (default)

- Random seed (Seed): -1 (default)

(3) ADetailer plugin

Description of relevant parameter settings:

- Enable ADetailer: checked (on)

- Model selection: face_yolov8n
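For reference, the settings from the three parts above can be collected in one place. The sketch below is only a note-taking aid, not a LiblibAI API: the class `QuickStartSettings`, its field names, and the helper `sanity_warnings` are all made up for illustration. The checks use the rules of thumb given later in this article (width and height divisible by 8, 20 to 40 sampling steps, guidance scale between 5 and 10).

```python
from dataclasses import dataclass, replace


@dataclass
class QuickStartSettings:
    """Hypothetical container for the values entered in the interface."""
    checkpoint: str
    vae: str
    clip_skip: int
    prompt: str
    negative_prompt: str
    sampler: str
    steps: int
    width: int
    height: int
    num_images: int
    cfg_scale: float
    seed: int
    adetailer_model: str


def sanity_warnings(s: QuickStartSettings) -> list:
    """Return human-readable warnings based on this article's rules of thumb."""
    warnings = []
    if s.width % 8 != 0 or s.height % 8 != 0:
        warnings.append("width and height should be divisible by 8")
    if not 20 <= s.steps <= 40:
        warnings.append("sampling steps are usually between 20 and 40")
    if not 5 <= s.cfg_scale <= 10:
        warnings.append("guidance scale is usually between 5 and 10")
    return warnings


settings = QuickStartSettings(
    checkpoint="majicMIX realistic mai orange realistic_v7.safetensors",
    vae="vae-ft-mse-840000-ema-pruned.safetensors",
    clip_skip=2,
    prompt="1girl, solo",
    negative_prompt="ng_deepnegative_v1_75t, (badhandv4:1.2), "
                    "EasyNegative, (worst quality:2)",
    sampler="Euler a",
    steps=30,
    width=768,
    height=512,
    num_images=2,
    cfg_scale=7,
    seed=-1,
    adetailer_model="face_yolov8n",
)

print(sanity_warnings(settings))                     # no warnings expected
print(sanity_warnings(replace(settings, steps=80)))  # one warning about steps
```

Keeping a record like this makes it easy to reproduce a picture later or to change one parameter at a time and compare the results.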

The basic parameters are now set up and we can generate images. Click the "Start Picture" button on the page to generate the pictures.

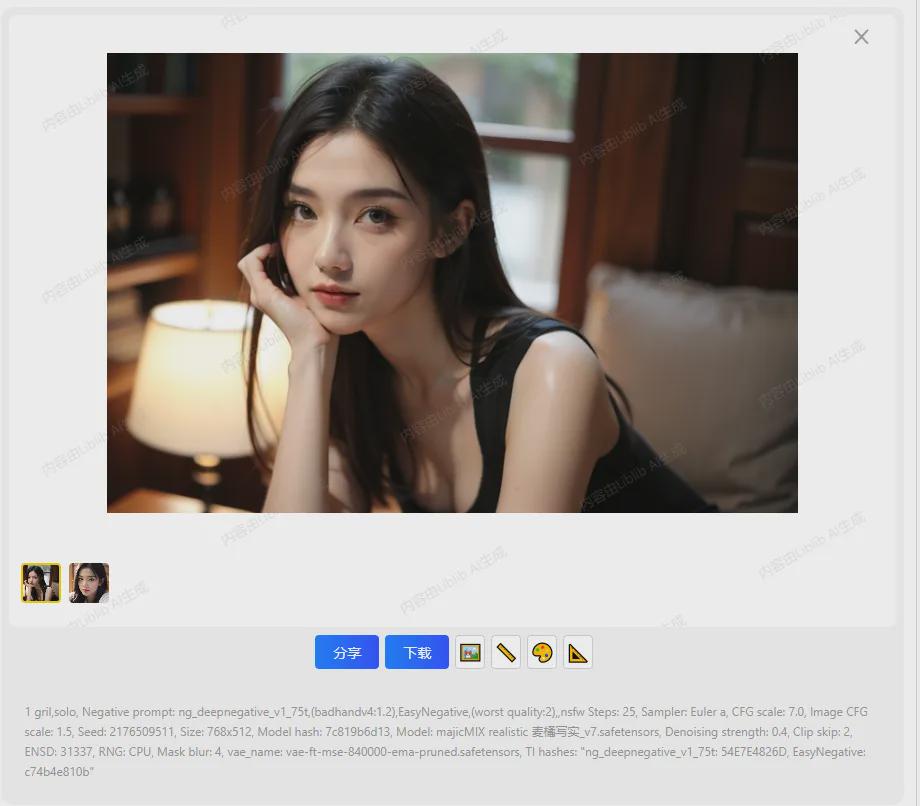

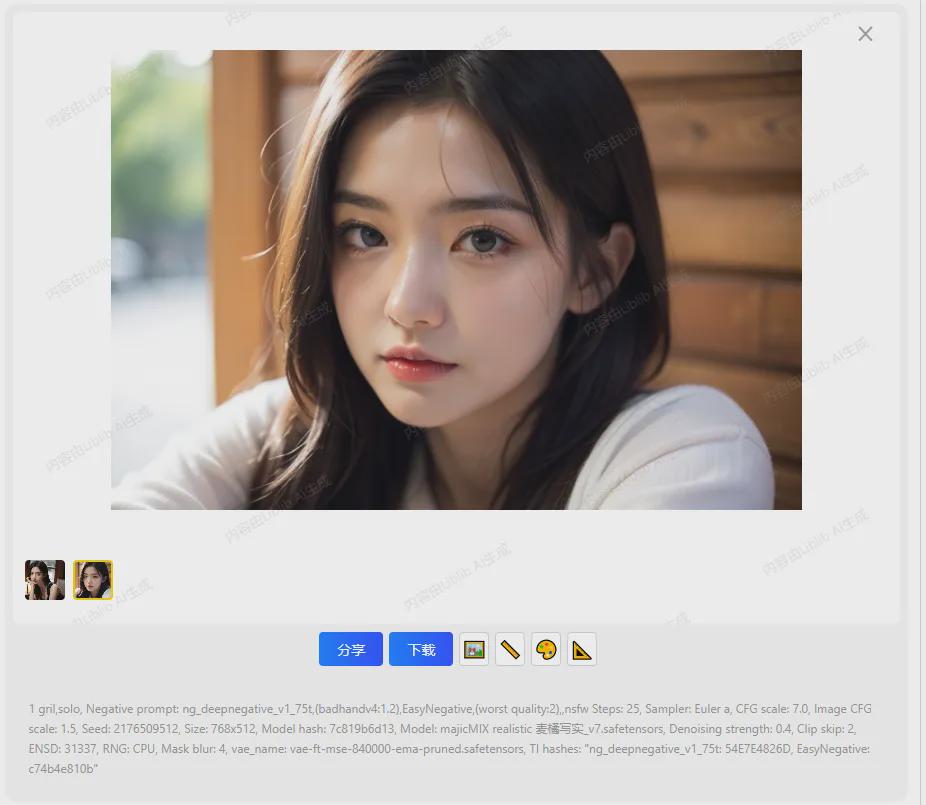

Let's take a look at the generated images. In the parameters above we set the number of images to 2, so the tool generates 2 images for us.

That's it: our first images have been generated.

II. Explanation of various parameters

Here's a primer on each of the parameters above.

1. Large model (checkpoint)

The large model, also called the base model or checkpoint, is the foundation of SD drawing: SD must be paired with a large model to be used. The large model determines the main style of the generated picture, and different large models focus on different areas, such as the common anime (2D) style, realistic style, traditional Chinese style, and so on. Currently, there are 2 major families of large models: those based on SD1.5 and those based on SDXL.

For an in-depth look at large models, see my previous posts:

Stable Diffusion: Models

Stable Diffusion: A Commonly Used Large Model

For beginners, here we focus on 2 knowledge points:

(1) How to add a large model to the CHECKPOINT drop-down list

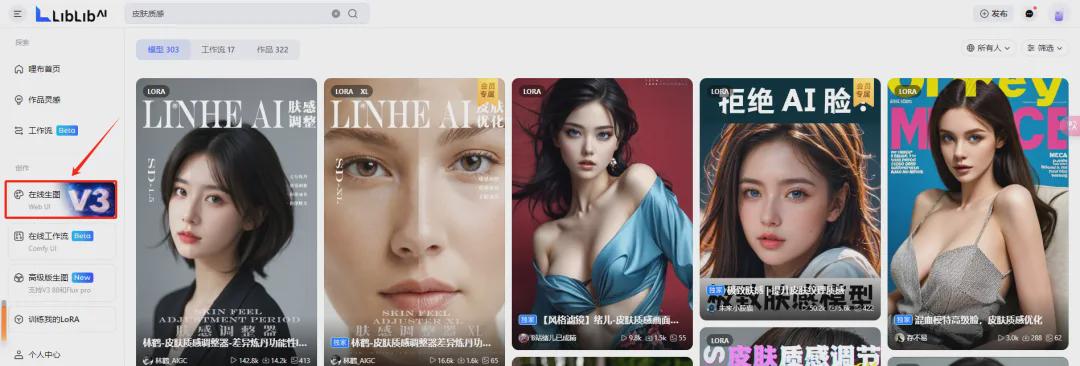

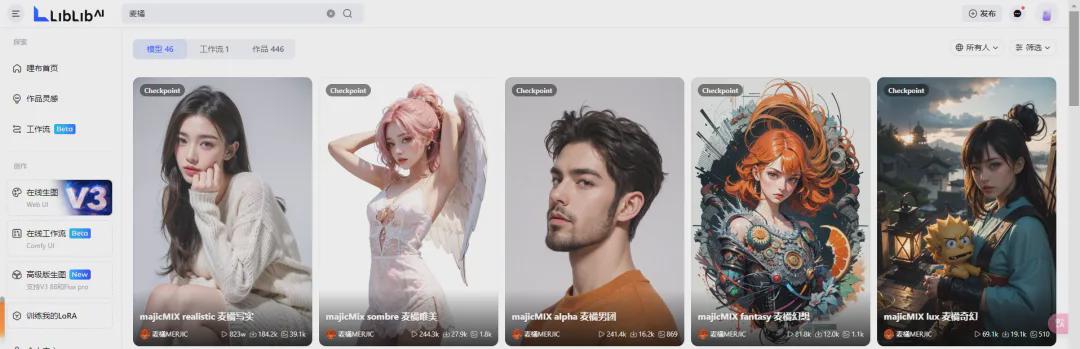

On the home page of the LiblibAI website, you can navigate directly to the detail screen of a large model by searching or by following links.

In the search box on the home page, type in "mai orange" (麦橘).

You will be taken to a list of all the large models related to that keyword.

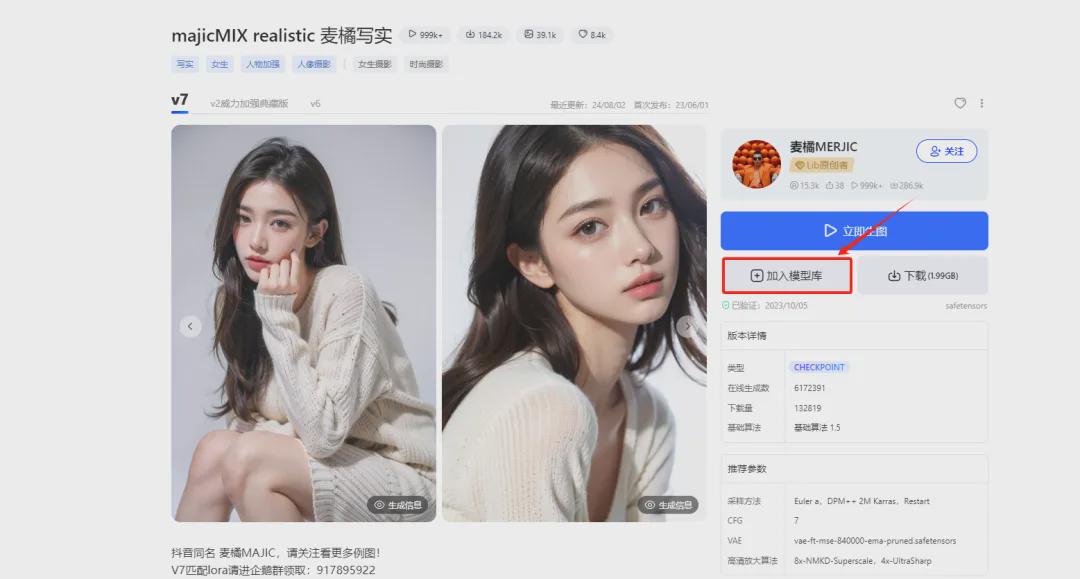

Select the desired large model and click its image to enter the large model's detail screen.

Click "Add to Model Library" at the top to add the large model to the CHECKPOINT drop-down list on the main drawing screen.

Often, clicking the "Add to Model Library" button does not immediately make the large model appear in the CHECKPOINT drop-down list. In that case, click the Refresh button next to the list to load the model.

(2) Commonly used large models

Large models based on SD1.5

- General-purpose large models: DreamShaper

- Realistic large models (mainly realistic rendering of various scenes): DeliberateV2/ReVAnimated_v122

- Realistic large models: realisticMIX realistic V7/realisticVisionV60/Chilloutmix-Ni-pruned-fp32-fix

- Realistic large models: AWPortrait and kimchiMix (optimized for hands)

Large models based on SDXL

- General-purpose models: DreamShaper XL/juggernautXL_v9 + RDPhoto 2/RealVisXL V4.0 Lightning/AlbedoBase XL V2.1/Dream Tech XL (Tsukuba Industries)

- Realistic models: LEOSAM HelloWorld New World/wuhaXL V8.2/wuhaXL_realisticMix

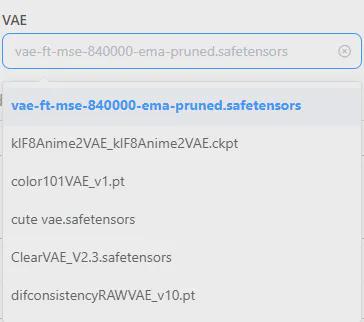

2. VAE model

The VAE model can be understood as a color filter: it is mainly used to adjust and beautify the colors of the generated image. The most commonly used VAE model is "vae-ft-mse-840000-ema-pruned", and you can generally just choose this one.

Related note: the LiblibAI tool restricts the use of VAE models. In particular, when the CHECKPOINT large model is an SDXL model, the VAE model cannot be selected, which greatly reduces the chance of beginners making mistakes with VAE models.

3. Prompts

Prompts are divided into 2 categories:

- Positive prompts: keywords for what you want the generated image to contain.

- Negative prompts: keywords for what you do not want the generated image to contain.

Prompts are a huge topic, so I won't expand on them here. Focus on a few points:

- Prompts need to be written in English, as a sentence, multiple phrases, or individual words.

- If you don't know how to write prompts, look at the large model pages on LiblibAI: they contain a large number of pictures posted by enthusiasts, many of which include their prompts, and beginners can imitate them.

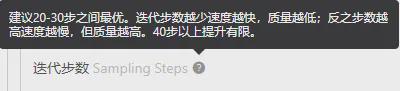
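When imitating other people's prompts you will often see items such as `(badhandv4:1.2)` or `(worst quality:2)`. In the SD Web UI this `(text:weight)` form raises or lowers the emphasis on a keyword, and an item without a weight counts as 1.0. The sketch below splits a prompt into keyword and weight pairs so the structure is easier to read. It is a simplified illustration only: the function name `parse_prompt` is made up, nested or bare parentheses are not handled, and it assumes LiblibAI follows the same syntax as the SD Web UI.

```python
import re

# Matches a single "(text:weight)" item, e.g. "(badhandv4:1.2)".
WEIGHTED_ITEM = re.compile(r"^\((.+):([0-9]*\.?[0-9]+)\)$")


def parse_prompt(prompt: str) -> list:
    """Split a comma-separated prompt into (keyword, weight) pairs."""
    items = []
    for part in prompt.split(","):
        token = part.strip()
        if not token:
            continue  # skip empty pieces, e.g. after a trailing comma
        match = WEIGHTED_ITEM.match(token)
        if match:
            items.append((match.group(1).strip(), float(match.group(2))))
        else:
            items.append((token, 1.0))  # no explicit weight given
    return items


negative = "ng_deepnegative_v1_75t,(badhandv4:1.2), EasyNegative,(worst quality:2),"
for keyword, weight in parse_prompt(negative):
    print(f"{keyword}: {weight}")
```

Reading a borrowed prompt this way shows at a glance which keywords its author chose to emphasize.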

4. Sampling method and sampling steps

These 2 parameters are usually set together.

The sampling method is the algorithm used to remove noise from the image step by step while it is being generated.

Many samplers are available today, but it is sufficient to focus on a few commonly used ones:

- Euler a

- DPM++ 2M Karras

- DPM++ SDE Karras

- DPM++ 2M SDE Karras

On LiblibAI, there is a small hint icon next to the sampling method.

The choice of sampling method depends on the large model, and the sampling method recommended for a large model is generally listed on that model's detail screen.

Sampling steps: the number of iterations used to generate an image. Each sampling step produces a new image based on the image from the previous step. In general, the number of sampling steps can be set between 20 and 40.
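The idea that each step starts from the result of the previous step can be shown with a toy loop. The sketch below is not a real sampler such as Euler a: a real sampler relies on the large model to predict the noise, whereas this toy simply knows the clean target in advance and removes an equal share of the remaining noise at every step. The function name `toy_denoise` and the tiny 4-value "image" are made up for illustration.

```python
import random


def toy_denoise(target, steps, seed=0):
    """Start from pure noise and move toward `target` over `steps` iterations.

    Returns the final values and the largest remaining error after each step.
    """
    rng = random.Random(seed)
    image = [rng.gauss(0.0, 1.0) for _ in target]  # pure random noise
    errors = []
    for step in range(steps):
        remaining = steps - step
        # Each step refines the previous result by closing 1/remaining of the gap.
        image = [x + (t - x) / remaining for x, t in zip(image, target)]
        errors.append(max(abs(t - x) for x, t in zip(image, target)))
    return image, errors


target = [0.2, 0.8, 0.5, 0.1]  # stand-in for a clean image
final_image, errors = toy_denoise(target, steps=30)
print(f"error after step 1:  {errors[0]:.4f}")
print(f"error after step 15: {errors[14]:.4f}")
print(f"error after step 30: {errors[-1]:.4f}")
```

The error shrinks a little with every iteration, which is the intuition behind the step count: too few steps leave the picture unfinished, while beyond a certain point extra steps mostly cost more time.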

5. Image width and height

The optimal resolution for SD V1.5 models is 512x512. The following sizes are commonly used for each aspect ratio:

- 1:1 (square): 512x512, 768x768

- 3:2 (landscape): 768x512

- 2:3 (portrait): 512x768

- 4:3 (landscape): 768x576

- 3:4 (portrait): 576x768

- 16:9 (widescreen): 912x512

- 9:16 (vertical): 512x912

The optimal resolution for SDXL models is 1024x1024. The following sizes are commonly used for each aspect ratio:

- 1:1 (square): 1024x1024, 768x768

- 3:2 (landscape): 1152x768

- 2:3 (portrait): 768x1152

- 4:3 (landscape): 1152x864

- 3:4 (portrait): 864x1152

- 16:9 (widescreen): 1360x768

- 9:16 (vertical): 768x1360

Note on aspect ratio settings:

- The width and height should ideally be divisible by 8.

- Common ratios: 1:1, 2:3 (3:2), 3:4 (4:3), 9:16 (16:9)

- Weird images are easily generated when the width and height are not set properly.

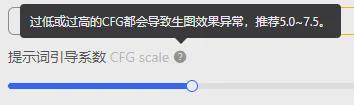
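The sizes listed above can be checked mechanically against the two rules in the note: both sides divisible by 8, and the size close to its nominal aspect ratio. The sketch below does this for every size in the two lists. The helper name `check_size` and the 1% tolerance are choices made for this illustration only.

```python
from fractions import Fraction

# (nominal ratio, (width, height)) pairs copied from the lists above.
SIZES = {
    "SD1.5": [
        ((1, 1), (512, 512)), ((1, 1), (768, 768)),
        ((3, 2), (768, 512)), ((2, 3), (512, 768)),
        ((4, 3), (768, 576)), ((3, 4), (576, 768)),
        ((16, 9), (912, 512)), ((9, 16), (512, 912)),
    ],
    "SDXL": [
        ((1, 1), (1024, 1024)), ((1, 1), (768, 768)),
        ((3, 2), (1152, 768)), ((2, 3), (768, 1152)),
        ((4, 3), (1152, 864)), ((3, 4), (864, 1152)),
        ((16, 9), (1360, 768)), ((9, 16), (768, 1360)),
    ],
}


def check_size(width, height, nominal):
    """Return (divisible_by_8, relative deviation from the nominal ratio)."""
    divisible = width % 8 == 0 and height % 8 == 0
    actual = Fraction(width, height)
    wanted = Fraction(*nominal)
    deviation = float(abs(actual - wanted) / wanted)
    return divisible, deviation


for family, entries in SIZES.items():
    for nominal, (width, height) in entries:
        divisible, deviation = check_size(width, height, nominal)
        status = "ok" if divisible and deviation < 0.01 else "check"
        print(f"{family} {nominal[0]}:{nominal[1]} {width}x{height} "
              f"deviation={deviation:.2%} {status}")
```

The 16:9 and 9:16 sizes are not exact ratios: they are rounded so that both sides stay divisible by 8, which is why a small deviation shows up for them.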

6. Prompt guidance scale (CFG Scale)

Determines how much freedom the model has: the higher the value, the more closely the generated image follows the prompt. The default value is 7, and it is usually set between 5 and 10. Setting it too high is not recommended.
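Behind this parameter is a technique usually called classifier-free guidance (the "CFG" in the name): at each step the model makes two noise predictions, one with the prompt and one without, and the scale decides how far to push from the unprompted prediction toward the prompted one. The sketch below shows that combination with plain lists standing in for real image tensors. The function name `apply_cfg` and the numbers are made up for illustration, and how LiblibAI implements this internally is not covered in this article.

```python
def apply_cfg(uncond, cond, scale):
    """Combine two noise predictions: uncond + scale * (cond - uncond)."""
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


# Made-up predictions for a 2-value "image".
without_prompt = [0.0, 1.0]
with_prompt = [1.0, 3.0]

print(apply_cfg(without_prompt, with_prompt, 0.0))  # ignores the prompt: [0.0, 1.0]
print(apply_cfg(without_prompt, with_prompt, 1.0))  # plain prompted result: [1.0, 3.0]
print(apply_cfg(without_prompt, with_prompt, 7.0))  # default scale: [7.0, 15.0]
```

A scale above 1 exaggerates the difference the prompt makes, which is why very large values tend to produce harsh, overcooked pictures.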

7. Random seed (Seed)

The seed is equivalent to the DNA of the picture and determines its content. It is generally set to -1 (default), which means a random seed is used each time. With the same seed, you can generate fairly similar pictures.

Beginners may be confused about why the same parameters generate different images every time. The main reason is that the random seed is set to -1, so every generation is random. Just accept this concept.

With the basics above, you should have a basic understanding of the main parameters of the interface. Once you have successfully drawn a few pictures with your own hands, you can consider yourself started.
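The behavior of the seed can be imitated with Python's own random number generator. This is only an analogy: SD uses its own generator to create the starting noise, and the helper names `resolve_seed` and `initial_noise` as well as the upper bound `MAX_SEED` are assumptions made for this sketch.

```python
import random

MAX_SEED = 2**32 - 1  # assumed upper bound, for illustration only


def resolve_seed(seed: int) -> int:
    """Treat -1 as 'pick a new random seed'; keep any other value as-is."""
    if seed == -1:
        return random.randint(0, MAX_SEED)
    return seed


def initial_noise(seed: int, size: int = 4) -> list:
    """Generate the starting noise for a tiny stand-in image."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


# A fixed seed always gives the same starting noise.
print(initial_noise(1234) == initial_noise(1234))  # prints True

# With -1, a fresh seed is chosen on every run, so the noise differs each time.
first = resolve_seed(-1)
second = resolve_seed(-1)
print(first, second)
```

So if you like a picture and want to make variations of it, note down its actual seed and enter that number instead of -1.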