You may have used the BizyAir cloud nodes, an open-source ComfyUI plugin from the SiliconFlow team. To use it, you need an API key from the SiliconFlow website.

But since it is an API, you can use its functionality anywhere you can run code.

After using the coze platform for a while, you may feel that its current text-to-image models don't meet your needs.

And even if you can build all kinds of workflows, you may not have enough GPU resources locally. So what can you do?

Today we'll use calling the FLUX model from coze as an example of how to use the SiliconFlow API. If you haven't tried it yet, follow along.

First, the benefits of using an API: someone else has already deployed the models on their platform, and you call their API to generate images. You don't need local compute or a cloud machine rented by the hour. You are billed only for the compute each generation consumes, which is more flexible.

1. Register a SiliconFlow account with your mobile phone number

https://account.siliconflow.cn/

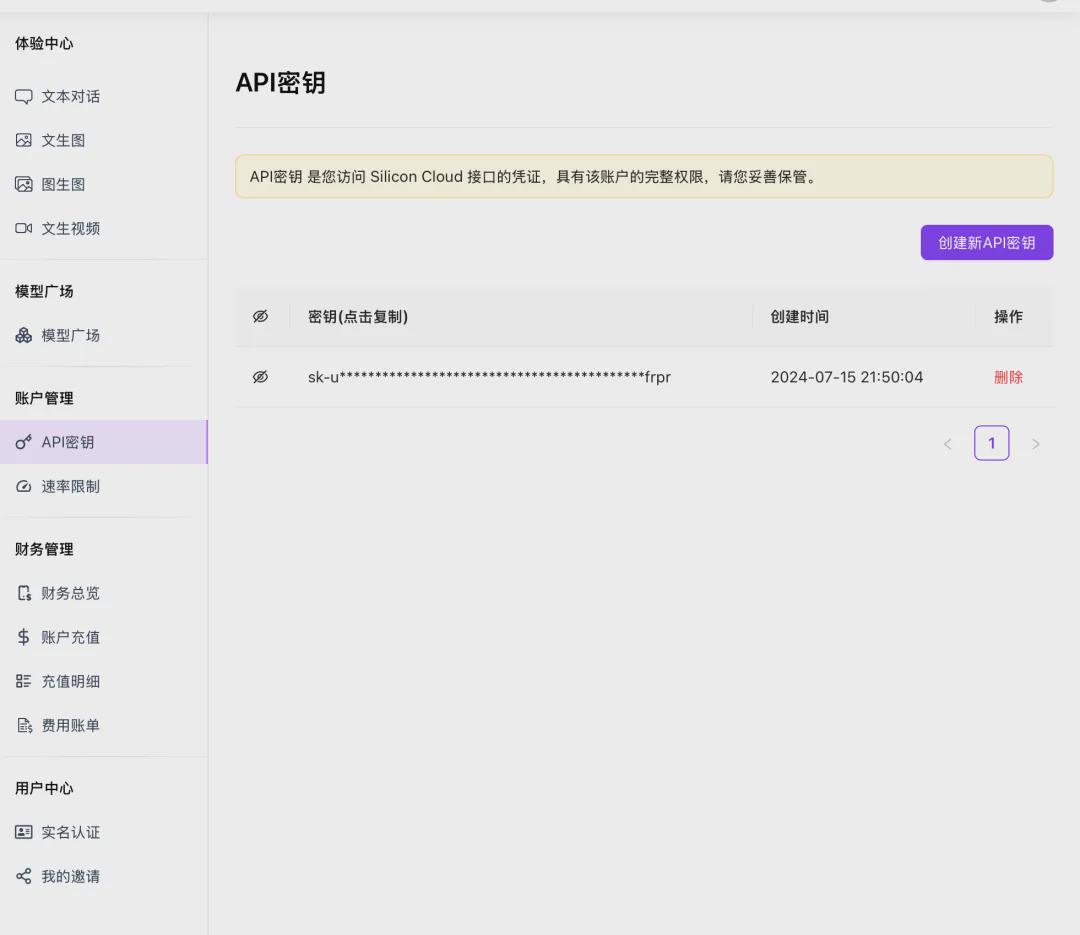

2. After logging in, you will find the API key section on the left side of the page. The key is the pass that lets other platforms invoke SiliconFlow services. Create an API key for later use.

3. Using the SiliconFlow API on coze involves API call code, so you need to consult the API manual. There is a documentation button near the top of the page.

The Documentation Center describes how to use the API.

As you can see there, you can go straight to the API manual, enter your API key, select a target language, and have the code for that language generated.

If you don't know how to code, don't panic. For now, just get familiar with where things are and follow the steps.

4. Open the API manual and select Text To Image in the right-hand directory. You can see that there are SD models and Flux models.

This time we take the flux model as an example, which is currently among the best models for image generation.

Fill in the key you just created, and you can see that the corresponding code is generated. This demonstration uses Python.
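For readers who want to see roughly what such generated code does, here is a minimal sketch, not the code from the API manual itself. The base URL comes from this article; the tool path, the model id, and the body field names (`model`, `prompt`, `image_size`, `seed`) are assumptions for illustration and should be replaced with whatever the manual shows. The function only builds the request and does not send it.

```python
import json
import urllib.request

BASE_URL = "https://api.siliconflow.cn/v1"  # base URL used in this article
TOOL_PATH = "/images/generations"  # ASSUMPTION: copy the real path from the API manual
MODEL = "black-forest-labs/FLUX.1-schnell"  # ASSUMPTION: use the model id shown in the manual


def build_request(api_key: str, prompt: str,
                  image_size: str = "1024x1024", seed: int = 0) -> urllib.request.Request:
    """Build (but do not send) a POST request for text-to-image generation."""
    body = {
        "model": MODEL,            # ASSUMPTION: field name
        "prompt": prompt,          # ASSUMPTION: field name
        "image_size": image_size,  # ASSUMPTION: field name
        "seed": seed,
    }
    return urllib.request.Request(
        url=BASE_URL + TOOL_PATH,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_request("sk-your-key-here", "a man standing in the rain")
    print(req.get_method(), req.full_url)
    # Sending it would be urllib.request.urlopen(req); that needs network
    # access and a valid key, so it is left out of this sketch.
```

The three things to notice are the same three things the coze plugin form asks for later: a URL, a POST method, and an authorization header.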

5. Find the Plugins page in coze and select New Plugin:

Select cloud-side plugin. For the plugin URL, use the API's base URL: https://api.siliconflow.cn/v1

For the authorization method select Service, and for location select Header. This corresponds to the code from step 4: look carefully at the API documentation and you will see a headers section. For Parameter name, enter "authorization". For Service token, enter "Bearer " followed by your key, the string that starts with "sk-".
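A common mistake is pasting the bare key, or pasting "Bearer" twice. The sketch below shows how the Service token value relates to the key; `service_token` and `auth_header` are hypothetical helper names used only for illustration, and the key shown is a placeholder.

```python
def service_token(api_key: str) -> str:
    """Return the value for the Service token field: 'Bearer <key>'."""
    key = api_key.strip()
    # Tolerate a key that was pasted with the prefix already attached.
    if key.lower().startswith("bearer "):
        key = key[len("bearer "):].strip()
    if not key:
        raise ValueError("API key is empty")
    return f"Bearer {key}"


def auth_header(api_key: str) -> dict:
    """Header sent with every request; HTTP header names are case-insensitive."""
    return {"Authorization": service_token(api_key)}


if __name__ == "__main__":
    print(auth_header("sk-your-key-here"))
```

Because header names are case-insensitive, "authorization" in the coze form and "Authorization" in code refer to the same header.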

6. Once the plugin is created, click Create Tool to start building the plugin's first tool.

7. Fill in the tool information. You need the tool path and the request method, both of which you can find in the API documentation:

In the picture you can see the address, and the monochrome label in front of it shows that the method is POST. Fill in the form accordingly and save.

8. Next, fill in the parameter names. Again, look in the API documentation, this time in the body params section:

You can see that every parameter except seed is required by the API. That does not mean you have to mark them as required when configuring the tool.

We can set default values for all of them. Take care to configure each input parameter's type and passing method, and leave required unchecked, which is very convenient when testing the node.

Fill in the fields according to the API documentation, then click Save and continue.
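The idea of "defaults for everything, nothing required" can be sketched as follows. The parameter names and default values here are illustrative assumptions, not the values from the API documentation; `with_defaults` is a hypothetical helper.

```python
# ASSUMPTION: names and values are placeholders; use the ones from the body params section.
DEFAULTS = {
    "prompt": "a portrait of a man",
    "image_size": "1024x1024",
    "seed": 0,
}


def with_defaults(overrides=None) -> dict:
    """Merge caller-supplied parameters over the defaults.

    Missing or None values fall back to the default, which is what lets the
    tool run in a test without any input filled in.
    """
    params = dict(DEFAULTS)
    for name, value in (overrides or {}).items():
        if name not in DEFAULTS:
            raise KeyError(f"unknown parameter: {name}")
        if value is not None:
            params[name] = value
    return params


if __name__ == "__main__":
    print(with_defaults())              # all defaults
    print(with_defaults({"seed": 42}))  # only the seed changes
```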

9. Next, configure the output parameters. You don't need to fill these in manually: click automatic parsing in the upper right corner, fill in the input parameters, and let it parse the output for you.

If the output parameters appear as expected, the run succeeded.

Save, then continue to the debugging step. If the response contains an image URL, opening it automatically downloads the generated image. The output here is a man. If you also use seed 0, you will get the same image as I did.

This proves that the API call works, and completes the configuration of the tool.
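To check a response outside coze, a small parser is enough. This is a sketch under an assumption: that the response body is JSON with an `images` list whose items carry a `url` field. Verify the actual shape against the response you see in the debugging panel; the sample below is made up for illustration.

```python
import json


def extract_image_urls(response_text: str) -> list:
    """Return every image URL found in the response body.

    ASSUMPTION: the body looks like {"images": [{"url": "..."}], ...}.
    """
    payload = json.loads(response_text)
    images = payload.get("images", [])
    return [item["url"] for item in images
            if isinstance(item, dict) and "url" in item]


if __name__ == "__main__":
    # Hypothetical sample response, not real API output.
    sample = '{"images": [{"url": "https://example.com/out.png"}], "seed": 0}'
    print(extract_image_urls(sample))
```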

10. Publish the plugin so that it can be invoked in workflows. Since it does not collect any user information, just publish it directly.

11. Do a preliminary test of the plugin: open a workflow and try calling the plugin you just configured:

After adding it, test it without filling in any parameters. Configure the content of the answer, and you can see that the URL is output successfully:

When generating through a bot, the image displays successfully:

12. For the parameters that need input, configure nodes that generate them automatically.

We just generated an image with the default parameters. Now we need to change the values passed in.

For example, for seed you can find a plugin that generates random numbers:

Here I choose to use:

Configure the maximum and minimum values and test the node.
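What the random-number node does amounts to the sketch below. The range limits are an assumption; use the seed range stated in the API documentation.

```python
import random

SEED_MIN = 0
SEED_MAX = 9_999_999_999  # ASSUMPTION: check the seed range in the API manual


def random_seed(minimum: int = SEED_MIN, maximum: int = SEED_MAX, rng=None) -> int:
    """Pick a seed uniformly from [minimum, maximum], both ends included."""
    if minimum > maximum:
        raise ValueError("minimum must not exceed maximum")
    if rng is None:
        rng = random
    return rng.randint(minimum, maximum)


if __name__ == "__main__":
    print(random_seed())
```

Passing a seeded `random.Random` instance as `rng` makes the choice repeatable, which helps when debugging a workflow.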

For the prompt, a large language model is used to expand the prompt.

Some of you may want to reproduce an image later. To do that you need to keep the image's seed and prompt, so pass these parameters through to the output and have the bot display them.
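As a rough illustration of keeping those values together, the hypothetical helper below formats a reply that carries the image along with the prompt and seed that produced it. It assumes the bot renders Markdown image syntax; adjust the template to whatever your bot's answer node expects.

```python
def format_reply(image_url: str, prompt: str, seed: int) -> str:
    """Bundle the image with the parameters needed to reproduce it."""
    return (
        f"![image]({image_url})\n\n"  # ASSUMPTION: the bot renders Markdown images
        f"Prompt: {prompt}\n"
        f"Seed: {seed}"
    )


if __name__ == "__main__":
    print(format_reply("https://example.com/out.png", "a man in the rain", 0))
```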

Testing in the bot:

If you want to change the image size, remember to set it according to the API documentation, which lists a few fixed sizes:

The size cannot be set arbitrarily; the API restricts it to those values. Depending on the workflow's requirements, you can use one fixed size or switch between the allowed sizes dynamically. Note that in "1024x1024" the separator is the lowercase letter x, not *.
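If the size comes from user input or another node, it can be worth normalizing it before the call. The sketch below is one way to do that: it accepts common separator mistakes and rejects anything not in the allowed set. Only "1024x1024" is taken from this article; the rest of the allowed sizes must be copied from the API documentation.

```python
import re

# Accept "x", "X", "*" or "×" as the separator on input; always emit lowercase "x".
_SIZE_PATTERN = re.compile(r"^\s*(\d+)\s*[xX*×]\s*(\d+)\s*$")


def normalize_size(size: str, allowed: set) -> str:
    """Return the size as 'WIDTHxHEIGHT', or raise ValueError if it is not allowed."""
    match = _SIZE_PATTERN.match(size)
    if match is None:
        raise ValueError(f"not a WIDTHxHEIGHT size: {size!r}")
    normalized = f"{int(match.group(1))}x{int(match.group(2))}"
    if normalized not in allowed:
        raise ValueError(f"size {normalized} is not supported by the API")
    return normalized


if __name__ == "__main__":
    allowed_sizes = {"1024x1024"}  # extend with the sizes listed in the API documentation
    print(normalize_size("1024*1024", allowed_sizes))
```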

13. Finally, a word about costs

You can check the billing page for a breakdown of your own usage. The SiliconFlow product launched only recently, and some models are still free to use.

Each account currently receives $14 in free credit, and the flux model used here is still free to call at the moment.

Of course, the platform offers more than text-to-image models. There are many large language models, and on the image generation side the InstantID model is also available, which can use facial features as a reference. These can fill in some of the image generation capabilities that coze lacks.