Technology news outlet TechCrunch reported yesterday (September 11) that French AI startup Mistral has released Pixtral 12B, the company's first multimodal large model capable of processing both images and text.

Pixtral 12B has 12 billion parameters and is about 24 GB in size. Parameter count roughly corresponds to a model's problem-solving ability, and models with more parameters usually outperform those with fewer.
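The 24 GB figure is consistent with simple arithmetic: parameter count times bytes per parameter. The sketch below assumes the weights are stored in a 16-bit format (2 bytes each) and uses the nominal "12B" count as an approximation; both are assumptions, not figures from the article.

```python
# Rough checkpoint-size estimate: parameters x bytes per parameter.
# Assumption: 16-bit weights (bf16/fp16), i.e. 2 bytes per parameter.
NUM_PARAMS = 12_000_000_000  # nominal "12B", used here as an approximation
BYTES_PER_PARAM = 2          # assumed 16-bit storage


def checkpoint_size_bytes(num_params: int, bytes_per_param: int) -> int:
    """Return raw weight storage in bytes, ignoring file metadata overhead."""
    return num_params * bytes_per_param


size = checkpoint_size_bytes(NUM_PARAMS, BYTES_PER_PARAM)
print(f"{size / 1e9:.1f} GB")     # decimal gigabytes
print(f"{size / 2**30:.1f} GiB")  # binary gibibytes, as some tools report
```

Under these assumptions the estimate comes to 24 decimal gigabytes, or roughly 22.4 GiB, which is why download sizes for the same checkpoint can appear to differ between tools.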

Pixtral 12B is built on Mistral's text model Nemo 12B and can answer questions about an arbitrary number of images of arbitrary size.

Like other multimodal models such as Anthropic's Claude family and OpenAI's GPT-4o, Pixtral 12B should in principle be able to perform tasks such as captioning images and counting the objects in a photo.

Users can download, fine-tune, and use Pixtral 12B under the Apache 2.0 license.

Sophia Yang, Mistral's head of developer relations, said in a post on X that Pixtral 12B will soon be available for testing on Le Chat, Mistral's chatbot, and La Plateforme, its API service platform.

Pixtral 12B's technical specifications are equally notable: 40 layers, a hidden dimension of 14,336, 32 attention heads, and a dedicated 400M-parameter vision encoder that supports images at 1024x1024 resolution.
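To put the vision-encoder figure in context: ViT-style vision encoders typically cut an image into fixed-size square patches and turn each patch into a token, so resolution directly drives how many image tokens the model sees. The sketch below records the reported specs in a dataclass (the field names are hypothetical, not Mistral's actual config keys) and counts patches for a 1024x1024 image, assuming 16x16-pixel patches; the patch size is an assumption and is not stated in the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PixtralSpecs:
    """Figures as reported in the article; field names are hypothetical."""
    num_layers: int = 40
    hidden_dim: int = 14_336
    num_attention_heads: int = 32
    vision_encoder_params: int = 400_000_000
    max_image_side: int = 1024


def patch_grid(width: int, height: int, patch_size: int) -> tuple[int, int]:
    """Patches along each axis when an image is split into square patches.

    Partial patches at the right/bottom edges count as full ones (ceiling division).
    """
    cols = -(-width // patch_size)
    rows = -(-height // patch_size)
    return cols, rows


specs = PixtralSpecs()
PATCH_SIZE = 16  # assumption: 16x16-pixel patches, not given in the article
cols, rows = patch_grid(specs.max_image_side, specs.max_image_side, PATCH_SIZE)
print(cols, rows, cols * rows)  # patches per row, per column, and in total
```

With the assumed patch size, a full 1024x1024 image becomes a 64x64 grid, i.e. 4,096 patches; a smaller patch size would raise that count quadratically.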

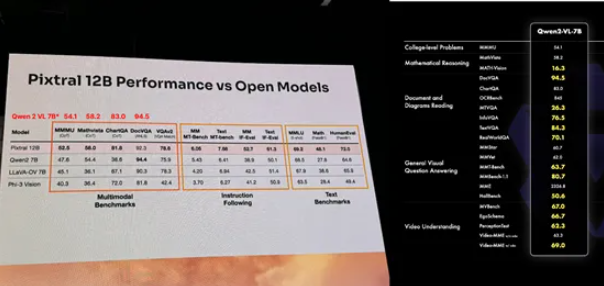

On benchmarks such as MMMU, MathVista, ChartQA, and DocVQA, Pixtral 12B reportedly outperforms several well-known multimodal models, including Phi-3 and Qwen2 7B, results that point to strong overall performance.

Hugging Face repository:

https://huggingface.co/mistral-community/pixtral-12b-240910