Deepfake (deep forgery) technology is once again caught up in a storm of public opinion.

This time, the crimes committed with this AI technology are serious enough to be called South Korea's "Nth Room 2.0", and they have reached many minors!

The incident had such an impact that it quickly climbed the trending and hot-search lists on multiple platforms.

As early as May this year, Yonhap News Agency reported the following:

Seoul National University graduates Park and Kang are suspected of using Deepfake to synthesize pornographic photos and videos and distributing them privately on the communication software Telegram from July 2021 to April 2024. As many as 61 women were victimized, including 12 Seoul National University students.

Park alone used Deepfake to create about 400 pornographic videos and photos, and distributed 1,700 pieces of explicit content with his accomplices.

However, this incident is just the tip of the iceberg of rampant Deepfake abuse in South Korea.

Just recently, more disturbing details have come to light one after another.

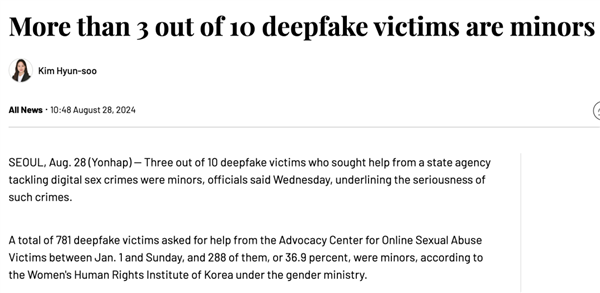

For example, the Korean Women's Human Rights Institute released a set of data:

From January 1 this year to last Sunday, a total of 781 Deepfake victims sought help online, of which 288 (36.9%) were minors.

And this "Nth Room 2.0" is deeply disturbing in its own right.

Arirang further reported:

A Deepfake-related Telegram chat room attracted 220,000 people who created and shared fake images by doctoring photos of women and girls. The victims included college students, teachers, and even soldiers.

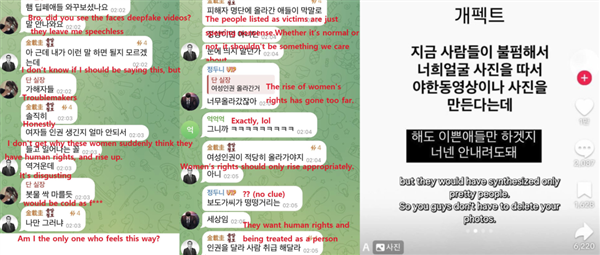

Not only are the victims minors, but there are also a large number of teenagers among the perpetrators.

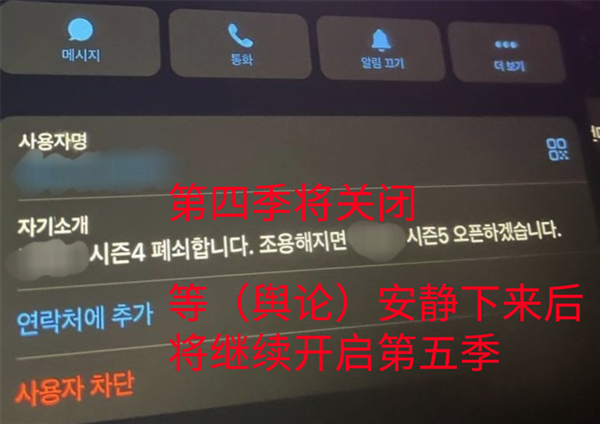

Not only that, the process of the rise of public opinion was also extremely dramatic.

Because the Korean men who caused the incident (hereinafter referred to as Korean men) can be said to be very rampant. When there are signs of public opinion, they will "restrain" a little:

Some Korean men also have a bad attitude towards this matter. There are even junior high school boys who publicly wrote, "Don't worry, you are not pretty enough to be Deepfake."

So, the counterattack of Korean women (hereinafter referred to as Korean women) began.

They turned their "position" to social media outside of South Korea. For example, on X, someone posted a map of the school where Deepfake was made:

There is also a Korean woman who posted a "help post" on Weibo:

As public opinion fermented on major social media, the South Korean government also made a statement:

More than 200 schools have been affected by Deepfakes; there are plans to increase the prison sentence for Deepfake crimes from 5 to 7 years.

South Korean police have reportedly set up a special task force to handle fake-video cases such as Deepfake crimes. The task force will operate until March 31 next year.

Deepfakes have evolved

In fact, the latest Deepfake technology has reached a frightening level!

Flux, an image-generation AI, produced a set of TED talk photos that were hard to tell apart from the real thing, drawing millions of X (formerly Twitter) users into spotting fakes online. (The image on the left was generated by AI.)

A late-night livestream by a deepfaked "Musk" also drew tens of thousands of viewers and tips, and the host even took live calls with viewers.

Notably, a single picture was enough to swap faces in real time for the entire broadcast.

As netizens put it, Deepfake has brought science fiction into reality.

In fact, the term Deepfake originated in 2017, when a Reddit user named "Deepfakes" replaced the faces of pornographic actresses with those of well-known American actors, causing controversy.

The technology can be traced back to 2014, when Goodfellow and his colleagues published the first scientific paper introducing generative adversarial networks (GANs).

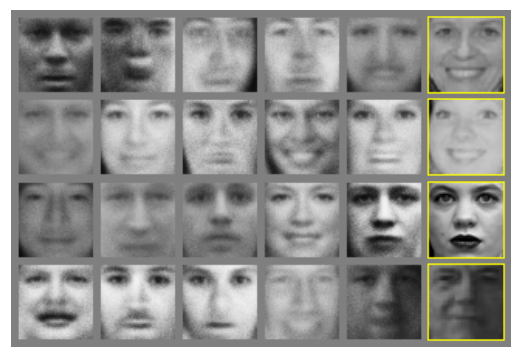

At the time, there were signs that GANs could generate highly realistic human faces.

Later, as deep learning advanced, techniques such as autoencoders and generative adversarial networks were gradually applied to Deepfake.

Let me briefly introduce the technical principles behind Deepfake.

Take faking a video as an example.

The core idea is to use deep learning algorithms to "graft" the target person's face onto the person being imitated.

Since a video is a sequence of pictures, replacing the face in each frame yields a new, face-swapped video.
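The frame-by-frame idea can be sketched roughly as follows. This is only an illustration: frames are modeled as plain dictionaries, and `swap_face` is a hypothetical placeholder standing in for a trained face-swapping model, not a real library call.

```python
from typing import Dict, List

Frame = Dict[str, str]  # toy frame: {"face": ..., "background": ...}


def swap_face(frame: Frame, new_face: str) -> Frame:
    """Hypothetical stand-in for a trained face-swap model."""
    swapped = dict(frame)  # copy so the source frame is left untouched
    swapped["face"] = new_face
    return swapped


def swap_video(frames: List[Frame], new_face: str) -> List[Frame]:
    """Apply the same face replacement to every frame, preserving order."""
    return [swap_face(frame, new_face) for frame in frames]


video = [
    {"face": "person_a", "background": "stage"},
    {"face": "person_a", "background": "street"},
]
fake_video = swap_video(video, "person_b")
```

Everything except the face is carried over unchanged, which is why the result still looks like the original footage.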

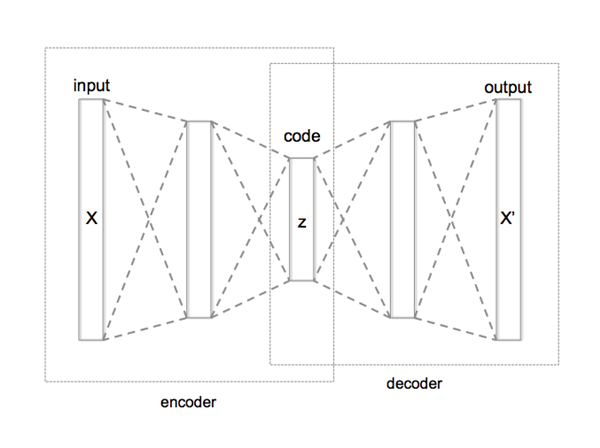

An autoencoder is used here: when applied to Deepfake, it takes video frames as input and encodes them.

△Image source: Wikipedia

An autoencoder consists of an encoder that reduces the image to a lower-dimensional latent space and a decoder that reconstructs the image from the latent representation.

Simply put, the encoder converts key feature information (such as facial features and body posture) into a low-dimensional latent representation, while the decoder restores the image from that representation so that the network can learn.
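To make the encoder/decoder split concrete, here is a toy sketch in plain Python. It is not how real Deepfake models are built (those are deep networks trained on images); it assumes a linear encoder and decoder, 2-D inputs, a 1-D latent value, and made-up training data and hyperparameters.

```python
# Toy linear autoencoder: 2-D input -> 1-D latent -> 2-D reconstruction.
# All sizes, data, and hyperparameters are illustrative assumptions.

# Training points lie on the line y = 2x, so one latent number is enough.
data = [(t, 2.0 * t) for t in (-1.0, -0.5, 0.5, 1.0)]

enc = [0.5, 0.1]  # encoder weights: latent z = enc . x
dec = [0.1, 0.5]  # decoder weights: reconstruction = z * dec


def encode(x):
    return enc[0] * x[0] + enc[1] * x[1]


def decode(z):
    return (z * dec[0], z * dec[1])


def mean_loss():
    total = 0.0
    for x in data:
        r = decode(encode(x))
        total += (x[0] - r[0]) ** 2 + (x[1] - r[1]) ** 2
    return total / len(data)


initial_loss = mean_loss()
learning_rate = 0.05
for _ in range(2000):
    grad_enc = [0.0, 0.0]
    grad_dec = [0.0, 0.0]
    for x in data:
        z = encode(x)
        r = decode(z)
        err = (r[0] - x[0], r[1] - x[1])  # reconstruction error
        # Gradients of the squared error w.r.t. decoder and encoder weights.
        err_dot_dec = err[0] * dec[0] + err[1] * dec[1]
        for i in range(2):
            grad_dec[i] += 2.0 * err[i] * z / len(data)
            grad_enc[i] += 2.0 * err_dot_dec * x[i] / len(data)
    for i in range(2):
        enc[i] -= learning_rate * grad_enc[i]
        dec[i] -= learning_rate * grad_dec[i]

final_loss = mean_loss()
```

After training, each 2-D point is squeezed into a single number by `encode` and expanded back by `decode`, which is the same bottleneck idea at a much smaller scale.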

Another example is forging an image.

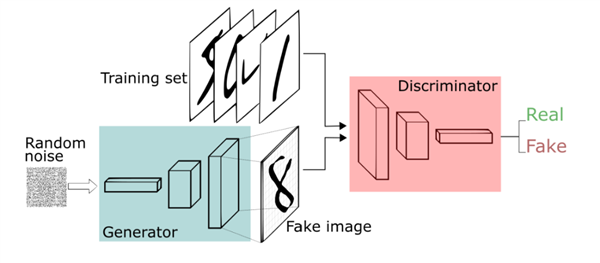

The main method used here is the generative adversarial network (GAN), an unsupervised learning method in which two neural networks learn by competing with each other. (This method can also be used to forge videos.)

The first algorithm, called the generator, takes in random noise and converts it into an image.

This synthetic image is then added to a stream of real images (such as images of celebrities), which are fed into a second algorithm, called the discriminator.

The discriminator tries to tell whether each sample is real or synthetic. Each time it notices a difference between the two, the generator adjusts accordingly, until it eventually produces images so realistic that the discriminator can no longer tell them apart.
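The tug-of-war between the two networks is usually expressed through their loss functions. The sketch below assumes the common cross-entropy formulation (with the non-saturating generator loss); the probability values are invented for illustration, and no actual networks are trained here.

```python
import math
from typing import List


def discriminator_loss(d_real: List[float], d_fake: List[float]) -> float:
    """Binary cross-entropy: real samples should score near 1, fakes near 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake: List[float]) -> float:
    """Non-saturating generator loss: low when fakes are scored as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Early in training: the discriminator easily spots the fakes.
early = discriminator_loss([0.9, 0.95], [0.1, 0.05])
# At the balance point the discriminator outputs 0.5 for everything.
balanced = discriminator_loss([0.5, 0.5], [0.5, 0.5])
```

When the discriminator is reduced to guessing (0.5 for every sample), its loss settles at 2·ln 2, which corresponds to the point where it "can no longer tell the difference".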

Beyond making appearances indistinguishable, today's Deepfakes also throw a "combination punch".

Voice cloning has been upgraded as well. Readily available AI tools can now clone a voice almost instantly from a few seconds of original audio.

Cases of celebrities being impersonated with synthetic voices are also common.

In addition, generating a video from one picture is no longer novel, and the current focus is on subsequent polishing, such as making expressions and postures look more natural.

One such technique is lip syncing, for example making Leo speak.

How to identify Deepfake?

Although Deepfakes are now very realistic, there are still some tips for spotting them.

The various methods currently discussed on the Internet can be summarized as follows:

Unusual or awkward facial postures

Unnatural body movements (limb distortion)

Unnatural coloring

Inconsistent audio

No blinking

Skin aging that does not match the aging of the hair and eyes

The glasses either have no glare or too much glare, and the glare angle remains the same no matter how the person moves.

Video that looks weird when zoomed in

…

Well, even Leeuwenhoek would be impressed by this level of scrutiny, but checking all of this with the naked eye is rather tiring!

A more efficient approach is to fight magic with magic: detect AI with AI.
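Some of these checks can at least be partly automated. As a rough sketch of the blinking check, assume some upstream face-landmark tool (not shown, and hypothetical here) yields one "eye openness" number per frame; the threshold and minimum blink rate below are arbitrary assumptions, not validated values.

```python
from typing import List


def count_blinks(eye_openness: List[float], closed_below: float = 0.2) -> int:
    """Count blinks as runs of consecutive frames with the eye closed."""
    blinks = 0
    eye_closed = False
    for value in eye_openness:
        if value < closed_below and not eye_closed:
            blinks += 1          # a new closed run starts: one blink
            eye_closed = True
        elif value >= closed_below:
            eye_closed = False   # eye reopened
    return blinks


def looks_suspicious(eye_openness: List[float], fps: float,
                     min_blinks_per_minute: float = 2.0) -> bool:
    """Flag a clip whose blink rate falls below an assumed minimum."""
    minutes = len(eye_openness) / fps / 60.0
    if minutes == 0:
        return False  # nothing to judge
    return count_blinks(eye_openness) / minutes < min_blinks_per_minute
```

A clip that is flagged is only a candidate for closer inspection; a low blink rate alone does not prove that a video is fake.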

Well-known technology companies in China and abroad have taken action. For example, Microsoft has developed an authentication tool that analyzes photos or videos and gives a score indicating whether they have been manipulated.

OpenAI also previously announced the launch of a tool for detecting images created by the AI image generator DALL-E 3.

In internal testing, the tool correctly identified images generated by DALL-E 3 in 98% of cases, and common modifications such as compression, cropping, and saturation changes had minimal impact on its results.

Chipmaker Intel's FakeCatcher uses algorithms to analyze image pixels to determine whether something is real or fake.

In China, SenseTime's digital watermarking technology can embed specific information into multimodal digital carriers such as images, video, audio, and text. According to the company, the technology achieves watermark extraction accuracy above 99% without degrading image quality.

Of course, Quantum Bit has previously covered a very popular method for identifying AI-generated images: turning up the saturation and checking the person's teeth.
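The general idea of hiding information inside an image can be illustrated with a much simpler classic technique, least-significant-bit (LSB) embedding. This is explicitly not SenseTime's method, whose details are not described here; the pixel values and watermark bits are made up for the example.

```python
from typing import List


def embed_watermark(pixels: List[int], bits: List[int]) -> List[int]:
    """Write each watermark bit into the lowest bit of one pixel value."""
    if len(bits) > len(pixels):
        raise ValueError("watermark is longer than the carrier")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & ~1) | bit  # clear the lowest bit, then set it
    return marked


def extract_watermark(pixels: List[int], length: int) -> List[int]:
    """Read the watermark back from the lowest bit of the first pixels."""
    return [value & 1 for value in pixels[:length]]


carrier = [200, 13, 77, 154, 91, 36, 240, 5]  # toy 8-bit grayscale values
mark = [1, 0, 1, 1, 0, 0, 1, 0]
stamped = embed_watermark(carrier, mark)
```

Each pixel changes by at most 1 out of 255, which is why the mark is invisible to the eye. Unlike production watermarks, a plain LSB mark would not survive compression or cropping.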

When the saturation is turned up to the maximum, the teeth of the AI portrait will become very strange and the boundaries will be blurred.
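For readers who want to try the saturation trick programmatically, here is a minimal per-pixel sketch using Python's standard `colorsys` module. The function name and the sample pixel values are assumptions for illustration; a real check would apply this to every pixel of an image and then inspect the teeth region by eye.

```python
import colorsys
from typing import Tuple

RGB = Tuple[float, float, float]  # channels in the range 0.0-1.0


def boost_saturation(pixel: RGB, factor: float) -> RGB:
    """Scale a pixel's HSV saturation by `factor`, capped at 1.0."""
    h, s, v = colorsys.rgb_to_hsv(*pixel)
    return colorsys.hsv_to_rgb(h, min(1.0, s * factor), v)


gray = boost_saturation((0.5, 0.5, 0.5), 10.0)        # no color to amplify
off_white = boost_saturation((0.9, 0.85, 0.7), 10.0)  # becomes fully saturated
```

Pure grays are unaffected, while faint color casts are amplified strongly, which is what makes subtle rendering artifacts easier to see.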

Science article: Standards and detection tools are needed

Just yesterday, Science also published an article discussing Deepfake.

The article argues that Deepfakes challenge the integrity of scientific research, because science requires trust.

Specifically, because Deepfakes are realistic and hard to detect, they further threaten trust in science.

In the face of this challenge, Science argues for a two-pronged response: establish ethical standards for the use of Deepfake technology, and develop accurate detection tools.

On the relationship between Deepfakes and education, the article says:

While deepfakes pose significant risks to the integrity of scientific research and communication, they also provide opportunities for education.

The future impact of deepfakes will depend on how the scientific and educational communities respond to these challenges and exploit these opportunities.

Effective misinformation detection tools, sound ethical standards, and research-based educational approaches can help ensure that science is enhanced, not hindered, by deepfakes.

In short, there are thousands of technological paths, but safety comes first.

One More Thing

When we asked ChatGPT to translate the content of the relevant event, it responded like this:

Well, even the AI felt that it was inappropriate.

Reference Links:

[1] https://en.yna.co.kr/view/AEN20240826009600315

[2] https://en.yna.co.kr/view/AEN20240828003100315?input=2106m

[3] https://en.yna.co.kr/view/AEN20240829002853315?input=2106m

[4] https://www.arirang.com/news/view?id=275393&lang=en

[5] https://www.science.org/doi/10.1126/science.adr8354

[6] https://weibo.com/7865529830/OupjZgcxF

[7] https://weibo.com/7929939511/Out1p5HOQ

This article comes from WeChat public account: Quantum Bit (ID: QbitAI), author: Jin Lei Yishui