Zhipu AI recently released its latest base large model, GLM-4-Plus, which demonstrates powerful visual capabilities comparable to OpenAI's GPT-4, and announced that it would be open for use on August 30.

Major update highlights:

- Base language model GLM-4-Plus: achieves a qualitative leap in language understanding, instruction following, and long-text processing, and continues to hold a leading position internationally.

- Text-to-image model CogView-3-Plus: performance comparable to the industry-leading MJ-V6 (Midjourney V6) and FLUX models.

- Image/video understanding model GLM-4V-Plus: excels at image understanding and also offers video understanding based on time-series analysis. The model will soon launch on the open platform bigmodel.cn, becoming the first general-purpose video understanding model API in China.

- Video generation model CogVideoX: following the open-source release of the 2B version, the 5B version has now also been officially open-sourced. Its performance is significantly improved, making it a leader among current open-source video generation models.

- Cumulative downloads of Zhipu's open-source models have exceeded 20 million, a significant contribution to the growth of the open-source community.

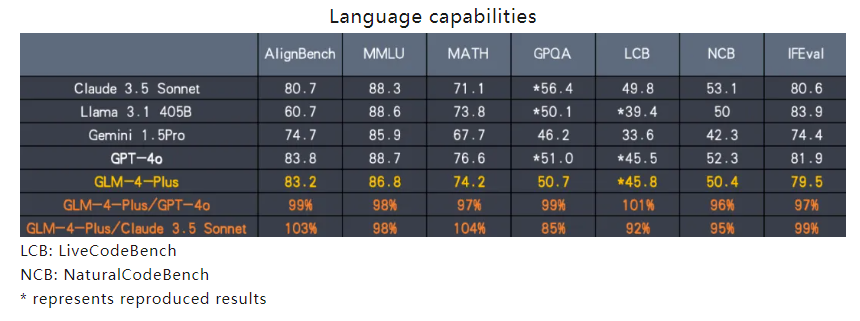

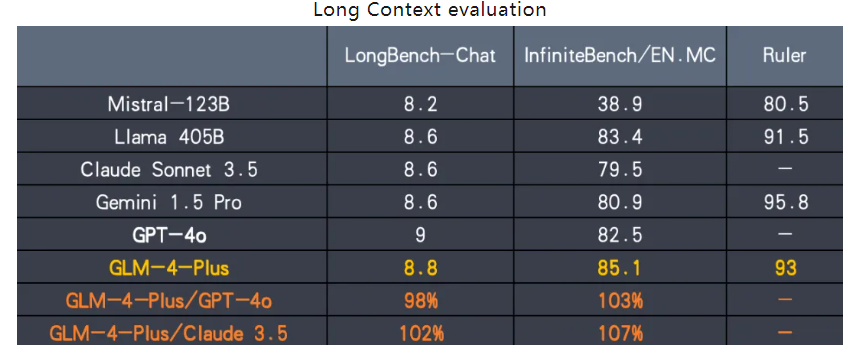

GLM-4-Plus performs well across several key areas. In language ability, the model reaches an internationally leading level in comprehension, instruction following, and long-text processing, with performance comparable to GPT-4 and the 405B-parameter Llama 3.1. Notably, GLM-4-Plus improves long-text reasoning through a carefully tuned mixing strategy for long- and short-text training data.

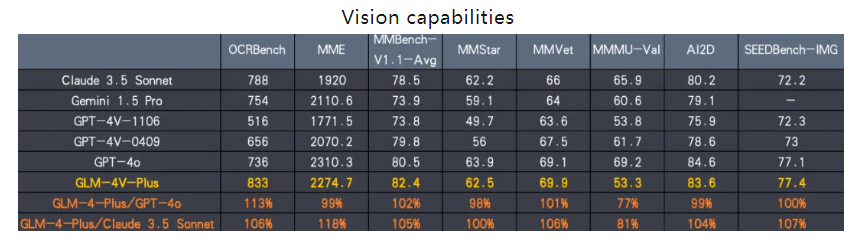

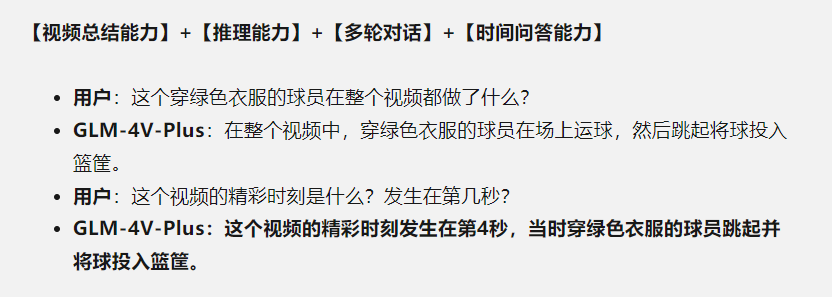

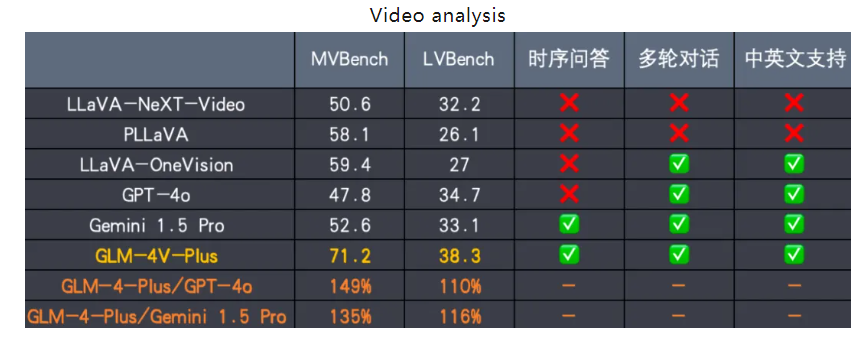

In visual intelligence, GLM-4V-Plus demonstrates excellent image and video understanding. It is time-aware and can process and understand complex video content. The model will launch on the Zhipu open platform as the first general-purpose video understanding model API in China, giving developers and researchers a powerful tool.

For example, given a video like the one below and asked what the player in green does throughout, the model accurately describes the player's actions and pinpoints the exact second at which the video's highlight occurs:

Screenshot from the official announcement

In image generation, CogView-3-Plus already approaches current top models such as MJ-V6 and FLUX. Meanwhile, the video generation model CogVideoX has released a more powerful 5B version, which is regarded as the best choice among current open-source video generation models.

The most anticipated feature is the upcoming "video call" function in the Zhipu Qingyan app, the first consumer-facing AI video call feature in China. It spans three modalities (text, audio, and video) and reasons in real time. Users can hold smooth conversations with the AI, which responds quickly even when frequently interrupted.

Once the camera is on, the AI can see and understand what the user sees and accurately carry out voice commands.

The video call function goes live on August 30. It will initially be open to a subset of Qingyan users, and applications from external users are also being accepted.

Reference: https://mp.weixin.qq.com/s/Ww8njI4NiyH7arxML0nh8w