Alibaba's cloud computing division has released a new AI model, Qwen2-VL. Its strength lies in understanding visual content, including images and videos, and it can even analyze videos more than 20 minutes long.

In third-party benchmarks, it performs very well against other leading models such as Meta’s Llama 3.1, OpenAI’s GPT-4o, Anthropic’s Claude 3 Haiku, and Google’s Gemini 1.5 Flash.

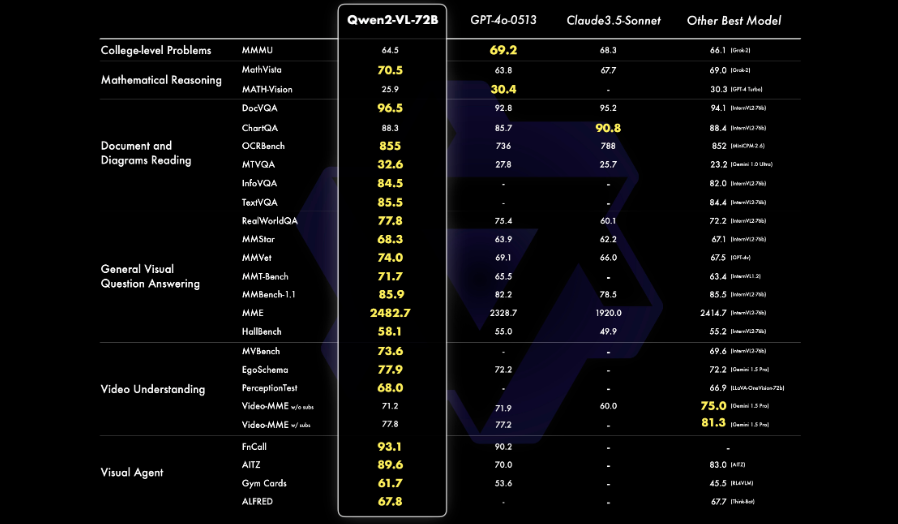

Alibaba evaluated the model's visual capabilities across the following key dimensions: complex university-level problem solving, mathematical ability, document and table understanding, multilingual text-image understanding, general scenario question answering, video understanding, and agent-based interaction. The 72B model achieved top performance on most metrics, even surpassing closed-source models such as GPT-4o and Claude 3.5 Sonnet.

The details are shown in the following figure:

Superior image and video analysis capabilities

Qwen2-VL can not only analyze static images but also summarize video content, answer questions about it, and even provide live chat support in real time.

As the Qwen research team wrote in a blog post about the new Qwen2-VL series of models on GitHub: “In addition to static images, Qwen2-VL extends its capabilities to video content analysis. It can summarize video content, answer questions related to it, and maintain a continuous flow of conversation in real time, providing live chat support. This capability enables it to act as a personal assistant, helping users by providing insights and information extracted directly from video content.”

According to the team, it can analyze videos longer than 20 minutes and answer questions about their content. This could make Qwen2-VL a useful assistant for online learning, technical support, or any other setting where video content needs to be understood.
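As an illustration of what a video question might look like in practice, here is a minimal Python sketch that builds a single-turn chat request pairing a video with a text question. The message layout (a `content` list of typed entries) is an assumption based on the chat format commonly shown in the Qwen2-VL model cards; the helper name `build_video_question`, the file path, and the `fps` value are hypothetical and not taken from this article.

```python
import json
from typing import Any, Dict, List


def build_video_question(video_uri: str, question: str, fps: float = 1.0) -> List[Dict[str, Any]]:
    """Build a single-turn chat request: one video plus one text question.

    Assumption: the model's processor accepts a list of messages whose
    "content" is a list of typed parts ("video", "text").
    """
    if not question.strip():
        raise ValueError("question must not be empty")
    return [
        {
            "role": "user",
            "content": [
                # Hypothetical sampling rate: frames per second taken from the video.
                {"type": "video", "video": video_uri, "fps": fps},
                {"type": "text", "text": question},
            ],
        }
    ]


if __name__ == "__main__":
    messages = build_video_question(
        "file:///path/to/lecture.mp4",  # placeholder path
        "Summarize the main points of this lecture.",
    )
    print(json.dumps(messages, indent=2))
```

A follow-up question would be appended as another `user` message after the model's reply, which is how a continuous conversation about the same video could be kept going.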

Qwen2-VL also has strong language capabilities: it supports English, Chinese, multiple European languages, Japanese, Korean, Arabic, Vietnamese, and other languages, which makes it accessible to users worldwide. To help readers better understand what it can do, Alibaba has also shared application examples on its GitHub.

Three versions

The new model comes in three sizes: Qwen2-VL-72B (72 billion parameters), Qwen2-VL-7B, and Qwen2-VL-2B. The 7B and 2B versions are released under the permissive, open-source Apache 2.0 license, which allows enterprises to use them for commercial purposes.

The largest version, 72B, has not yet been released publicly; it is available only through a dedicated license and API.

Qwen2-VL also introduces some new technical features. Naive Dynamic Resolution support lets the model handle images of different resolutions while keeping visual interpretation consistent and accurate. Multimodal Rotary Position Embedding (M-RoPE) lets it capture and integrate position information across text, images, and videos at the same time.
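To make these two ideas more concrete, here is a minimal Python sketch; it is an illustration, not the model's actual implementation. It assumes that an image is cut into 14x14-pixel patches and that each 2x2 group of patches is merged into one visual token (both values are assumptions not stated in this article), and that M-RoPE gives every token a (temporal, height, width) position triple. The function names `visual_grid` and `mrope_positions` are hypothetical.

```python
from typing import List, Tuple, Union

Segment = Tuple[str, Union[int, Tuple[int, int]]]


def visual_grid(height: int, width: int, patch: int = 14, merge: int = 2) -> Tuple[int, int]:
    """Return (rows, cols) of visual tokens for an image of the given pixel size.

    Each side is rounded to the nearest multiple of patch * merge, so the
    token count grows with resolution instead of being fixed.
    """
    unit = patch * merge  # pixels covered by one visual token per side (assumed)
    rows = max(1, round(height / unit))
    cols = max(1, round(width / unit))
    return rows, cols


def mrope_positions(segments: List[Segment]) -> List[Tuple[int, int, int]]:
    """Assign a (temporal, height, width) position triple to every token.

    segments: ("text", n_tokens) or ("image", (rows, cols)) entries in order.
    Text tokens use the same index in all three components; image tokens keep
    the temporal index fixed and vary height/width with their grid location.
    """
    positions: List[Tuple[int, int, int]] = []
    start = 0  # first free position index for the next segment
    for kind, spec in segments:
        if kind == "text":
            for i in range(spec):
                p = start + i
                positions.append((p, p, p))
            start += spec
        elif kind == "image":
            rows, cols = spec
            for r in range(rows):
                for c in range(cols):
                    positions.append((start, start + r, start + c))
            # Continue after the largest index used by the image.
            start += max(rows, cols)
        else:
            raise ValueError(f"unknown segment kind: {kind}")
    return positions


if __name__ == "__main__":
    grid = visual_grid(448, 672)
    print("token grid:", grid, "tokens:", grid[0] * grid[1])
    for triple in mrope_positions([("text", 2), ("image", (2, 3)), ("text", 1)]):
        print(triple)
```

Under these assumptions, a 224x224 image maps to an 8x8 grid of 64 visual tokens and a 448x672 image to a 16x24 grid, so a larger image produces more tokens instead of being squeezed into a fixed size. A video would add a varying temporal index per frame, which this sketch leaves out for brevity.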

The model links are as follows:

- Qwen2-VL-2B-Instruct: https://www.modelscope.cn/models/qwen/Qwen2-VL-2B-Instruct
- Qwen2-VL-7B-Instruct: https://www.modelscope.cn/models/qwen/Qwen2-VL-7B-Instruct