Zyphra published a blog post on August 27 announcing the release of Zamba2-mini 1.2B. The model has 1.2 billion parameters in total, and Zyphra claims it is a SOTA small language model for on-device use. With 4-bit quantization, its memory footprint is under 700MB.
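A rough sanity check on the sub-700MB figure: 4-bit quantization stores each weight in half a byte, so 1.2 billion parameters need about 600 MB for the weights alone. The sketch below assumes every parameter is stored at the same bit width. Real quantized checkpoints may keep some tensors at higher precision, and quantization scales, activations, and runtime buffers add to the total.

```python
def weight_memory_bytes(num_params: int, bits_per_param: int) -> int:
    """Bytes needed to store the weights alone at a uniform bit width."""
    return (num_params * bits_per_param + 7) // 8  # round up to whole bytes


params = 1_200_000_000  # "1.2 billion" from the announcement (a rounded figure)
for bits in (16, 4):
    mb = weight_memory_bytes(params, bits) / 1e6
    print(f"{bits}-bit weights: {mb:,.0f} MB")
# 16-bit weights: 2,400 MB
# 4-bit weights: 600 MB
```

The gap between the 600 MB estimate and the reported sub-700MB figure is plausibly taken up by the overheads listed above, but the article does not break the number down.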

SOTA stands for state-of-the-art. It does not name a specific model; it refers to the best-performing model currently available for a given research task.

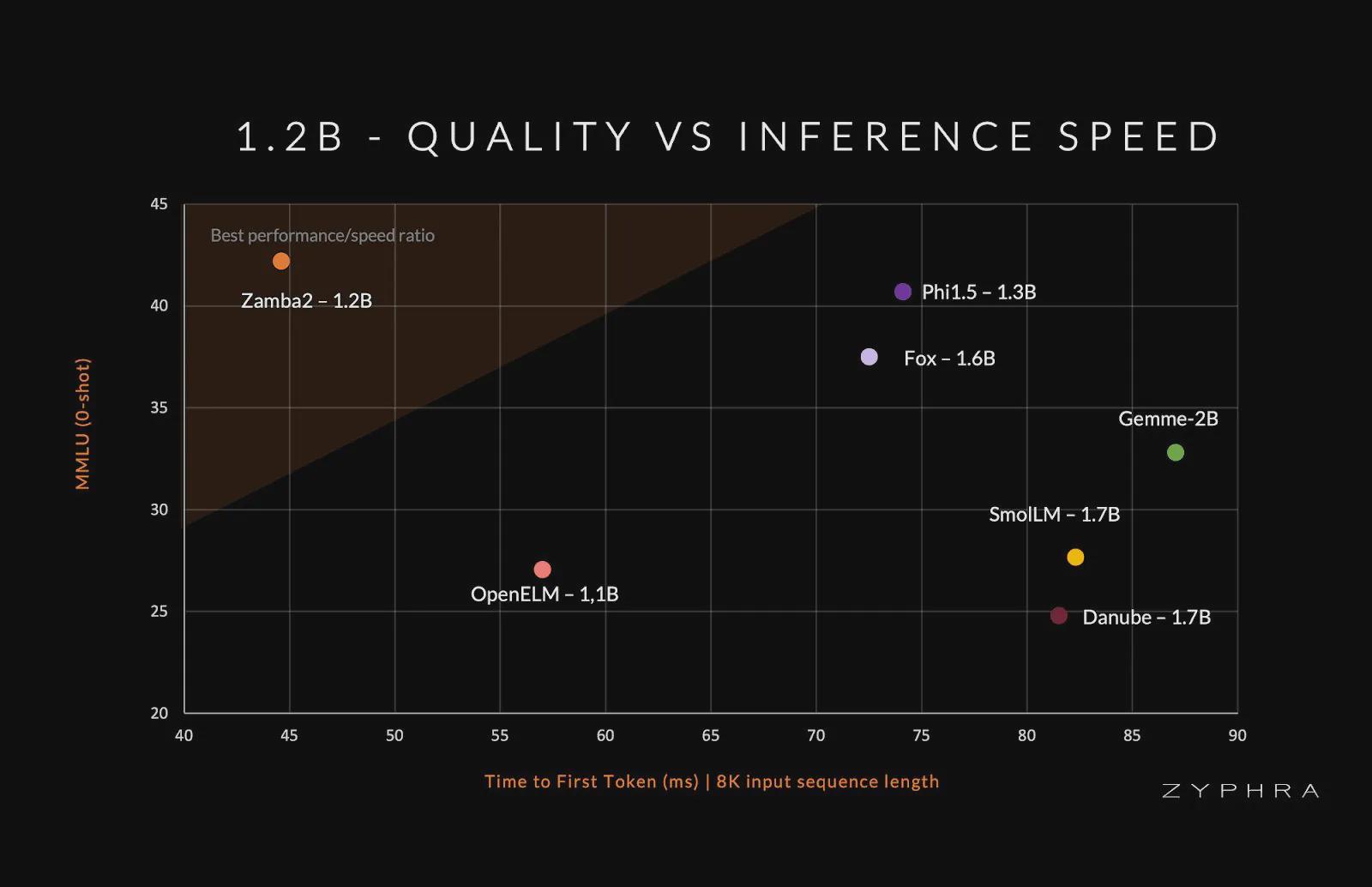

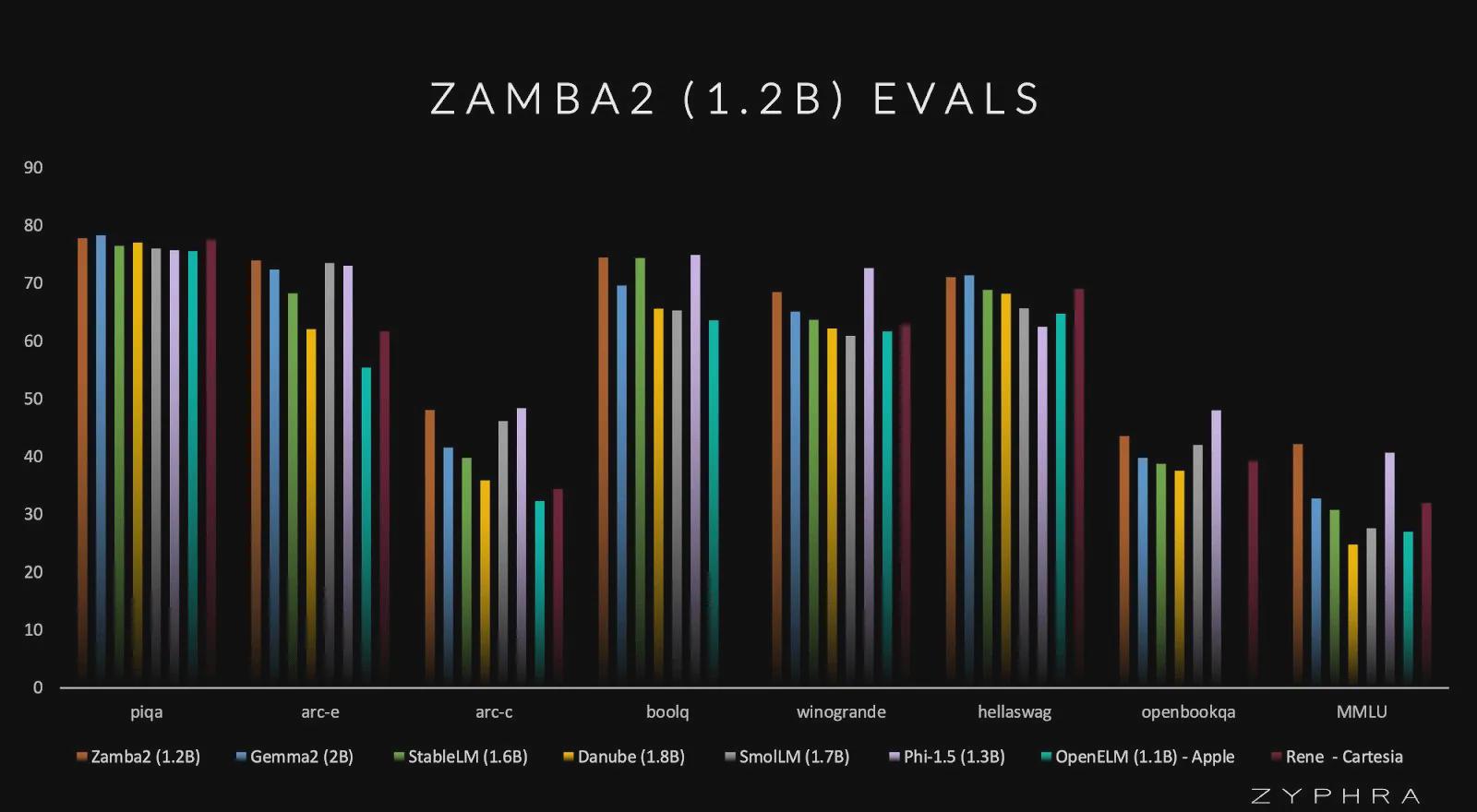

Despite its small size, Zamba2-mini 1.2B performs comparably to models such as Google's Gemma-2B, Hugging Face's SmolLM-1.7B, Apple's OpenELM-1.1B, and Microsoft's Phi-1.5, several of which are larger.

Zamba2-mini's inference performance is particularly notable. Compared with models such as Phi-3 3.8B, its time to first token (the delay between receiving the input and emitting the first output token) is halved, and its memory usage is 27% lower.
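Time to first token is usually measured by timing a streaming generation call until its first token arrives. The sketch below assumes the model is exposed as a Python iterator that yields tokens as they are generated; `fake_stream` is a hypothetical stand-in with artificial delays, not Zamba2-mini or any real inference API.

```python
import time
from typing import Iterable


def time_to_first_token(stream: Iterable[str]) -> tuple[float, list[str]]:
    """Consume a token stream; return (seconds until first token, all tokens)."""
    start = time.perf_counter()
    it = iter(stream)
    try:
        first = next(it)  # blocks until the model emits its first token
    except StopIteration:
        raise ValueError("stream produced no tokens") from None
    ttft = time.perf_counter() - start
    return ttft, [first, *it]  # drain the remaining tokens


def fake_stream(prefill_s: float, per_token_s: float, n: int):
    """Hypothetical stand-in for a streaming model: a prefill delay, then n tokens."""
    time.sleep(prefill_s)  # simulates processing the prompt before the first token
    for i in range(n):
        if i:
            time.sleep(per_token_s)  # simulates decoding each later token
        yield f"tok{i}"


ttft, tokens = time_to_first_token(fake_stream(prefill_s=0.05, per_token_s=0.01, n=3))
print(f"TTFT about {ttft * 1000:.0f} ms, tokens = {tokens}")
```

Because a Python generator does not start running until the first `next()` call, the timer here covers the simulated prefill delay; with a real serving API, where the clock starts (request sent, connection opened) should be stated alongside any TTFT number.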

Zyphra attributes these results mainly to a highly optimized architecture that combines the strengths of different neural network designs, allowing Zamba2-mini 1.2B to retain the output quality of large, dense transformers while running with the compute and memory efficiency of a much smaller model.

One of the key advances of Zamba2-mini compared to its predecessor Zamba1 is the integration of two shared attention layers.
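A toy sketch of what "shared" means here, assuming the simplest reading: each shared attention block has one set of weights that is reused at several depths of the network, and the two shared blocks are used in alternation. The backbone blocks are generic placeholders, and the block sizes, insertion interval, and all names are hypothetical; this is not Zyphra's actual layer layout.

```python
from dataclasses import dataclass


@dataclass
class Block:
    name: str
    num_params: int


def build_schedule(num_backbone: int, every: int, shared: list[Block]) -> list[Block]:
    """Return the order in which blocks are applied during a forward pass.

    After every `every` backbone blocks, one shared attention block is
    inserted, cycling through `shared` (A, B, A, B, ... for two blocks).
    The same Block object is reused, so its weights are stored only once.
    """
    schedule = []
    uses = 0
    for i in range(num_backbone):
        schedule.append(Block(f"backbone_{i}", num_params=1_000))  # hypothetical size
        if (i + 1) % every == 0:
            schedule.append(shared[uses % len(shared)])
            uses += 1
    return schedule


def stored_params(schedule: list[Block]) -> int:
    """Parameters actually stored: each distinct block object is counted once."""
    unique = {id(block): block for block in schedule}
    return sum(block.num_params for block in unique.values())


def applied_params(schedule: list[Block]) -> int:
    """Parameters used per forward pass: shared blocks count every time they run."""
    return sum(block.num_params for block in schedule)


shared = [Block("shared_attn_A", 5_000), Block("shared_attn_B", 5_000)]
schedule = build_schedule(num_backbone=12, every=3, shared=shared)
print([b.name for b in schedule if b.name.startswith("shared")])
# ['shared_attn_A', 'shared_attn_B', 'shared_attn_A', 'shared_attn_B']
print(stored_params(schedule), applied_params(schedule))
# 22000 32000
```

The gap between the two counts is the point of sharing: attention is applied at four depths, but only two blocks' worth of attention weights are stored.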

Using two shared attention layers instead of one improves the model's ability to preserve information at different depths, which raises overall performance. Adding rotary position embeddings to the shared attention layers also gives a slight performance gain, reflecting Zyphra's focus on incremental but meaningful improvements to model design.
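Rotary position embeddings (RoPE) encode a token's position by rotating pairs of query/key dimensions through position-dependent angles, so that the query-key dot product depends only on the tokens' relative offset. The sketch below is a generic, dependency-free illustration of the standard formulation (adjacent dimensions paired, base 10000); it is not taken from Zyphra's code, and some libraries pair the two halves of the vector instead of adjacent dimensions.

```python
import math


def rope(x: list[float], pos: int, base: float = 10000.0) -> list[float]:
    """Rotate each pair (x[2i], x[2i+1]) by the angle pos * base**(-2i/d)."""
    d = len(x)
    if d % 2:
        raise ValueError("vector length must be even")
    out = [0.0] * d
    for i in range(d // 2):
        theta = pos * base ** (-2 * i / d)  # lower pairs rotate faster
        c, s = math.cos(theta), math.sin(theta)
        x0, x1 = x[2 * i], x[2 * i + 1]
        out[2 * i] = x0 * c - x1 * s
        out[2 * i + 1] = x0 * s + x1 * c
    return out


def dot(a: list[float], b: list[float]) -> float:
    return sum(p * q for p, q in zip(a, b))


q = [0.3, -1.2, 0.8, 0.5]
k = [1.0, 0.4, -0.7, 0.2]
# The score depends only on the relative offset (2 here), not on absolute positions.
print(math.isclose(dot(rope(q, 5), rope(k, 3)), dot(rope(q, 12), rope(k, 10)), abs_tol=1e-12))
# True
```

Because each pair is only rotated, vector norms are unchanged; position information enters solely through the relative angle between query and key.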

Zamba2-mini is pre-trained on a massive dataset of three trillion tokens from Zyda and other public sources.

The dataset went through rigorous filtering and multiple iterations to ensure high-quality training data, and the model was then further refined in an annealing phase that trains on 100 billion very-high-quality tokens.
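In LLM training, an "annealing" phase typically means a final stretch in which the learning rate is decayed, often while switching to a higher-quality data mix. The article does not give Zyphra's schedule, so the sketch below only illustrates that general idea under stated assumptions: a constant learning rate over the 3-trillion-token main phase, followed by a linear decay over 100 billion annealing tokens. The sequential ordering, the constant first phase, the linear shape, and the learning-rate values are all hypothetical simplifications.

```python
def lr_at(tokens_seen: int, pretrain_tokens: int, anneal_tokens: int,
          peak_lr: float, final_lr: float) -> float:
    """Hypothetical two-phase schedule: constant LR during main pre-training,
    then a linear decay from peak_lr to final_lr over the annealing tokens."""
    if tokens_seen < pretrain_tokens:
        return peak_lr
    progress = min((tokens_seen - pretrain_tokens) / anneal_tokens, 1.0)
    return peak_lr + (final_lr - peak_lr) * progress


PRETRAIN = 3_000_000_000_000  # 3T tokens (from the article)
ANNEAL = 100_000_000_000      # 100B tokens (from the article)
for seen in (0, PRETRAIN, PRETRAIN + ANNEAL // 2, PRETRAIN + ANNEAL):
    print(seen, lr_at(seen, PRETRAIN, ANNEAL, peak_lr=3e-4, final_lr=3e-5))  # hypothetical LRs
```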

Zyphra has committed to releasing Zamba2-mini as an open-source model under the Apache 2.0 license.
