On August 7, LG AI Research announced EXAONE 3.0, South Korea's first open-source AI model, marking the country's entry into a global AI field dominated by U.S. tech giants and emerging companies in China and the Middle East.

The open-source EXAONE 3.0 model is based on a decoder-only Transformer architecture. It has 7.8B parameters, was trained on 8T tokens, and is a bilingual model for English and Korean.
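To put those figures in perspective, here is a rough back-of-the-envelope sketch. It takes the 7.8B parameter count and the 8T token count from the announcement and estimates how much memory the weights alone would occupy at common numeric precisions, plus the ratio of training tokens to parameters. The function names and the list of precisions are illustrative assumptions, not anything published by LG, and the estimate ignores activations, the KV cache, and framework overhead.

```python
# Figures reported in the announcement.
PARAMS = 7.8e9          # 7.8B parameters
TRAIN_TOKENS = 8e12     # 8T training tokens

# Bytes needed to store one parameter at common precisions.
# Which precisions a given deployment uses is an assumption here.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate size of the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def tokens_per_param(num_tokens: float, num_params: float) -> float:
    """Ratio of training tokens to model parameters."""
    return num_tokens / num_params


if __name__ == "__main__":
    for name, size in BYTES_PER_PARAM.items():
        print(f"{name}: ~{weight_memory_gb(PARAMS, size):.1f} GB of weights")
    print(f"~{tokens_per_param(TRAIN_TOKENS, PARAMS):.0f} training tokens per parameter")
```

Under these assumptions the weights come to roughly 15.6 GB in bf16, and the model saw on the order of a thousand training tokens per parameter.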

"Among the EXAONE 3.0 language model lineup built for various purposes, the 7.8B instruction-tuned model is being open-sourced in advance so that it can be used for research," said an LG press release. "We hope that the release of this model will help AI researchers at home and abroad conduct more meaningful research and help the AI ecosystem move one step forward."

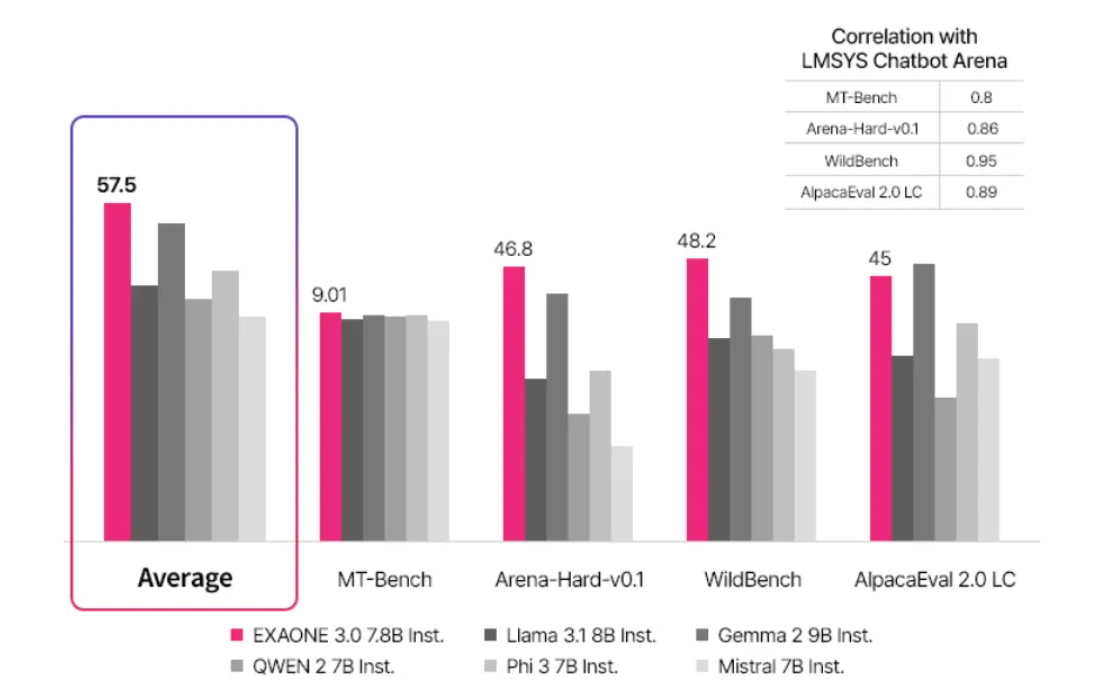

According to LG's official tests, the model's English ability has reached the "world's top level". Its average score on real-world use cases ranks first, surpassing other models such as Llama 3.0 8B and Gemma 2 9B. In mathematics and coding, EXAONE 3.0 also ranked first in average score, and its reasoning ability was strong as well.

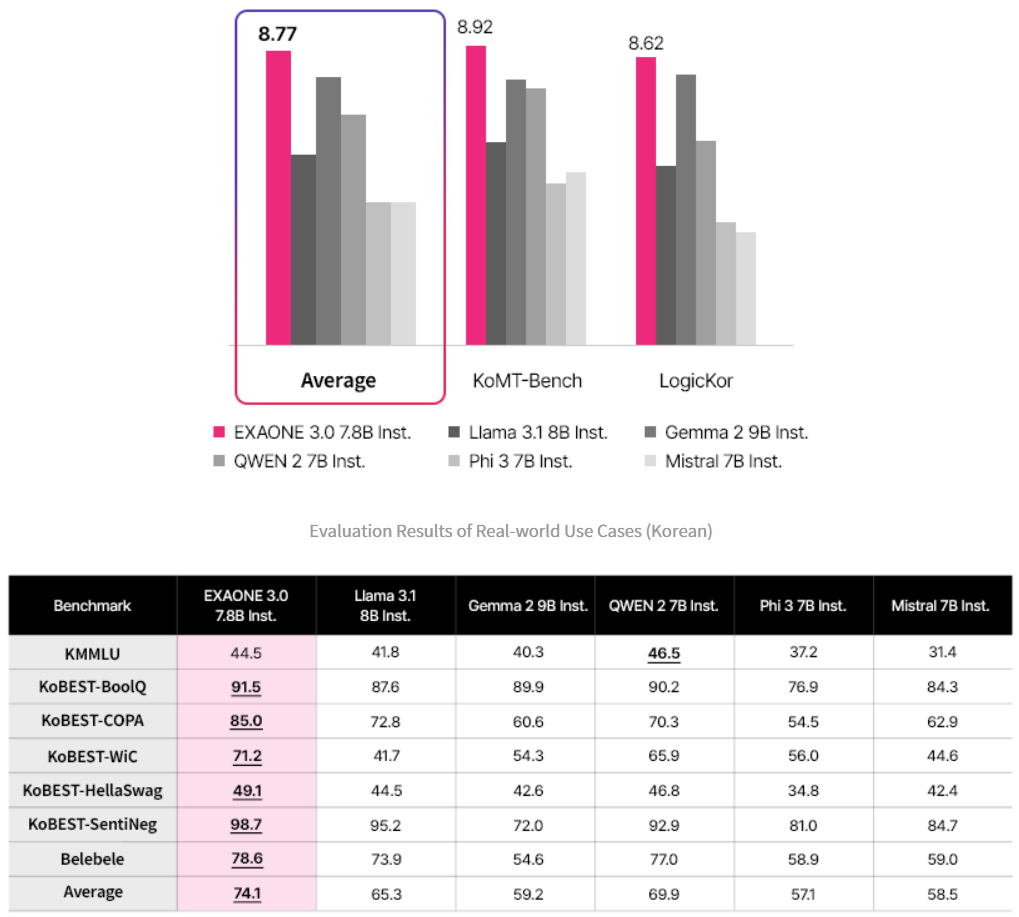

In Korean, EXAONE 3.0 ranks first in average score on both real-world use cases and general benchmarks.

LG claims that EXAONE 3.0 improves on its predecessor: inference time was reduced by 56%, memory usage by 35%, and operating costs by 72%. Compared with the first-released EXAONE 1.0, the cost has been reduced to 6%.

The model has been trained on 60 million cases of professional data related to patents, code, mathematics, and chemistry, and LG plans to expand this to 100 million cases across various fields by the end of the year.

The EXAONE 3.0 model link is as follows:

https://huggingface.co/LGAI-EXAONE/EXAONE-3.0-7.8B-Instruct
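
For readers who want to try the model, the following is a minimal sketch of loading it with the Hugging Face `transformers` library. It assumes `torch`, `transformers`, and `accelerate` are installed, that the repository requires `trust_remote_code=True`, and that enough memory is available for the bf16 weights. The system prompt and the helper names `build_messages` and `generate_reply` are hypothetical, chosen only for illustration; check the model card for the recommended settings.

```python
MODEL_ID = "LGAI-EXAONE/EXAONE-3.0-7.8B-Instruct"  # repository name from the link above


def build_messages(user_prompt: str,
                   system_prompt: str = "You are a helpful assistant.") -> list:
    """Build a chat-style message list (the system prompt is a placeholder)."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def generate_reply(user_prompt: str, max_new_tokens: int = 128) -> str:
    """Download the model and generate one reply. Heavy: needs network and a large GPU."""
    # Imported lazily so the lightweight helper above works without these packages.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        torch_dtype=torch.bfloat16,   # assumption: bf16 weights fit on the device
        trust_remote_code=True,       # assumption: the repo ships custom model code
        device_map="auto",            # requires the `accelerate` package
    )

    # Format the conversation with the tokenizer's chat template.
    input_ids = tokenizer.apply_chat_template(
        build_messages(user_prompt),
        tokenize=True,
        add_generation_prompt=True,
        return_tensors="pt",
    )
    output = model.generate(
        input_ids.to(model.device),
        max_new_tokens=max_new_tokens,
        do_sample=False,              # greedy decoding for reproducible output
    )
    # Decode only the newly generated tokens, not the prompt.
    return tokenizer.decode(output[0][input_ids.shape[-1]:], skip_special_tokens=True)
```

Calling `generate_reply("Explain who you are in Korean and English.")` would download the weights on first use, so it is best run on a machine with a suitable GPU.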