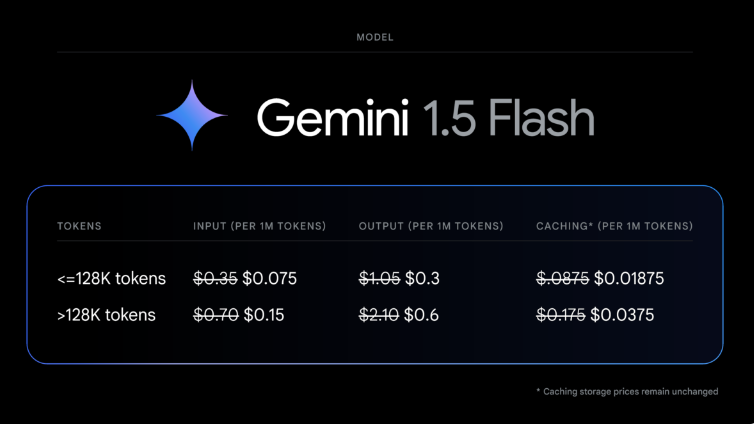

Google has recently made another big move in the AI model price war, announcing that the price of its fast Gemini 1.5 Flash model will be reduced by up to 78%. This is undoubtedly good news for developers. According to Google's announcement, for prompts below 128,000 tokens, the cost of input tokens will drop to $0.075 per million tokens and the cost of output tokens will drop to $0.30 per million tokens. Similar price reductions will also apply to longer prompts and to caching.
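To make the new rates concrete, here is a minimal sketch of a cost estimate for a single request, using only the two prices quoted above. The function name `estimate_cost` and the constants are illustrative and are not part of any Google SDK. The sketch covers only prompts below 128,000 tokens, since the announcement as reported here gives no figures for longer prompts or caching.

```python
# Prices quoted in the article for prompts below 128,000 tokens
# (USD per one million tokens). Names are illustrative only.
INPUT_PRICE_PER_MILLION = 0.075
OUTPUT_PRICE_PER_MILLION = 0.30
SHORT_PROMPT_LIMIT = 128_000


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request at the quoted short-prompt rates."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    if input_tokens >= SHORT_PROMPT_LIMIT:
        # The article gives no figures for longer prompts, so refuse to guess.
        raise ValueError("quoted rates only apply to prompts below 128,000 tokens")
    input_cost = input_tokens * INPUT_PRICE_PER_MILLION
    output_cost = output_tokens * OUTPUT_PRICE_PER_MILLION
    return (input_cost + output_cost) / 1_000_000


if __name__ == "__main__":
    # A 100,000-token prompt that produces a 10,000-token response.
    print(f"${estimate_cost(100_000, 10_000):.4f}")
```

At these rates, a 100,000-token prompt with a 10,000-token response costs roughly one cent: $0.0075 for input plus $0.0030 for output.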

Gemini 1.5 Flash is widely used in scenarios that require fast responses and low latency, such as summarization, classification, and multimodal understanding. Google's Gemini API and AI Studio also now support improved PDF understanding, which can analyze PDFs containing visual content such as charts and images, demonstrating the model's multimodal processing capabilities.

In addition, Google has expanded language support for the Gemini 1.5 Pro and Flash models, which now cover more than 100 languages. Developers in different regions can therefore work in a language they are familiar with, avoiding responses being blocked because an unsupported language was used. Google has also opened up fine-tuning of Gemini 1.5 Flash to all developers. Fine-tuning lets developers customize the base model with additional data for a specific task and thereby improve its performance on that task. A tuned model needs less context in each prompt, which reduces latency and cost while improving accuracy.

Google's price cut comes shortly after OpenAI announced a reduction of up to 50% in its GPT-4o API access fees. Even with the high cost of developing and operating AI models, price competition in the industry has clearly become fierce.