According to official news from Tsinghua University, the research group of Professor Fang Lu from the Department of Electronic Engineering and the research group of Academician Dai Qionghai from the Department of Automation have taken a different approach: they developed the first fully forward intelligent optical computing training architecture and the Taichi-II optical training chip, achieving efficient and accurate training of large-scale neural networks in optical computing systems.

The research results, titled "Fully Forward Mode Training for Optical Neural Networks," were published online in the journal Nature on the evening of August 7, Beijing time.

According to the published information, the Department of Electronic Engineering of Tsinghua University is the first affiliation of the paper, and Professor Fang Lu and Professor Dai Qionghai are the corresponding authors. Xue Zhiwei, a doctoral student in the Department of Electronic Engineering, and Zhou Tianquan, a postdoctoral fellow, are co-first authors. Xu Zhihao, a doctoral student in the Department of Electronic Engineering, and Dr. Yu Shaoliang from the Zhijiang Laboratory also participated in this work. The project was supported by the Ministry of Science and Technology, the National Natural Science Foundation of China, the Beijing National Research Center for Information Science and Technology, and the Tsinghua University-Zhijiang Laboratory Joint Research Center.

A Nature reviewer noted in the review that "the ideas proposed in this paper are very novel, and the training process of this type of optical neural network (ONN) is unprecedented. The proposed method is not only effective but also easy to implement. Therefore, it is expected to become a widely adopted tool for training optical neural networks and other optical computing systems."

According to Tsinghua University, intelligent optical computing, with its high computing power and low power consumption, has in recent years gradually taken the stage in the development of computing power. The general-purpose intelligent optical computing chip "Taichi" was the first to push optical computing from proof of principle to large-scale experimental application, with a system-level energy efficiency of 160 TOPS/W. However, existing optical neural network training relies heavily on GPUs for offline modeling and requires precise alignment of the physical system.

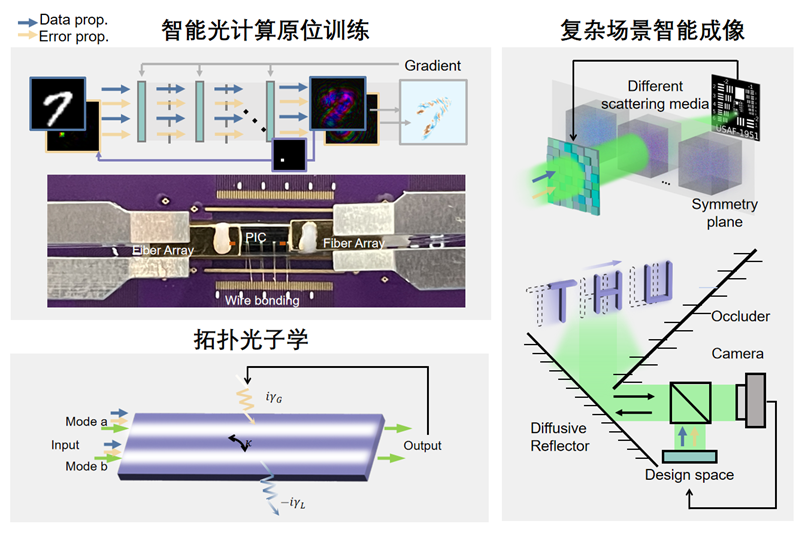

According to Xue Zhiwei, the first author of the paper and a doctoral student in the Department of Electronic Engineering, the Taichi-II architecture transforms the backpropagation step of gradient descent into forward propagation through the optical system, so an optical neural network can be trained using two forward propagations: one of the data and one of the error. The two forward propagations are naturally aligned, which ensures that the physical gradient is computed accurately. Because no backpropagation is needed, the Taichi-II architecture no longer relies on electronic computing for offline modeling and training, and accurate, efficient optical training of large-scale neural networks has finally been achieved.
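The idea of replacing the backward pass with a second forward pass can be illustrated with a small numerical toy. The sketch below is not the Taichi-II implementation: it assumes a real-valued linear system whose transfer matrix `W` is symmetric (an assumption of this example, standing in for a physical system in which forward and backward propagation are equivalent), and a trainable element-wise modulation `mask`. The names `forward`, `two_pass_gradient`, `W`, and `mask` are all hypothetical. Under that symmetry assumption, the gradient that backpropagation would obtain with the transposed operator can instead be obtained by sending the error forward through the same system.

```python
import numpy as np

def forward(W, mask, x):
    """One forward propagation: modulate the input, then pass it through the system."""
    return W @ (mask * x)

def two_pass_gradient(W, mask, x, target):
    """Gradient of 0.5 * ||forward(W, mask, x) - target||^2 with respect to mask,
    computed with two forward propagations and no transposed (backward) pass."""
    error = forward(W, mask, x) - target   # pass 1: propagate the data
    error_field = W @ error                # pass 2: propagate the error forward
    return x * error_field

rng = np.random.default_rng(0)
n = 6
A = rng.normal(size=(n, n))
W = (A + A.T) / 2            # assumed symmetric system, so W.T equals W
x = rng.normal(size=n)
target = rng.normal(size=n)
mask = rng.normal(size=n)

grad_two_pass = two_pass_gradient(W, mask, x, target)
# Reference: conventional backpropagation uses the transposed operator W.T.
grad_backprop = x * (W.T @ (forward(W, mask, x) - target))
print(np.allclose(grad_two_pass, grad_backprop))
```

In this toy the two gradients agree only because `W` is symmetric; for a general matrix the second forward pass would not reproduce the backpropagated gradient.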

The paper shows that Taichi-II can train a variety of optical systems and performs well across a range of tasks:

- Large-scale learning: It resolves the trade-off between computational accuracy and efficiency, increasing the training speed of optical networks with millions of parameters by an order of magnitude. The accuracy of representative intelligent classification tasks increased by 40%.

- Intelligent imaging of complex scenes: In low-light environments (sub-photon light intensity per pixel), all-optical processing with an energy efficiency of 5.40×10^6 TOPS/W is achieved, improving system-level energy efficiency by 6 orders of magnitude. In non-line-of-sight scenarios, intelligent imaging at a kilohertz frame rate is achieved, improving efficiency by 2 orders of magnitude.

- Topological photonics: Non-Hermitian exceptional points (singularities) can be searched for automatically without relying on any model priors, offering a new approach to the efficient and accurate analysis of complex topological systems.

Paper link:

https://www.nature.com/articles/s41586-024-07687-4