When drawing with Stable Diffusion tools, we often run into the following kinds of problems:

(1) After many attempts, you finally get a picture you are satisfied with, but a few parts of it have flaws, so your overall satisfaction only reaches about 95%.

(2) After many attempts, you finally get a picture you are very satisfied with and want several more with a similar look. If you simply regenerate, SD uses a random seed by default, so the elements of the new pictures may not be what you want.

The two scenarios above are actually the same problem: we want to make local adjustments to a generated image, repair its flaws, and finally produce multiple images with the desired effect.

Defects in SD-generated pictures can of course be repaired with traditional Photoshop (PS) techniques, but since we are already using SD, we would like to find a solution within SD itself.

I have been thinking about which SD methods can solve this kind of problem. It is a relatively open question, and I personally think no single method fits every scenario, so this article is only a discussion of methods. Readers are welcome to leave comments to exchange ideas.

Today I will introduce a common method: fixing the random number seed. I will write about other methods later.

1. Random number seed (Seed)

The random number seed is equivalent to the DNA of the image and determines the content of the picture.

Normally we do not need to specify a seed and simply keep the default value of -1. A seed is then chosen at random for every generation, so each generated image is different.
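The "-1 means random" convention can be sketched in a few lines of Python. This is only an illustration: `resolve_seed` is a hypothetical helper, and the 32-bit range is an assumption, not the Web UI's actual code.

```python
import random

def resolve_seed(seed: int) -> int:
    """Return the seed to use; -1 means 'pick a fresh random seed'."""
    if seed == -1:
        # Assumed range for illustration: any non-negative 32-bit integer.
        return random.randrange(2**32)
    return seed

print(resolve_seed(1179886945))  # a fixed seed is passed through unchanged
print(resolve_seed(-1))          # a freshly drawn value on each call
```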

Under the hood, the random number seed determines the noise map that diffusion starts from, and each seed value corresponds to a different noise map. The essence of Stable Diffusion is that it "denoises" this map step by step until it looks like something we are familiar with.

If you use the same denoising (sampling) method, the same settings for the prompt, resolution, and so on, and the same seed, the "denoising" process is the same, so you always get the same image.
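The idea that one seed always maps to one noise map can be shown with a toy sketch. It uses Python's standard `random` module as a stand-in for the real generator, and it assumes a 4-channel noise grid at 1/8 of the image resolution; `initial_noise` is a hypothetical name, not an SD function.

```python
import random

def initial_noise(seed: int, width: int, height: int, channels: int = 4):
    """Toy stand-in for the starting noise: one Gaussian value per grid cell."""
    rng = random.Random(seed)
    rows, cols = height // 8, width // 8  # assumed 8x downscaled grid
    return [[[rng.gauss(0.0, 1.0) for _ in range(cols)]
             for _ in range(rows)]
            for _ in range(channels)]

a = initial_noise(1179886945, 512, 768)
b = initial_noise(1179886945, 512, 768)
c = initial_noise(1179886946, 512, 768)

print(a == b)  # same seed and size: the noise is reproduced exactly
print(a == c)  # a different seed gives a different noise map
```

Because the denoising starts from this noise, reproducing the noise is the first requirement for reproducing the image.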

In the SD Web UI, the seed is set in the random number seed (Seed) field; the default value is -1, which means a random seed.

2. Which parameters in SD affect the result of a random number seed

In Stable Diffusion, the following parameter settings affect what a given random number seed produces (summarized from a reply by Doubao):

1. Sampling Method: Different sampling methods may change the effect of a random number seed. For example, common sampling methods such as Euler and Euler a differ in how they handle random numbers and image generation.

2. Sampling Steps: The number of sampling steps changes the number of iterations in the generation process, which in turn affects how the random number seed works and the final generated image.

3. Prompt: The specific content of the prompt and the accuracy of its description affect how the model interprets and applies the random number seed.

4. Model version and training data: Different versions of Stable Diffusion models and the differences in the training data they are based on may cause the same random number seed to produce different results.

5. Control Parameters: If control parameters such as ControlNet are used, they will also interact with the random number seed to affect the characteristics and style of the generated images.

6. Image Size: The image size setting will affect the calculation and distribution of random numbers during the generation process, thus affecting the effect of the random number seed.

7. The value of the random number seed itself: Different values will naturally lead to completely different random generation results.

In short, in Stable Diffusion, multiple parameters interact with each other and jointly determine the final impact of the random number seed on the generated image. It is necessary to continuously experiment and adjust to find the parameter combination that best meets your needs.
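The image-size item in the list above can be illustrated with a toy example. It assumes the noise is drawn as one sequence of numbers and laid out row by row on a grid at 1/8 of the image resolution; `noise_grid` is a hypothetical helper, not an SD function.

```python
import random

def noise_grid(seed: int, width: int, height: int):
    """Toy single-channel noise grid at an assumed 1/8 resolution."""
    rng = random.Random(seed)
    rows, cols = height // 8, width // 8
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]

seed = 1179886945
portrait = noise_grid(seed, 512, 768)  # 96 rows x 64 columns
square = noise_grid(seed, 768, 768)    # 96 rows x 96 columns

# The first row starts with the same numbers in both grids...
print(portrait[0][:3] == square[0][:3])
# ...but the second row holds different values, because the wider grid
# consumed more random numbers before wrapping to the next row.
print(portrait[1][:3] == square[1][:3])
```

Under these assumptions the same seed places its random values at different positions once the width changes, which is one way to picture why a new size can give a very different composition.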

3. Testing image fine-tuning with a fixed random number seed

The random number seed gives us both creative randomness and, when fixed, the closest possible reproduction of an image. Usually we leave the seed at -1 when generating pictures. Once repeated random generation produces a satisfactory picture, we can set the seed to that picture's seed, and subsequent pictures will be generated from the same seed. In this way, we can use the fixed seed to improve the picture quality or fine-tune the picture content while still generating the "same" image.

Let's do a comparative test to verify the effect.

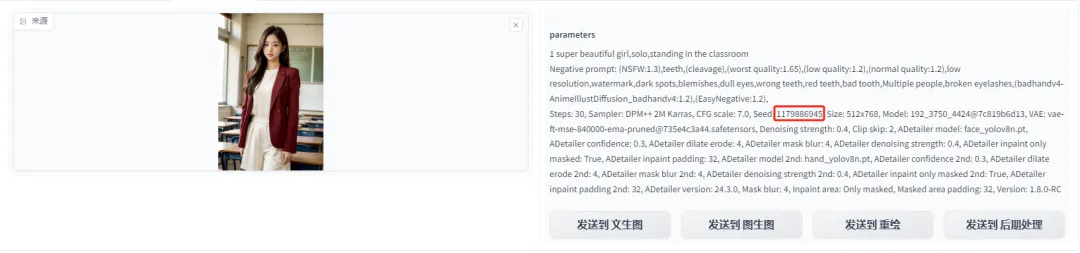

Common parameter settings

- Base model (checkpoint): majicMIX realistic 麦橘写实_v7.safetensors

Model download address (the network disk link is also given at the end of the article):

LiblibAI: https://www.liblib.art/modelinfo/bced6d7ec1460ac7b923fc5bc95c4540

- VAE model: vae-ft-mse-840000-ema-pruned.safetensors

- Positive prompt: 1 super beautiful girl, solo, standing in the classroom,

- Negative prompt: (NSFW:1.3),teeth,(cleavage),(worst quality:1.65),(low quality:1.2),(normal quality:1.2),low resolution,watermark,dark spots,blemishes,dull eyes ,wrong teeth,red teeth,bad tooth,Multiple people,broken eyelashes,(badhandv4-AnimeIllustDiffusion_badhandv4:1.2),(EasyNegative:1.2),

- Sampling method: DPM++ 2M Karras

- Sampling steps: 30

- CFG Scale (prompt relevance): 7

- Image width and height: 512*768

- Enable the After Detailer (ADetailer) plug-in and select face_yolov8n.pt and hand_yolov8n.pt as the models.
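For readers who prefer scripting to clicking, the settings above could be written down as a request payload. This is a sketch that assumes the field names of the AUTOMATIC1111 Web UI's txt2img API; it only builds and prints the payload and sends nothing. Newer Web UI versions may split the sampler and the scheduler into separate fields, and the checkpoint, VAE, and ADetailer are assumed to be selected in the UI.

```python
import json

# Assumed field names: those of the AUTOMATIC1111 Web UI txt2img API.
payload = {
    "prompt": "1 super beautiful girl, solo, standing in the classroom,",
    # Shortened here for readability; use the full negative prompt listed above.
    "negative_prompt": "(NSFW:1.3),teeth,(cleavage),(worst quality:1.65),(low quality:1.2)",
    "sampler_name": "DPM++ 2M Karras",
    "steps": 30,
    "cfg_scale": 7,
    "width": 512,
    "height": 768,
    "seed": -1,  # -1 = random; replace with a fixed value to reproduce an image
}

print(json.dumps(payload, indent=2))
```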

We generate a batch at random and pick one picture.

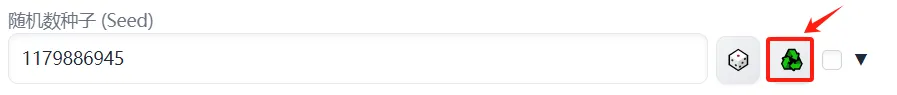

The random number seed is: 1179886945.

In the SD Web UI, we can click the small icon next to the random number seed field (marked with a red box in the screenshot) to read the seed of the generated image and set it as the current seed.

We now fix the random number seed and adjust, one at a time, the parameters that affect its result to see how the generated image changes.
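The seed can also be read from the generation parameters text that the Web UI shows under a generated image. The sketch below assumes the usual comma-separated "Key: value" layout of that text; `parse_seed` is a hypothetical helper and the sample string is illustrative, not copied from the image above.

```python
import re

def parse_seed(infotext: str):
    """Return the seed found in a generation-parameters string, or None."""
    match = re.search(r"\bSeed:\s*(\d+)", infotext)
    return int(match.group(1)) if match else None

# Illustrative parameters line (assumed format).
sample = "Steps: 30, Sampler: DPM++ 2M Karras, CFG scale: 7, Seed: 1179886945, Size: 512x768"
print(parse_seed(sample))
```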

(1) Adjust the prompt words

Add a keyword for the character's expression to the positive prompt.

Comparison images (seed fixed, one expression keyword added): smiling, crying (cry), angry, excited.

From the above, we can see that with the random number seed fixed, adding an appropriate prompt keyword leaves the overall style and main elements of the image largely unchanged; only the character's expression changes.
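The prompt test above can be organized as a small batch in which only the prompt changes. This is a minimal sketch: `build_variants` is a hypothetical helper, and the resulting dictionaries would still have to be passed to whatever tool actually generates the images.

```python
BASE_PROMPT = "1 super beautiful girl, solo, standing in the classroom,"
FIXED_SEED = 1179886945
EXPRESSIONS = ["smiling", "crying", "angry", "excited"]

def build_variants(base_prompt, seed, keywords):
    """Return one settings dict per keyword; only the prompt changes."""
    return [
        {"prompt": f"{base_prompt} {keyword},", "seed": seed}
        for keyword in keywords
    ]

for job in build_variants(BASE_PROMPT, FIXED_SEED, EXPRESSIONS):
    print(job["seed"], job["prompt"])
```

Keeping every other setting identical makes it easier to attribute any change in the picture to the added keyword.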

(2) Adjust the sampling method

Comparison images (seed fixed): DPM++ 2M Karras, Euler.

From the above, we can see that changing the sampling method has a relatively large impact on the main elements of the image, which shows that the sampling method strongly affects what a given seed produces.

(3) Adjust the sampling steps

Comparison images (seed fixed): 30 sampling steps, 40 sampling steps.

From the above, we can see that with a fixed seed, changing the number of sampling steps has very little effect on the overall image. This is easy to understand: both runs start from the same noise and follow essentially the same denoising path, and 40 steps only divide that path more finely than 30 steps do. The style and main elements are already settled at 30 steps, so the extra steps change little. Of course, this assumes the step count stays within a reasonable range; samplers that inject fresh noise at every step, such as Euler a, may behave differently.
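A loose numerical analogy may help here. The sketch below is not a diffusion sampler: it integrates the simple equation dx/dt = -x with plain Euler steps from the same starting value and compares the end points for 30 and 40 steps. The equation and the `euler_decay` helper are assumptions chosen only for illustration.

```python
import random

def euler_decay(x0: float, steps: int) -> float:
    """Integrate dx/dt = -x from t=0 to t=1 with `steps` equal Euler steps."""
    x, dt = x0, 1.0 / steps
    for _ in range(steps):
        x += dt * (-x)
    return x

x0 = random.Random(1179886945).gauss(0.0, 1.0)  # same start for both runs
x30 = euler_decay(x0, 30)
x40 = euler_decay(x0, 40)

# Relative gap between the two end points; it is small for these step counts.
print(abs(x30 - x40) / abs(x0))
```

Both runs land very close to each other because they approximate the same path from the same start, which mirrors the small visual difference between the 30-step and 40-step images.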

(4) Adjust the width and height of the image

Comparison images (seed fixed): image size 512*768, image size 768*768.

From the above, we can see that with a fixed seed, changing the image width and height has a relatively large impact on the main elements of the image. This shows that the image size strongly affects what a given seed produces.

From the above comparisons, we can see that if you want to fine-tune an image, the best approach is to add prompt keywords or adjust the number of sampling steps. Of course, the examples listed here are not comprehensive. Interested readers can try the same tests with pictures of different styles. The main purpose here is to offer ideas for discussion.

In addition, fine-tuning itself involves randomness, so this approach is not guaranteed to produce a noticeable improvement. It is recommended that you try more, compare more, and experiment more in actual use.

Okay, that’s all for today’s sharing. I hope that what I shared today will be helpful to you.

The model is available on the network disk for anyone interested:

https://pan.quark.cn/s/b3df771404e2