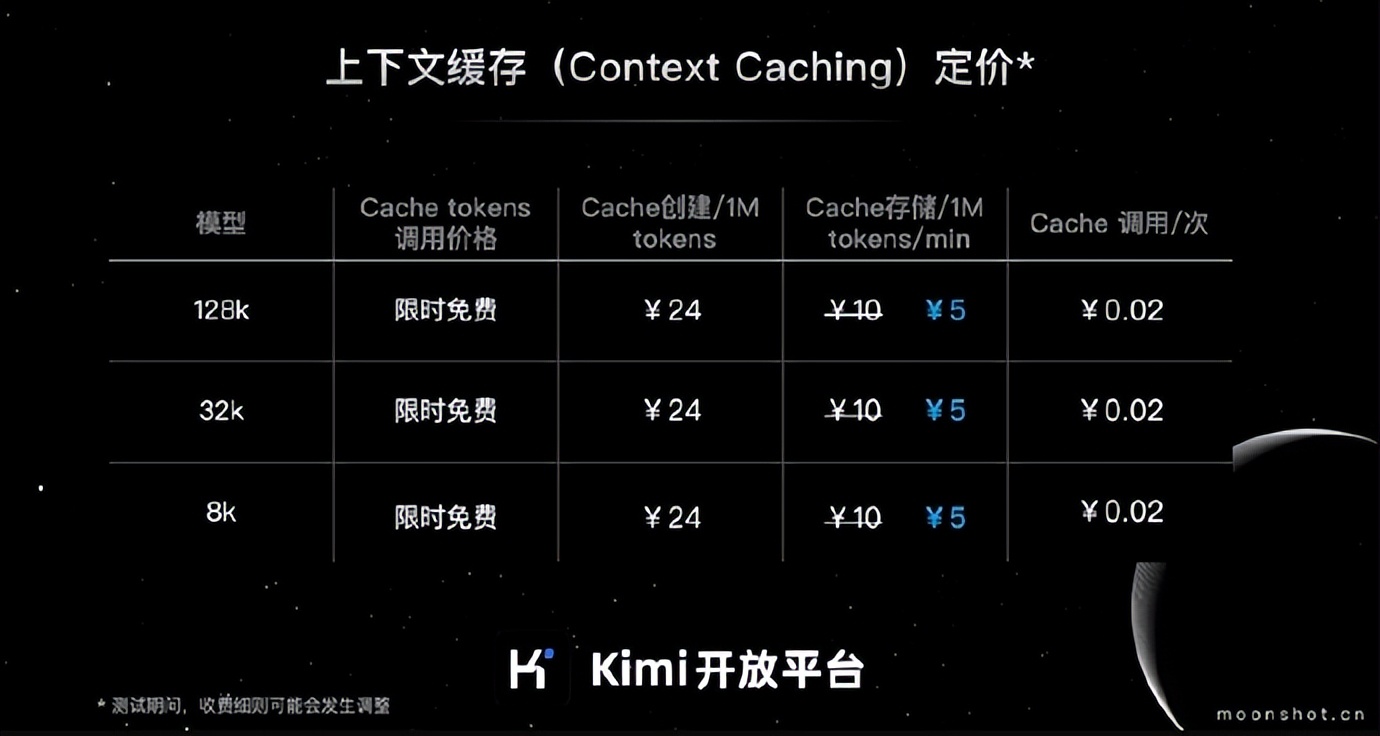

AI unicorn Moonshot AI announced today that the storage cost of Context Caching on the Kimi open platform has been cut by 50%, from 10 yuan / 1M tokens / min to 5 yuan / 1M tokens / min, effective immediately.

On July 1, the Kimi open platform opened its Context Caching feature to public beta. According to the company, with API prices unchanged, the technology can reduce developers' cost of using its flagship long-context models by up to 90% and improve model response speed.

IT Home has compiled the details of the Kimi open platform's Context Caching public beta as follows:

Technical overview

Context caching is a data management technique that lets a system pre-store large amounts of data or information that will be requested frequently. When a user requests the same information again, the system serves it directly from the cache instead of recomputing it or retrieving it from the original data source.
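The idea can be illustrated with a minimal Python sketch. This is a generic, hypothetical illustration, not Kimi's implementation: `expensive_prefill` stands in for the costly work of processing a long context, a request counts as a hit only when it begins with the cached messages and the entry is still within its lifetime, and only the remaining messages would need fresh computation. The class and function names are assumptions made for this example.

```python
from dataclasses import dataclass, field

PREFILL_CALLS = 0


def expensive_prefill(prefix):
    """Hypothetical stand-in for processing a long context with the model."""
    global PREFILL_CALLS
    PREFILL_CALLS += 1
    return f"state-for-{len(prefix)}-messages"


@dataclass
class CacheEntry:
    state: str         # precomputed result for the cached prefix
    expires_at: float  # time after which the entry is no longer alive


@dataclass
class ContextCache:
    ttl_seconds: float
    entries: dict = field(default_factory=dict)
    hits: int = 0
    misses: int = 0

    def create(self, prefix, now):
        """Precompute the shared context once and keep it alive for ttl_seconds."""
        prefix = tuple(prefix)
        self.entries[prefix] = CacheEntry(expensive_prefill(prefix), now + self.ttl_seconds)

    def lookup(self, messages, now):
        """Return (cached_state, remaining_messages); only the remainder needs new work."""
        messages = tuple(messages)
        for prefix, entry in self.entries.items():
            # Hit only if the entry is still alive and the request starts with the cached prefix.
            if entry.expires_at > now and messages[: len(prefix)] == prefix:
                self.hits += 1
                return entry.state, messages[len(prefix):]
        self.misses += 1
        return None, messages


cache = ContextCache(ttl_seconds=300)
docs = ["<system prompt>", "<long fixed document>"]
cache.create(docs, now=0)

state, rest = cache.lookup(docs + ["What was Q2 revenue?"], now=60)  # hit
state2, rest2 = cache.lookup(docs + ["Who is the CFO?"], now=120)    # hit
state3, rest3 = cache.lookup(docs + ["Any risks?"], now=600)         # expired, so a miss
print(cache.hits, cache.misses, PREFILL_CALLS)  # 2 1 1
```

Three requests share the same fixed prefix: the first two reuse the precomputed state, while the third arrives after the entry has expired and is counted as a miss, so the long context is processed only once.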

Applicable scenarios

Context caching suits scenarios with frequent requests that repeatedly reference a large initial context; it lowers the cost of using long-context models and improves efficiency. According to the company, it can cut costs by up to 90% and first-token latency by 83%. Applicable business scenarios include:

- Q&A bots with a large amount of preset content, such as the Kimi API helper

- Frequent queries against a fixed set of documents, such as Q&A tools for listed-company disclosures

- Periodic analysis of static codebases or knowledge bases, such as various Copilot agents

- Viral AI apps with huge bursts of instantaneous traffic, such as Coax Simulator and LLM Riddles

- Agent-style applications with complex interaction rules, and so on

Billing details

Context Caching billing consists of three parts:

Cache creation costs

- Calling the Cache creation interface: once a Cache is created successfully, you are billed for the actual number of tokens it contains. 24 yuan / M tokens

Cache storage costs

- Storage is billed per minute for as long as the Cache remains alive. Launch price: 10 yuan / M tokens / minute.
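To make the per-minute storage charge concrete, here is a worked example with hypothetical numbers: a cache of N = 100,000 tokens (0.1 M tokens) kept alive for t = 60 minutes at rate r, assuming the charge scales linearly with both cache size and lifetime as the description implies.

```latex
C_{\text{storage}} = N \times t \times r
  = 0.1\ \text{M tokens} \times 60\ \text{min} \times 10\ \tfrac{\text{yuan}}{\text{M tokens} \cdot \text{min}}
  = 60\ \text{yuan}
```

At the reduced rate of 5 yuan / M tokens / minute, the same cache would cost 0.1 × 60 × 5 = 30 yuan, so the storage bill for a long-lived cache grows directly with how long it is kept alive.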

Cache call charges

- Incremental tokens in Cache calls: billed at the standard price of the model used

- Per-call charge: while a Cache is alive, if a request sent through the chat interface matches a successfully created Cache, each matched call is charged a flat fee. 0.02 yuan / call
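A minimal calculator sketch that combines the three billing parts, assuming each is linear as described: creation is charged once on the cached tokens, storage per M tokens per minute of lifetime, and each call pays for its incremental tokens plus the flat 0.02 yuan fee. The model price for incremental tokens depends on the model; the 60 yuan / M tokens below is a placeholder assumption, as are the token counts and call volume. This is an illustration, not an official pricing tool.

```python
def context_cache_cost(
    cache_tokens: int,
    alive_minutes: float,
    calls: int,
    incremental_tokens_per_call: int,
    model_price_per_m: float,
    creation_price_per_m: float = 24.0,     # yuan per M cached tokens (creation)
    storage_price_per_m_min: float = 10.0,  # yuan per M tokens per minute (launch rate)
    price_per_call: float = 0.02,           # yuan per matched cache call
) -> dict:
    """Estimate Context Caching charges, assuming each part is billed linearly."""
    per_m = 1_000_000
    creation = cache_tokens / per_m * creation_price_per_m
    storage = cache_tokens / per_m * alive_minutes * storage_price_per_m_min
    # Each call pays for its new (incremental) tokens at the model's price plus a flat fee.
    call_charges = calls * (incremental_tokens_per_call / per_m * model_price_per_m + price_per_call)
    return {
        "creation": creation,
        "storage": storage,
        "calls": call_charges,
        "total": creation + storage + call_charges,
    }


# Hypothetical workload: 100k-token cache, alive 60 minutes, 500 questions of ~1k new tokens each.
# 60 yuan / M tokens is a placeholder model price, not a quoted rate.
bill = context_cache_cost(100_000, 60, 500, 1_000, model_price_per_m=60.0)
for part, amount in bill.items():
    print(f"{part}: {amount:.2f} yuan")
# creation: 2.40 yuan, storage: 60.00 yuan, calls: 40.00 yuan, total: 102.40 yuan
```

Passing `storage_price_per_m_min=5.0` for the reduced rate halves the storage line to 30.00 yuan. Whether storage is rounded up to whole minutes, and exactly how incremental tokens are counted, are not specified here, so treat the output as a rough estimate.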

Public beta period and eligibility

- Beta period: three months from launch; prices may be adjusted at any time during the beta.

- Eligibility: during the beta, Context Caching is available to Tier 5 users first; other users will gain access later.