Scenarios from the sci-fi movie *Her* seem to be coming true. GPT-4o's voice feature has finally begun a staged (gray-scale) rollout: some ChatGPT Plus users have already had a sneak peek at this new capability, which lets ChatGPT tell jokes, imitate a purring cat, and even act as a "second language coach" to help users practice speaking.

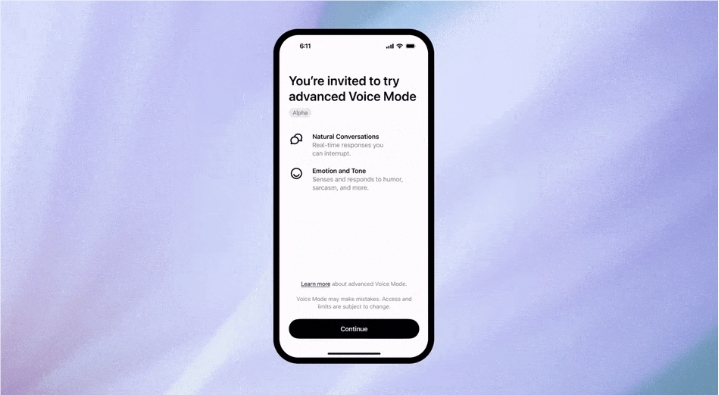

GPT-4o's voice mode delivers a more natural, real-time conversation experience. Users can interrupt the AI at any time, and it can even sense and respond to the user's emotions. The feature is expected to reach all ChatGPT Plus users this fall. What's even more exciting is that video and screen sharing are also expected soon, allowing users to communicate with ChatGPT "face-to-face".

GPT-4o's output capacity has also grown dramatically: the maximum number of output tokens per request has jumped from 4,000 to 64,000, roughly the length of four full-length movie scripts in a single response. OpenAI quietly rolled out this experimental model, gpt-4o-64k-output-alpha, on its official website.

To ensure safety and quality, OpenAI has spent the past few months rigorously testing the GPT-4o voice feature. Working with more than 100 red teamers, it tested 45 languages and trained the model to speak only in four preset voices to protect user privacy. Content filtering was another focus: the team took steps to block the generation of violent and copyrighted content.

Users online have tested GPT-4o's voice mode with impressive results. Some found that it answers questions quickly, with almost no delay; some used it to imitate different voices and accents; others had it act as a soccer commentator or even tell stories vividly in Chinese. These examples show how capable GPT-4o is at speech recognition and generation.

It is worth noting that although OpenAI says the video and screen sharing features will launch later, some users have already tried them. For example, one user showed ChatGPT his new cat's bed; after seeing it, ChatGPT remarked that "it must be very cozy" and asked how the cat was doing.

In addition, GPT-4o's long-output feature has quietly gone live. OpenAI announced that an alpha version of GPT-4o is available to testers, supporting up to 64K output tokens per request, equivalent to a 200-page novel. The feature was introduced in response to user demand for longer outputs.

However, longer outputs also mean higher compute costs and higher prices. GPT-4o Long Output is priced at $6 per million input tokens and $18 per million output tokens, an increase over the previous model. Nonetheless, some researchers believe Long Output is mainly suited to use cases such as data transformation, and that it is very helpful for tasks such as writing code and improving writing.
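To put these prices in concrete terms, here is a minimal Python sketch that estimates the cost of a single request. It assumes simple linear per-token billing at the $6 and $18 per-million rates quoted above; the function name `estimate_cost` and the 2,000-token prompt are hypothetical illustrations, and actual billing may differ.

```python
# Assumed prices, taken from the rates quoted in this article (USD per 1M tokens).
# Check OpenAI's official pricing page before relying on them.
INPUT_PRICE_PER_MILLION = 6.00
OUTPUT_PRICE_PER_MILLION = 18.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Hypothetical helper: estimated USD cost of one request under linear per-token pricing."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_cost = input_tokens * INPUT_PRICE_PER_MILLION / 1_000_000
    output_cost = output_tokens * OUTPUT_PRICE_PER_MILLION / 1_000_000
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical request: a 2,000-token prompt that uses the full 64,000-token output budget.
    cost = estimate_cost(2_000, 64_000)
    print(f"Estimated cost: ${cost:.4f}")  # prints "Estimated cost: $1.1640"
```

Under these assumptions, filling the entire 64,000-token output budget costs about $1.15 in output tokens alone, so the higher rate matters mainly for genuinely long generations.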

Overall, GPT-4o's voice function and long-output capability should give users a richer and more convenient interactive experience. As the technology continues to advance, there is good reason to expect AI to demonstrate its unique value in more fields.