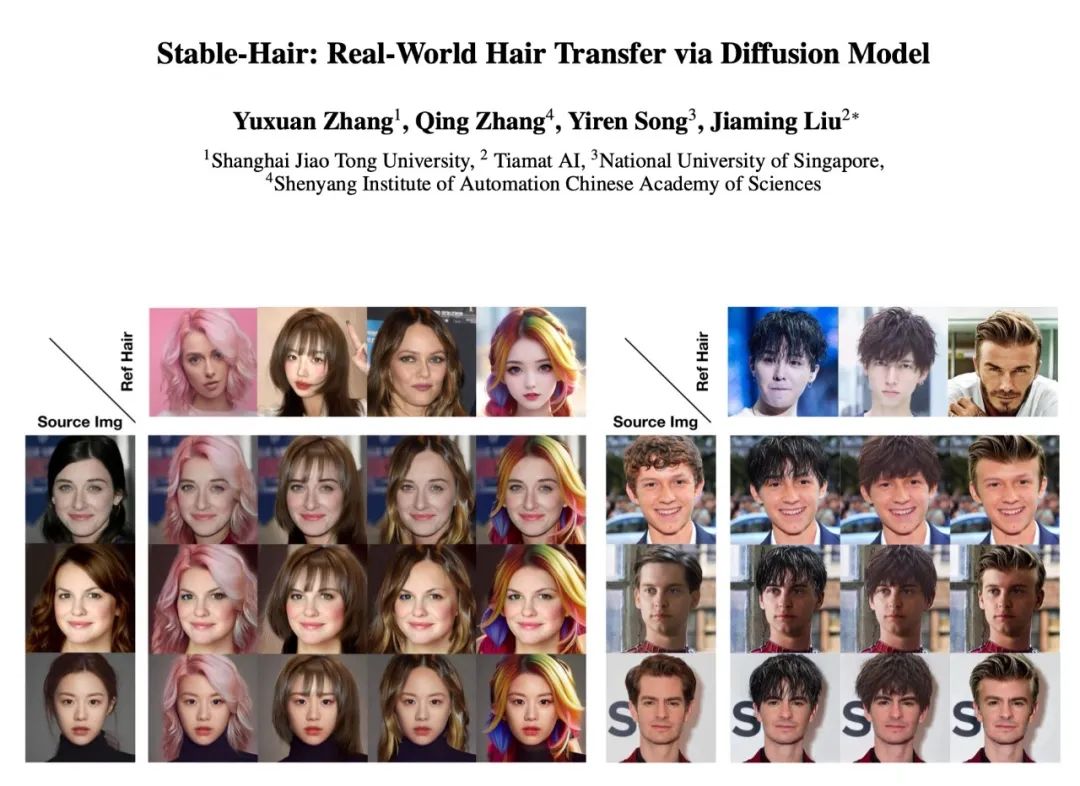

Most of the AI technologies released recently center on faces and body poses, and my own focus has mainly been on projects such as face realism and outfit changing. After seeing this project today, I realized that hair matters just as much, so I want to recommend it to everyone.

This AI hair-changing technology, Stable-Hair, is a diffusion-model-based framework jointly developed by Shanghai Jiao Tong University and Tuige Digital (Backspace Digital). You may not be familiar with Backspace Digital: it was co-founded by the Tiamat team, incubated at ShanghaiTech University, together with senior industry practitioners.

With this technology, you gain much finer control over the hair when generating portraits.

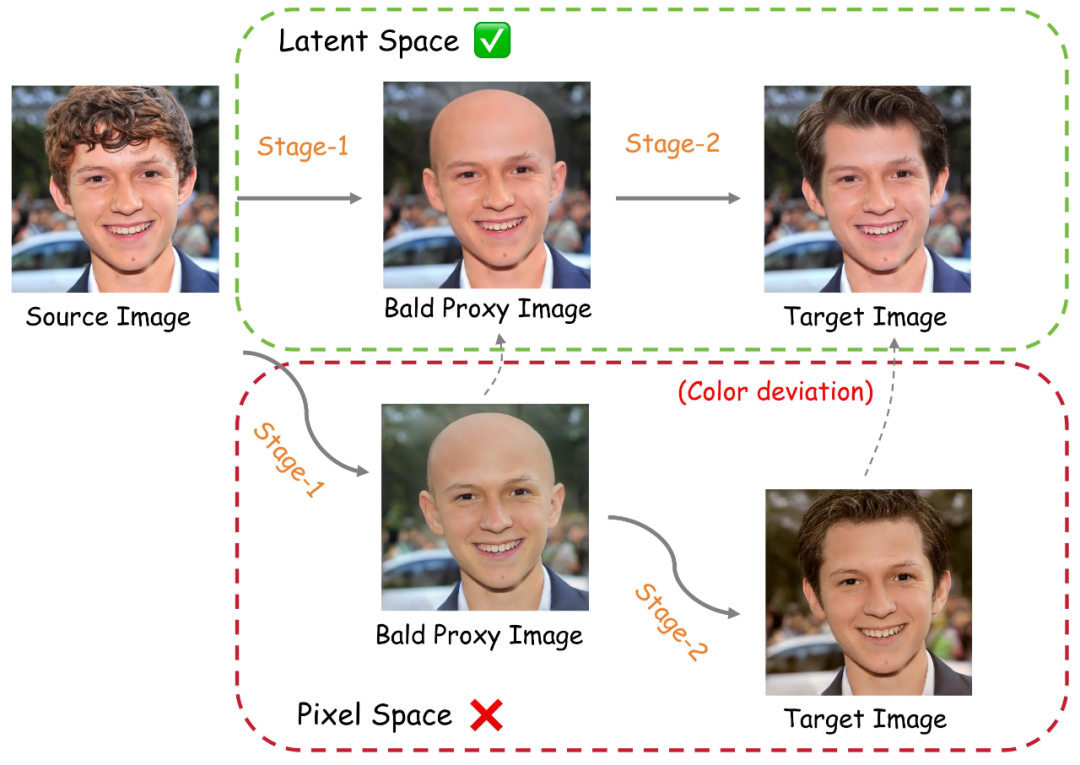

Stable-Hair uses a two-stage design:

- The first stage uses a Bald Converter to turn the person in the source image bald.

- The second stage precisely transplants the target hairstyle onto the bald image.
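To make the data flow of the two stages concrete, here is a toy sketch. It is not the Stable-Hair code: `bald_converter` and `hair_transplant` are hypothetical stand-ins, and an "image" is modeled as a plain dict of attributes rather than pixels. It only illustrates why stage 1 exists: stage 2 then only has to add hair, and never has to erase the old hairstyle at the same time.

```python
# Toy sketch of a two-stage hair-transfer pipeline.
# bald_converter and hair_transplant are HYPOTHETICAL stand-ins, not the
# Stable-Hair repository's API; an "image" is modeled as a plain dict.

def bald_converter(source: dict) -> dict:
    """Stage 1: remove the hair while keeping every other attribute."""
    bald = dict(source)  # copy so the source is not mutated
    bald["hair"] = None
    return bald


def hair_transplant(bald: dict, reference: dict) -> dict:
    """Stage 2: copy only the hairstyle from the reference onto the bald image."""
    if bald["hair"] is not None:
        raise ValueError("stage 2 expects the bald output of stage 1")
    result = dict(bald)
    result["hair"] = reference["hair"]  # identity and background stay from the source
    return result


def transfer_hairstyle(source: dict, reference: dict) -> dict:
    return hair_transplant(bald_converter(source), reference)


source = {"identity": "person_A", "background": "office", "hair": "short_black"}
reference = {"identity": "person_B", "background": "beach", "hair": "long_curly"}
print(transfer_hairstyle(source, reference))
# {'identity': 'person_A', 'background': 'office', 'hair': 'long_curly'}
```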

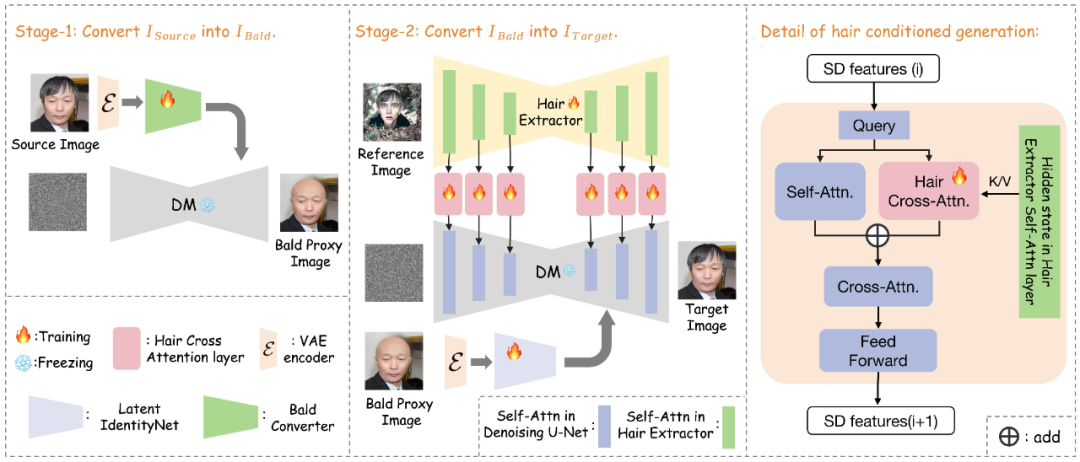

The core of the framework consists of three modules:

- Hair Extractor: extracts hairstyle features from the reference image

- Latent IdentityNet: keeps the content of the source image consistent

- Hair Cross-Attention Layers: make the transplanted hairstyle accurate and realistic
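The hair cross-attention idea can be illustrated with plain scaled dot-product cross-attention: queries come from the features being denoised, while keys and values come from the hair features produced by the extractor. This is a minimal sketch under simplifying assumptions: the learned query/key/value projections of a real layer are omitted (treated as identity), and the feature vectors below are made-up numbers, not outputs of Stable-Hair.

```python
import math


def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product cross-attention with identity projections (an assumption).

    queries: features of the image being generated (one vector per position)
    keys, values: hair features from the reference image (same count)
    """
    d = len(queries[0])
    scale = 1.0 / math.sqrt(d)
    outputs = []
    for q in queries:
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        weights = softmax(scores)  # how much each hair feature contributes
        outputs.append(
            [sum(w * v[c] for w, v in zip(weights, values)) for c in range(len(values[0]))]
        )
    return outputs


# Hypothetical example: two image positions attending to two hair features.
image_features = [[1.0, 0.0], [0.0, 1.0]]
hair_features = [[1.0, 1.0], [1.0, 1.0]]
hair_values = [[2.0, 0.0], [0.0, 4.0]]
print(cross_attention(image_features, hair_features, hair_values))
```

Because both hair keys are identical here, every position weights them equally and receives the average of the values, `[1.0, 2.0]`; with distinct keys, each position would pull more strongly from the hair features it matches best.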

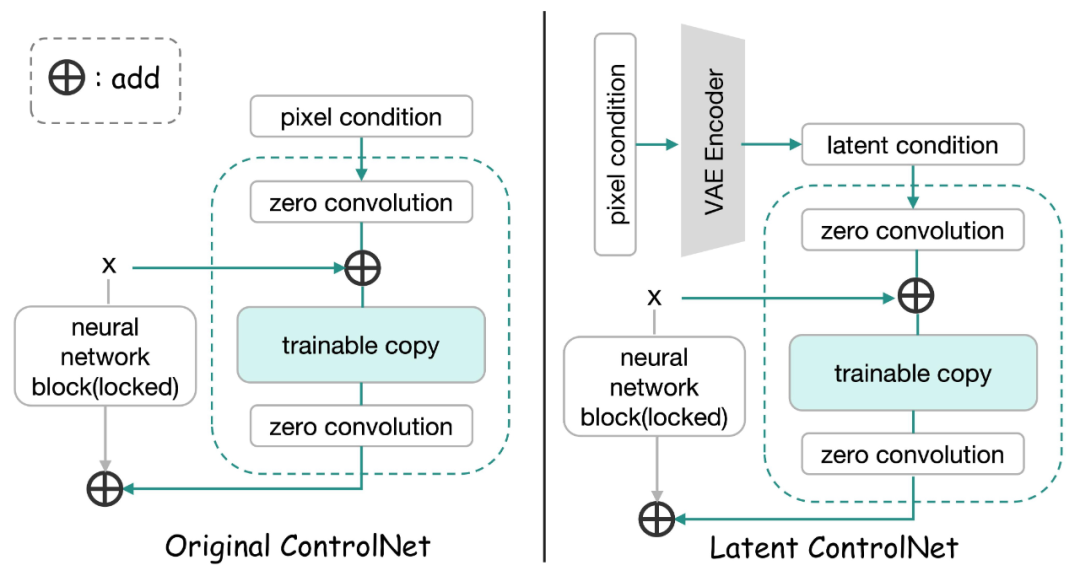

The researchers also developed a new Latent ControlNet architecture.

It moves the entire process from pixel space into latent space, effectively solving the color inconsistency that was common in previous methods.
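A rough way to picture "working in latent space" is that the control image is first encoded into the same latent space as the image being denoised, so the control signal and the denoising features share one resolution and one representation. The sketch below is our simplified reading, not the authors' implementation: `toy_encode` is a hypothetical stand-in that uses 8×8 average pooling in place of a real VAE encoder, and `add_control` simply adds the encoded condition to the latent.

```python
# HYPOTHETICAL stand-in for a VAE encoder: 8x8 average pooling on a grayscale grid.
DOWNSAMPLE = 8


def toy_encode(image):
    """Map a pixel-space grid (H x W) to a latent grid (H/8 x W/8)."""
    h, w = len(image), len(image[0])
    if h % DOWNSAMPLE or w % DOWNSAMPLE:
        raise ValueError("image size must be a multiple of the downsampling factor")
    latent = []
    for i in range(0, h, DOWNSAMPLE):
        row = []
        for j in range(0, w, DOWNSAMPLE):
            block = [image[y][x] for y in range(i, i + DOWNSAMPLE) for x in range(j, j + DOWNSAMPLE)]
            row.append(sum(block) / len(block))
        latent.append(row)
    return latent


def add_control(noisy_latent, control_latent, strength=1.0):
    """Inject the encoded condition directly in latent space (shapes must match)."""
    if len(noisy_latent) != len(control_latent) or len(noisy_latent[0]) != len(control_latent[0]):
        raise ValueError("control and denoising latents must have the same shape")
    return [
        [n + strength * c for n, c in zip(n_row, c_row)]
        for n_row, c_row in zip(noisy_latent, control_latent)
    ]


# A 64x64 "bald" control image becomes an 8x8 latent, matching the denoising latent.
bald_image = [[0.5] * 64 for _ in range(64)]
noisy_latent = [[0.0] * 8 for _ in range(8)]
conditioned = add_control(noisy_latent, toy_encode(bald_image))
print(len(conditioned), len(conditioned[0]))
```

The point of the design, as we understand it, is that no pixel-to-latent translation has to be learned inside the control branch, which is one plausible source of the color shifts seen in pixel-space conditioning.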

According to the authors, Stable-Hair outperforms existing methods on both subjective evaluations and objective metrics.

It can accurately transplant a wide range of complex hairstyles while preserving the structure and identity of the source image.

It can even transfer hairstyles across domains, something earlier methods could hardly do.

Official website: https://xiaojiu-z.github.io/Stable-Hair.github.io/

Code: https://github.com/Xiaojiu-z/Stable-Hair