Elon Musk's Memphis supercomputing cluster (the Memphis Supercluster) is live. According to Musk, it uses 100,000 liquid-cooled H100 GPUs on a single RDMA fabric, making it "the world's most powerful AI training cluster".

Such a huge amount of computing power naturally requires a staggering amount of electricity. Each H100 GPU can draw up to 700 watts, so running all 100,000 of them at the same time takes about 70 megawatts, and that figure does not even include the power consumed by the other servers, networking, and cooling equipment. Remarkably, Musk is currently powering the massive facility with 14 large mobile generators, because an agreement with the local power grid has yet to be finalized.
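The 70-megawatt figure is simple multiplication. The sketch below reproduces it using the article's 700 W per-GPU number; the function name is illustrative only, and, as in the text, servers, networking, and cooling are deliberately left out.

```python
# Back-of-the-envelope check of the GPU-only power figure quoted above.
# Assumption: every GPU draws the article's 700 W figure; CPUs, networking,
# and cooling are excluded, matching the text.

GPU_COUNT = 100_000
WATTS_PER_GPU = 700  # per-GPU figure quoted in the article


def gpu_only_power_mw(gpu_count: int, watts_per_gpu: float) -> float:
    """Return the combined GPU power draw in megawatts."""
    return gpu_count * watts_per_gpu / 1_000_000


if __name__ == "__main__":
    print(f"{gpu_only_power_mw(GPU_COUNT, WATTS_PER_GPU):.1f} MW")
    # prints "70.0 MW"
```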

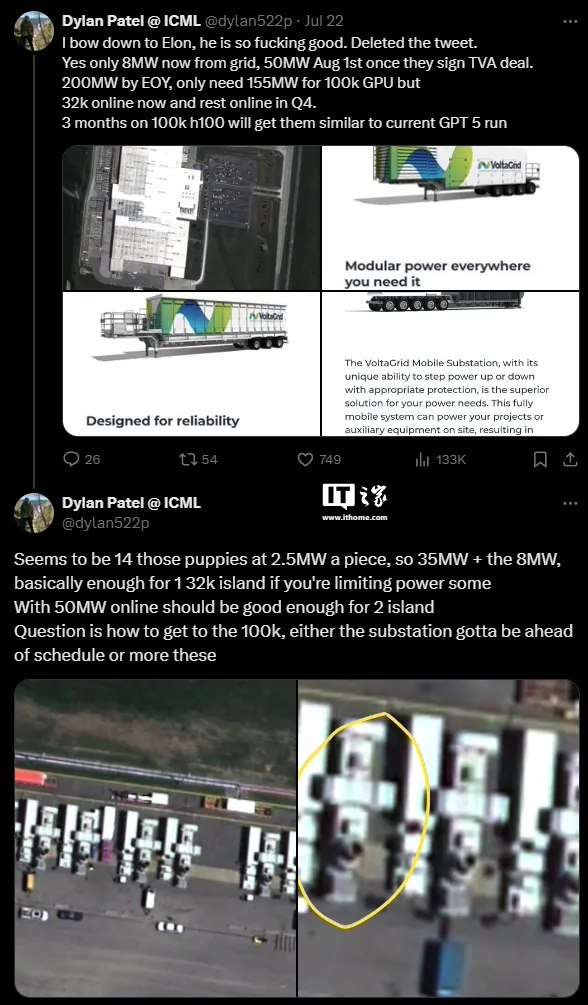

AI and semiconductor analyst Dylan Patel initially said on social media that Musk's Memphis supercomputing cluster might not be able to run because of power constraints. He noted that only 7 megawatts was being drawn from the grid, enough to run only about 4,000 GPUs. The Tennessee Valley Authority (TVA) will provide 50 megawatts to the facility by August 1, provided xAI signs the agreement. Patel also observed that the 150-megawatt substation at the xAI site, which is expected to be completed in the fourth quarter of 2024, is still under construction.

However, after analyzing satellite imagery, Patel soon tweeted that he had found Musk's solution: 14 VoltaGrid mobile generators connected to what appear to be four mobile substations.

Each semi-trailer-sized generator delivers 2.5 megawatts, so the 14 units together supply 35 megawatts. Combined with the 8 megawatts from the grid, that is a total of 43 megawatts, enough to run 32,000 H100 GPUs with power limiting.
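The generator arithmetic can be checked the same way. This sketch adds the generator output to the grid draw and divides by Patel's 32,000-GPU estimate to get the implied power per GPU. Treating that estimate as covering the whole facility load (GPUs plus servers, networking, and cooling) is an assumption, and the helper names are hypothetical.

```python
# Rough power budget for the temporary setup described above.
# Figures come from the article; reading the 32,000-GPU estimate as
# covering the whole facility load (not just the GPUs) is an assumption.

GENERATOR_COUNT = 14
MW_PER_GENERATOR = 2.5   # each semi-trailer-sized VoltaGrid unit
GRID_MW = 8.0            # grid draw used in the 43 MW total
GPUS_SUPPORTED = 32_000  # Patel's estimate with power limiting


def total_supply_mw(generators: int, mw_each: float, grid_mw: float) -> float:
    """Combined generator and grid supply in megawatts."""
    return generators * mw_each + grid_mw


def facility_watts_per_gpu(supply_mw: float, gpus: int) -> float:
    """All-in facility watts available per GPU for a given supply."""
    return supply_mw * 1_000_000 / gpus


if __name__ == "__main__":
    supply = total_supply_mw(GENERATOR_COUNT, MW_PER_GENERATOR, GRID_MW)
    per_gpu = facility_watts_per_gpu(supply, GPUS_SUPPORTED)
    print(f"{supply:.1f} MW total, about {per_gpu:.0f} W per GPU")
    # prints "43.0 MW total, about 1344 W per GPU"
```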

If TVA provides the 50 megawatts in early August, Musk will have enough power to run 64,000 GPUs at once, Patel said. Running all 100,000 GPUs, however, takes 155 megawatts, and xAI needs its substation to reach that level. So either the substation will be completed ahead of schedule, or Musk will deploy more mobile generators to meet the demand.
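The 155-megawatt figure implies an all-in budget of about 1,550 W per GPU. The hypothetical helpers below derive that ratio and turn any supply figure into a rough GPU count at full power. This is a simple proportional model, not Patel's method; his lower-supply estimates assume power limiting, so they come out higher than this ratio gives (for example, it yields about 27,700 GPUs for 43 MW versus his 32,000).

```python
import math

# Proportional capacity model built from the article's full-build figures.
# Assumption: facility power scales linearly with GPU count and every GPU
# runs at full (uncapped) power.

FULL_BUILD_MW = 155
FULL_BUILD_GPUS = 100_000


def watts_per_gpu(supply_mw: float, gpus: int) -> float:
    """All-in facility watts per GPU implied by a supply and GPU count."""
    return supply_mw * 1_000_000 / gpus


def gpus_supported(supply_mw: float, watts_each: float) -> int:
    """Whole number of GPUs a supply can run at a given per-GPU budget."""
    return math.floor(supply_mw * 1_000_000 / watts_each)


if __name__ == "__main__":
    budget = watts_per_gpu(FULL_BUILD_MW, FULL_BUILD_GPUS)
    print(f"{budget:.0f} W per GPU")      # prints "1550 W per GPU"
    print(gpus_supported(43, budget))     # prints 27741
```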

Huge power consumption and its contribution to global warming are major issues facing AI data centers today. The data center GPUs sold in 2023 alone will consume more electricity than 1.3 million average U.S. homes combined, putting a huge strain on the power grid. Simply building more power plants won't meet data centers' needs either; additional infrastructure, such as high-voltage transmission lines and substations, is needed to carry power from the plants to the servers.

Beyond the time and cost of building the power plants that AI computing needs, greenhouse gas emissions must also be considered. The mobile generators Musk deployed at the Memphis supercomputing cluster burn natural gas, which is cleaner than coal or oil, but they still emit carbon dioxide into the atmosphere while running.

Google recently revealed that its carbon footprint has grown by 48% since 2019, driven by data center energy demand. xAI will likely face the same problem unless Musk moves to cleaner ways of producing energy.

Musk is pushing hard to make xAI the frontrunner in AI development and will do whatever it takes to get there. Hopefully the mobile generators are only a temporary solution, and the Memphis supercomputer cluster will transition to a cleaner energy source, which TVA can provide. Because TVA generates power from a mix of nuclear, hydroelectric, and fossil fuel sources, xAI would have a smaller carbon footprint sourcing its electricity from TVA than relying on mobile generators that run only on natural gas.