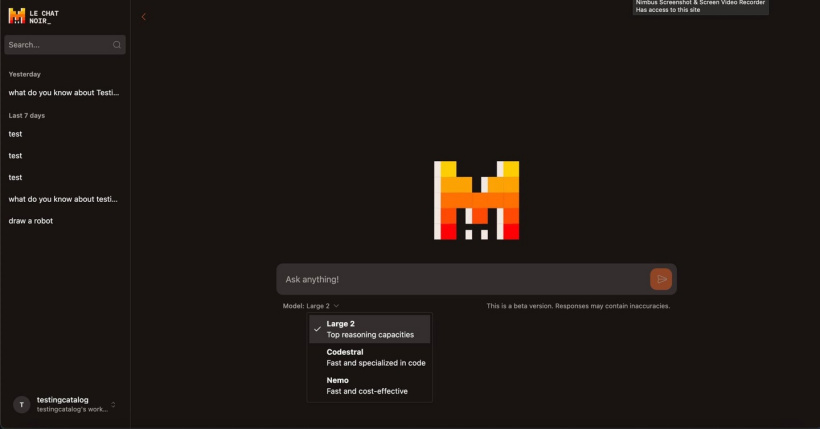

Competition in AI is growing increasingly fierce. After Meta released its open-source Llama 3.1 model yesterday, French AI startup Mistral joined the race, launching its new-generation flagship model, Mistral Large 2.

Model Introduction

The model has 123 billion parameters. It outperforms its predecessor in code generation, mathematics, and reasoning, and offers stronger multilingual support and advanced function-calling capabilities.

Mistral Large 2 has a 128k context window and supports dozens of natural languages, including Chinese, as well as more than 80 programming languages. The model scores 84.0% accuracy on MMLU and shows significant improvements in code generation, reasoning, and multilingual support.

Mistral said one of the key focuses of training was minimizing model hallucinations. The company says Large 2 was trained to be more discerning in its responses, admitting when it doesn’t know something rather than making up something that seems plausible.

Licensing

According to the official press release, on openness, one of the main concerns surrounding AI models, Mistral Large 2 is "openly licensed" for non-commercial research purposes: the weights are open, and third parties may fine-tune the model to suit their own needs.

Businesses that wish to use Mistral Large 2 commercially must purchase a separate license and usage agreement from Mistral.

Performance

With 123 billion parameters (the internal model settings that guide its performance), Mistral Large 2 is considerably smaller than the 405-billion-parameter Llama 3.1, yet its performance comes close to that of the larger model.

Mistral Large 2, available on the company’s main platform and through cloud partners, builds on the original Large model, bringing advanced multilingual capabilities and improved performance in reasoning, code generation, and math.

Mistral describes it as a GPT-4-class model. Across multiple benchmarks, its performance is very close to that of GPT-4o, Llama 3.1 405B, and Anthropic's Claude 3.5 Sonnet.

Mistral noted that the product will continue to “drive the boundaries of cost-effectiveness, speed, and performance,” while giving users new capabilities, including advanced function calling and retrieval, for building high-performance AI applications.