Llama 3.1 has leaked! You heard that right: this open-source model with 405 billion parameters has caused a stir on Reddit. It may be the closest an open-source model has come to GPT-4o so far, and it even surpasses GPT-4o in some respects.

Llama 3.1 is a large language model developed by Meta (formerly Facebook). Although it has not been officially released yet, the leaked version has already caused a stir in the community. The leak covers not only the base models but also benchmark results for the 8B, 70B, and largest 405B versions.

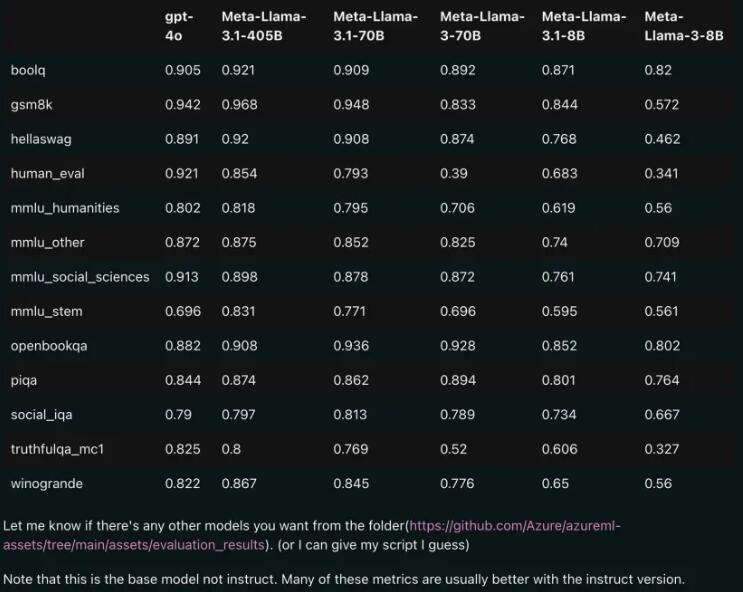

Performance comparison: Llama 3.1 vs GPT-4o

Judging from the leaked comparison results, even the 70B version of Llama 3.1 surpasses GPT-4o on multiple benchmarks. This would be the first time an open-source model has reached state-of-the-art (SOTA) level on multiple benchmarks, and it shows just how powerful open source can be!

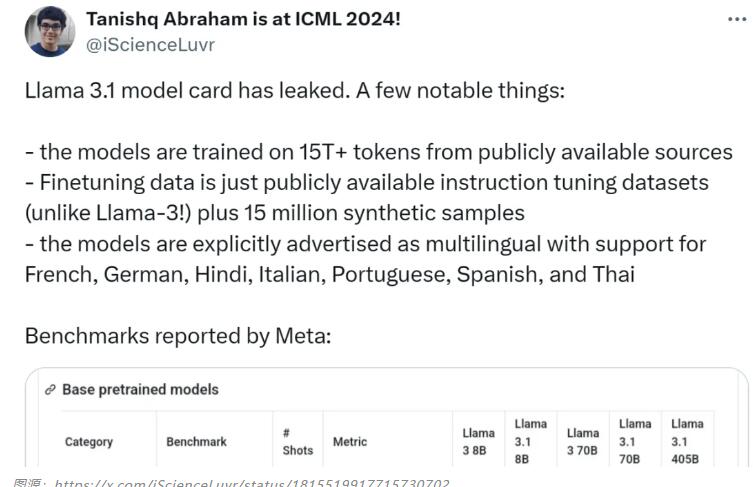

Model highlights: multi-language support, richer training data

The Llama 3.1 model was trained on 15T+ tokens from public sources, with a pre-training data cutoff of December 2023. Besides English, it supports French, German, Hindi, Italian, Portuguese, Spanish, and Thai, which helps it perform well in multilingual conversation use cases.

The Llama 3.1 research team places great emphasis on the safety of the model. They adopted a multi-faceted approach to data collection, combining human-generated data with synthetic data to mitigate potential safety risks. The data also incorporates borderline prompts and adversarial prompts to strengthen data quality control.

Model card source: https://pastebin.com/9jGkYbXY