July 18, 2024: OpenAI launches its most cost-effective model, GPT-4o mini. What is it used for, and how is it different from GPT-4o?

To sum up, there are three important points:

- Lower prices;

- Faster responses;

- Excellent results (only slightly behind GPT-4o, and still ahead of most other mainstream models).

Lower price

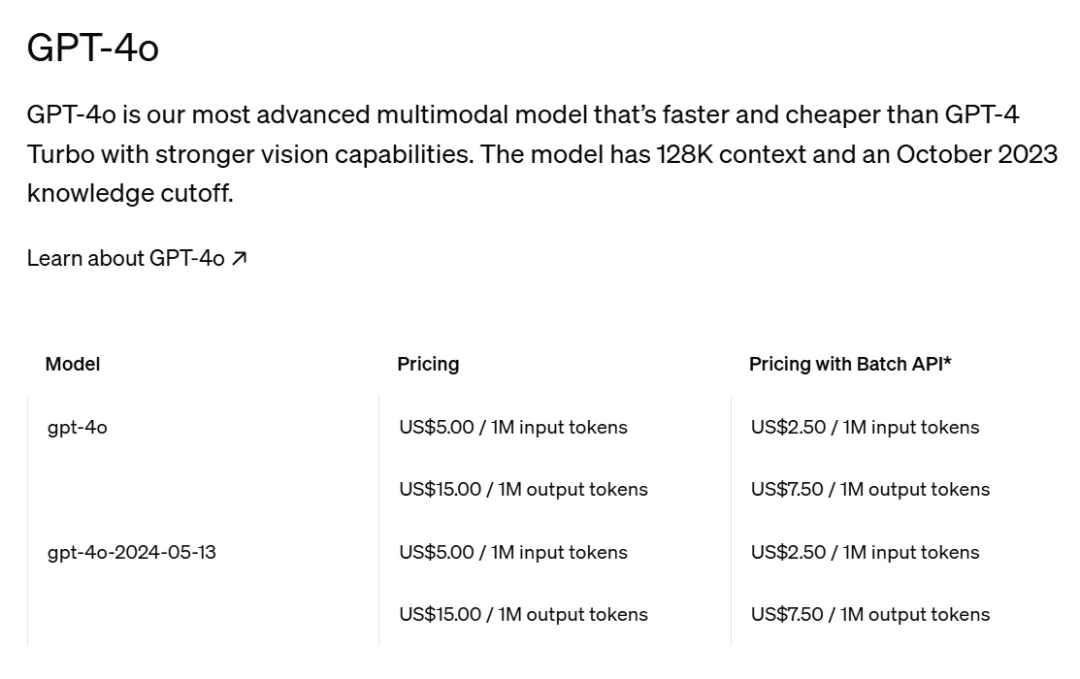

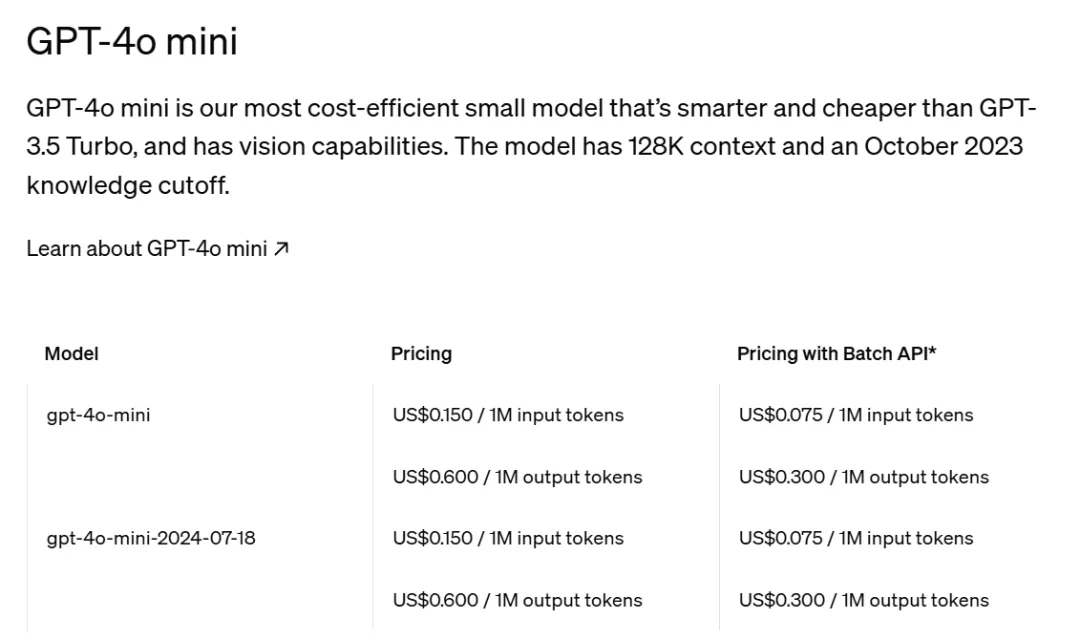

According to OpenAI's official pricing, GPT-4o costs US$5.00 per million input tokens, while GPT-4o mini costs US$0.15, only 3% of GPT-4o's price. For output, GPT-4o costs US$15.00 per million tokens, while GPT-4o mini costs US$0.60, only 4% of GPT-4o's price.
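
To make these ratios concrete, here is a minimal cost-estimate sketch using only the per-million-token prices quoted above. The function names and the 2M-input / 500K-output workload are hypothetical, chosen purely for illustration, and real bills may differ (for example with cached or batched pricing).

```python
# Published per-million-token prices (USD) quoted in the article.
PRICES_PER_MILLION = {
    "gpt-4o": {"input": 5.00, "output": 15.00},
    "gpt-4o-mini": {"input": 0.15, "output": 0.60},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of a workload for the given model."""
    price = PRICES_PER_MILLION[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


def price_ratio(kind: str) -> float:
    """Return GPT-4o mini's price as a fraction of GPT-4o's, for 'input' or 'output'."""
    return PRICES_PER_MILLION["gpt-4o-mini"][kind] / PRICES_PER_MILLION["gpt-4o"][kind]


if __name__ == "__main__":
    print(f"input ratio:  {price_ratio('input'):.0%}")   # 3%
    print(f"output ratio: {price_ratio('output'):.0%}")  # 4%
    # Hypothetical workload: 2M input tokens and 500K output tokens.
    for model in PRICES_PER_MILLION:
        print(model, round(estimate_cost(model, 2_000_000, 500_000), 2))
```

For that hypothetical workload, GPT-4o comes to about US$17.50 and GPT-4o mini to about US$0.60.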

This is a substantial price cut. Even compared with the mainstream models from Chinese LLM vendors, which recently cut their prices to 1 to 10 yuan per million tokens, GPT-4o mini remains very competitive. For details, see the article [AI Dynamics] A picture to understand the timeline of AI vendors' large-model API price cuts: millions of tokens "rolled" down to cabbage prices!
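
A quick conversion puts GPT-4o mini's prices on the same scale as the 1 to 10 yuan range mentioned above. The exchange rate of 7.2 CNY per USD is an assumption for illustration only; substitute the current rate.

```python
# ASSUMPTION: illustrative USD-to-CNY exchange rate; replace with the current rate.
USD_TO_CNY = 7.2

# GPT-4o mini prices in USD per million tokens, from OpenAI's price list.
GPT4O_MINI_USD = {"input": 0.15, "output": 0.60}

# Price range (CNY per million tokens) cited in the article for Chinese vendors.
DOMESTIC_RANGE_CNY = (1.0, 10.0)


def to_cny(usd: float, rate: float = USD_TO_CNY) -> float:
    """Convert a USD price to CNY at the given exchange rate."""
    return usd * rate


def within_domestic_range(cny: float, low_high: tuple = DOMESTIC_RANGE_CNY) -> bool:
    """Check whether a CNY price falls inside the cited 1 to 10 yuan range."""
    low, high = low_high
    return low <= cny <= high


if __name__ == "__main__":
    for kind, usd in GPT4O_MINI_USD.items():
        cny = to_cny(usd)
        print(f"{kind}: ~{cny:.2f} yuan per million tokens, in range: {within_domestic_range(cny)}")
```

At the assumed rate, input works out to roughly 1.08 yuan and output to roughly 4.32 yuan per million tokens, both inside that range.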

The two images above show the official pricing for GPT-4o and GPT-4o mini published by OpenAI.

The screenshot below, posted by OpenAI's developer account on the X platform, shows how the prices of GPT-4o mini and GPT-3.5 Turbo have changed over time.

Faster

Thanks to its low cost and low latency, GPT-4o mini suits a wide range of tasks, such as chaining or parallelizing multiple model calls (e.g., calling multiple APIs), passing large amounts of context to the model (e.g., a complete codebase or conversation history), or interacting with customers through fast, real-time text responses (e.g., a customer support chatbot).
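
The two call patterns mentioned above, chaining and parallelizing, can be sketched with `asyncio`. Here `call_model` is a hypothetical placeholder that only sleeps and echoes its input; it is not the OpenAI SDK, and in practice you would replace its body with a real API call.

```python
import asyncio


async def call_model(prompt: str) -> str:
    """HYPOTHETICAL stand-in for a GPT-4o mini call.

    It sleeps briefly to mimic network latency and returns a canned answer.
    """
    await asyncio.sleep(0.01)
    return f"answer({prompt})"


async def parallel_calls(prompts: list) -> list:
    """Run independent prompts concurrently; results keep the input order."""
    return await asyncio.gather(*(call_model(p) for p in prompts))


async def chained_calls(prompt: str, steps: int) -> str:
    """Feed each output into the next call, forming a sequential chain."""
    result = prompt
    for _ in range(steps):
        result = await call_model(result)
    return result


if __name__ == "__main__":
    print(asyncio.run(parallel_calls(["q1", "q2", "q3"])))
    print(asyncio.run(chained_calls("q", 2)))
```

Low per-call latency matters most for chains, where latencies add up step by step; a parallel fan-out is bounded mainly by its slowest call.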

However, I have not yet had the chance to compare and test the speed myself. That said, when I compared GPT-4o with GPT-4 earlier, GPT-4o's responses did feel much faster.
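
For anyone who wants to run that speed comparison, a minimal timing harness could look like the following. `fake_model` is a hypothetical placeholder; for a fair test you would wrap real GPT-4o and GPT-4o mini calls and send both the same prompt with the same settings.

```python
import statistics
import time
from typing import Callable


def measure_latency(call: Callable[[str], str], prompt: str, runs: int = 5) -> dict:
    """Time `call(prompt)` several times and summarize wall-clock latency in seconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call(prompt)
        samples.append(time.perf_counter() - start)
    return {
        "runs": runs,
        "median_s": statistics.median(samples),
        "min_s": min(samples),
        "max_s": max(samples),
    }


def fake_model(prompt: str) -> str:
    """HYPOTHETICAL stand-in for a model call; replace with a real client call."""
    time.sleep(0.005)
    return prompt.upper()


if __name__ == "__main__":
    print(measure_latency(fake_model, "hello", runs=3))
```

Reporting the median rather than a single run helps smooth out network jitter, which can easily swamp the difference between two models.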

Excellent results

GPT-4o mini outperforms GPT-3.5 Turbo and other small models on academic benchmarks for text intelligence and multimodal reasoning, and supports the same range of languages as GPT-4o.

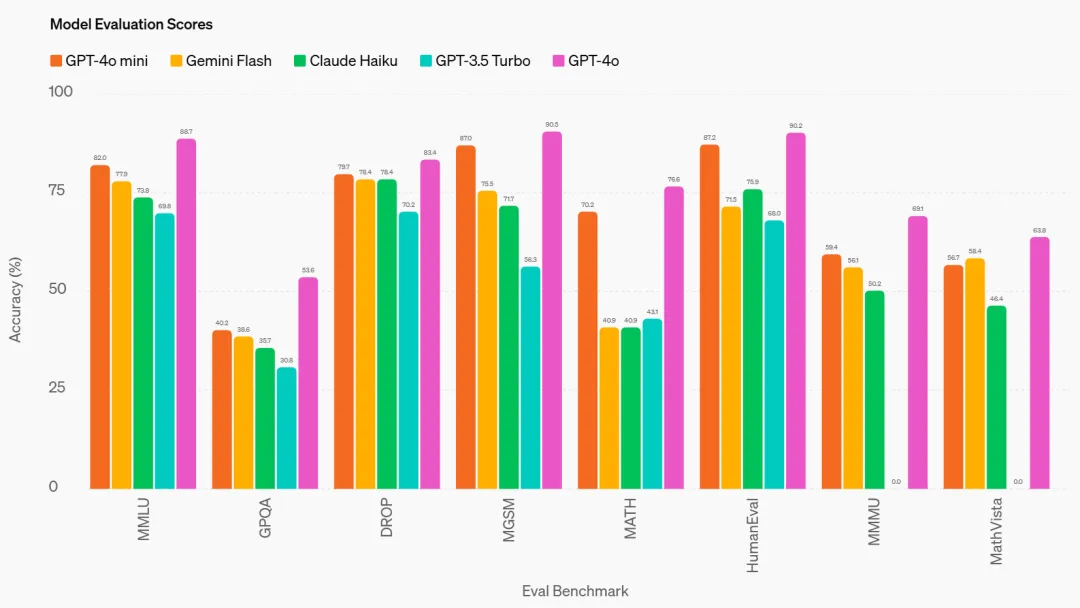

GPT-4o mini has been evaluated on several key benchmarks, which OpenAI describes as follows:

Reasoning Tasks: GPT-4o mini outperforms other small models on reasoning tasks involving text and vision, scoring 82.0% on the text intelligence and reasoning benchmark MMLU, compared to 77.9% for Gemini Flash and 73.8% for Claude Haiku.

Mathematical and Coding Skills: GPT-4o mini performs well on mathematical reasoning and coding tasks, outperforming previous small models on the market. On MGSM, which measures mathematical reasoning, GPT-4o mini scored 87.0%, while Gemini Flash scored 75.5% and Claude Haiku scored 71.7%. On HumanEval, which measures coding performance, GPT-4o mini scored 87.2%, while Gemini Flash scored 71.5% and Claude Haiku scored 75.9%.

Multimodal Reasoning: GPT-4o mini also showed strong performance on the multimodal reasoning evaluation MMMU, scoring 59.4%, while Gemini Flash scored 56.1% and Claude Haiku scored 50.2%.
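
To see the size of these leads at a glance, the scores OpenAI reports above can be tabulated and differenced. The data is exactly what is quoted in this section; the `margins` helper is just an illustrative sketch.

```python
# Scores (%) as reported by OpenAI for each benchmark.
SCORES = {
    "MMLU": {"GPT-4o mini": 82.0, "Gemini Flash": 77.9, "Claude Haiku": 73.8},
    "MGSM": {"GPT-4o mini": 87.0, "Gemini Flash": 75.5, "Claude Haiku": 71.7},
    "HumanEval": {"GPT-4o mini": 87.2, "Gemini Flash": 71.5, "Claude Haiku": 75.9},
    "MMMU": {"GPT-4o mini": 59.4, "Gemini Flash": 56.1, "Claude Haiku": 50.2},
}


def margins(scores: dict, model: str = "GPT-4o mini") -> dict:
    """Return, per benchmark, `model`'s lead in percentage points over each rival."""
    result = {}
    for bench, by_model in scores.items():
        ours = by_model[model]
        result[bench] = {
            rival: round(ours - score, 1)
            for rival, score in by_model.items()
            if rival != model
        }
    return result


if __name__ == "__main__":
    for bench, leads in margins(SCORES).items():
        print(bench, leads)
```

The largest leads are on MGSM and HumanEval (more than 11 points over both rivals), while the narrowest is on MMMU over Gemini Flash, at 3.3 points.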

The screenshot above shows the benchmark evaluation results.