Zhipu AI has announced that it has trained a new video understanding model, CogVLM2-Video, and released it as open source.

According to Zhipu AI, most current video understanding models rely on frame averaging and video token compression, which discards temporal information and leaves them unable to answer time-related questions accurately. Models that instead focus on temporal question-answering datasets tend to be restricted to specific formats and domains, so they lose broader question-answering capabilities.

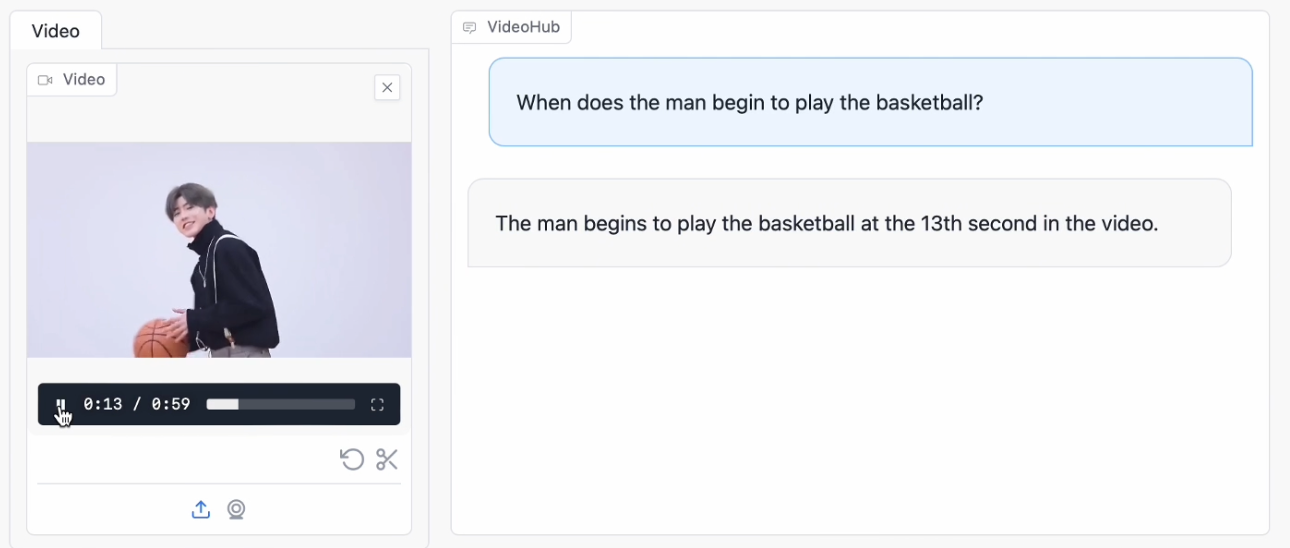

▲ Official demonstration

Zhipu AI proposed an automated method, based on visual models, for constructing temporal grounding data, and used it to generate 30,000 time-related video question-answering samples. Using this new dataset together with existing open-domain question-answering data, the team fed multi-frame video images and their timestamps to the encoder as inputs and trained the CogVLM2-Video model.
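To make the idea of pairing frames with timestamps concrete, here is a minimal sketch. It is not Zhipu AI's code: the uniform sampling strategy, the function names `sample_frame_timestamps` and `build_encoder_inputs`, and the record layout are all assumptions made for illustration, since the article does not say how frames are selected or encoded.

```python
def sample_frame_timestamps(duration_s, fps, num_frames):
    """Pick evenly spaced frame indices and pair each with its timestamp (seconds).

    Uniform sampling is an assumption for this sketch; the article does not
    describe the actual frame selection strategy.
    """
    total_frames = int(duration_s * fps)
    if total_frames <= 0 or num_frames <= 0:
        return []
    # Never request more frames than the video contains.
    num_frames = min(num_frames, total_frames)
    step = total_frames / num_frames
    samples = []
    for i in range(num_frames):
        index = int(i * step)
        samples.append((index, round(index / fps, 2)))
    return samples


def build_encoder_inputs(samples):
    """Hypothetical input layout: one record per frame, carrying its timestamp."""
    return [{"frame_index": idx, "timestamp_s": ts} for idx, ts in samples]


if __name__ == "__main__":
    # A 10-second clip at 2 frames per second, sampled down to 5 frames.
    for record in build_encoder_inputs(sample_frame_timestamps(10.0, 2.0, 5)):
        print(record)
```

Keeping the timestamp next to each frame, rather than averaging frames together, is what would let a model tie an answer to a specific moment in the video.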

Zhipu AI said that CogVLM2-Video not only achieves state-of-the-art performance on public video understanding benchmarks, but also excels at video caption generation and temporal grounding.

Related links:

- Project website: https://cogvlm2-video.github.io
- Online trial: http://36.103.203.44:7868/