A new contender is emerging in AI. Abu Dhabi's Technology Innovation Institute (TII) has announced that it is open-sourcing its new large model, Falcon 2, an 11-billion-parameter model that has drawn global attention for its strong performance and multilingual capabilities.

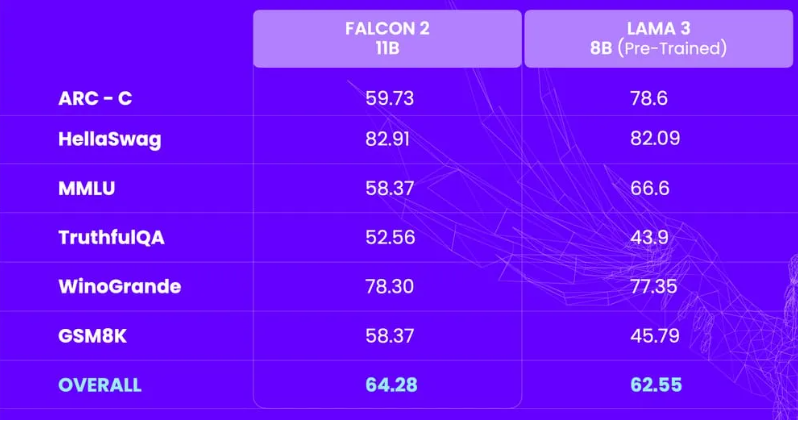

Falcon 2 comes in two versions. The base version, Falcon 2 11B, is easy to deploy and can generate text, code, and summaries. The other, Falcon 2 11B VLM, is a vision-to-language model that can convert image information into text. In several benchmark rankings, Falcon 2 11B outperformed Meta's Llama 3 8B and tied for first place with Google's Gemma 7B.

Falcon 2 11B's multilingual capabilities let it handle tasks in several languages, including English, French, Spanish, German, and Portuguese, which broadens the range of scenarios it can be applied to. As a large vision model, Falcon 2 11B VLM can recognize and interpret images and other visual content in its environment, giving it broad application potential in industries such as healthcare, finance, e-commerce, education, and law.

Falcon 2 11B was pre-trained on more than 5.5 trillion tokens from RefinedWeb, an open-source dataset built by TII. The dataset is high-quality, filtered, and deduplicated. TII augmented it with curated corpora and adopted a four-stage training strategy to improve the model's contextual understanding.

Notably, Falcon 2 combines strong performance with low resource consumption: it can run efficiently on a single GPU, which makes it highly scalable, easy to deploy, and even suitable for integration into lightweight devices such as laptops. This is a significant convenience for small and medium-sized enterprises and individual developers, and the model's license permits commercial use.
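To put the single-GPU claim in perspective, the following is a rough back-of-envelope sketch of how much memory the weights of an 11-billion-parameter model occupy at different numeric precisions. The parameter count comes from the article; the precisions listed and the `weight_memory_gb` helper are illustrative assumptions, and the estimate deliberately ignores activations, the KV cache, and framework overhead, so real usage will be higher.

```python
# Back-of-envelope estimate of the memory needed just to hold model weights.
# Assumptions (not from the article): the set of precisions considered, and
# that activations, KV cache, and framework overhead are ignored.

PARAMS = 11_000_000_000  # 11 billion parameters, as stated in the article

# Storage cost of one parameter, in bytes, for common numeric formats.
BYTES_PER_PARAM = {
    "float32": 4.0,
    "bfloat16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: int, dtype: str) -> float:
    """Return the approximate weight footprint in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype:>8}: ~{weight_memory_gb(PARAMS, dtype):.1f} GB")
```

Under these assumptions, 16-bit weights come to roughly 22 GB, which is within reach of a single high-memory GPU, while running on a laptop would most likely depend on a lower-precision (quantized) copy of the weights.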

Dr. Hakim Hacid, executive director and acting chief researcher of TII’s AI Cross-Center Unit, said that as generative AI evolves, developers are recognizing the advantages of smaller models, including lower computing resource requirements, compliance with sustainability standards, and greater flexibility.

As early as May 2023, TII open-sourced its Falcon-40B large model, which ranked first on Hugging Face's open-source large language model leaderboard, beating a number of well-known open-source models. Falcon-40B was trained on a dataset of 1 trillion tokens and can be used for question answering, summarization, automatic code generation, language translation, and similar tasks. It also supports fine-tuning for specific business scenarios.

TII was established in 2020 as a research institute under the Abu Dhabi Department of Higher Education and Science and Technology. Its goal is to advance scientific research, develop cutting-edge technologies, and commercialize them to support the economic development of Abu Dhabi and the UAE. TII currently has more than 800 research experts from 74 countries, has published more than 700 papers, and holds more than 25 patents, making it one of the world's leading scientific research institutions.

The open-sourcing of Falcon 2 reflects both TII's commitment to sharing technology and a bold exploration of where AI is headed. By reducing computing resource requirements, meeting sustainability standards, and offering greater flexibility, the open-source Falcon 2 fits well with the emerging trend toward edge AI infrastructure.

Model address: https://huggingface.co/tiiuae/falcon-11B