Alibaba Cloud's Tongyi Qianwen team has open-sourced two speech foundation models: SenseVoice (for speech recognition) and CosyVoice (for speech generation).

SenseVoice focuses on high-precision multilingual speech recognition, emotion recognition, and audio event detection. It has the following characteristics:

- Multilingual recognition: Trained on more than 400,000 hours of data and supporting more than 50 languages, it recognizes speech more accurately than the Whisper model.

-

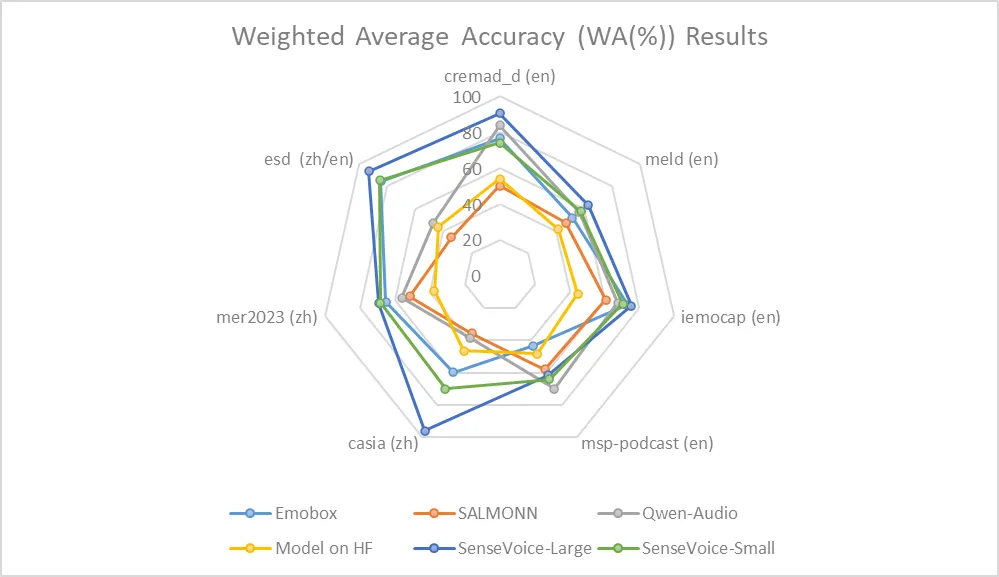

Rich text recognition: It has excellent emotion recognition and can be used on test dataAchieve or exceed the performance of the best emotion recognition models;Supports sound event detection capabilities, including music, applause, laughter, crying, coughing, sneezing and other common human-computer interaction events for detection

-

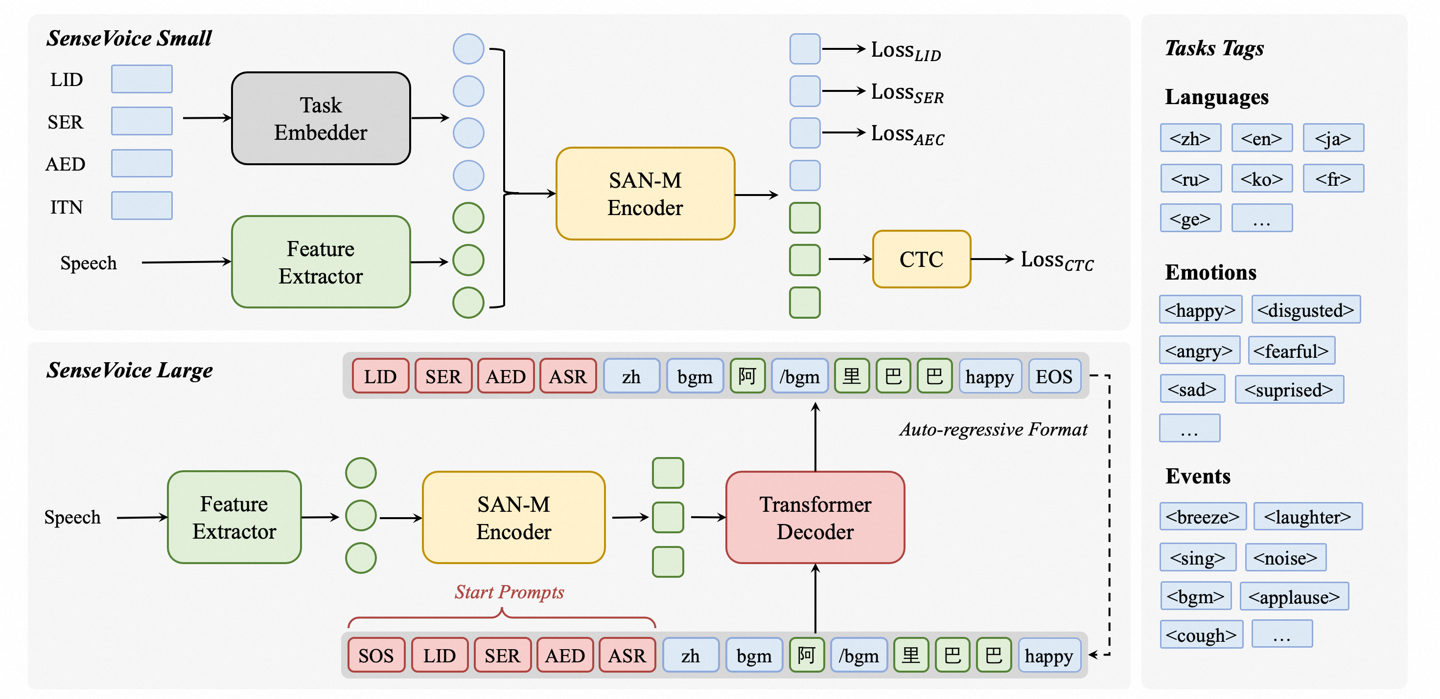

Efficient reasoning: The SenseVoice-Small model uses a non-autoregressive end-to-end framework with extremely low inference latency. Inference of 10s audio takes only 70ms.15 times better than Whisper-Large

- Fine-tuning customization: Convenient fine-tuning scripts and strategies help users fix long-tail sample problems in their own business scenarios.

- Service deployment: A complete service deployment pipeline supports multiple concurrent requests, with clients available in languages such as Python, C++, HTML, Java, and C#.
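To make the "rich transcription" idea above more concrete, here is a minimal sketch of how a downstream application might separate the language, emotion, and event labels from the recognized text. It assumes the labels arrive inline as `<|LABEL|>` tags placed before the text; that tag syntax, the label names, the sample string, and the helper `parse_rich_transcript` are illustrative assumptions, so check the SenseVoice repository for the actual output format before relying on it.

```python
import re
from typing import NamedTuple

# Assumed tag syntax: each label is wrapped as <|LABEL|> and embedded in the text.
TAG_PATTERN = re.compile(r"<\|([^|]+)\|>")


class RichTranscript(NamedTuple):
    tags: list[str]  # labels in the order they appeared
    text: str        # transcript with all tags removed


def parse_rich_transcript(raw: str) -> RichTranscript:
    """Split a tagged transcript into its labels and its plain text."""
    tags = TAG_PATTERN.findall(raw)
    text = TAG_PATTERN.sub("", raw).strip()
    return RichTranscript(tags=tags, text=text)


# Hypothetical sample string, not real model output.
sample = "<|en|><|HAPPY|><|Laughter|>That was a great show."
result = parse_rich_transcript(sample)
print(result.tags)
print(result.text)
```

Keeping the labels as an ordered list, rather than mapping them to fixed fields, avoids hard-coding which tag positions mean language, emotion, or event, since that ordering is not confirmed here.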

Compared with other open-source emotion recognition models, the SenseVoice-Large model achieves the best results on almost all data sets, and the SenseVoice-Small model also outperforms other open-source models on most data sets.

The CosyVoice model supports multilingual speech generation along with timbre and emotion control. It performs well in multilingual speech generation, zero-shot speech generation, cross-lingual voice cloning, and instruction following.

Related Links:

SenseVoice: https://github.com/FunAudioLLM/SenseVoice

CosyVoice: https://github.com/FunAudioLLM/CosyVoice