Since last year, the AI industry has staged one "iPhone moment" after another: ChatGPT's conversational human-computer interaction, HeyGen making foreigners speak fluent Chinese, Runway turning still images into motion, and more. Center stage in AI has become the prize that every player is fighting for.

At the same time, on the flip side of these AI applications, a "butterfly effect" of disasters is also playing out.

Take AI face-swapping, which seems ordinary enough. Its targets range from celebrities and presidents to white-collar workers and students. It is neither spectacular nor subversive, yet it is enough to make us jump. And the arrival of "one-click undressing" apps has further eroded people's sense of security.

What kind of people are using these apps for evil? Is there no one to rein them in? Is this the value that AI brings to humanity?

Is artificial intelligence helping evildoers?

I saw your "nude photos".

If one day you take the subway to work or ride your bike to school as usual, and while you are in your seat getting ready for the day's work or classes, a friend sends you a message that says "I've seen your nude photos", how would you react?

It's not a joke.

Not long ago, in Almendralejo, a Spanish town of about 30,000 people, nude photos of more than 30 local schoolgirls went viral online. Even more horrifying, although the girls were clearly the victims, they received no support from their neighbors in this small town.

Through that dark period, they held on with the support of their families until the police released information about the suspects, which finally calmed the storm. But the sheer malice of the crime showed us the dark side of AI.

The Spanish National Judicial Police (PJS) has provided some explanations and reports on the case: ten people were involved, three of whom used ClothOff, a free artificial intelligence (AI) application that lets the user "undress" anyone they want. In other words, with an AI app like this, anyone can commit such a crime at very little cost.

These crimes have not slowed the technology down. If anything, it is evolving even faster.

China has had a similar case. A young woman in Guangzhou took a nice photo on the subway, and some netizens ran it through a one-click AI undressing app to generate a nude image of her. Worse still, other netizens then forwarded the generated image to various WeChat groups and kept spreading rumors about her, which did considerable harm to the person involved.

Ordinary people are not the only victims. American pop star Taylor Swift was also hit by an "AI fake photo" incident: large numbers of AI-generated fake pornographic and gory images of her spread across several social media platforms, racking up more than ten million views. The images caused an uproar on social media and drew the attention of the White House.

This isn't the first time Taylor has been the victim of AI deepfakes. Not long before, AI was used to imitate her voice and likeness to promote merchandise, misleading consumers into thinking she had endorsed it.

Metaverse New Voice believes that while AI has long been used to produce false information, the rapid development of generative AI over the past year or so has made deepfake technology for video, images, and audio more mature and widespread, and faking has become cheaper.

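Legal scholar Robert Chesney vividly called one consequence of this "the liar's dividend": once convincing fakes are everywhere, liars can dismiss even genuine evidence as fabricated. Unprecedented anxiety over AI deepfakes has turned that once-distant concern into a real and urgent problem.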

So what should be done about these heinous acts? Must ordinary people simply become victims in an age when truth and falsehood are so hard to tell apart?

Isn't there a way to stop the dark side of AI?

Highly realistic fake images, audio, video, etc., generated using artificial intelligence algorithms are referred to as "deepfake" content.

The concept first appeared in 2017, and since then scams and manipulation of public opinion using this technology have become increasingly frequent around the world. In the U.S., for example, AI scams rose by more than 50% year over year in the past year.

To this day, however, no one has found an effective way to address the problem, because AI's ability to fake content has advanced far faster than technologies for verifying information.

Today, anyone can quickly and cheaply generate images, audio, and even video that are hard to distinguish from the real thing and hard to trace. By contrast, detection techniques are difficult to deploy widely because they tend to be specialized by subject matter and tied to particular software. Fact-checking also takes more time and effort.

According to a survey by Mizuho Research & Technologies, 70% of Japanese respondents said it is difficult to tell whether information on the Internet is true or false, yet only 26% said they would do any kind of verification after encountering suspicious information.

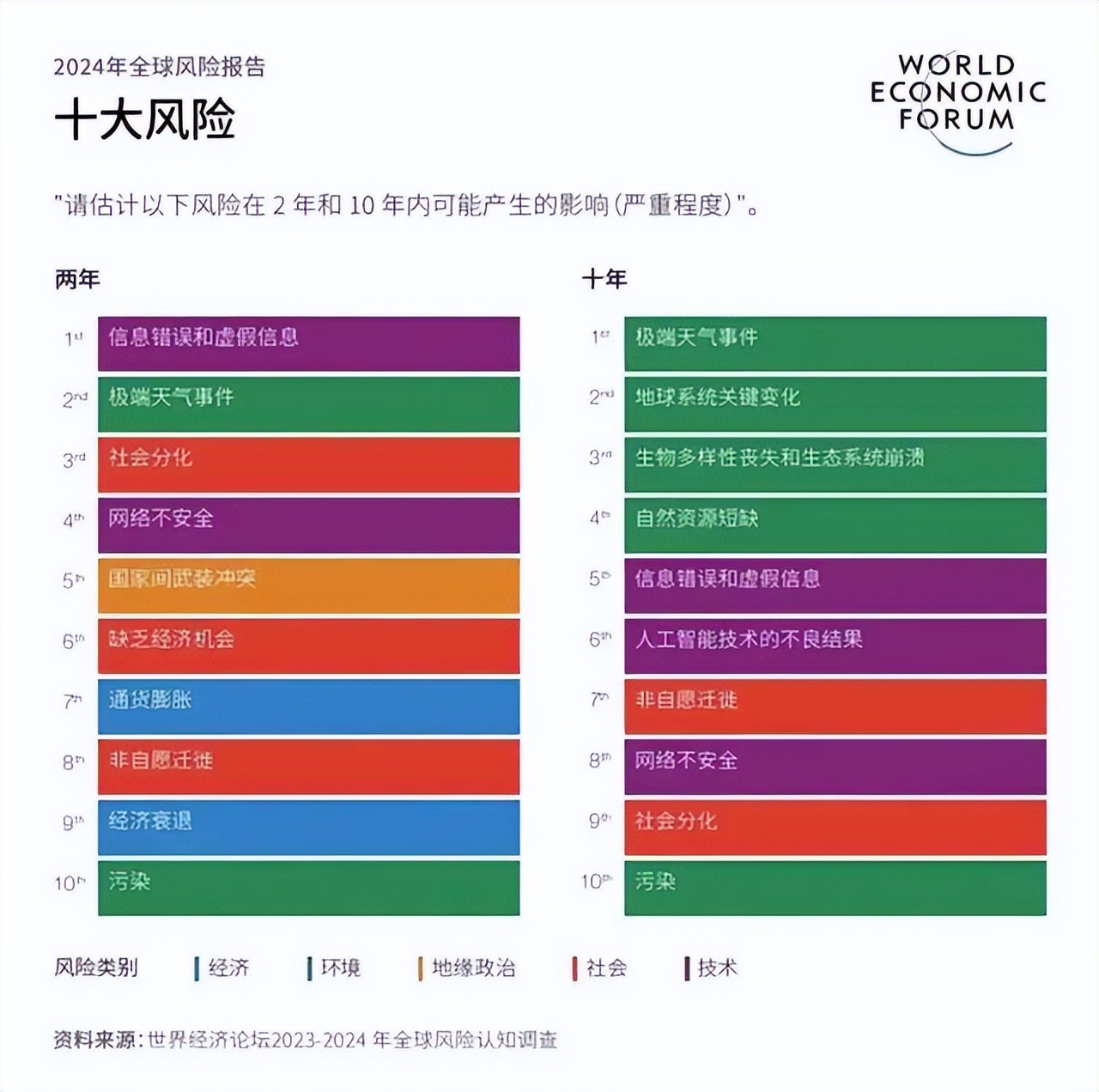

At the beginning of 2024, the World Economic Forum released its Global Risks Report 2024, which listed AI-generated misinformation and disinformation among the "top 10 global risks for the next two years", warning that it could further aggravate an already polarized and conflict-ridden world.

Metaverse New Voice has observed that many AI deepfakes are not actually impossible to tell from the real thing, and people often only half believe them. Even so, deepfake content keeps spreading widely, driven by an onlooker mentality of "the bigger the drama, the better".

Legislation as a way to combat AI crime

Since 2016, countries have been issuing relevant policies and regulations, but progress still lags behind the technology. In October last year, Biden signed the first U.S. executive order on AI regulation, establishing safety and privacy standards for AI, but it was criticized for lacking real enforcement power.

In the EU, although the European Parliament passed the Artificial Intelligence Act on March 13, its provisions will be implemented in phases, and some rules will not take effect until 2025. In Japan, the ruling Liberal Democratic Party (LDP) only recently announced plans to propose that the government introduce generative AI legislation within the year.

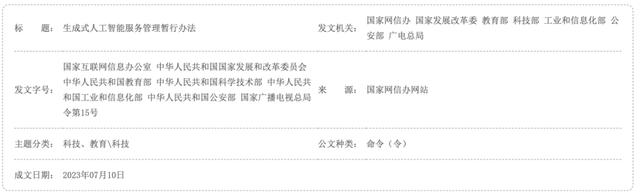

In recent years, China has also issued a number of regulations and policies, such as the Opinions on Strengthening Ethical Governance of Science and Technology, the Administrative Provisions on Deep Synthesis of Internet Information Services, and the Interim Measures for the Administration of Generative Artificial Intelligence Services. On the one hand, they "encourage" the innovation and development of AI-related technologies; on the other, they "set rules" and "draw red lines" to ensure that the development and use of these technologies comply with laws and regulations and respect social morality and ethics.

Whether it is the false information produced by generative AI or the broader risks of AI applications, the impact crosses national borders. AI regulation and governance should therefore be pursued through international cooperation, with countries working together to prevent risks and to build an AI governance framework, standards, and norms that enjoy broad consensus.

Metaverse New Voice believes that AI used for evil does deserve concern, but there is no need for excessive anxiety. As times change, human society adapts through self-regulation: a new technology may upset the balance for a while, but society soon restores stability. In the final analysis, whether it is "AI face-swapping" or "one-click undressing", the fault lies not with AI or with the technology itself. The purpose of regulation is to ensure that when wrongdoing happens, there are laws to deal with it.

In fact, what is truly frightening has never been AI deepfakes themselves but their viral spread, and that spread involves each and every one of us.

Final Thoughts

Warren Buffett has drawn this comparison: "It's like we're letting the genie out of the bottle, especially when we invented nuclear weapons, which have had some negative consequences. The power of that scares me sometimes, and that genie can't be put back in the bottle."

The situation with AI may be similar: it has already been unleashed and is sure to play an important role in many areas. We can only hope that this role will be a positive one.