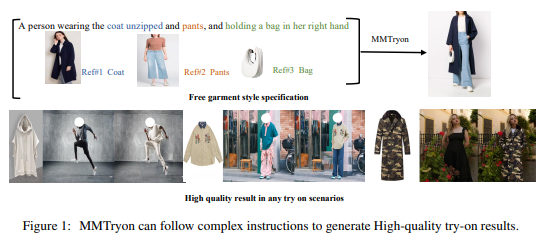

Recently, Sun Yat-sen University and ByteDance's Digital Human team made headlines. They proposed MMTryon, a virtual try-on framework, and it is no small feat. Just input a few pictures of clothes, add a few text instructions on how to wear them, and you can generate a high-quality image of the model wearing them with one click.

Imagine that you select a coat, a pair of pants, and a bag, and then, "snap", they are automatically put on the portrait. Whether the portrait is a real person or a cartoon character, it takes just one click. Pretty cool!

And MMTryon can do more than that. For single-garment try-on, it uses a large amount of data to train a clothing encoder that can handle complex dressing scenes and arbitrary clothing styles. For combined try-on with several garments, it breaks traditional algorithms' reliance on fine-grained clothing segmentation: a single text instruction is enough, and the generated result looks realistic and natural.

In benchmark tests, MMTryon set a new state of the art (SOTA), and that is no exaggeration. The research team also developed a multi-modal multi-reference attention mechanism to make the try-on result more accurate and flexible. Previous virtual try-on solutions either let you try on only a single piece or gave you no control over how the outfit is styled. MMTryon handles both.

MMTryon is also smart about how it does this. It combines a clothing encoder with rich representation capabilities and a novel, scalable data generation process, so it can produce high-quality virtual try-on results directly from text and multiple reference garments, without any segmentation.

Extensive experiments on open-source datasets and in complex scenarios show that MMTryon outperforms existing SOTA methods both qualitatively and quantitatively. The research team also pre-trained a clothing encoder that uses text as a query to activate the features of the region the text refers to, which removes the reliance on clothing segmentation.
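To make the "text as a query" idea a bit more concrete, here is a minimal sketch of generic scaled dot-product cross-attention, where a text embedding scores each image patch feature. It is only an illustration under simplifying assumptions, not the encoder described in the paper: the function names, the 2-dimensional toy vectors, and the use of a single query are all hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def text_query_attention(text_query, patch_features):
    """Score every patch against the text query and pool the patches.

    Hypothetical helper: a generic cross-attention step, not MMTryon's
    actual implementation. Patches act as both keys and values.
    """
    dim = len(text_query)
    scores = [
        sum(q * k for q, k in zip(text_query, patch)) / math.sqrt(dim)
        for patch in patch_features
    ]
    weights = softmax(scores)
    pooled = [
        sum(w * patch[i] for w, patch in zip(weights, patch_features))
        for i in range(dim)
    ]
    return weights, pooled


# Toy example (made-up numbers): patches 0 and 2 resemble the "coat"
# direction of the query, patch 1 does not.
patches = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
query = [4.0, 0.0]
weights, pooled = text_query_attention(query, patches)
print(weights)
```

In this toy setup, the patches that align with the query receive most of the attention weight, which is the intuition behind selecting a garment region with text instead of an explicit segmentation mask.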

What's even more impressive is that, to train combined try-on, the research team proposed a data augmentation scheme based on a large model and built an augmented dataset of 1 million samples, which lets MMTryon produce realistic virtual try-on results across many types of outfits.

MMTryon is like cutting-edge "black tech" for the fashion industry. It can not only help you try on clothes with one click, but also serve as a fashion design assistant that helps you choose outfits. In both quantitative metrics and human evaluation, MMTryon surpasses the other baseline models.

Paper address: https://arxiv.org/abs/2405.00448