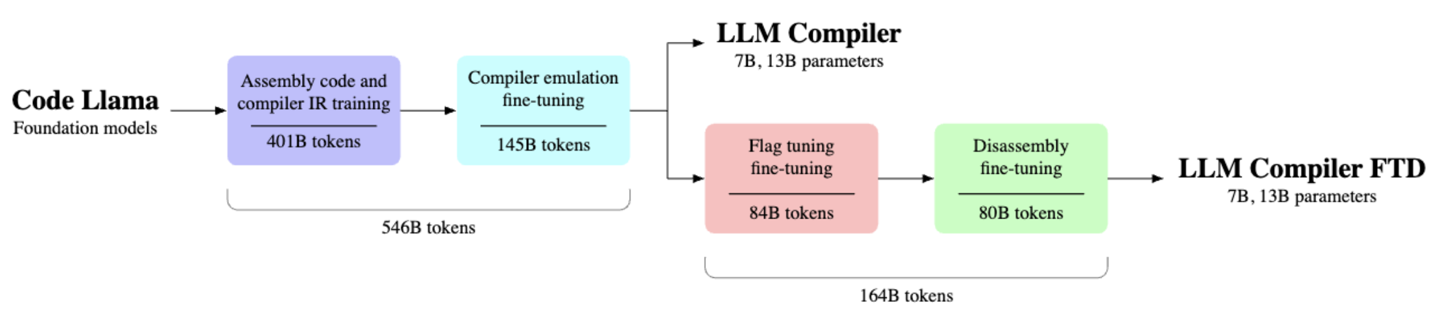

The day before yesterday, Meta released "LLM Compiler", a model built on its existing Code Llama and focused on code optimization. The model is available on Hugging Face in two sizes, 7 billion and 13 billion parameters, and is licensed for both academic and commercial use.

Meta notes that although large language models have shown strong capabilities across a wide range of programming tasks, they still have room for improvement in code optimization. LLM Compiler is a pre-trained model built specifically for code optimization tasks: it can emulate a compiler to optimize code, or "convert optimized code back into its original language."

LLM Compiler was trained on a corpus of 546 billion tokens of LLVM-IR and assembly code, and reportedly reaches 77% of the "code optimization potential". Developers are free to use the models alongside other AI models to improve the quality of generated code.
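The article does not define "code optimization potential". One plausible reading, assumed here rather than taken from Meta, is the share of the achievable improvement over a baseline (for example, code-size reduction relative to a compiler's default pipeline) that the model captures, where "achievable" means the best result found by a search over optimization settings. The hypothetical helpers below sketch that ratio; the function names and all sizes are illustrative only.

```python
from typing import Sequence


def optimization_potential(baseline: int, model: int, best: int) -> float:
    """Fraction of the achievable reduction (baseline -> best) that the model achieves.

    Hypothetical metric: sizes could be, e.g., instruction counts of one program.
    A value of 1.0 means the model matched the best known result.
    """
    achievable = baseline - best
    if achievable <= 0:
        raise ValueError("best must be smaller than baseline")
    return (baseline - model) / achievable


def aggregate_potential(
    baselines: Sequence[int], models: Sequence[int], bests: Sequence[int]
) -> float:
    """Pool the reductions over a benchmark set, then take a single ratio."""
    gained = sum(b - m for b, m in zip(baselines, models))
    achievable = sum(b - t for b, t in zip(baselines, bests))
    if achievable <= 0:
        raise ValueError("no achievable reduction in this benchmark set")
    return gained / achievable


if __name__ == "__main__":
    # Illustrative numbers only: baseline 1000, best found 900, model 923.
    print(f"{optimization_potential(1000, 923, 900):.0%}")  # prints 77%
```

Pooling before dividing weights large programs more heavily than averaging per-program ratios would; which convention, if either, underlies the reported 77% is not stated in the article.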