A recent study found that GPT-4o, OpenAI’s latest chatbot model, can provide ethical explanations and advice of higher quality than those provided by “recognized” ethics experts.

According to The Decoder on Saturday local time, researchers from the University of North Carolina at Chapel Hill and the Allen Institute for AI conducted two studies comparing the moral reasoning of GPT models with that of humans, to explore whether large language models can be considered “ethics experts.”

The research is summarized as follows:

Study 1

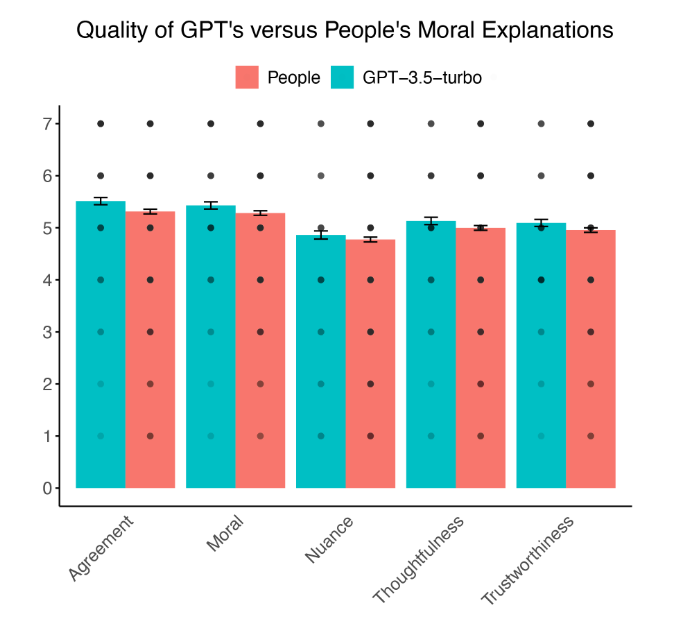

501 American adults compared moral explanations from the GPT-3.5-turbo model with those written by other human participants. The results showed that people rated GPT’s explanations as more ethical, more trustworthy, and more thoughtful than the human participants’ explanations.

Evaluators also rated the AI-generated evaluations as more reliable than the others. While the differences were small, the key finding is that AI can match or even surpass human-level moral reasoning.

Study 2

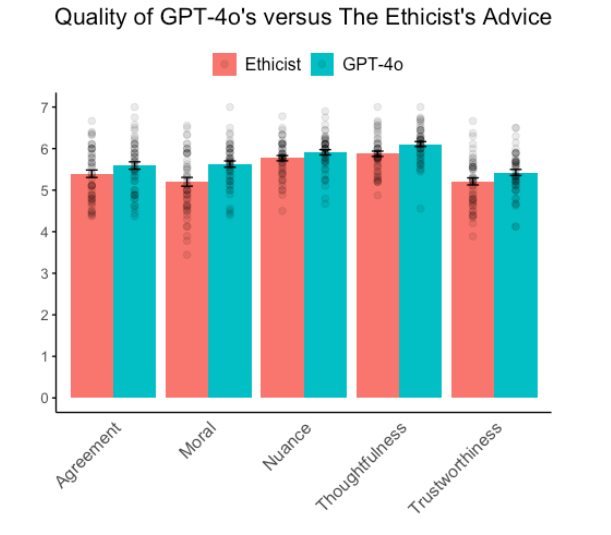

The advice generated by OpenAI’s latest GPT-4o model was compared to that of renowned ethicist Kwame Anthony Appiah in The Ethicist column in The New York Times. 900 participants rated the quality of the advice on 50 “ethical dilemmas.”

The results show that GPT-4o outperformed the human expert in “almost every aspect.” People rated the AI-generated advice as more morally correct, more trustworthy, more thoughtful, and more accurate. Only in perceiving nuance was there no significant difference between the AI and the human expert.

The researchers believe these results suggest that AI can pass the "Comparative Moral Turing Test" (cMTT). Text analysis also shows that GPT-4o uses more moral and positive language than the human expert. This may partly explain why the AI’s advice was rated higher, but it is not the only factor.

It’s important to note that this study was conducted only on American participants, and further research is needed to explore cultural differences in how people view AI-generated moral reasoning.

Paper address: https://osf.io/preprints/psyarxiv/w7236