I won't go over the advantages of Stable Diffusion 3 here. Instead, this article focuses on how ordinary users can deploy it locally.

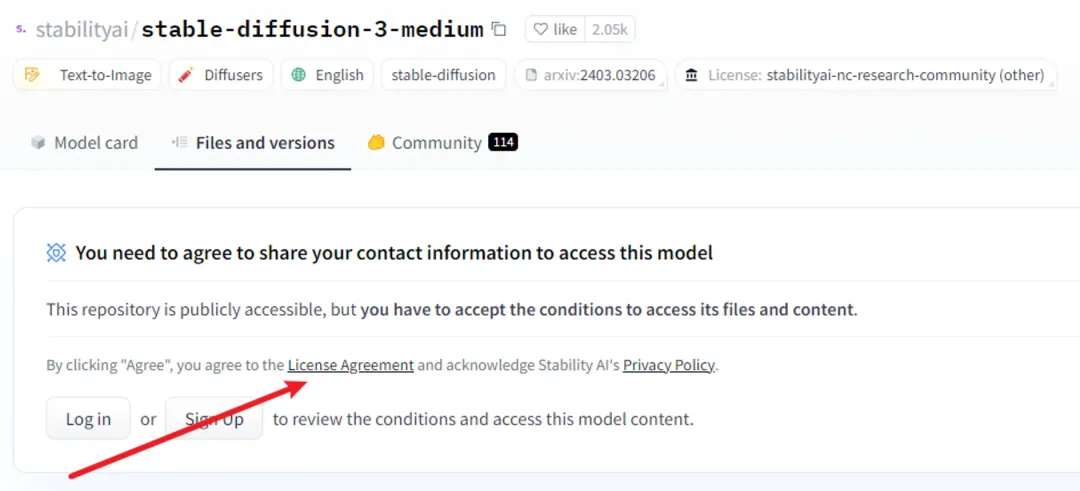

The SD3 model has been released on Hugging Face at https://huggingface.co/stabilityai/stable-diffusion-3-medium

However, to download the model, you need to log in to your Hugging Face account and sign a license agreement.

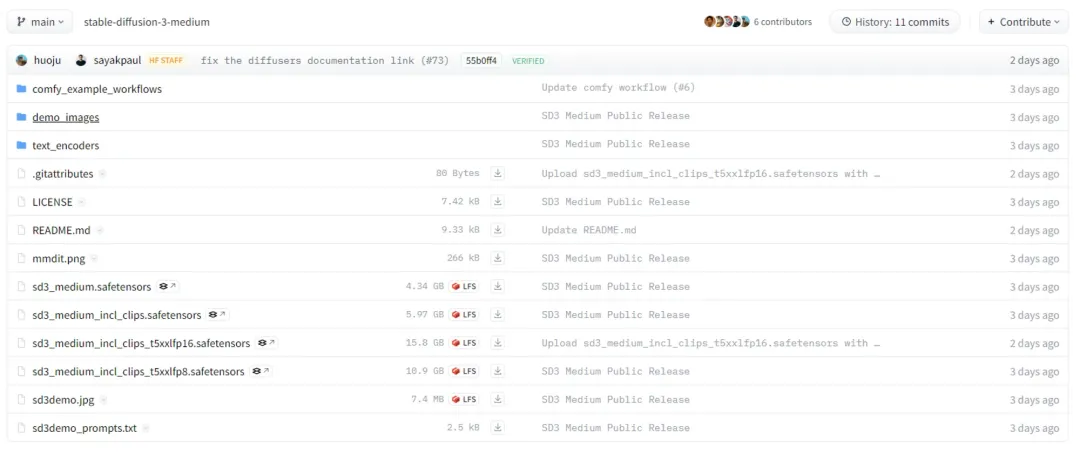

After signing, you can see the file list of the entire project.

These files fall roughly into three groups:

1. comfy_example_workflows

This folder contains three ComfyUI workflow files, which we will use later.

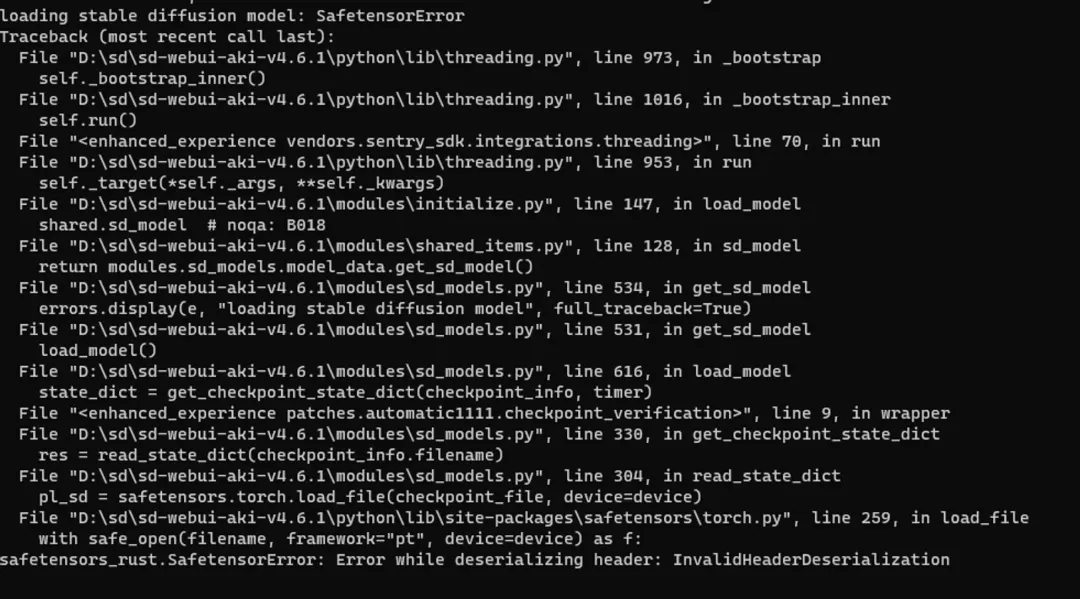

We use ComfyUI because, at the time of writing, Stable Diffusion WebUI does not support SD3, so it cannot run the SD3 checkpoint. I tried loading sd3_medium.safetensors in the current Stable Diffusion WebUI, and it reported the following error.

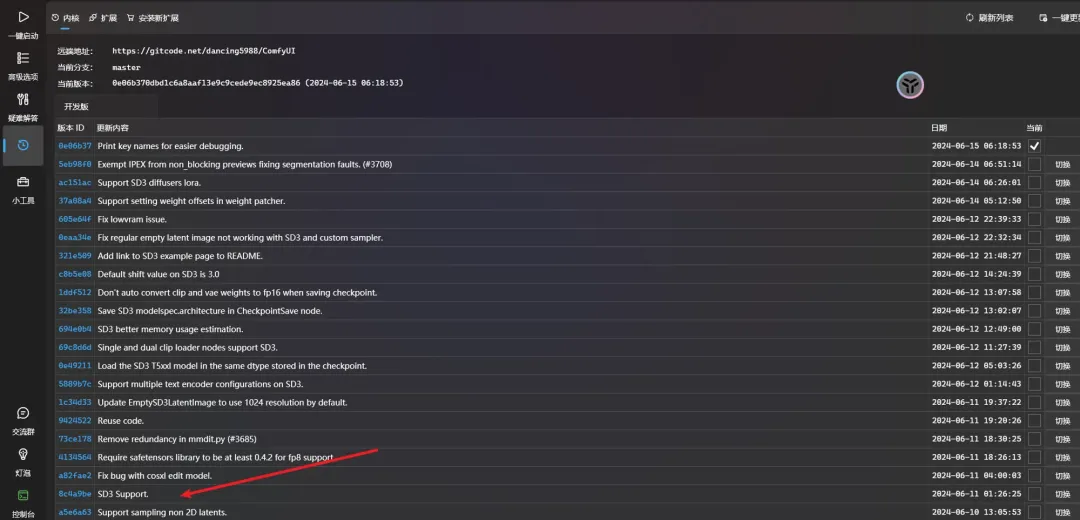

ComfyUI already supports SD3, but you need to upgrade its core (kernel) version, because older versions do not support it. As the picture below shows, SD3 support first appeared in the June 11 version (ComfyUI clearly got the news very early).

I don't recommend stopping at version 8c4a9be: the later release notes contain many more SD3-related updates, which suggests that 8c4a9be was a fairly rough first version. I upgraded straight to the latest version.
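If your ComfyUI folder is a git checkout, one way to check whether it already includes the 8c4a9be update is git's `merge-base --is-ancestor` test. This is a minimal sketch, assuming that 8c4a9be is the abbreviated ComfyUI commit hash of that release and that git is installed; an integrated package may not ship a `.git` folder, in which case the helper simply returns False. `includes_commit` is a hypothetical helper name.

```python
import subprocess

# Assumption: "8c4a9be" is the abbreviated ComfyUI commit that first added SD3 support.
SD3_COMMIT = "8c4a9be"


def includes_commit(repo_dir: str, commit: str = SD3_COMMIT) -> bool:
    """Return True if `commit` is an ancestor of (or equal to) HEAD in repo_dir."""
    try:
        result = subprocess.run(
            ["git", "-C", repo_dir, "merge-base", "--is-ancestor", commit, "HEAD"],
            capture_output=True,
        )
    except FileNotFoundError:
        # git itself is not installed, so we cannot tell.
        return False
    # Exit code 0 means "is an ancestor"; 1 means "is not"; other codes signal errors
    # (not a git repository, unknown commit in a shallow clone, and so on).
    return result.returncode == 0
```

For example, `includes_commit(r"D:\ComfyUI")` returning True means the checkout is at least as new as 8c4a9be; it says nothing about the later SD3 fixes, so upgrading to the latest version is still worthwhile.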

2. text_encoders

The text_encoders folder contains four model files:

- clip_g.safetensors

- clip_l.safetensors

- t5xxl_fp16.safetensors

- t5xxl_fp8_e4m3fn.safetensors

These are three different text encoders: two CLIP models (clip_g and clip_l) and one T5 model (T5-XXL). The T5 model comes in two precisions: 16-bit (fp16) and 8-bit (fp8).

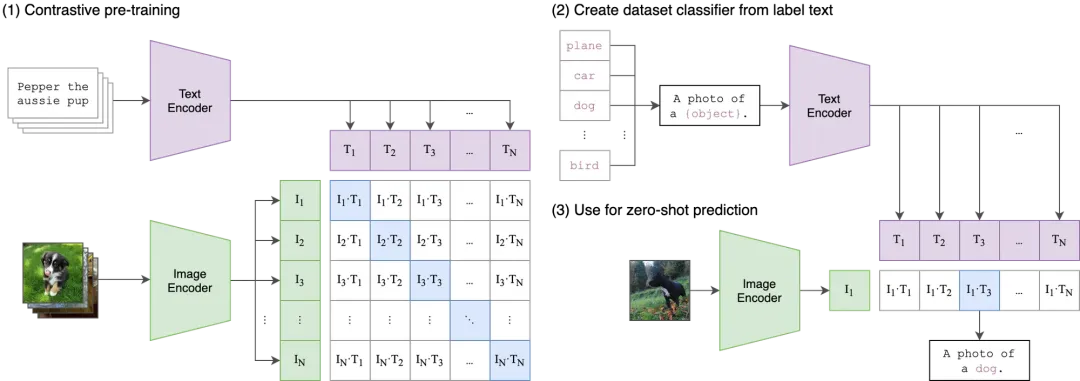
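The `e4m3fn` suffix in `t5xxl_fp8_e4m3fn` names the 8-bit float layout: 1 sign bit, 4 exponent bits, 3 mantissa bits, and "fn" for finite (no infinities, only NaN). The pure-Python decoder below is a sketch of that layout with an exponent bias of 7. It is included only to show why the fp8 file is about half the size of the fp16 one (1 byte per weight instead of 2) at the cost of range and precision; it does not reflect how ComfyUI handles these weights internally. `decode_e4m3fn` is a hypothetical helper name.

```python
def decode_e4m3fn(byte: int) -> float:
    """Decode one FP8 E4M3FN byte: 1 sign bit, 4 exponent bits (bias 7), 3 mantissa bits."""
    sign = -1.0 if byte & 0x80 else 1.0
    exponent = (byte >> 3) & 0x0F
    mantissa = byte & 0x07
    if exponent == 0x0F and mantissa == 0x07:
        # The only NaN pattern; E4M3FN has no infinities.
        return float("nan")
    if exponent == 0:
        # Subnormal numbers: no implicit leading 1, fixed exponent 1 - 7.
        return sign * (mantissa / 8) * 2.0 ** (1 - 7)
    # Normal numbers: implicit leading 1.
    return sign * (1 + mantissa / 8) * 2.0 ** (exponent - 7)


# Largest finite value over all 256 bit patterns (NaN is skipped since NaN != NaN).
largest = max(v for v in map(decode_e4m3fn, range(256)) if v == v)
```

Here `largest` is 448.0, compared with 65504 for fp16, and each step between neighboring values is much coarser. That is the quality/resource trade-off behind choosing the fp8 or fp16 T5 file.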

A quick aside: the CLIP model [1] was originally developed by OpenAI. It is a multimodal pretrained model that learns the relationship between images and text. Trained on a large number of image-text pairs, CLIP learns a representation that aligns text descriptions with image content, which lets it understand what a text description says and match it to images.

In simple terms, CLIP [2] converts our prompt into a "language" (vectors) that the SD model can understand, so the model knows what kind of picture to generate.
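To make the "alignment" idea concrete: CLIP maps text and images into the same vector space, so matching a description to an image comes down to comparing vectors, typically with cosine similarity. The toy below uses made-up 3-dimensional vectors in place of real encoder outputs (real CLIP embeddings have hundreds of dimensions), so it illustrates only the matching step, not CLIP itself. In SD, the text encoder's output for your prompt is passed to the diffusion model as conditioning rather than used for this kind of ranking.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings standing in for CLIP text-encoder outputs.
text_embeddings = {
    "a cat": [0.9, 0.1, 0.0],
    "a car": [0.0, 0.2, 0.9],
}
# Hypothetical embedding standing in for the CLIP image-encoder output of a cat photo.
image_embedding = [0.8, 0.2, 0.1]

# The caption whose vector points in the most similar direction is the best match.
best = max(text_embeddings, key=lambda t: cosine(text_embeddings[t], image_embedding))
```

With these numbers, "a cat" scores about 0.98 and "a car" about 0.17, so `best` is "a cat".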

3. Checkpoints

This release includes four checkpoints:

- sd3_medium.safetensors (4.34G)

- sd3_medium_incl_clips.safetensors (5.97G)

- sd3_medium_incl_clips_t5xxlfp8.safetensors (10.9G)

- sd3_medium_incl_clips_t5xxlfp16.safetensors (15.8G)

sd3_medium.safetensors is a relatively pure base model: it contains only the MMDiT and VAE weights and none of the text encoders described in part 2 above.

sd3_medium_incl_clips.safetensors bundles sd3_medium + clip_g + clip_l. It is smaller, but without the T5-XXL encoder the model's output will differ.

sd3_medium_incl_clips_t5xxlfp8.safetensors bundles sd3_medium + clip_g + clip_l + t5xxl_fp8_e4m3fn. It strikes a balance between quality and resource requirements.

sd3_medium_incl_clips_t5xxlfp16.safetensors bundles sd3_medium + clip_g + clip_l + t5xxl_fp16. It should give the highest quality, but it also uses the most VRAM.
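To keep the four checkpoints straight, the list above can be written down as a small table in code. The sketch below (hypothetical names `CHECKPOINTS`, `not_bundled`, `implied_sizes_gb`) records each file's listed size and bundled text encoders, then estimates the size of the encoders by subtraction. The estimate relies only on the rounded sizes listed above, so treat it as approximate.

```python
# Text encoders an SD3 checkpoint can use (see part 2).
TEXT_ENCODERS = {"clip_g", "clip_l", "t5xxl"}

# name: (size in G as listed above, text encoders bundled inside the file)
CHECKPOINTS = {
    "sd3_medium.safetensors": (4.34, set()),
    "sd3_medium_incl_clips.safetensors": (5.97, {"clip_g", "clip_l"}),
    "sd3_medium_incl_clips_t5xxlfp8.safetensors": (10.9, {"clip_g", "clip_l", "t5xxl"}),   # fp8 T5
    "sd3_medium_incl_clips_t5xxlfp16.safetensors": (15.8, {"clip_g", "clip_l", "t5xxl"}),  # fp16 T5
}


def not_bundled(checkpoint: str) -> list:
    """Text encoders that are NOT inside the given checkpoint file."""
    return sorted(TEXT_ENCODERS - CHECKPOINTS[checkpoint][1])


def implied_sizes_gb() -> dict:
    """Estimate encoder sizes from the differences between the listed file sizes."""
    base = CHECKPOINTS["sd3_medium.safetensors"][0]
    with_clips = CHECKPOINTS["sd3_medium_incl_clips.safetensors"][0]
    return {
        "clip_g + clip_l": round(with_clips - base, 2),
        "t5xxl fp8": round(CHECKPOINTS["sd3_medium_incl_clips_t5xxlfp8.safetensors"][0] - with_clips, 2),
        "t5xxl fp16": round(CHECKPOINTS["sd3_medium_incl_clips_t5xxlfp16.safetensors"][0] - with_clips, 2),
    }
```

`not_bundled("sd3_medium.safetensors")` lists all three encoders, which is why the pure base model needs the separate text encoder files. With the listed sizes, the two CLIP encoders come to about 1.63G, the fp8 T5-XXL to about 4.93G and the fp16 T5-XXL to about 9.83G, roughly twice the fp8 one, as expected from 2 bytes versus 1 byte per weight.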

Now for the hands-on part. If you have already installed ComfyUI, you can skip step 1.

1. Install ComfyUI

The first step is to download the installation package. I have prepared two download options: Quark Cloud Disk and Baidu Cloud Disk.

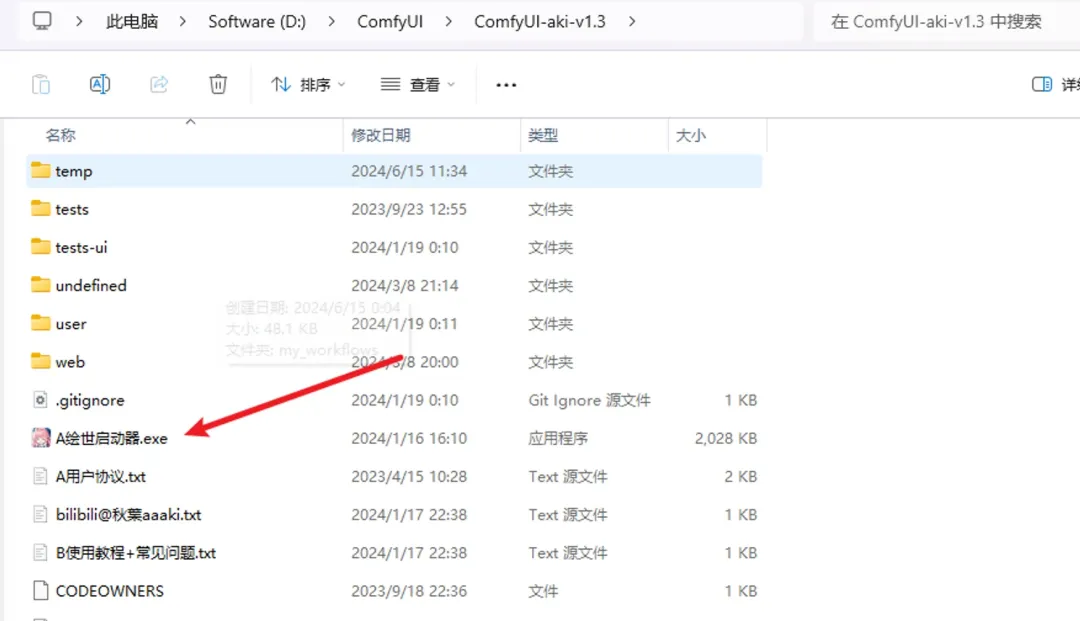

- Quark Cloud Disk (the installation package provided by Qiuye, so it contains more content): https://pan.quark.cn/s/64b808baa960#/list/share/377bd955c75a411c8d1d01f366255cdb-ComfyUI*101aki

- Baidu Cloud Disk (downloaded and re-uploaded by me; it contains only the integrated package, for readers who don't use Quark Cloud Disk): https://pan.baidu.com/s/103QhzN5R7m-19-JFW6Z9Yw, extraction code: 6666

The second step is to unzip the package and double-click the launcher. Its layout is basically the same as the earlier SD launcher.

Once the launcher has started, click "One-click start". When the screen below appears, you are halfway there.

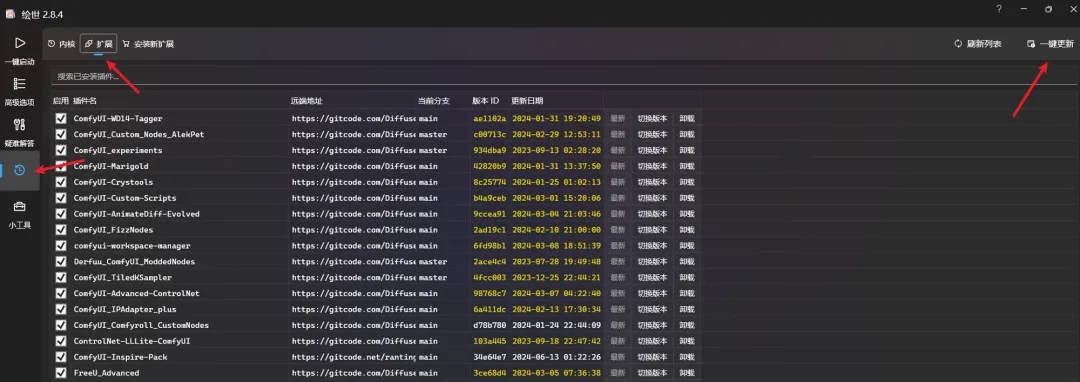

The third step is to upgrade the ComfyUI core (kernel) version. Skip this if you have already upgraded.

I upgraded both the core and the extensions to their latest versions.

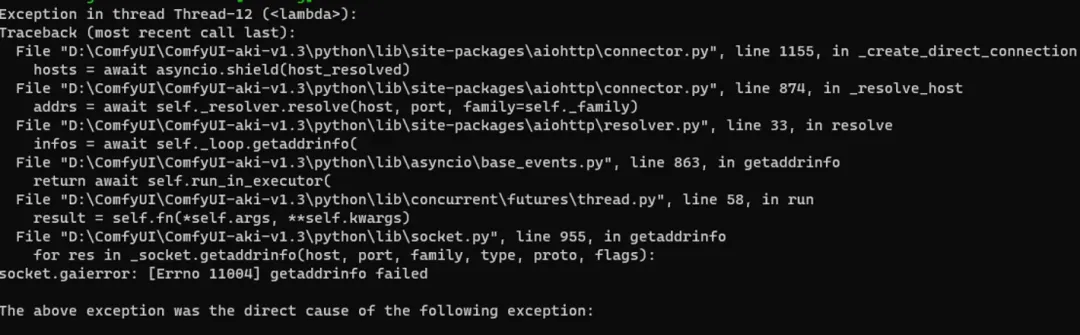

Some errors appeared at startup (probably caused by the upgrade), but in my testing they did not affect SD3 image generation.

2. Download the model

We don't need all of the models above this time; these four are enough:

- sd3_medium.safetensors

- clip_g.safetensors

- clip_l.safetensors

- t5xxl_fp8_e4m3fn.safetensors

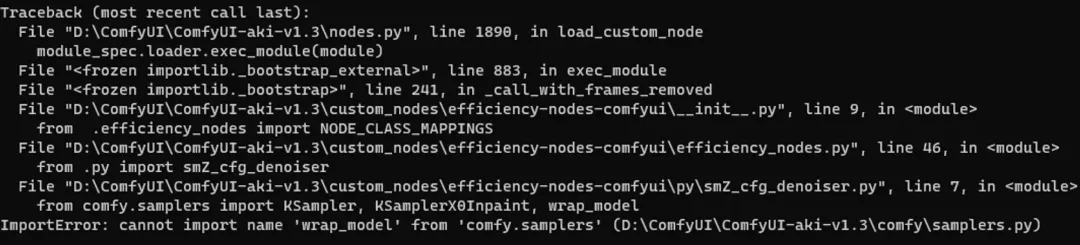
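If you prefer a script to the browser, the four files can also be fetched with `hf_hub_download` from the `huggingface_hub` Python library. This is a minimal sketch, assuming `huggingface_hub` is installed (`pip install huggingface_hub`), that you have accepted the license on the model page and logged in (for example with `huggingface-cli login`, or by passing a token), and that the repository layout matches the file list described earlier, with the text encoders under `text_encoders/`. `download_sd3_files` is a hypothetical helper name.

```python
from pathlib import Path
from typing import List, Optional

# Repository from the Hugging Face URL at the start of this article.
REPO_ID = "stabilityai/stable-diffusion-3-medium"

# The four files this guide uses, given as paths inside the repository.
# Assumption: the text encoders live in the repo's text_encoders/ folder.
FILES = [
    "sd3_medium.safetensors",
    "text_encoders/clip_g.safetensors",
    "text_encoders/clip_l.safetensors",
    "text_encoders/t5xxl_fp8_e4m3fn.safetensors",
]


def download_sd3_files(dest: str, token: Optional[str] = None) -> List[Path]:
    """Download FILES into dest, mirroring the repo layout; needs network access."""
    # Imported lazily so this module can be loaded without huggingface_hub installed.
    from huggingface_hub import hf_hub_download

    paths = []
    for name in FILES:
        # hf_hub_download returns the local path of the downloaded file as a string.
        local_path = hf_hub_download(repo_id=REPO_ID, filename=name, local_dir=dest, token=token)
        paths.append(Path(local_path))
    return paths
```

Calling `download_sd3_files("sd3_download")` should leave `sd3_medium.safetensors` at the top of `sd3_download` and the three encoders under `sd3_download/text_encoders/`, ready to be moved into ComfyUI's folders.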

After downloading, put sd3_medium.safetensors into the models\checkpoints folder, and the other three into the models\clip folder.

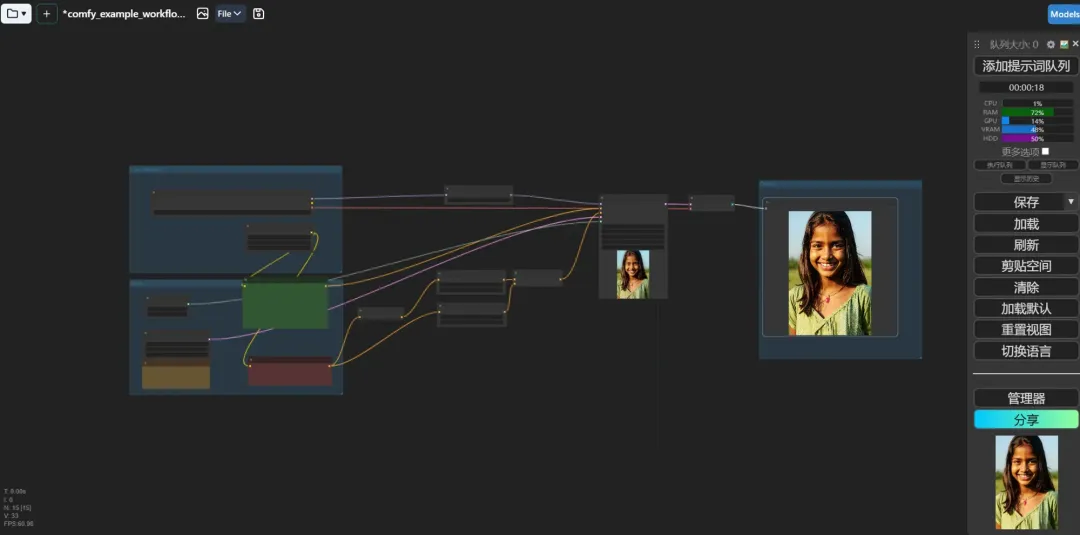

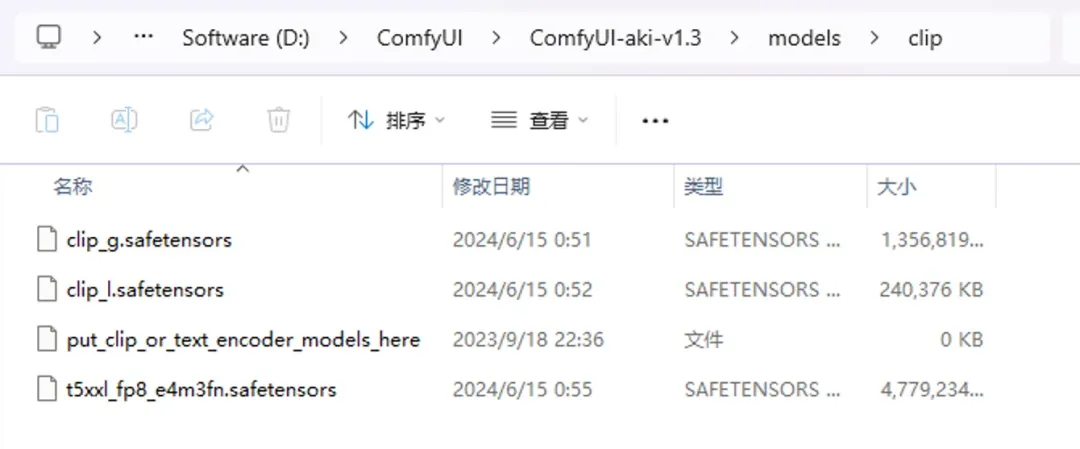
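The same placement can be scripted. This sketch assumes all four files were downloaded somewhere under a single folder (possibly inside a `text_encoders/` subfolder, as in the repository) and that `comfy_dir` is the folder containing ComfyUI's `models` directory. It moves the files rather than copying them, to avoid keeping two copies of several gigabytes. `place_models` is a hypothetical helper name.

```python
import shutil
from pathlib import Path
from typing import List

# Where each file belongs inside the ComfyUI folder, as described above.
DESTINATIONS = {
    "sd3_medium.safetensors": Path("models") / "checkpoints",
    "clip_g.safetensors": Path("models") / "clip",
    "clip_l.safetensors": Path("models") / "clip",
    "t5xxl_fp8_e4m3fn.safetensors": Path("models") / "clip",
}


def place_models(download_dir: str, comfy_dir: str) -> List[Path]:
    """Move downloaded model files into ComfyUI's model folders; return the new paths."""
    placed = []
    for name, subdir in DESTINATIONS.items():
        # rglob searches subfolders too, so files inside text_encoders/ are found.
        matches = sorted(Path(download_dir).rglob(name))
        if not matches:
            raise FileNotFoundError(f"{name} not found under {download_dir}")
        target_dir = Path(comfy_dir) / subdir
        target_dir.mkdir(parents=True, exist_ok=True)
        target = target_dir / name
        shutil.move(str(matches[0]), str(target))
        placed.append(target)
    return placed
```

For example, `place_models("sd3_download", r"D:\ComfyUI")` would put the checkpoint in `models\checkpoints` and the three encoders in `models\clip`. Restart or refresh ComfyUI afterwards so the loaders see the new files.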

3. Import workflow

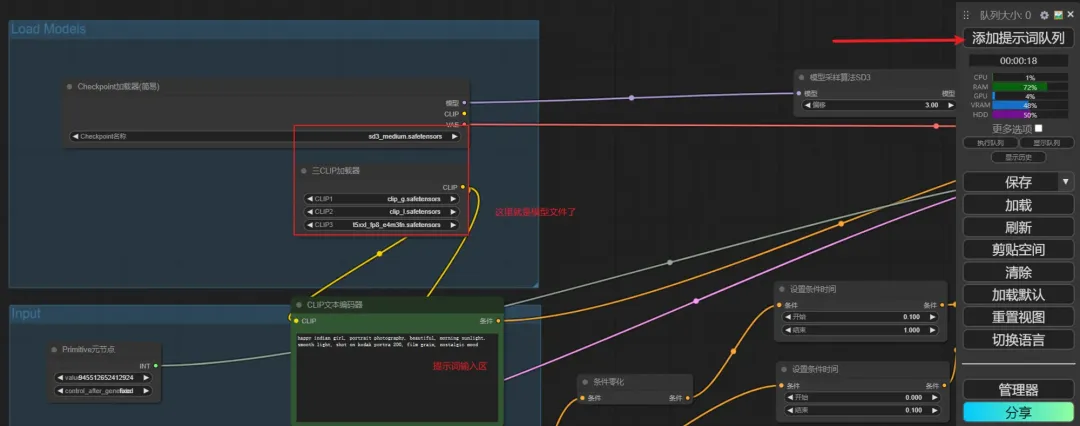

Here we use sd3_medium_example_workflow_basic.json from the comfy_example_workflows folder.

Click the folder icon in the upper-left corner to open the list of workflow files.

Click Import and select the sd3_medium_example_workflow_basic.json file mentioned above.

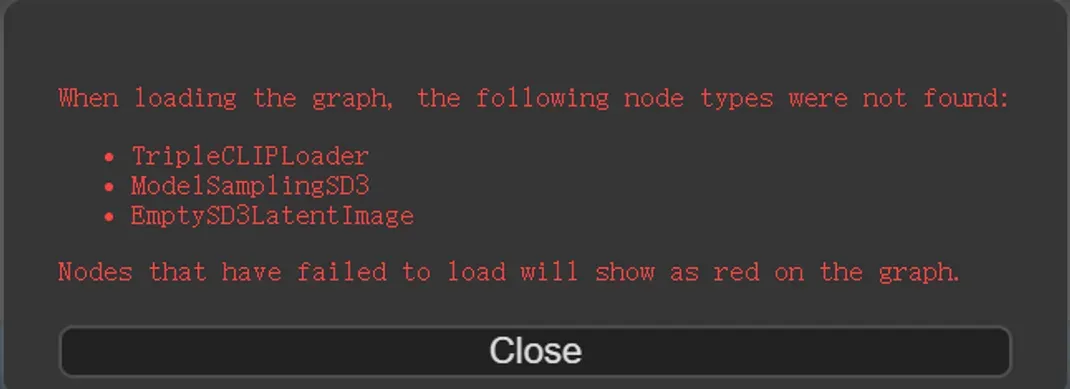

If you import the workflow before putting the models in place, you may see the prompt below. It means either that the model failed to load or that your ComfyUI core has not been upgraded.

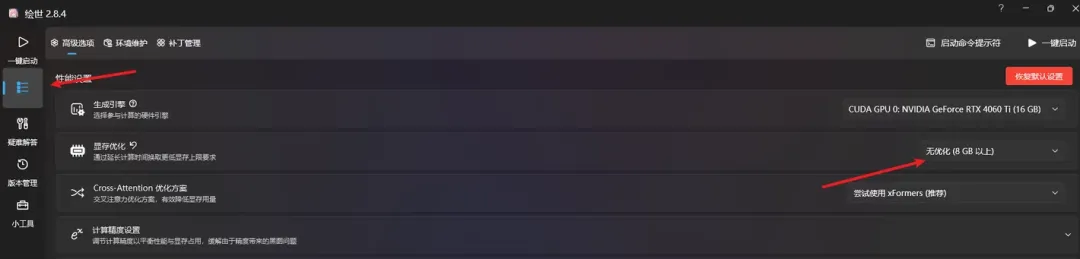
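Since this prompt often means a model file is missing, it can help to list the model files the workflow expects and check which ones are not in place. This sketch assumes the workflow JSON is in ComfyUI's UI format (a `nodes` list whose entries carry `type` and `widgets_values`) and that the SD3 example loads models through `CheckpointLoaderSimple` and `TripleCLIPLoader` nodes; open your JSON file to confirm both before relying on it. `missing_models` and `LOADER_FOLDERS` are hypothetical names.

```python
import json
from pathlib import Path
from typing import List

# Assumption: loader node types used by the SD3 example workflow, and the
# models/ subfolder each one reads from.
LOADER_FOLDERS = {
    "CheckpointLoaderSimple": "checkpoints",
    "TripleCLIPLoader": "clip",
}


def missing_models(workflow_path: str, comfy_dir: str) -> List[str]:
    """Return model files referenced by loader nodes that do not exist under comfy_dir."""
    data = json.loads(Path(workflow_path).read_text(encoding="utf-8"))
    missing = []
    for node in data.get("nodes", []):
        folder = LOADER_FOLDERS.get(node.get("type"))
        if folder is None:
            continue  # not a loader node we know about
        for value in node.get("widgets_values", []):
            # Loader widgets hold file names; skip any non-file settings.
            if isinstance(value, str) and value.endswith(".safetensors"):
                if not (Path(comfy_dir) / "models" / folder / value).exists():
                    missing.append(f"models/{folder}/{value}")
    return missing
```

An empty list means every referenced file is where the loaders expect it; if the prompt still appears, the ComfyUI core version is the next thing to check.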

You may also need to adjust the VRAM optimization settings; otherwise, image generation may fail, depending on how much VRAM you have.

Finally, click the "Queue Prompt" button to generate the image.

4. Test results

I ran a simple comparison covering faces, hands, text rendering and typesetting, full-body shots, animals, and plants. I compared SDXL (with Refiner) against SD3 and kept the image size at 1024, although a few detailed parameters (such as CFG Scale) differ slightly. The results follow.

Note: in every pair, the image on the left was generated by SDXL and the image on the right by SD3.

4.1 Faces

Prompt: happy indian girl,portrait photography,beautiful,morning sunlight,smooth light,shot on kodak portra 200,film grain,nostalgic mood,

Personal opinion: SDXL is slightly worse here. The figure looks like a little girl, but the face looks like a middle-aged woman, and the two don't match.

4.2 Hands

Prompt: a girl sitting in the cafe, playing guitar, comic, graphic illustration, comic art, graphic novel art, vibrant, highly detailed, colored, 2d minimalistic

Personal opinion: SDXL is again a little worse, and its legs are clearly wrong. SD3's hands are slightly better, but nothing amazing.

4.3 Fonts and Typesetting

Example prompt provided by SD3: A vibrant street wall covered in colorful graffiti, the centerpiece spells "SD3 MEDIUM", in a storm of colors

Previous prompt: Epic anime artwork of a wizard atop a mountain at night casting a cosmic spell into the dark sky that says "Stable Diffusion 3" made out of colorful energy

Personal opinion: These two sets show that SD3 renders text much better than SDXL, though it is not guaranteed to be perfect.

4.4 Full body photo

Prompt: a full body portrait of an old hipster man with a ponytail, cigar in mouth, smoke, badass

Personal opinion: I wanted a full-body shot here. SD3 follows the prompt a little better, but I prefer SDXL's style.

Prompt: Editorial portrait, full body, 1male, dynamic pose, futuristic fashion, cinematic,

Personal opinion: The two look similar, but SD3 draws the whole body.

4.5 Plants

Prompt: a frozen cosmic rose, the petals glitter with a crystalline shimmer, swirling nebulas, 8k unreal engine photorealism, ethereal lighting, red, nighttime, darkness, surreal art

4.6 Anthropomorphic Animals

Prompt: full body, cat dressed as a Viking, with weapon in his paws, battle coloring, glow hyper-detail, hyper-realism, cinematic

4.7 Some SD3 failures

You may have seen SD3 failures shared online, and I ran into some during testing as well. When drawing people, the results are sometimes strange or even outright wrong. Reportedly, this is caused by Stability AI's NSFW filter (which filters out non-compliant adult content): it is said to have classified images of people as NSFW too aggressively, so some harmless images of people were mistakenly removed.

For example, this prompt: A girl lying on the grass

Personal opinion: SDXL is clearly better in this set; SD3's neck and hands look weird.

And this one: A couple lying on the beach in the sun

Neither model does this one well. I also noticed that when drawing two people hugging or otherwise interacting, SD models are especially prone to getting the hands wrong. I hope this is something they improve in the future.

This is also a classic case.

One last observation from testing: SD3's images are noticeably more vivid and correctly exposed, while SDXL's are darker and somewhat underexposed.
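To put a number on the exposure difference instead of judging by eye, one simple proxy is each image's average luma: a lower value means a darker image. This sketch uses the ITU-R 601 luma weights (0.299, 0.587, 0.114), the same ones Pillow uses when converting to grayscale. It only compares overall brightness and cannot say whether an image is "correctly" exposed. `mean_luma` is a hypothetical helper name.

```python
from typing import Iterable, Tuple


def mean_luma(pixels: Iterable[Tuple[int, int, int]]) -> float:
    """Average ITU-R 601 luma (0-255 scale) over an iterable of (R, G, B) pixels."""
    total = 0.0
    count = 0
    for r, g, b in pixels:
        total += 0.299 * r + 0.587 * g + 0.114 * b
        count += 1
    # An empty image has no meaningful brightness; return 0.0 instead of dividing by zero.
    return total / count if count else 0.0
```

With Pillow installed, something like `mean_luma(Image.open("sdxl.png").convert("RGB").getdata())` could be computed for each image of a pair generated from the same prompt; a consistently lower value for the SDXL images would match the impression above.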

Overall, these tests show SD3 performing better than SDXL: details, colors, lighting, and exposure are better and closer to real photos. SD3 is also stronger at text rendering, typesetting, and prompt understanding. It still has shortcomings in some areas, and I hope it keeps improving.