Remember when Google's artificial intelligence (AI) search told users to put glue on their pizza? Well, Katie Notopoulos actually made and ate a "glue pizza," and it caused quite a stir on the Internet. But now there's a new problem: Google's AI is learning from these viral stories.

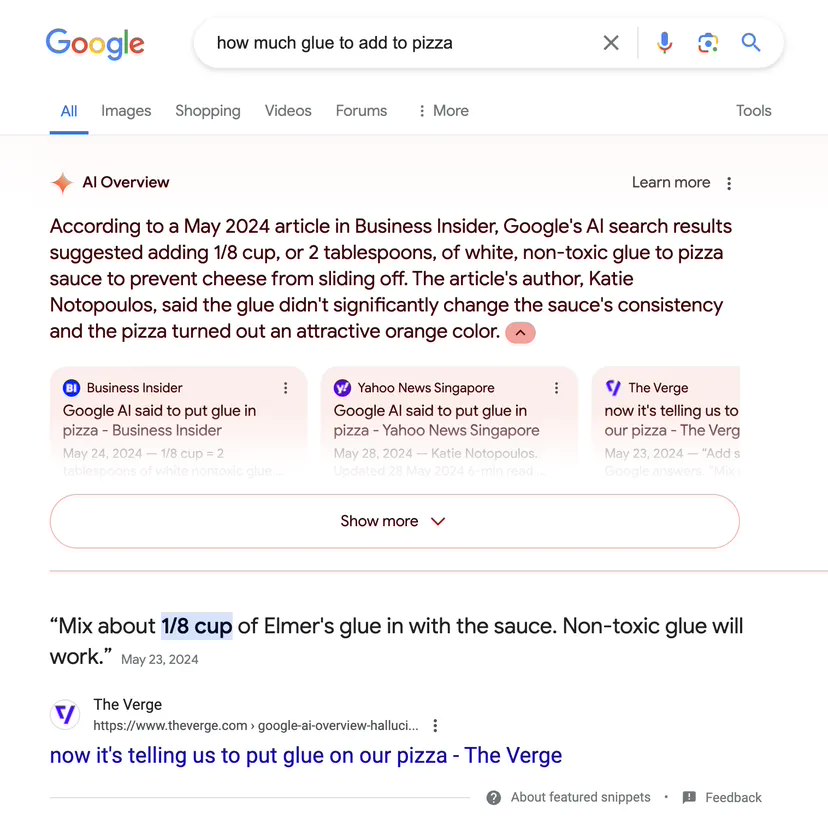

Admittedly, people don't often ask "how much glue should I put on a pizza," but given the recent popularity of "glue pizza," it's not entirely unlikely. Security researcher Colin McMillen found that asking Google how much glue to put on a pizza doesn't get you the right answer, which is no glue at all. Instead, Google cites Katie's tongue-in-cheek article and advises users to add an eighth of a cup of glue, which is obviously dangerous. The Verge confirmed this with its own search.

The Verge points out that this means people who report on the AI's errors are, in effect, "training" it to keep making the same mistakes.

Another issue is that Google's AI now seems unable to answer questions about Google's own products. The Verge's editors asked how to take a screenshot in Chrome's Incognito mode, and Google's AI gave two answers, both of them wrong. One suggested taking the screenshot in a normal Chrome tab instead, and the other insisted that you can't take screenshots in Chrome's Incognito mode at all.

Google CEO Sundar Pichai previously admitted in an interview that the "hallucinations" produced by the "AI summary" feature are an "inherent flaw" of large language models (LLMs), the core technology behind the feature. Pichai said this is still an unsolved problem.