Hello friends, today I'm sharing the SDXL model for Stable Diffusion, along with some hands-on exercises. I've covered Stable Diffusion models on and off before, such as the 1.X and 2.X models and the SD3 (2B) model due to be open-sourced on June 12. Between 2.X and SD3, however, around July 2023, Stability AI also released a large model called SDXL, which is the subject of today's post.

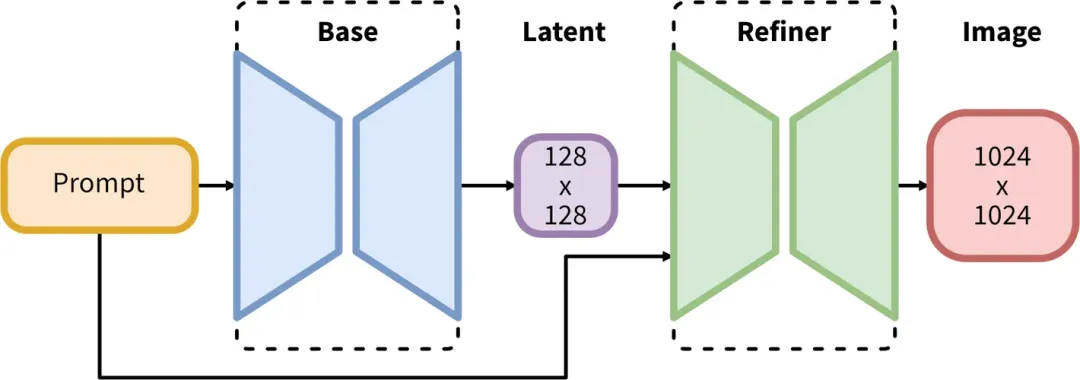

Unlike the earlier SD1.5 model, SDXL generates images in two steps.

With SD1.5, generation went Prompt → Base → Image, which is simple and direct. SDXL adds a Refiner step in the middle: Prompt → Base → Refiner → Image. What does the Refiner do? It automatically polishes the image to improve quality and clarity, reducing the need for manual intervention.

In other words, SDXL first uses a base model (Base) to generate an image that is roughly right, then polishes it with an image refinement model (Refiner), so the final image comes out at higher quality. Previously we often had to do this tuning by other means, such as HD restoration or face restoration.
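To make the Base-to-Refiner hand-off concrete, here is a minimal sketch of how a fixed number of sampling steps might be divided between the two stages. The names `split_steps`, `describe_pipeline`, and the `switch_at` fraction are illustrative assumptions, not part of SDXL itself; the only idea taken from the text is that the Base model works first and the Refiner finishes the image.

```python
def split_steps(total_steps: int, switch_at: float) -> tuple[int, int]:
    """Divide a sampling budget between a base stage and a refiner stage.

    switch_at is a hypothetical parameter: the fraction of steps given to
    the base model (0.8 means the base model runs the first 80% of steps).
    """
    if total_steps < 1:
        raise ValueError("total_steps must be at least 1")
    if not 0.0 <= switch_at <= 1.0:
        raise ValueError("switch_at must be between 0 and 1")
    base_steps = round(total_steps * switch_at)
    return base_steps, total_steps - base_steps


def describe_pipeline(total_steps: int, switch_at: float) -> str:
    """Render the two-step flow described above as a single line of text."""
    base_steps, refiner_steps = split_steps(total_steps, switch_at)
    return (
        f"Prompt -> Base ({base_steps} steps) -> "
        f"Refiner ({refiner_steps} steps) -> Image"
    )
```

Setting `switch_at` to 1.0 gives the Refiner zero steps, which corresponds to running the base model alone.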

Besides higher output quality, SDXL has the following advantages:

- Support for higher-resolution images (1024 x 1024)

- Better prompt comprehension; relatively short prompts can produce good results

- Fewer missing limbs and extra fingers than the SD1.5 model

- More diverse styles

Of course, nothing is perfect, and SDXL has some limitations:

1. Low-resolution output is poor

Since SDXL was trained entirely on 1024x1024 images, it produces its best quality at that resolution. The flip side is that at lower resolutions (e.g. 512x512) its output quality drops, even below that of models such as SD1.5.

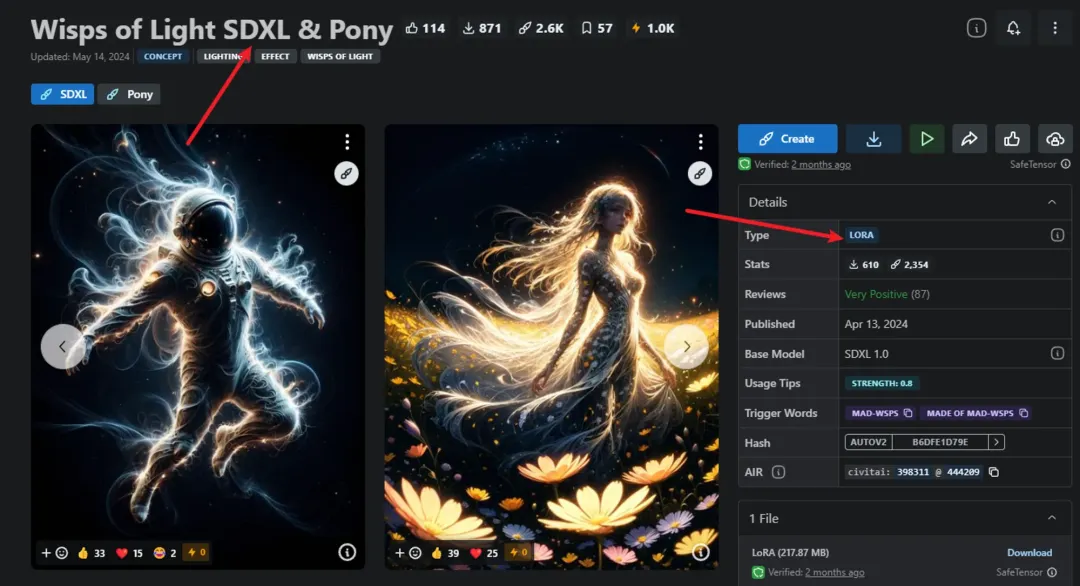
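As a rough illustration of the resolution point, the sketch below compares a requested size against the 1024 x 1024 pixel budget. The helper names and the 0.75 threshold are arbitrary assumptions chosen for the example, not values from SDXL or the WebUI.

```python
NATIVE_SIDE = 1024  # SDXL's training resolution, as stated in the text


def pixel_ratio(width: int, height: int) -> float:
    """Return the requested pixel count relative to 1024 x 1024."""
    return (width * height) / (NATIVE_SIDE * NATIVE_SIDE)


def is_reasonable_for_sdxl(width: int, height: int, min_ratio: float = 0.75) -> bool:
    """Heuristic check; min_ratio is an arbitrary illustrative threshold."""
    return pixel_ratio(width, height) >= min_ratio
```

A 512x512 request covers only a quarter of the pixels SDXL was trained on, which is one way to see why it falls outside the model's comfort zone.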

2. Incompatible with old LoRA models

Older LoRA and ControlNet models made for SD1.5 and 2.x will probably not work, so you will have to find SDXL versions of them.

3. Higher GPU memory requirements (covered in detail below)

4. Image generation also takes longer

Now that we've briefly covered the SDXL model and its pros and cons, it's time to get down to business!

I. Downloading the models

The download is a little different this time, because we need three models: sd_xl_base_1.0.safetensors [1], sd_xl_refiner_1.0.safetensors [2] and sdxl_vae.safetensors [3].

The addresses of the three models are:

- https://huggingface.co/stabilityai/stable-diffusion-xl-base-1.0/tree/main

- https://huggingface.co/stabilityai/stable-diffusion-xl-refiner-1.0/tree/main

- https://huggingface.co/stabilityai/sdxl-vae/tree/main
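If you would rather script the download than click through the pages, the sketch below builds a direct file URL for each model. It assumes each file sits at the top level of its repository and that Hugging Face's usual `resolve/main` URL pattern applies; the helper names are made up for this example, and nothing is downloaded here.

```python
# File name -> Hugging Face repository, taken from the three links above.
MODELS = {
    "sd_xl_base_1.0.safetensors": "stabilityai/stable-diffusion-xl-base-1.0",
    "sd_xl_refiner_1.0.safetensors": "stabilityai/stable-diffusion-xl-refiner-1.0",
    "sdxl_vae.safetensors": "stabilityai/sdxl-vae",
}


def direct_url(repo_id: str, filename: str, revision: str = "main") -> str:
    """Build a direct-download URL (assumes the file is at the repo root)."""
    return f"https://huggingface.co/{repo_id}/resolve/{revision}/{filename}"


def download_plan() -> list[tuple[str, str]]:
    """Return (filename, url) pairs for the three SDXL files."""
    return [(name, direct_url(repo, name)) for name, repo in MODELS.items()]


if __name__ == "__main__":
    for name, url in download_plan():
        print(f"{name}: {url}")
```

Each URL can then be passed to whatever download tool you prefer; the files are several gigabytes each, so a tool that can resume interrupted downloads is a sensible choice.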

If you can't reach Hugging Face (no "magic", i.e. no VPN), I've also provided a Baidu Netdisk download link here, although without a membership the download will probably take a long time as well.

💡 Link: https://pan.baidu.com/s/1rd5ysWk8zES2dXjXPDbH4w Extraction code: crel

II. Loading the models

Once the models are downloaded, put sd_xl_base_1.0.safetensors and sd_xl_refiner_1.0.safetensors into models\Stable-diffusion under the root directory, and sdxl_vae.safetensors into models\VAE.

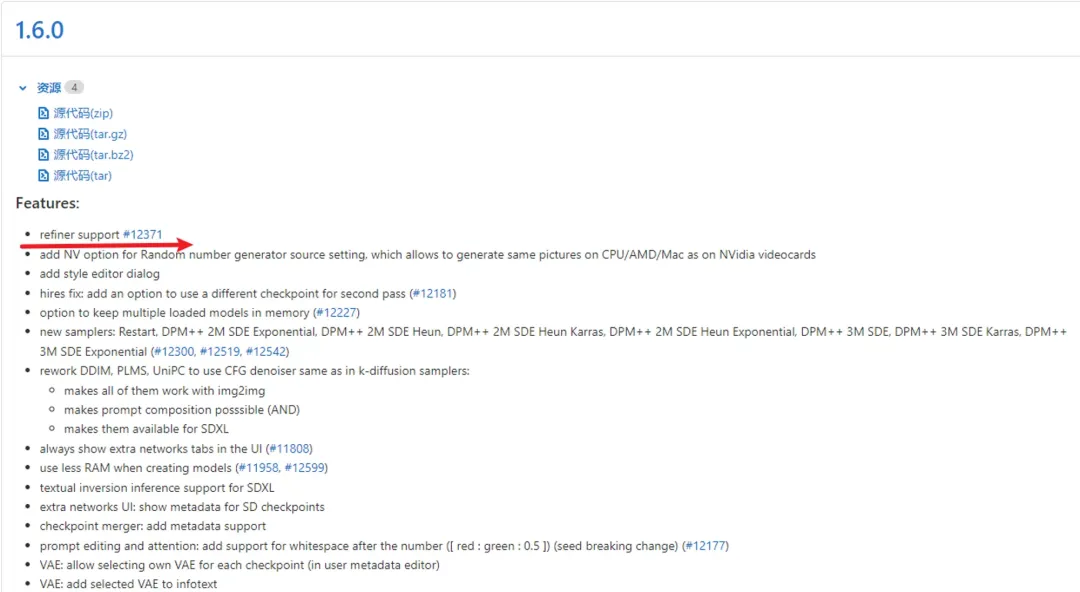

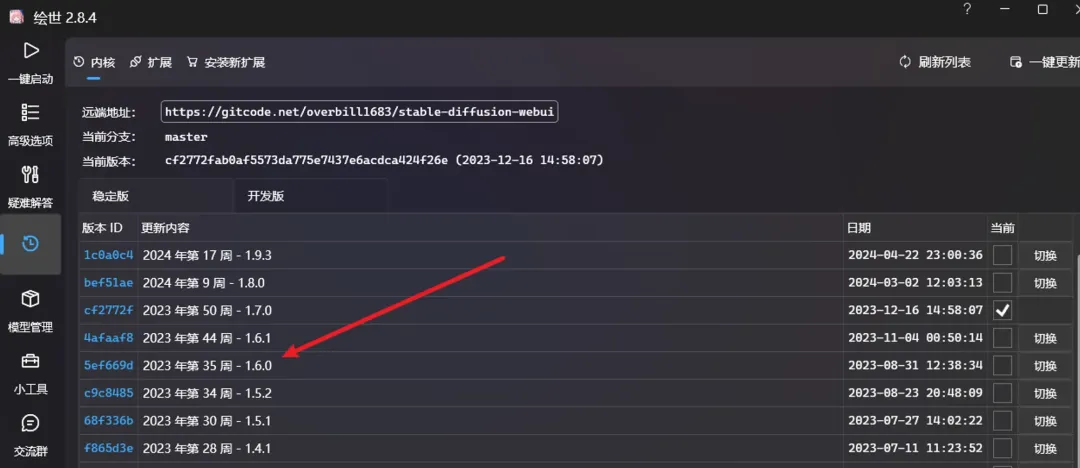
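A quick way to confirm the files ended up in the right place is a small check like the one below. It is only a sketch: `missing_models` is a hypothetical helper, and `webui_root` stands for wherever your installation's root directory happens to be.

```python
from pathlib import Path

# Expected folder (relative to the WebUI root) for each file, per the text above.
TARGET_DIRS = {
    "sd_xl_base_1.0.safetensors": Path("models") / "Stable-diffusion",
    "sd_xl_refiner_1.0.safetensors": Path("models") / "Stable-diffusion",
    "sdxl_vae.safetensors": Path("models") / "VAE",
}


def missing_models(webui_root: Path) -> list[str]:
    """Return the model files that are not yet in their expected folder."""
    return [
        name
        for name, folder in TARGET_DIRS.items()
        if not (webui_root / folder / name).is_file()
    ]
```

Calling `missing_models(Path("."))` from the root directory returns an empty list once all three files are in place.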

One thing to note: according to the release log, loading the Refiner model is only supported from SD 1.6.0 onward, so if you want to use it, check that your version is new enough. The SD 1.6.0 here refers to the kernel (WebUI) version, not the model version (don't be misled by the abbreviation).

The kernel version refers to the version shown here.

Another thing to note is that upgrading seems prone to compatibility problems, and SDXL does not strictly require the Refiner. Using the base model (Base) alone also works, although the results will be slightly worse. It is therefore worth doing a bit of research before you upgrade.
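The version requirement can be expressed as a simple comparison. The sketch below is illustrative only: `parse_version` and `supports_refiner` are made-up helper names, and it assumes the version string starts with a plain `major.minor.patch` number, optionally prefixed with `v`.

```python
import re


def parse_version(text: str) -> tuple[int, int, int]:
    """Extract (major, minor, patch) from strings such as 'v1.6.0' or '1.6.0'."""
    match = re.match(r"\s*v?(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"unrecognised version string: {text!r}")
    major, minor, patch = (int(group) for group in match.groups())
    return major, minor, patch


def supports_refiner(kernel_version: str) -> bool:
    """True if the kernel version is at least 1.6.0, per the release log."""
    return parse_version(kernel_version) >= (1, 6, 0)
```

Comparing tuples of integers rather than raw strings avoids the classic mistake of treating 1.10.0 as older than 1.6.0.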

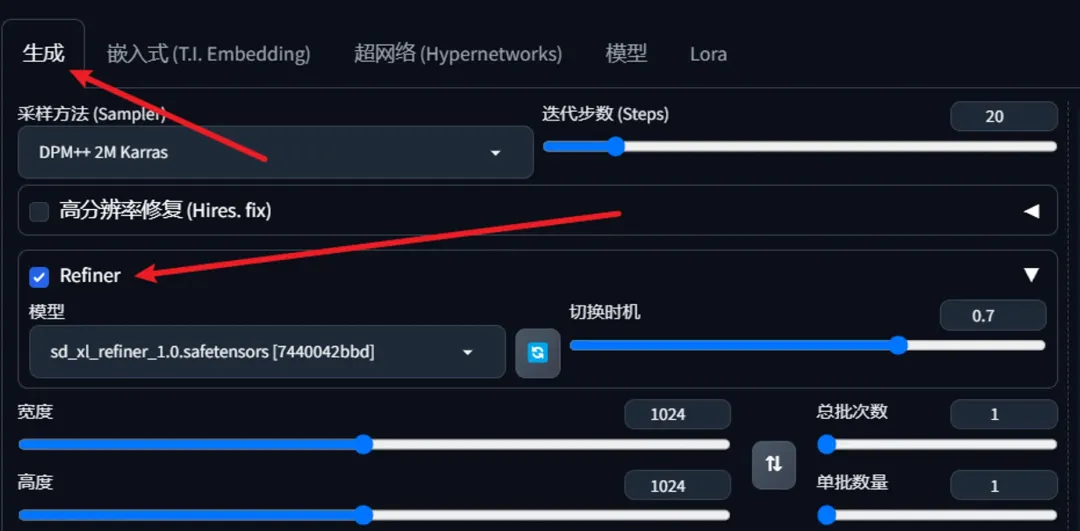

Finally, the Refiner's location may not be obvious: it is inside the Generation tab.

III. Video memory requirements

Since SDXL's model and output size are much larger than SD1.5's, it needs more video memory and more time to generate images.

How much video memory do you need? A common claim online is that the minimum for running SDXL is 8G. My tests suggest the figure is a useful reference: with some optimization, 8G can indeed run it, but without any optimization, 8G is not enough.

My own graphics card is a 4060 Ti 16G, with 32G of system RAM, and I ran a few small tests on this setup (all at 1024 x 1024).

Prompt:

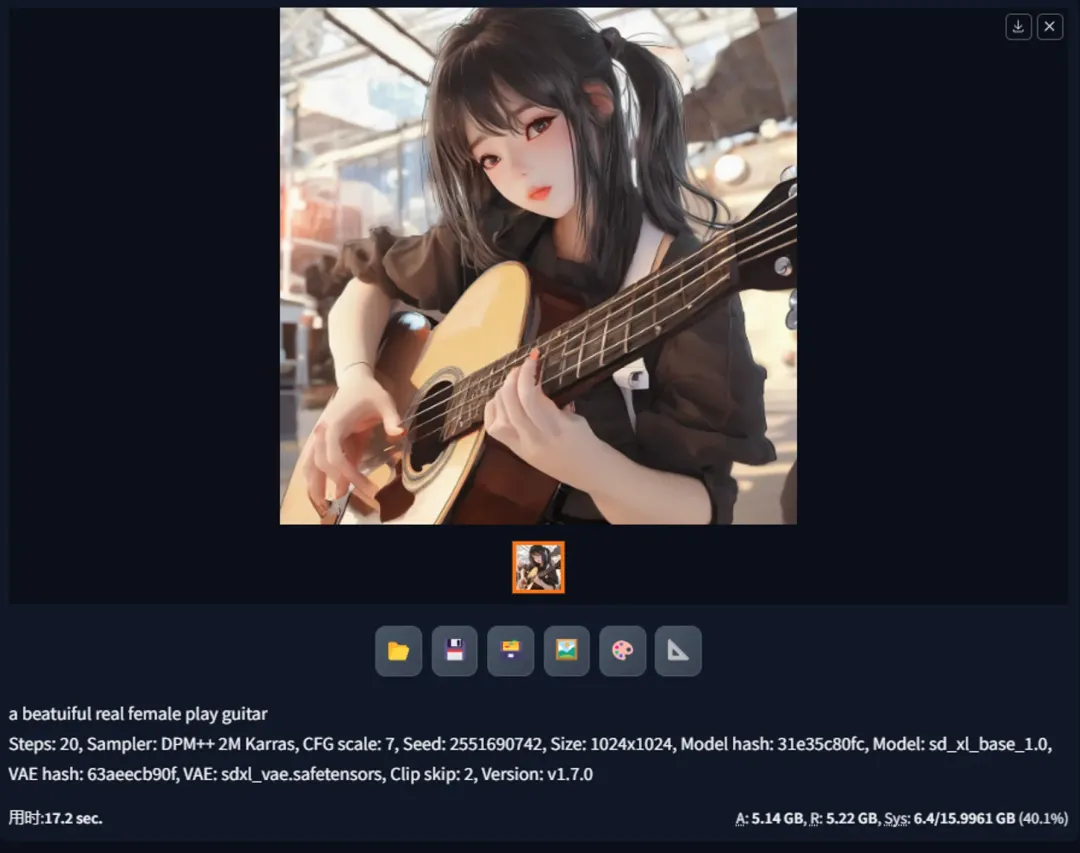

a beatuiful real female play guitar

3.1 No Optimization + No Refiner

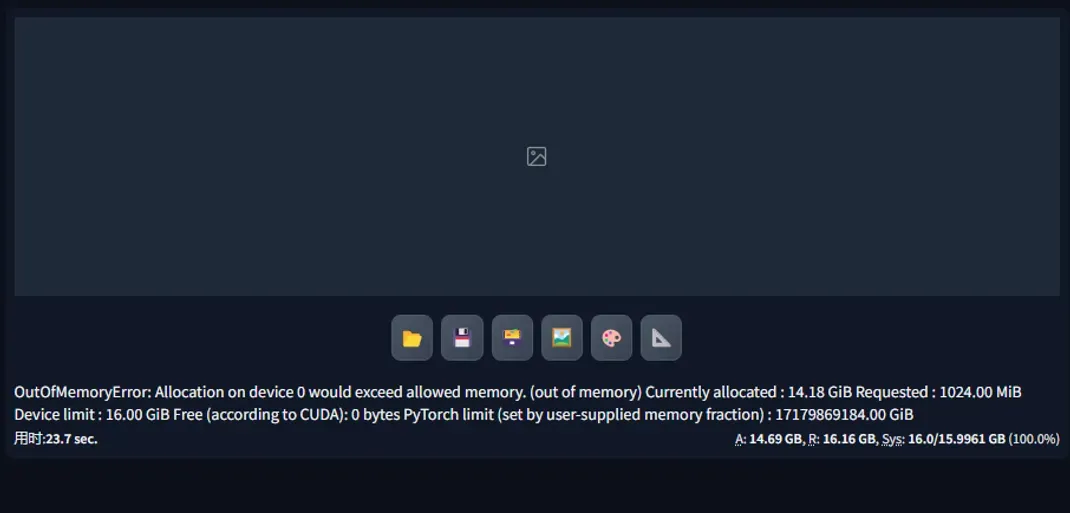

The first test ran without any optimization. The result was a surprise: it actually ran out of video memory.

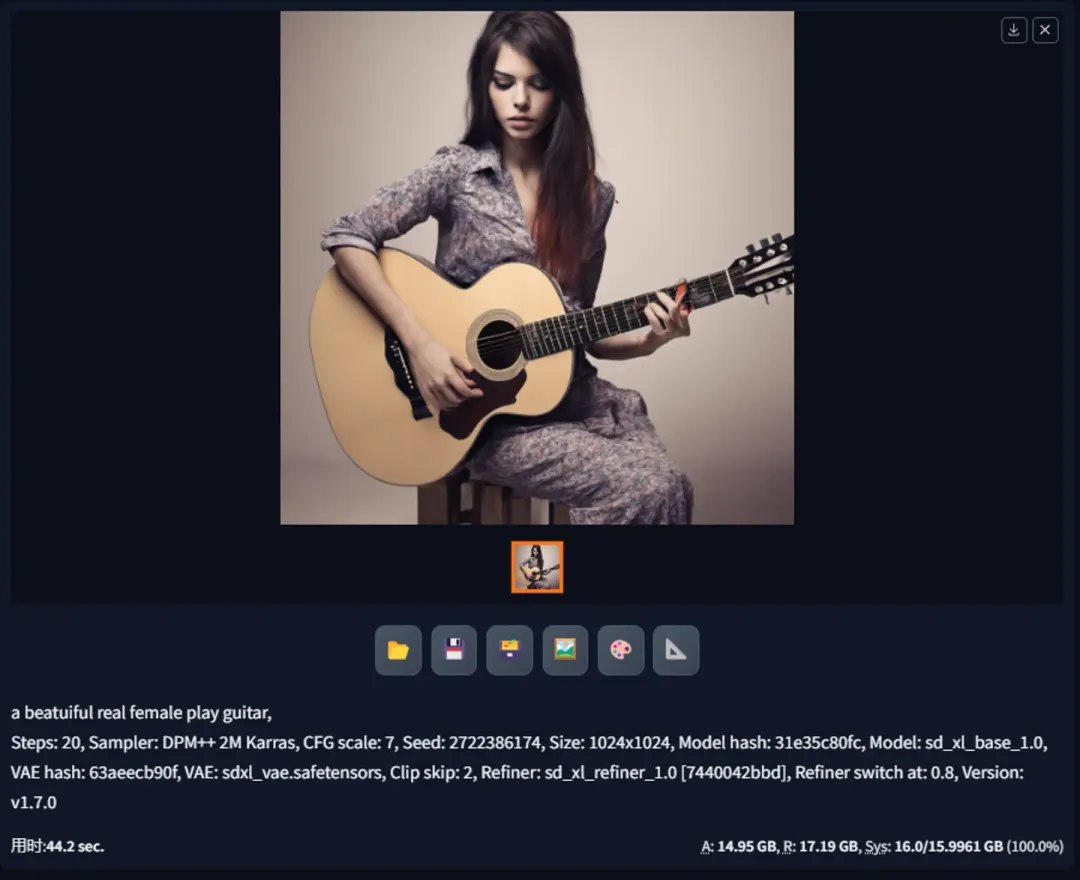

3.2 No Optimization + Use Refiner

The second test adds the Refiner on top of the first. Surprisingly, it didn't run out of video memory, although the usage figures show it came close to the limit. I'm not sure why adding the Refiner avoided the out-of-memory error, so I hope some of you can chime in and explain.

Here is also a brief description of the A, R, and Sys metrics shown at the bottom:

💡

A, Active: the peak amount of video memory used during generation (excluding cached data).

R, Reserved: the total amount of video memory allocated by the Torch library; as I understand it, this is the total video memory held by Torch.

Sys, System: the peak amount of video memory allocated by all running programs, out of the total capacity; as I understand it, this is the percentage of video memory in use.
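To show how the three numbers relate, here is a small sketch that treats one reading as a record. The class and field names are invented for the example, and the values in the usage note are hypothetical, not taken from the tests in this post. It relies on one general fact about PyTorch's caching allocator: active memory is a subset of what Torch has reserved, which in turn is part of what the whole system has allocated.

```python
from dataclasses import dataclass


@dataclass
class MemoryReading:
    active_gb: float     # A: peak memory actively used during generation
    reserved_gb: float   # R: total memory allocated by Torch
    sys_used_gb: float   # Sys: peak memory allocated by all programs
    sys_total_gb: float  # Sys: total capacity of the card

    def cache_overhead_gb(self) -> float:
        """Memory Torch holds beyond what generation actively used."""
        return round(self.reserved_gb - self.active_gb, 2)

    def sys_percent(self) -> float:
        """Share of the card's total memory in use, as a percentage."""
        return round(self.sys_used_gb / self.sys_total_gb * 100, 1)

    def is_consistent(self) -> bool:
        """A should not exceed R, R should not exceed Sys, Sys not the total."""
        return (
            self.active_gb
            <= self.reserved_gb
            <= self.sys_used_gb
            <= self.sys_total_gb
        )
```

For instance, `MemoryReading(9.0, 12.5, 14.0, 16.0)` would describe a run where Torch holds 3.5 GB beyond the active peak and the card is 87.5% full.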

3.3 Enabling Half-Precision Optimization for the VAE Model

After turning this on (it doesn't seem to take effect immediately, so I restarted after each change), I found that it had little effect: video memory usage was about the same as in the second test.

3.4 Enabling Half-Precision Optimization for VAE + UNet Models

With UNet half-precision optimization added, video memory usage dropped (12G would just about be enough), and generation time dropped a lot. Why did the usage fall so much once the UNet was included? An online article [4] says SDXL uses a larger UNet backbone, so my guess is that this is the reason.
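A back-of-the-envelope calculation supports that guess. The sketch below estimates the memory taken by model weights alone at full and half precision. The 2.6 billion parameter count for the SDXL UNet is an approximate figure from the SDXL technical report, not from this post, and the estimate ignores activations, the text encoders, and the VAE.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}

# Approximate UNet size from the SDXL report; treat it as an assumption.
SDXL_UNET_PARAMS = 2.6e9


def weights_gib(num_params: float, precision: str) -> float:
    """Memory needed just to hold the weights, in GiB."""
    return num_params * BYTES_PER_PARAM[precision] / 1024**3


if __name__ == "__main__":
    for precision in ("fp32", "fp16"):
        size = weights_gib(SDXL_UNET_PARAMS, precision)
        print(f"UNet weights at {precision}: {size:.2f} GiB")
```

Halving the bytes per parameter halves the weight footprint, so the larger the UNet, the more gigabytes half precision saves, which would explain why the UNet setting matters far more than the VAE one.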

3.5 Enabling VAE + UNet Model Half-Precision Optimization + Memory Optimization

The first four tests were run without video memory optimization. In the fifth test, with it turned on, the drop in video memory was even more noticeable (about half of the 3.4 figure), though generation took slightly longer.

As you can see, video memory optimization has a clear effect. I tried both options, medium video memory (4GB+) and SDXL-only medium video memory (8GB+), but saw no significant difference between the two.

From these tests we can tentatively conclude that the claim of an 8G minimum for SDXL is roughly accurate, but it most likely assumes that both half-precision optimization and video memory optimization are turned on. If your card has less than 8G, you can also try the shared video memory option in addition to the measures above, which should lower usage a little further.
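For readers who start the WebUI from the command line rather than a launcher, the sketch below turns these findings into a rough rule of thumb. The flags `--medvram-sdxl`, `--medvram`, and `--lowvram` are launch arguments of the AUTOMATIC1111 WebUI, but matching them to the launcher options tested here, and the thresholds themselves, are my assumptions rather than anything measured in this post.

```python
def suggest_args(vram_gb: float) -> list[str]:
    """Hypothetical rule of thumb: pick memory-saving launch arguments.

    The thresholds loosely follow the labels quoted above (4GB+ and 8GB+)
    and the observation that 12G is roughly enough with half precision.
    """
    if vram_gb >= 12:
        return []  # enough headroom with half precision alone
    if vram_gb >= 8:
        return ["--medvram-sdxl"]  # memory optimization for SDXL models only
    if vram_gb >= 4:
        return ["--medvram"]  # memory optimization for every model
    return ["--lowvram"]  # most aggressive setting, slowest generation
```

Treat the output as a starting point and check the reported memory figures after a test generation before settling on a configuration.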

IV. Image generation tests

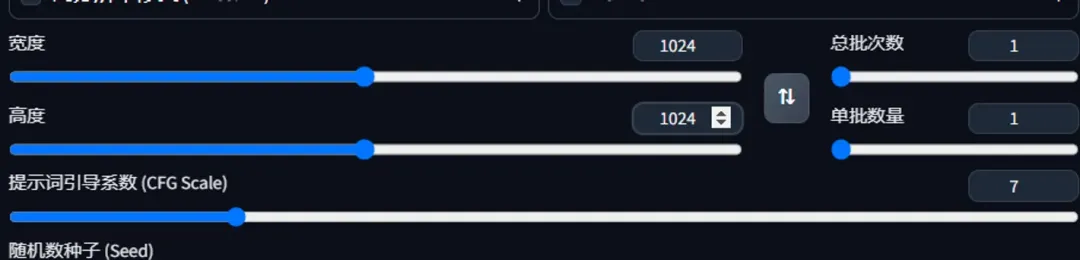

4.1 Setting the Default Image Width and Height

The default width and height in the Autumn Leaf installer is 512x512, which doesn't suit SDXL models. Having to adjust it again every time I refreshed the page, I wondered whether the default could be changed to 1024x1024.

Step 1: Refresh the page and change the size to 1024x1024.

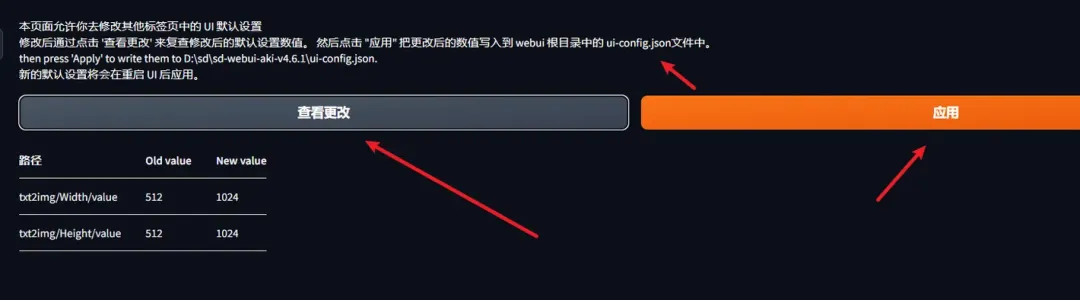

Step 2: Find "Default Settings" in Settings.

Step 3: Click the Apply button. If you want to see what's changed, you can also click the "View Changes" button.

These steps actually change the two ui-config.json parameters listed below. Note that the change only takes effect after you restart the launcher; refreshing the page is not enough.
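The same change can also be made by editing the file directly. The sketch below is one way to do it, assuming ui-config.json is a flat JSON object that contains the keys `txt2img/Width/value` and `txt2img/Height/value`; the function name is made up for this example, and the file's location depends on your installation.

```python
import json
from pathlib import Path

# The two parameters named in this section, set to SDXL's preferred size.
DEFAULTS = {"txt2img/Width/value": 1024, "txt2img/Height/value": 1024}


def set_default_size(config_path: Path) -> dict:
    """Write the new defaults and return {key: (old, new)} for changed keys."""
    config = json.loads(config_path.read_text(encoding="utf-8"))
    changed = {
        key: (config.get(key), value)
        for key, value in DEFAULTS.items()
        if config.get(key) != value
    }
    config.update(DEFAULTS)
    config_path.write_text(json.dumps(config, indent=4), encoding="utf-8")
    return changed
```

Back up the file before running something like `set_default_size(Path("ui-config.json"))`, and restart the launcher afterwards for the new defaults to apply.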

txt2img/Width/value

txt2img/Height/value

4.2 Comparison of prompt comprehension

The prompt I used comes from the SD3 test in my previous article, "Stable diffusion 3: ups and downs, maybe a rainbow at last":

Epic anime artwork of a wizard atop a mountain at night casting a cosmic spell into the dark sky that says "Stable Diffusion 3" made out of colorful energy

As the results show, SDXL's understanding of natural-language prompts clearly beats the previous model.

4.3 Tests of spelling ability

I read online that SDXL is better at spelling, which simply means it can write text on images on request. I used the following prompt, which asks for the word "future" to be written on the helmet, literally, in English:

a cyberpunk girl is wearing a helmet, the helmet with the words "future" written on it, and the helmet is not a helmet.

Overall, the ability has definitely improved, but it is still quite a bit worse than SD3, and these are a few of the better results picked from the images that came out.

4.4 Good prompts to draw on

50+ Best SDXL Prompts For Breathtaking Images[5]

Well, that's the end of this post. I hope it has given you a solid understanding of the SDXL model. If you found it helpful, don't forget to share it!