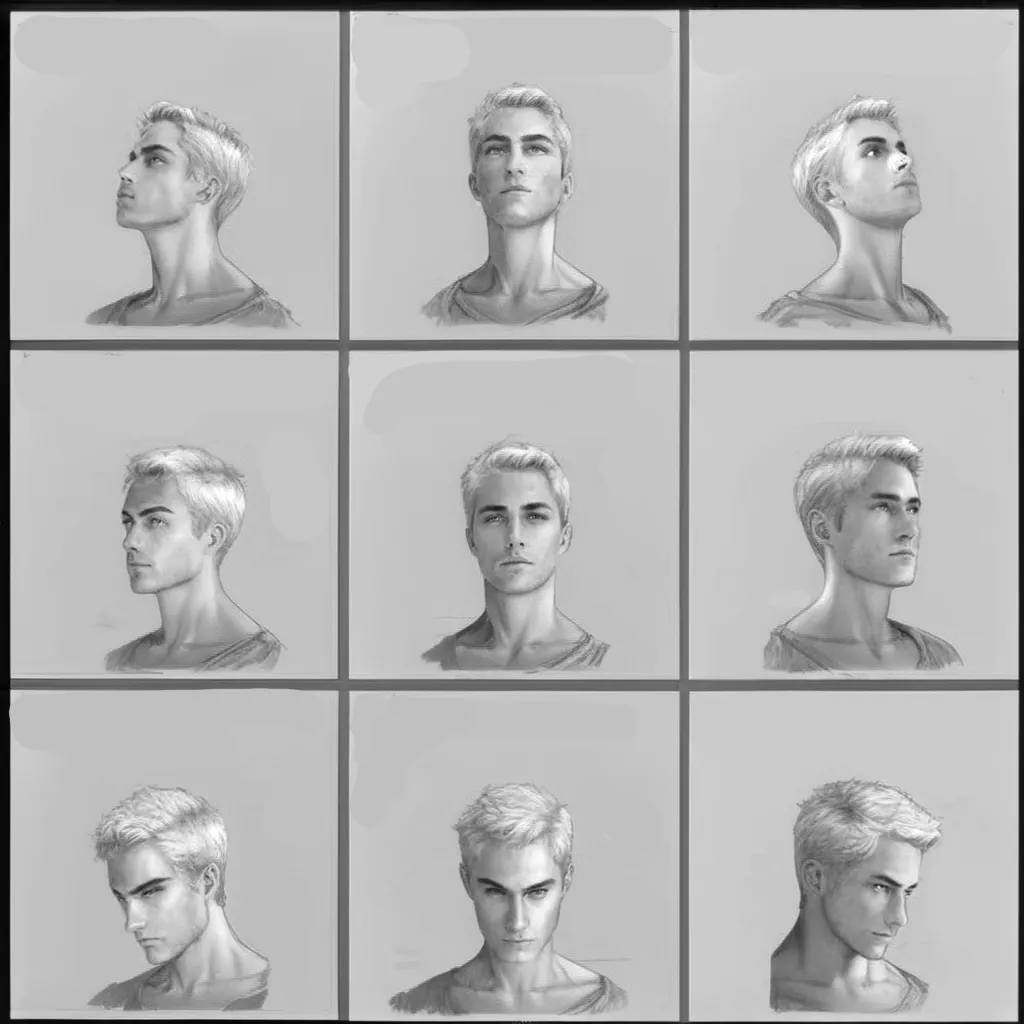

Do you need to render a consistent AI character from several different perspectives? The image above shows the kind of result this workflow produces.

1. Implementation Principle

Create a grid image of the same character from different perspectives, as shown below.

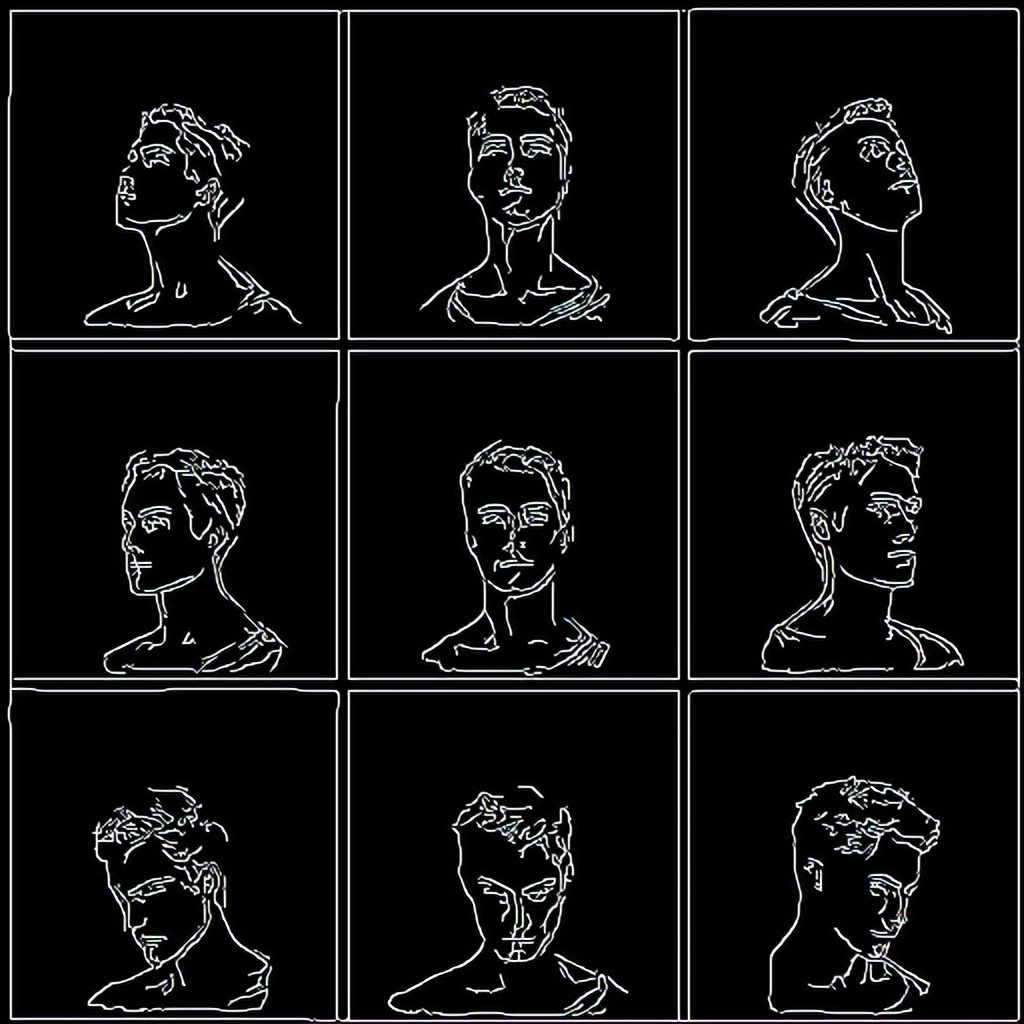

Use ControlNet's Canny SDXL control model to extract the character outlines from this grid.

Then use IP-Adapter FaceID Plus v2 to copy the face from a separate reference image. Because IP-Adapter FaceID copies only the face and extracts facial features precisely from the reference image, it can accurately transfer that face to each of the different perspectives.

2. Production Method

[Step 1]: Choose the checkpoint model

Recommended: ProtoVision XL-High Fidelity-No Refiner, version v6.6.0.

Model download link

LiblibAI: https://www.liblib.art/modelinfo/3a3d10aa7fe644158c08a5a43da358db

[Step 2]: Write the prompts

We will use the first image above as the example.

Positive prompt

Prompt: character sheet, color photo of woman, white background, blonde long hair, beautiful eyes, black shirt

Negative prompt

disfigured, deformed, ugly, text, logo

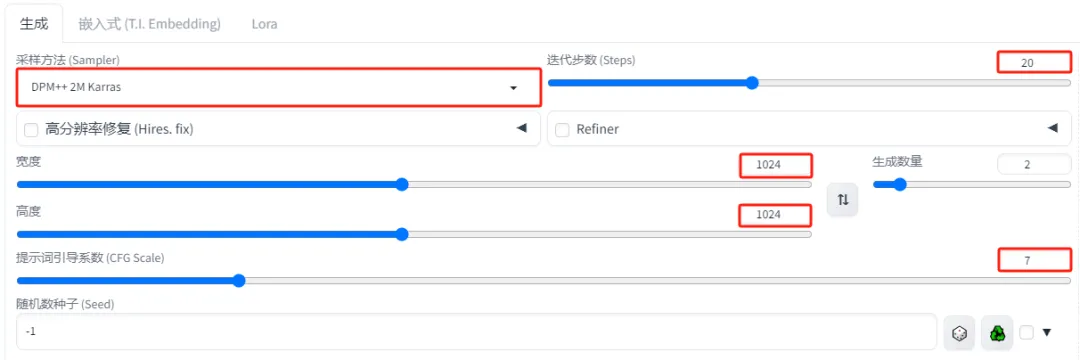

Parameter settings

- Sampler: DPM++ 2M Karras

- Sampling steps: 20

- Image size (width × height): 1024 × 1024

- CFG scale (prompt guidance): 7

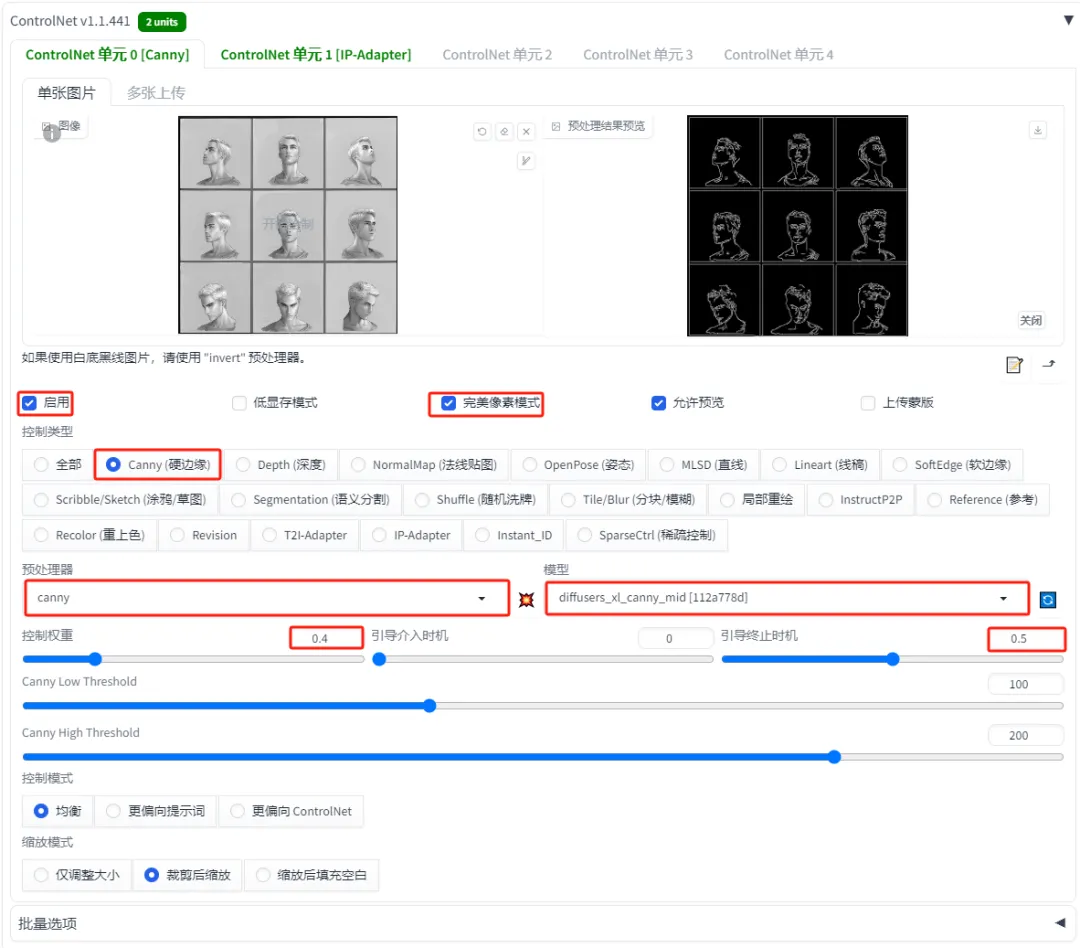
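For readers who prefer to drive the WebUI through its HTTP API instead of the browser, the prompt and sampling settings above can be collected into a single request body. This is a minimal sketch, assuming the AUTOMATIC1111 Stable Diffusion WebUI started with the `--api` flag; `build_txt2img_payload` is a hypothetical helper name, and the request is only built here, not sent.

```python
import json


def build_txt2img_payload():
    """Collect the Step 2 prompts and sampling settings in one request body."""
    return {
        "prompt": (
            "character sheet, color photo of woman, white background, "
            "blonde long hair, beautiful eyes, black shirt"
        ),
        "negative_prompt": "disfigured, deformed, ugly, text, logo",
        # Assumption: the sampler name matches the UI label. Some newer WebUI
        # releases expose the scheduler (Karras) as a separate field.
        "sampler_name": "DPM++ 2M Karras",
        "steps": 20,       # sampling steps
        "width": 1024,
        "height": 1024,
        "cfg_scale": 7,    # prompt guidance
    }


payload = build_txt2img_payload()
print(json.dumps(payload, indent=2))
```

With the API enabled, a body like this would typically be sent as JSON in a POST request to the `/sdapi/v1/txt2img` endpoint of the running WebUI.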

[Step 3]: ControlNet settings

We need to configure two ControlNet units: Unit 0 takes the character grid image, and Unit 1 takes the face reference image.

ControlNet Unit 0: Canny control model configuration

The relevant parameter settings are as follows:

- Control Type: Select "Canny (Hard Edge)"

- Preprocessor: canny

- Model: diffusers_xl_canny_mid

- Control weight: 0.4

- Starting control step: 0

- Ending control step: 0.5

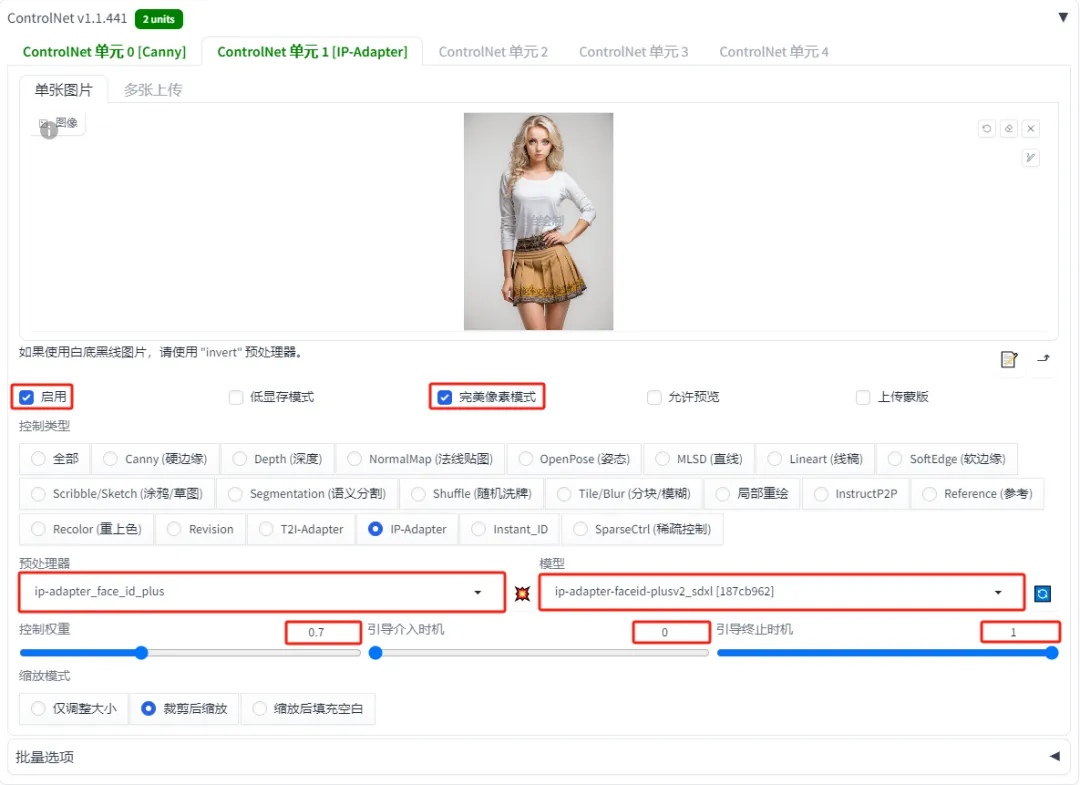

ControlNet Unit 1: IP-Adapter control model configuration

The relevant parameter settings are as follows:

- Control Type: Select "IP-Adapter"

- Preprocessor: ip-adapter_face_id_plus

- Model: ip-adapter-faceid-plusv2_sdxl

- Control weight: 0.7

- Starting control step: 0

- Ending control step: 1

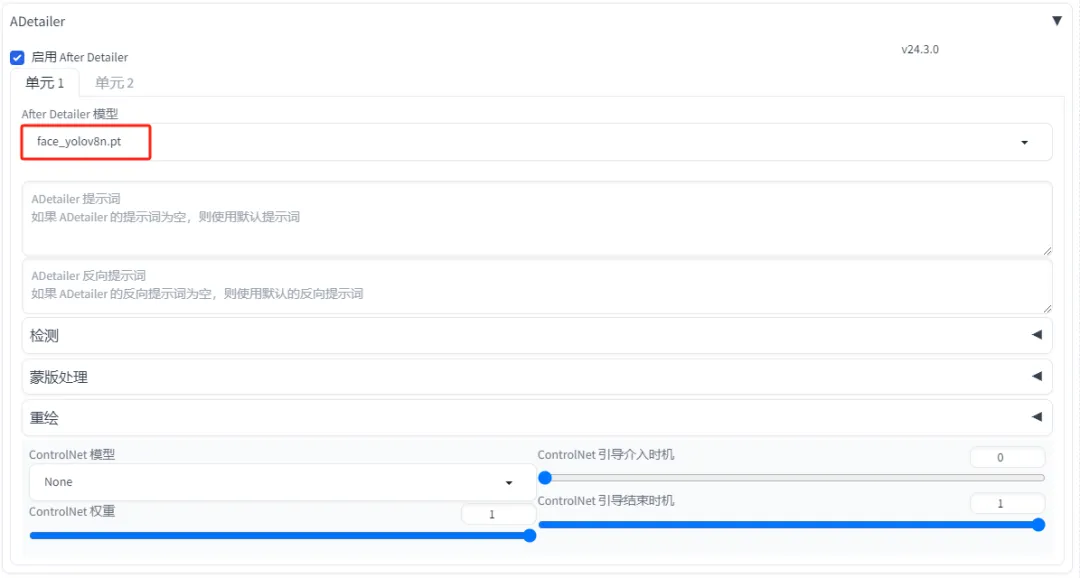
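Writing the two units down as plain data makes the differences between them easier to compare. The sketch below assumes the field names commonly used by the ControlNet extension's API for the AUTOMATIC1111 WebUI (`module`, `model`, `weight`, `guidance_start`, `guidance_end`, `image`); these may differ between extension versions, and the exact model strings depend on the files installed locally. `controlnet_unit` is a hypothetical helper, and the image values are placeholders for base64-encoded images.

```python
def controlnet_unit(module, model, weight, start, end, image_b64):
    """Build one ControlNet unit; key names are assumed, not verified here."""
    if not 0.0 <= start <= end <= 1.0:
        raise ValueError("control steps must satisfy 0 <= start <= end <= 1")
    return {
        "enabled": True,
        "module": module,         # preprocessor
        "model": model,           # control model
        "weight": weight,         # control weight
        "guidance_start": start,  # starting control step
        "guidance_end": end,      # ending control step
        "image": image_b64,       # placeholder for a base64-encoded image
    }


GRID_B64 = "<base64 of the character grid image>"    # placeholder
FACE_B64 = "<base64 of the face reference image>"    # placeholder

units = [
    # Unit 0: Canny outline, low weight, released halfway through sampling
    controlnet_unit("canny", "diffusers_xl_canny_mid", 0.4, 0.0, 0.5, GRID_B64),
    # Unit 1: FaceID, higher weight, active for the whole run
    controlnet_unit(
        "ip-adapter_face_id_plus", "ip-adapter-faceid-plusv2_sdxl",
        0.7, 0.0, 1.0, FACE_B64,
    ),
]

# Assumed location of the units inside a txt2img request body
alwayson_scripts = {"controlnet": {"args": units}}

for unit in units:
    print(unit["module"], unit["weight"], unit["guidance_start"], unit["guidance_end"])
```

Note the asymmetry between the two units: the Canny unit uses a low weight and stops at 0.5, so the outline appears to guide only the early layout, while the FaceID unit stays active through the last step.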

[Step 4]: Use ADetailer to automatically repair the face

Because the grid contains many faces, enable ADetailer to repair them. Model: face_yolov8n.pt
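When the WebUI is scripted rather than clicked through, the ADetailer setting can be expressed as an extra entry in the request's `alwayson_scripts` section. This is a rough sketch: the `ADetailer` script key and the `ad_model` field are assumptions based on the ADetailer extension's API and may vary between versions, and `adetailer_script` is a hypothetical helper name.

```python
def adetailer_script(model="face_yolov8n.pt"):
    """Return an alwayson_scripts entry that enables ADetailer with one model.

    Assumption: the extension accepts a list of per-model settings dicts.
    """
    return {"ADetailer": {"args": [{"ad_model": model}]}}


# Merge with any other script entries (left empty in this sketch)
scripts = {}
scripts.update(adetailer_script())
print(scripts)
```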

[Step 5]: Generate the image

Click the [Generate] button to produce the final image.

3. Related Notes

(1) The ControlNet Canny and IP-Adapter models used here are both SDXL-based, so the checkpoint you select must also be SDXL-based.

(2) Since the grid mainly shows portraits, the reference picture you upload should preferably be a portrait as well. Full-body photos may not produce ideal results.

(3) Free customization: to create a different character, you only need to swap the reference picture in the IP-Adapter unit. You can also modify the positive prompt to suit your needs.

(4) If the generated face does not match the reference picture:

- Increase the control weight of the IP-Adapter unit

- Reduce the control weight and the ending control step (the point at which guidance stops) of the Canny ControlNet unit
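The adjustments in note (4) can be applied one small step at a time. The sketch below is only an illustration: `nudge_for_face_match` is a hypothetical helper, the step size of 0.1 is arbitrary, and the clamping ranges (0 to 2 for weights, 0 to 1 for the ending step) are assumptions about the UI sliders.

```python
def clamp(value, low, high):
    """Keep a value inside [low, high] and round away float noise."""
    return round(min(high, max(low, value)), 2)


def nudge_for_face_match(ip_weight, canny_weight, canny_end, step=0.1):
    """One round of note (4): strengthen IP-Adapter, weaken Canny."""
    return {
        "ip_adapter_weight": clamp(ip_weight + step, 0.0, 2.0),
        "canny_weight": clamp(canny_weight - step, 0.0, 2.0),
        "canny_end": clamp(canny_end - step, 0.0, 1.0),
    }


# Starting from the values used in Step 3
adjusted = nudge_for_face_match(0.7, 0.4, 0.5)
print(adjusted)
# {'ip_adapter_weight': 0.8, 'canny_weight': 0.3, 'canny_end': 0.4}
```

Regenerate after each round and stop as soon as the face matches, since weakening Canny too far may loosen the grid layout.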

That's all for today. I hope you find it helpful.