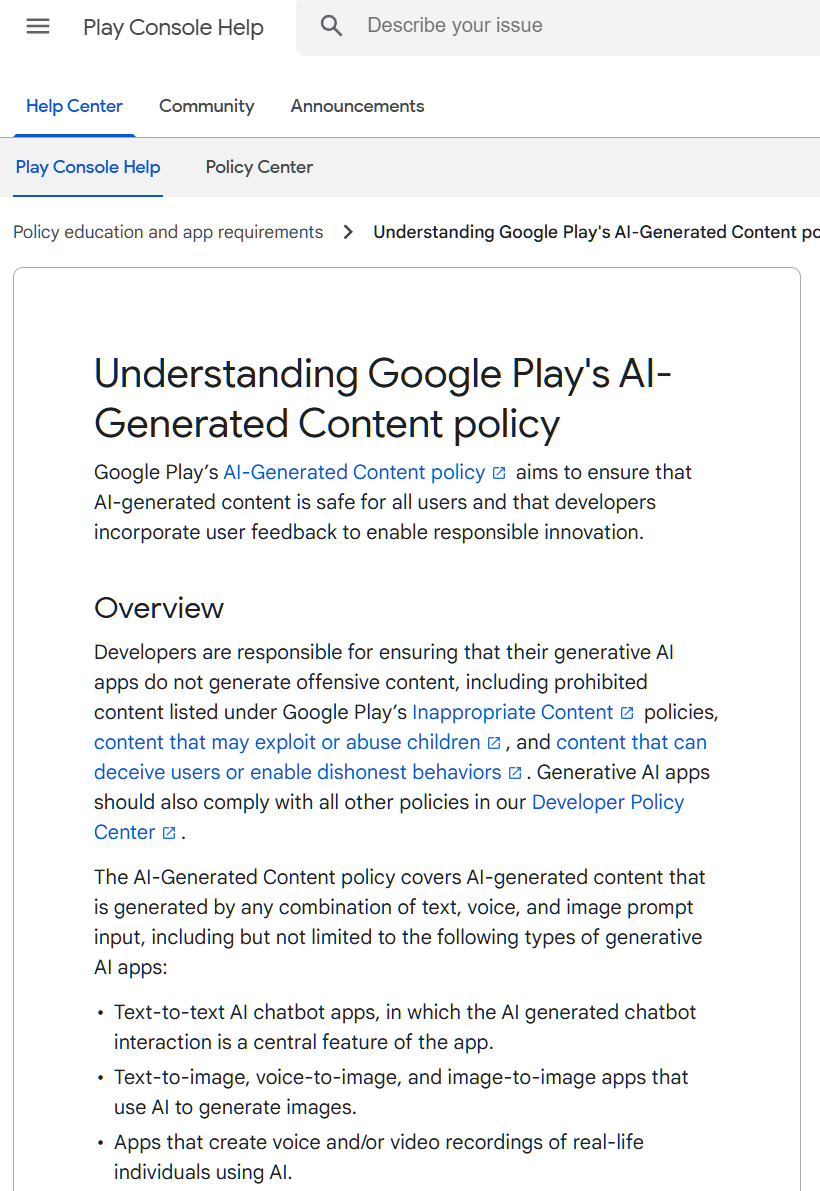

Google has updated its guidelines for AI-related apps with the goal of reducing "inappropriate" and "prohibited" content.

Google states in its new policy that apps offering generative AI capabilities must prevent the generation of restricted content, including pornography and violence, and that developers must conduct "rigorous testing" of their AI models.

These rules apply to a variety of applications and are briefly summarized below:

- Apps that generate content with generative AI from any combination of text, voice, and image prompts.
- Chatbots, image generation apps (text-to-image, voice-to-image, image-to-image), and voice and video generation apps.
- The rules do not apply to apps that merely host AI-generated content, or to apps that use AI as a productivity tool.

Google Play makes clear that prohibited AI-generated content includes, but is not limited to, the following:

- AI-generated non-consensual deepfake material.
- Audio or video recordings of real people that facilitate fraud.
- Content that encourages harmful behavior (e.g. dangerous activities, self-harm).
- Content generated to facilitate bullying and harassment.
- Content primarily intended to be sexually gratifying.
- AI-generated "official" documents that enable dishonest behavior.
- Malicious code.

Google will also add new app listing features in the future, aiming to make the process of submitting generative AI apps to the store more open, transparent, and streamlined.