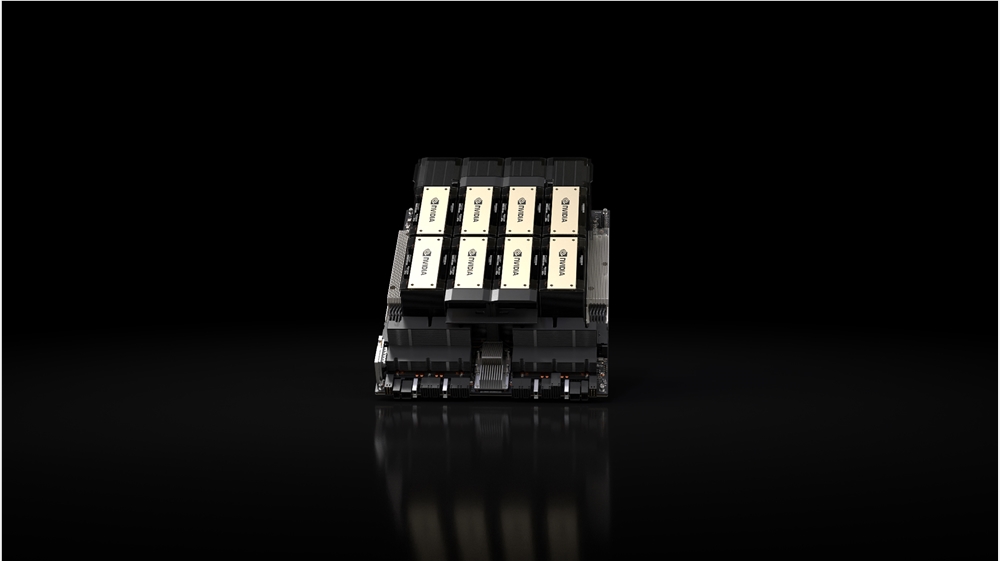

NVIDIA on Monday announced the HGX H200, a new top-end chip for AI work. Based on its popular predecessor, the H100, the GPU offers 1.4x the memory bandwidth and 1.8x the memory capacity, significantly improving its ability to handle intensive generative AI workloads.

However, questions remain about how available the new chips will be, especially given the supply constraints on the H100, and NVIDIA has not given a definitive answer. NVIDIA says the first H200 chips will be released in the second quarter of 2024 and that it is working with "global system manufacturers and cloud service providers" to make the chips available. NVIDIA spokeswoman Kristin Uchiyama declined to comment on production numbers.

Apart from its memory, the H200 is largely identical to the H100. But the memory improvements make it a meaningful upgrade. The new GPU is the first to use the new, faster HBM3e memory specification, which raises its memory bandwidth to 4.8TB per second, a significant increase from the H100's 3.35TB per second. Total memory capacity also grows from 80GB to 141GB.
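
As a quick sanity check on the headline ratios, the short Python sketch below divides the H200 memory figures quoted in this article by the H100 figures. It assumes only those published numbers (4.8 vs. 3.35TB per second, 141GB vs. 80GB); the dictionary keys and the `gain` helper are hypothetical names for illustration, not any NVIDIA tool or API.

```python
# Memory specs as quoted in this article (hypothetical names, not an NVIDIA API).
H100 = {"bandwidth_tb_per_s": 3.35, "capacity_gb": 80}
H200 = {"bandwidth_tb_per_s": 4.8, "capacity_gb": 141}


def gain(spec: str) -> float:
    """Return how many times larger the H200 figure is than the H100 figure."""
    return H200[spec] / H100[spec]


bandwidth_gain = gain("bandwidth_tb_per_s")  # 4.8 / 3.35, about 1.43
capacity_gain = gain("capacity_gb")          # 141 / 80 = 1.7625

# Rounded to one decimal place, matching how the ratios are usually quoted.
print(f"bandwidth: {bandwidth_gain:.1f}x, capacity: {capacity_gain:.1f}x")
# prints: bandwidth: 1.4x, capacity: 1.8x
```

Rounded to one decimal place, the raw ratios (about 1.43 and 1.76) line up with the 1.4x bandwidth and 1.8x capacity figures NVIDIA cited.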

"The integration of faster and higher-capacity HBM memory is designed to accelerate the performance of compute-intensive tasks, including generative AI models and high-performance computing applications, while optimizing GPU utilization and efficiency," said Ian Buck, vice president of high-performance computing products at NVIDIA, in a video presentation today.

The H200 is also designed to be compatible with systems that already support the H100. NVIDIA says cloud service providers won't need to make any changes to add the H200. The cloud divisions of Amazon, Google, Microsoft and Oracle will be among the first to offer the new GPUs next year.

The new chips will likely be expensive. NVIDIA hasn't announced a price, but CNBC reports that the previous-generation H100s are estimated to sell for between $25,000 and $40,000 each, with thousands of them needed to operate at the highest levels. Uchiyama said pricing is set by NVIDIA's partners.

With AI companies still desperately searching for H100 chips, NVIDIA's announcement is significant. NVIDIA's chips are seen as the best option for efficiently processing the huge amounts of data needed for generative image tools and large language models. The chips are valuable enough that companies have used them as collateral for loans. Who has H100s has become a hot topic in Silicon Valley, and startups have even collaborated to share any availability of the chips.

Uchiyama says the H200's launch won't affect H100 production. "You're going to see us increase overall supply this year, and we're in the process of long-term supply purchases," Uchiyama wrote in an email to The Verge.

Next year may be an even better time for GPU buyers. The Financial Times reported in August that NVIDIA plans to triple H100 production in 2024, with the goal of producing up to 2 million units next year, compared with about 500,000 in 2023. But with demand for generative AI as strong now as it was at the start of the year, demand is only likely to grow, and that's before counting the newer, hotter chips NVIDIA is rolling out.