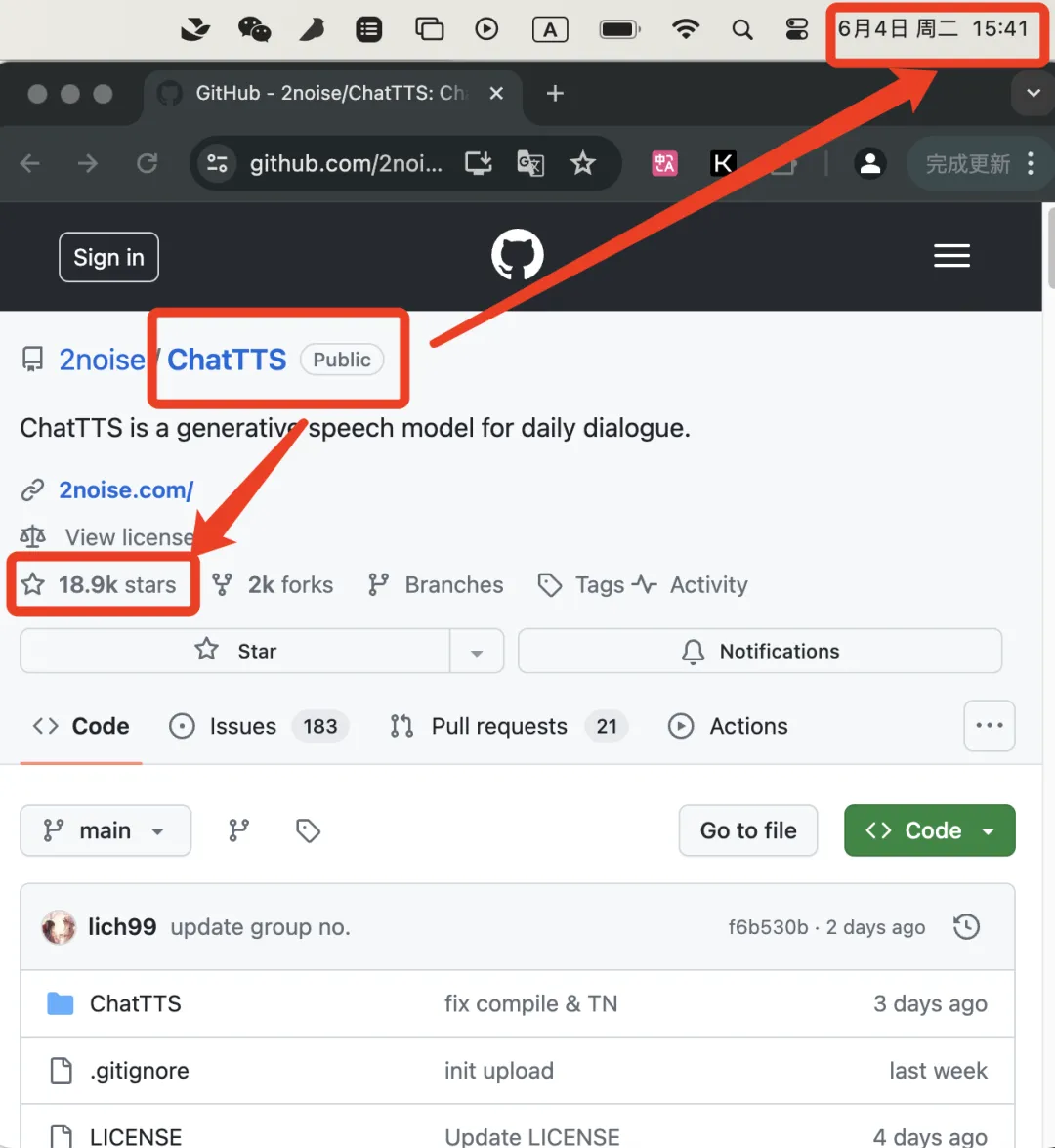

Recently, the speech generation project ChatTTS quickly gained attention on GitHub. By June 4, just 6 days in, it had reached 18.9 thousand stars 🌟. Netizens everywhere are calling it amazing! At this rate, it will soon break through 20,000 stars.

Website: https://github.com/2noise/ChatTTS

ChatTTS is a text-to-speech (TTS) model designed specifically for conversational scenarios. It supports multiple languages, including English and Chinese. The largest model is trained on 100,000 hours of Chinese and English data. The open-source version is the one trained on 40,000 hours, without SFT. This volume of training data underpins the quality and naturalness of the synthesized voice.

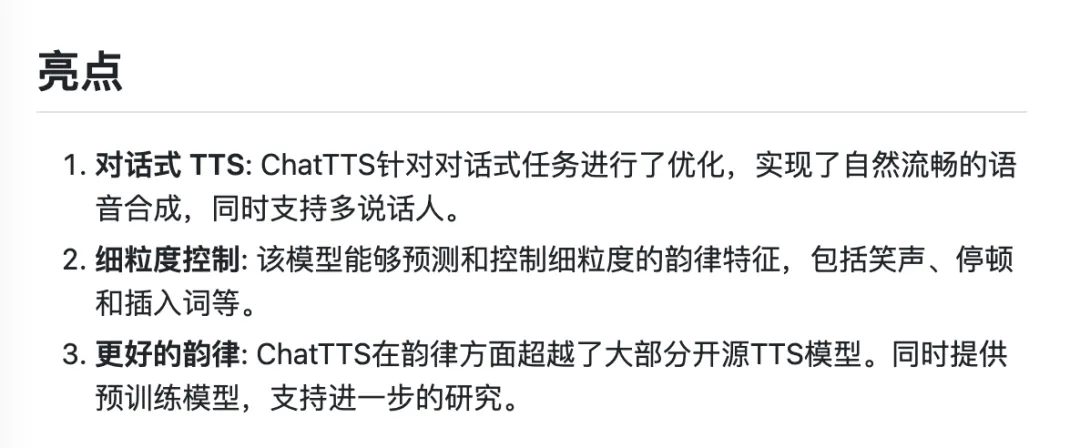

According to the official introduction, ChatTTS has 3 highlights:

Having listened to the official audio self-introduction on GitHub, I found the voice very realistic and natural, with fluent delivery, pauses, and laughter.

Then let's try the official prompt words to see how it works:

The code is as follows:

    import torch
    import torchaudio

    # Assumes `chat` is a ChatTTS.Chat instance whose models are already loaded.
    inputs_cn = """chat TTS is a powerful conversational text-to-speech model. It has mixed Chinese and English reading and multi-speaker capabilities. chat TTS can not only generate natural and fluent speech, but also control paralinguistic phenomena such as [laugh] laughter [laugh], pauses [uv_break] and modal particles [uv_break]. This prosody surpasses many open source models [uv_break]. Please note that the use of chat TTS should comply with legal and ethical standards to avoid security risks of abuse. [uv_break]""".replace('\n', '')

    # Sentence-level control tags applied during text refinement.
    params_refine_text = {'prompt': '[oral_2][laugh_0][break_4]'}

    audio_array_cn = chat.infer(inputs_cn, params_refine_text=params_refine_text)
    # audio_array_en = chat.infer(inputs_en, params_refine_text=params_refine_text)

    # Save the first generated waveform as a 24 kHz WAV file.
    torchaudio.save("output3.wav", torch.from_numpy(audio_array_cn[0]), 24000)

Beyond the official self-introduction above, the sample everyone knows best is surely the one circulating everywhere these days: the Sichuan food reading. I have to say the generated result is really natural and smooth!

Foreword:

This article has three main parts. If you are interested in a particular section, feel free to jump straight to it 📖.

- ChatTTS in-depth review (ChatTTS vs. the "seven emotions and six desires"; audio excerpts are included in the article, except for clips that are too harsh on the ears)

- How to use ChatTTS

- Other open source TTS project recommendations

ChatTTS vs. the Human "Seven Emotions and Six Desires"

Everyone has emotions. ChatTTS is said to generate very realistic and natural voices, so let's challenge it with the "seven emotions and six desires" and see how capable it really is! We use ChatTTS text control tags to enrich the emotional expression of the voice. The details follow:

Desire for Gain:

Every time I see my investment numbers double, I feel excited like I have discovered a new world [break_1], which makes me unable to stop.

The whole sentence flows naturally, and the emotion of the word "excited" is relatively prominent.

Desire for Food:

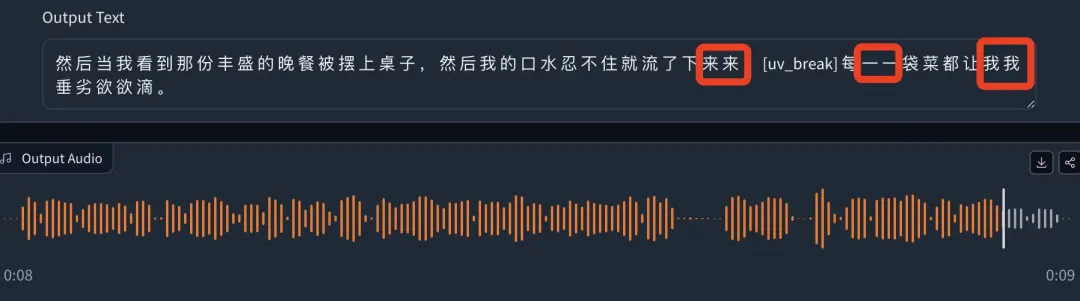

When I saw the sumptuous dinner being placed on the table, I couldn't help but drool[break_1][oral_3], and every dish made me salivate.

The text output contains some repeated words and the ending is unclear in the audio, but overall the emotion is full and the delivery is fairly smooth.

Desire for Sleep:

After a busy day, I just want to fall into the soft bed[lbreak] and indulge in a sweet dream[oral_4].

The overall flow is natural and the emotions are on point.

Desire for Wealth:

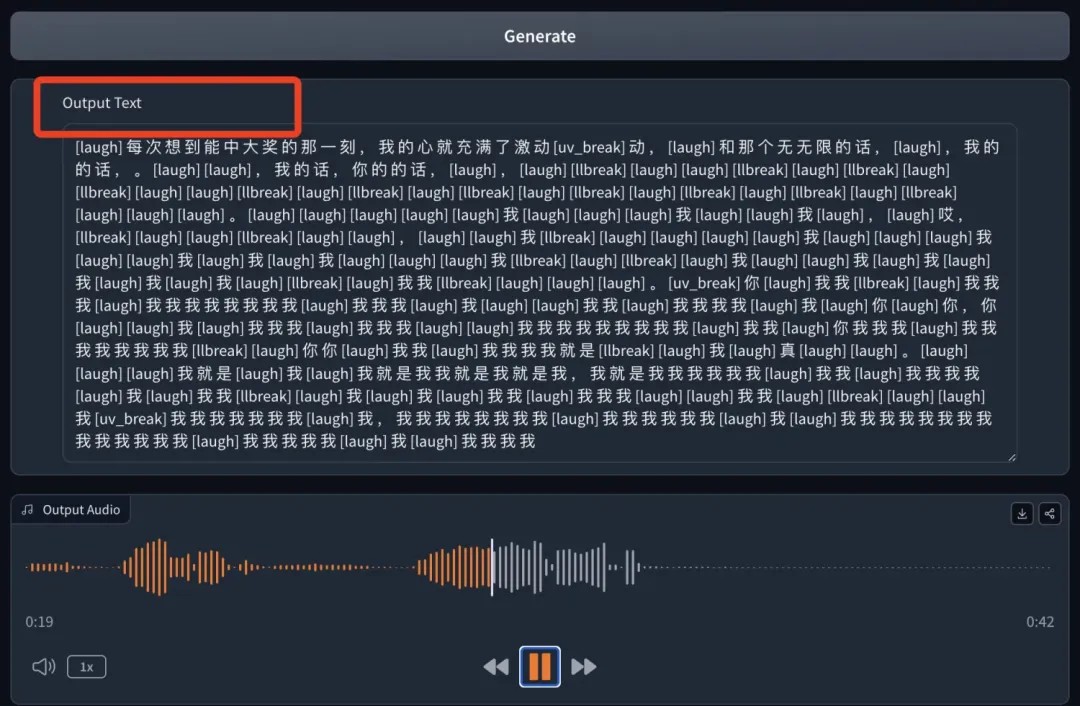

Every time I think about the moment of winning the jackpot, my heart is filled with excitement[laugh_2] and endless fantasies[break_2].

Here the output starts to get garbled.

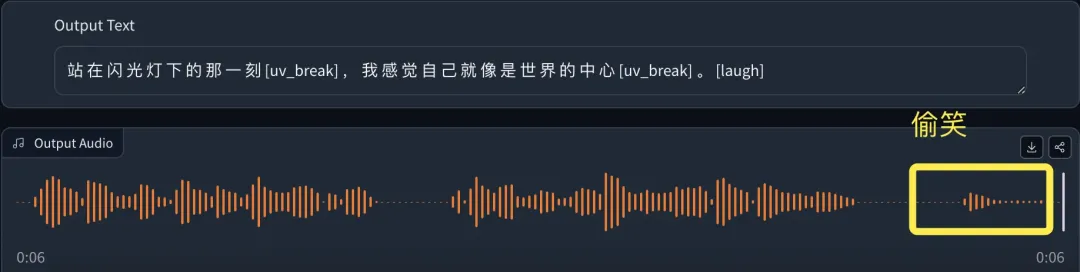

Desire for Fame:

The moment I stood under the flash lights, I felt like I was the center of the world [laugh_1], with all eyes on me.

The whole sentence is very smooth, and listening to it I feel as if I am standing under the flash lights myself. The most interesting part is a stifled snicker at the end, which sounds very sneaky.

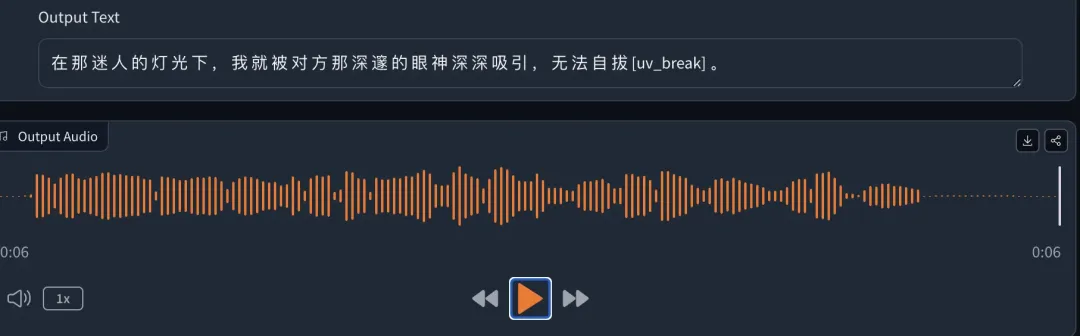

Desire for Sex:

Under that charming light, I was deeply attracted by the other person's deep eyes[break_3] and couldn't extricate myself.

A master of emotionless reading: no feeling at all, but extremely natural and fluent.

The Seven Emotions

To better express the complex emotional range of the "seven emotions and six desires", we can also finely control the emotional expression of the speech by embedding control tags in the text, as follows:

Joy:

- Original text: I finally got the long-awaited promotion [laugh_1], I feel like I’m on top of the world [break_2], and all my hard work has paid off.

- Text output: Garbled repetition from the start; it often has to be regenerated several times.

- Audio output: Almost no complete words or sentences are generated; it is basically random shouting.

Across both attempts, the problem already appears at the text output stage.

Anger:

- Original text: I was so furious when I saw that unfair report [lbreak], how could the facts be distorted like this [oral_5]?

- Text output: All text is output correctly.

- Audio output: The first half of the sentence is very calm, with a pause in the middle, but the second half does not sound angry at all and even carries a slight "smile", which does not fit.

This sentence is read in full, pauses included, but the voice handles emotional shifts immaturely, and the change in emotion is almost inaudible. (🐰 Blind guess: only laughter and pauses come through clearly.)

Sorrow:

- Original text: At the farewell ceremony, I tried to suppress my sadness [break_4][oral_2], but tears still flowed out.

- Text output: Tried twice; both times the text for "but tears still flowed out" was missing.

- Audio output: The emotion is very calm, but an interjection, "嗯" ("hmm"), is added.

For this third sentence, the text output already starts dropping original content, and in the audio the second half, "but tears still flowed out", is lost entirely, with no speech generated for it. The emotion is very calm, though you can hear a hint of dialect.

Happiness:

- Original text: At a friend's wedding, we laughed together [laugh_2], and the happiness we felt at that moment [break_1] was incomparable.

- Text output: After about half of the text is output, it becomes garbled; the rest is basically stuttering.

- Audio output: It sounds quite happy, but it stutters almost the whole way through: "I I I..." and so on.

The result is very similar to the first one (Joy): hardly any complete words come out, yet the emotion is full, with laughter hidden in the awkwardness 😂. As for how funny it is, you really have to hear it for yourself.

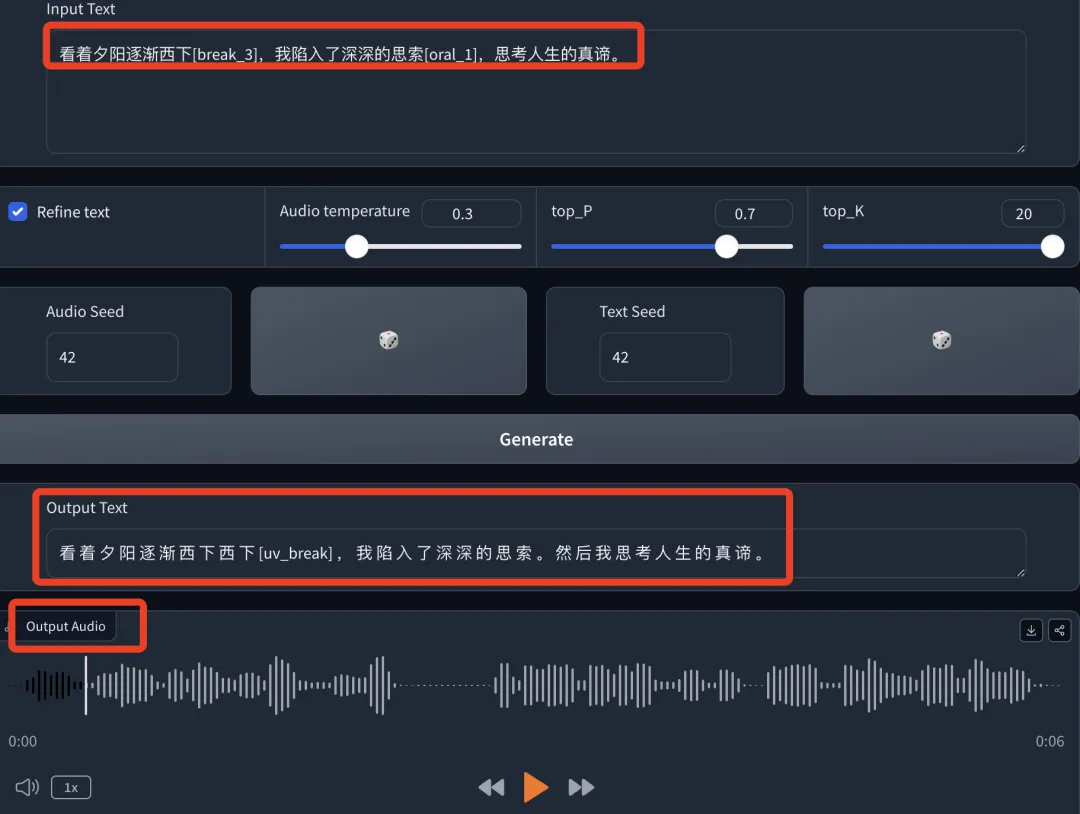

Thoughtfulness:

- Original text: Watching the sunset gradually set[break_3], I fell into deep thought[oral_1], pondering the true meaning of life.

- Text output: The first half of the text output has an extra “西下” ("setting in the west") added, which makes the content repeat.

- Audio output: The audio also contains the doubled “西下”, which sounds odd, but emotionally it has a distinctly thoughtful feel.

Besides occasionally dropping sentences, the text output also produces duplicated words, which directly affects the final generated speech. It is a bit like drawing cards: it takes several tries to get a satisfying result.

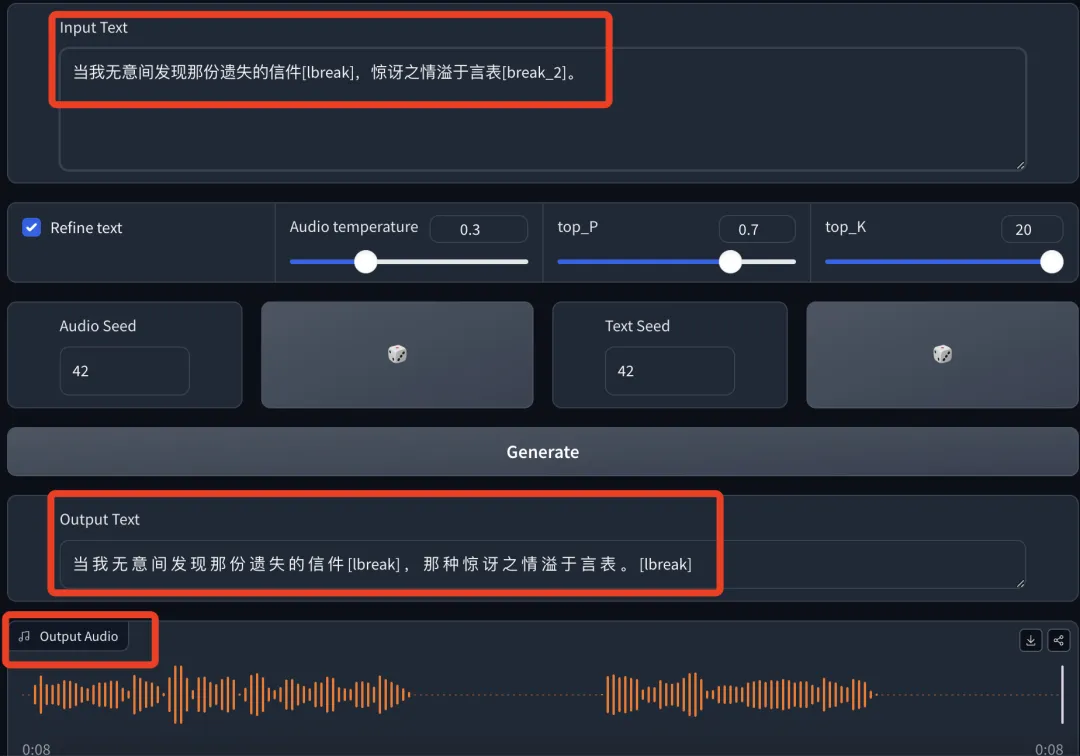

Surprise:

- Original text: When I accidentally found the lost letter[lbreak], my surprise was beyond words[break_2].

- Text output: The text is output in full.

- Audio output: The audio is complete, with pauses in the right places. There is some feeling when it reads "accidentally", but the surprise in the second half of the sentence does not come through.

This time both the text and the audio were output in full. There was some emotion, but the fluctuation was not obvious and no surprise could be heard; it sounded like a calm reading.

Fear:

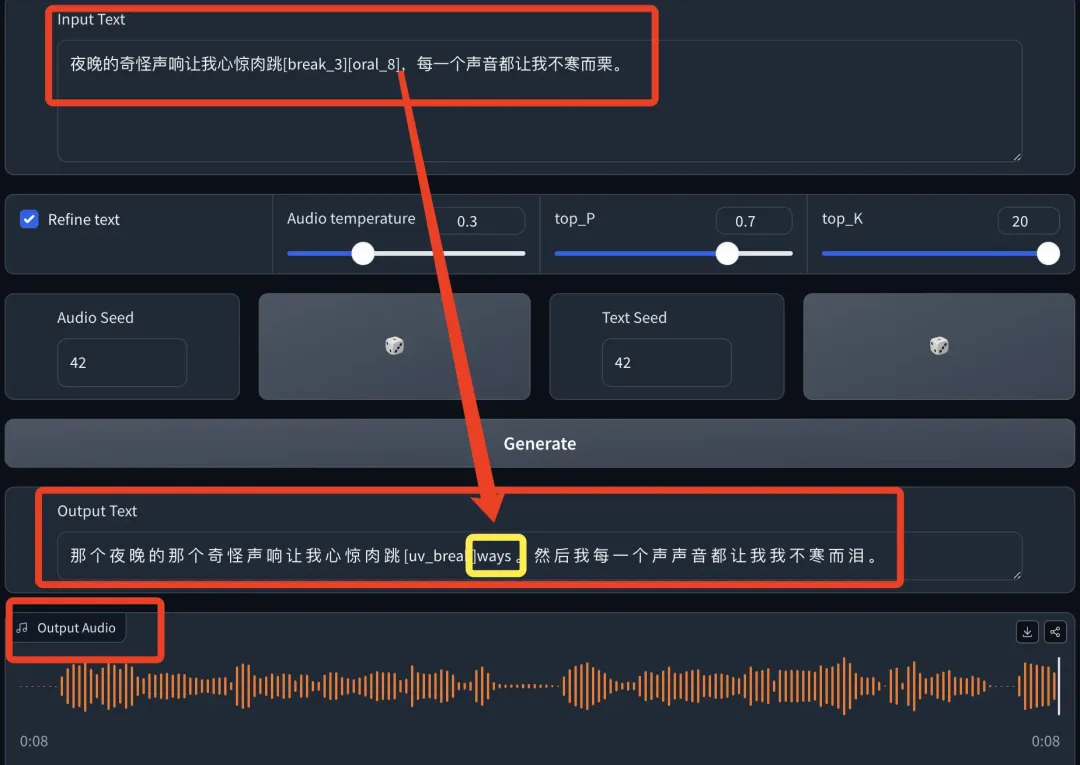

- Original text: The strange noises at night make me shudder[break_3][oral_8], and every sound makes me shudder.

- Text output: The text output has one extra word, "way"; the rest is complete.

- Audio output: The reading is very natural and the emotion is fairly full; when it reads the word "shudder", it even takes a breath.

This time ChatTTS shows its strengths in full: smooth, natural, and emotionally appropriate. There is even a second human voice saying "hmm" at the end, which suggests there are still plenty of hidden features waiting to be discovered.

We can see that ChatTTS output is not stable: sometimes it is complete, sometimes parts are missing. So once again, the same advice (important things are said three times ‼️):

Draw more cards and try more!

Draw more cards and try more!

Draw more cards and try more!
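The "draw more cards" advice can be automated with a simple retry loop. The sketch below is only an illustration under stated assumptions: `generate` is a hypothetical callable that returns a `(text_output, audio)` pair for one attempt, the tag pattern is a rough guess that covers the tags used in this article, and the 0.9 threshold is an arbitrary choice. It compares the tag-free original text with the text output and keeps the closest attempt.

```python
import difflib
import re

# Rough pattern for control tags such as [break_2], [oral_4], [uv_break];
# an assumption based on the tags shown in this article.
TAG_PATTERN = re.compile(r"\[[a-z_]+\d?\]")


def similarity(source_text, output_text):
    """Ratio in [0, 1] between the tag-free source and the text output."""
    clean = " ".join(TAG_PATTERN.sub("", source_text).split())
    return difflib.SequenceMatcher(None, clean, output_text).ratio()


def best_of(generate, source_text, attempts=3, threshold=0.9):
    """Call generate() up to `attempts` times and keep the closest result."""
    best_score, best_result = -1.0, None
    for _ in range(attempts):
        output_text, audio = generate(source_text)
        score = similarity(source_text, output_text)
        if score > best_score:
            best_score, best_result = score, (output_text, audio)
        if score >= threshold:
            break  # close enough to the original, stop drawing
    return best_score, best_result


# Demo with a fake generator: the first draw loses the second half of the
# sentence, the second draw is complete.
source = "I tried to suppress my sadness [break_4][oral_2], but tears still flowed out."
fake_outputs = iter([
    "I tried to suppress my sadness,",
    "I tried to suppress my sadness, but tears still flowed out.",
])


def fake_generate(text):
    return next(fake_outputs), b""  # (text output, placeholder audio)


score, (text_out, _) = best_of(fake_generate, source)
print(round(score, 2), text_out)
```

A text-similarity check like this only catches missing or duplicated words in the text output; judging whether the emotion sounds right still requires listening.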

In general, with control tags for pauses, laughter, and oral characteristics, ChatTTS can convey complex emotional states more accurately and make voice content more expressive and interactive. That said, it still has some way to go.

Summary

After this review, it is clear that ChatTTS is popular on GitHub for good reasons. For example:

- Multi-language support: Whether you speak Chinese or English, this thing can handle it.

- Voice has emotions: It can add laughter or change the tone of voice when speaking to make the conversation more natural.

- Ease of use: Its setup process is simple and straightforward, and it can be smoothly integrated into various programs.

But there are also some problems:

- Sometimes the output gets stuck or becomes intermittent, which hurts the experience.

- The sound quality varies, and it sometimes takes several tries to get a result that sounds good.

Some usage scenarios I can think of (there are certainly more):

- Robots and virtual assistants: realistic voice output is particularly well suited to improving the user's interactive experience.

- Multimedia content production: for example, audiobooks or storytelling.

How to use ChatTTS

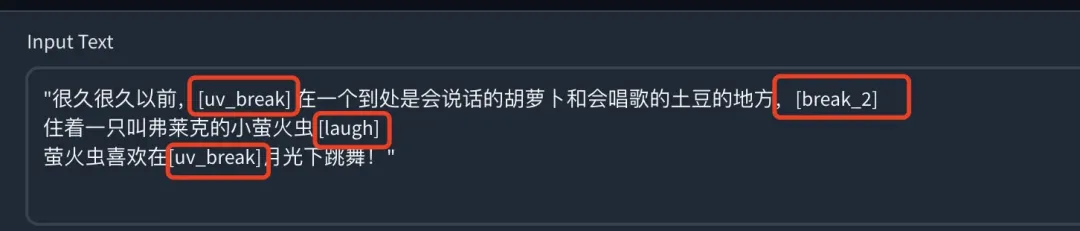

Text preprocessing: embedding control tags in text

At the text level, ChatTTS uses special tags as embedded commands. These tags let you control pauses, laughter, and other oral characteristics.

- Sentence-level control: insert tags such as [laugh_(0-2)] to add laughter, [break_(0-7)] to indicate pauses of varying lengths, and [oral_(0-9)] to control other oral features.

- Word-level control: place [uv_break] and [lbreak] inside a sentence for fine control of pauses.
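The sentence-level tags can also be assembled programmatically. The following is a minimal sketch rather than part of ChatTTS: the helper name `refine_prompt` and its argument names are hypothetical, and the allowed ranges are simply the ones listed above.

```python
# Hypothetical helper (not part of ChatTTS): builds a sentence-level tag
# string from the ranges listed in this article.
SENTENCE_TAG_RANGES = {
    "oral": range(0, 10),   # [oral_0] .. [oral_9]
    "laugh": range(0, 3),   # [laugh_0] .. [laugh_2]
    "break": range(0, 8),   # [break_0] .. [break_7]
}


def refine_prompt(oral=None, laugh=None, pause=None):
    """Return a tag string such as '[oral_2][laugh_0][break_4]'."""
    parts = []
    for name, level in (("oral", oral), ("laugh", laugh), ("break", pause)):
        if level is None:
            continue  # tag family not requested
        allowed = SENTENCE_TAG_RANGES[name]
        if level not in allowed:
            raise ValueError(
                f"{name}_{level} is outside {allowed.start}-{allowed.stop - 1}"
            )
        parts.append(f"[{name}_{level}]")
    return "".join(parts)


print(refine_prompt(oral=2, laugh=0, pause=4))
```

The resulting string can be used as the `prompt` value of `params_refine_text`, or placed directly in the text as in the examples in this article.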

For example, if you are creating a whimsical AI character for a children’s storytelling app, you can use ChatTTS to create text like this (English picture book):

"Once upon a time, [uv_break] in a land filled with talking carrots and singing potatoes, [break_2] lived a little firefly named Flicker. [laugh] Flicker loved to [uv_break] dance among the moonbeams!"

Actual generated effect: The English is completed in one go and sounds very good.

Chinese picture book:

"Once upon a time,[uv_break] in a land full of talking carrots and singing potatoes,[break_2] there lived a little firefly named Flack.[laugh] Fireflies love to dance in the[uv_break] moonlight!"

The actual generated effect: long paragraphs of text read very smoothly and there are also laughs.

By refining these markers, you can have ChatTTS generate a voice that pauses for dramatic effect, laughs warmly, and brings that fantasy world to life.
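When experimenting with tagged text like the picture-book example, it can help to see which tags a passage contains and what the plain text looks like without them. This is a small sketch using only Python's `re` module; the pattern covers just the tags mentioned in this article, which is an assumption, since the model may accept others.

```python
import re

# Matches the tags mentioned in this article: [uv_break], [lbreak], [laugh],
# and the numbered [laugh_N], [break_N], [oral_N] forms.
TAG_PATTERN = re.compile(r"\[(?:uv_break|lbreak|laugh|(?:laugh|break|oral)_\d)\]")


def list_tags(text):
    """Return the control tags in the order they appear."""
    return TAG_PATTERN.findall(text)


def strip_tags(text):
    """Remove control tags and collapse leftover whitespace."""
    return " ".join(TAG_PATTERN.sub("", text).split())


story = (
    "Once upon a time, [uv_break] in a land filled with talking carrots "
    "and singing potatoes, [break_2] lived a little firefly named Flicker. "
    "[laugh] Flicker loved to [uv_break] dance among the moonbeams!"
)

print(list_tags(story))
print(strip_tags(story))
```

The stripped text is also a convenient baseline for checking whether the model's text output dropped or repeated anything.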

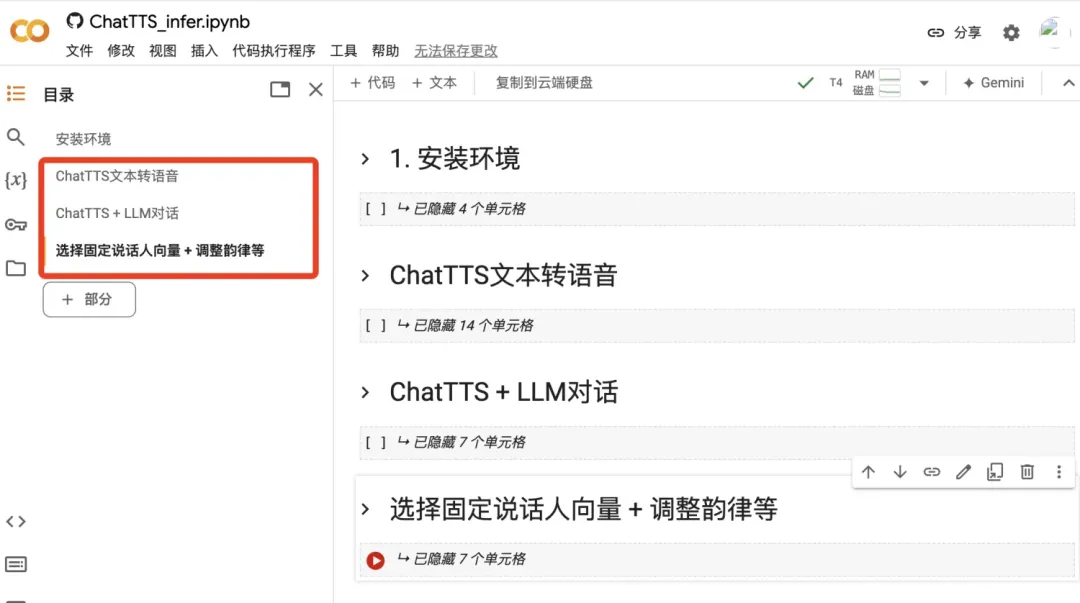

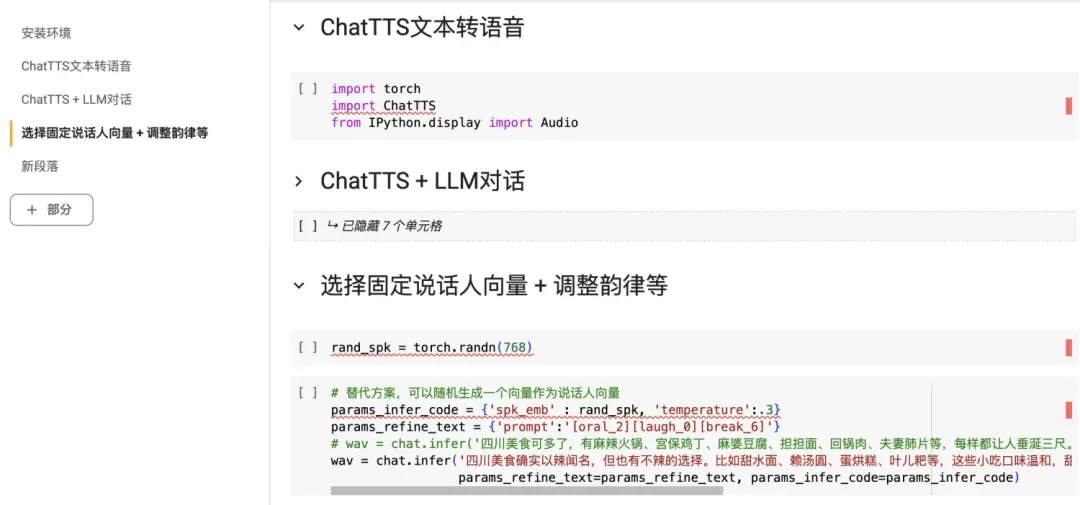

Inference parameters: fine-tuning output

During the audio generation process (inference), you can further refine the output via arguments passed to the chat.infer() function:

1️⃣ params_infer_code: This dictionary controls aspects such as speaker identity (spk_emb), voice variation (temperature), and decoding strategy (top_P, top_K).

2️⃣ params_refine_text: This dictionary is mainly used for sentence-level control, similar to how tags are used inside the text.

Combined, these two levels of control give fine-grained control over the expressiveness and customization of the synthesized speech.
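The two dictionaries can be passed together in one call. The sketch below assumes the `chat.infer()` keyword arguments described in this article; newer ChatTTS releases may use a different interface. The wrapper name `synthesize`, the default values, and the `EchoChat` stand-in are illustrative assumptions, included so the example runs without the model installed.

```python
def synthesize(chat, text, refine_prompt="[oral_2][laugh_0][break_4]",
               spk_emb=None, temperature=0.3, top_p=0.7, top_k=20):
    """Call chat.infer() with both parameter dictionaries."""
    # Sentence-level control via refinement tags.
    params_refine_text = {"prompt": refine_prompt}
    # Decoding controls: voice variation and sampling strategy.
    params_infer_code = {
        "temperature": temperature,
        "top_P": top_p,
        "top_K": top_k,
    }
    if spk_emb is not None:
        params_infer_code["spk_emb"] = spk_emb  # fixed speaker identity
    return chat.infer(
        text,
        params_refine_text=params_refine_text,
        params_infer_code=params_infer_code,
    )


class EchoChat:
    """Stand-in for a loaded ChatTTS model; it just echoes its arguments."""

    def infer(self, text, params_refine_text=None, params_infer_code=None):
        return {"text": text, "refine": params_refine_text, "code": params_infer_code}


result = synthesize(EchoChat(), "Hello [uv_break] world", temperature=0.5)
print(result["refine"])
print(result["code"])
```

With a real model, `EchoChat()` would be replaced by the loaded `chat` object, and the return value would be the generated audio rather than a dictionary.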

Note: There are many online demo sites. https://chattts.com/ is not an official website, but it lets you try the model directly without deploying anything.

The official entry point is here: https://github.com/2noise/ChatTTS

Readers with basic coding knowledge can try it themselves, or use the notebook that community members have set up on Colab:

https://colab.research.google.com/github/Kedreamix/ChatTTS/blob/main/ChatTTS_infer.ipynb

Other open source TTS models are also worth paying attention to

- Bark is a transformer-based TTS model from Suno AI. It can generate a variety of audio outputs, including speech, music, background noise, and simple sound effects, as well as non-verbal sounds such as laughter, sighs, and sobs. Its main strengths are tone and laughter effects.

- Project address: https://github.com/suno-ai/bark

- Piper TTS is a neural text-to-speech system optimized for low-power computers and hardware such as the Raspberry Pi. At its core, it is a fast, flexible, and easy-to-deploy solution that is particularly suited to resource-constrained devices.

- Project address: https://github.com/rhasspy/piper

- GradTTS is a representative of flexible-architecture models. It provides efficient, high-quality text-to-speech by combining a diffusion probabilistic model, score-based generative modeling, and monotonic alignment search. Its flexible framework and broad application prospects make it a notable milestone in the text-to-speech field.

- Project address: https://github.com/WelkinYang/GradTTS

- Matcha-TTS provides an efficient, natural, and easy-to-use non-autoregressive neural TTS solution suitable for a variety of application scenarios.

- Project address: https://github.com/shivammehta25/Matcha-TTS

Finally

Anyone who has worked with TTS knows that synthesized speech is often stiff, with obvious breaks between words and sentences, no emotion at all, and a robotic feel. And those are only some of the problems.

But ChatTTS brought me a big surprise. In terms of generation quality, its output feels very close to human speech, with laughter, crying, pauses, and even heavy breathing. Of course, it still has many shortcomings, such as long generation times, missing sentences, and sometimes failing to generate a complete sentence at all, but this will not stop it from moving forward.

The ChatTTS project has not only achieved new technical breakthroughs but also opened up new application possibilities for speech generation. Its sample code and documentation give developers and technology enthusiasts plenty of room to explore and experiment.

We expect the project to further improve sound quality and offer more speaker voices in the future, bringing more innovation to the field of real-time speech generation.

References:

https://github.com/2noise/ChatTTS

https://chattts.com/

https://ai.gopubby.com/chattts-an-incredible-open-source-tts-model-for-dialogues-7ed71d55944f

https://www.bilibili.com/video/BV1zn4y1o7iV/?vd_source=c51b77ea0e8c6261e9039c2c3d6b6410