Kunlun Wanwei today announced the open-source release of Skywork-MoE, a 200-billion-parameter-class sparse large model. It is extended from an intermediate checkpoint of the Skywork-13B model that Kunlun Wanwei open sourced earlier. The company bills it as the first open-source MoE large model at the 100-billion-parameter scale to fully apply and implement MoE Upcycling, and also the first at that scale to support inference on a single RTX 4090 server (8 RTX 4090 graphics cards).

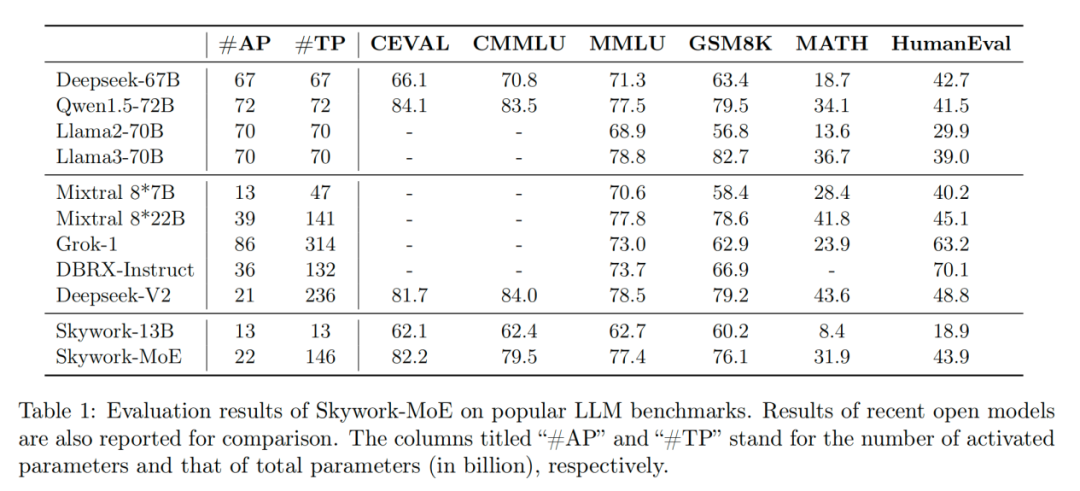

According to the announcement, the open-source Skywork-MoE model belongs to the Tiangong 3.0 R&D model series and is its medium-sized model (Skywork-MoE-Medium). The model has 146B total parameters and 22B activated parameters. It has 16 experts in total, each 13B in size, and 2 experts are activated at a time.
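To make the "16 experts, 2 activated" configuration concrete, here is a minimal sketch of generic top-2 expert routing. It assumes routing happens per token with a softmax router, as in typical MoE layers; the function name `top_k_route` and the router scores are hypothetical, and this is not taken from the Skywork-MoE code.

```python
import math

NUM_EXPERTS = 16  # from the article
TOP_K = 2         # from the article

def top_k_route(router_logits, k=TOP_K):
    """Return [(expert_index, weight), ...] for the k highest-scoring experts."""
    # Numerically stable softmax over all experts.
    peak = max(router_logits)
    exps = [math.exp(x - peak) for x in router_logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Keep the k experts with the highest probability.
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    # Renormalize so the selected experts' weights sum to 1.
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]

# Hypothetical router scores for a single token (illustrative values only).
logits = [0.0] * NUM_EXPERTS
logits[3] = 2.0
logits[11] = 1.0

routing = top_k_route(logits)
print(routing)
```

Only the two selected experts run for that token, which is why the activated parameter count (22B) is far below the total (146B).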

The Tiangong 3.0 series also includes two other trained MoE models, 75B (Skywork-MoE-Small) and 400B (Skywork-MoE-Large), neither of which is part of this open-source release.

According to official tests, at the same activated parameter size of about 20B (which determines the inference compute), Skywork-MoE's capability is close to that of a 70B dense model, giving a nearly 3x reduction in inference cost. At the same time, the total parameter size of Skywork-MoE is about 1/3 smaller than that of DeepSeekV2, so it achieves similar capabilities with fewer parameters.
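The "nearly 3 times" figure can be sanity-checked with simple arithmetic. The sketch below assumes that per-token inference compute scales roughly linearly with the number of activated parameters, which is a simplification. The DeepSeekV2 total of 236B is a publicly reported figure and is not stated in this article.

```python
def cost_ratio(dense_params_b: float, active_params_b: float) -> float:
    """Approximate compute ratio, assuming cost is proportional to active parameters."""
    return dense_params_b / active_params_b

# 70B dense model versus the 22B activated parameters quoted for Skywork-MoE.
ratio_22 = cost_ratio(70, 22)
# Same comparison using the rounded 20B figure from the official tests.
ratio_20 = cost_ratio(70, 20)

# Total-parameter comparison: 146B (article) vs 236B (assumed DeepSeekV2 total).
size_reduction = 1 - 146 / 236

print(f"70B vs 22B active: {ratio_22:.2f}x")
print(f"70B vs 20B active: {ratio_20:.2f}x")
print(f"Total parameters smaller by: {size_reduction:.0%}")
```

Under these assumptions the ratio falls between roughly 3.2x and 3.5x, and the total parameter count is a little over a third smaller, in line with the claims above.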

Skywork-MoE's model weights and technical report are fully open source and free for commercial use, with no application required. The links are as follows:

Model weights download:

https://huggingface.co/Skywork/Skywork-MoE-base

https://huggingface.co/Skywork/Skywork-MoE-Base-FP8

Model open source repository: https://github.com/SkyworkAI/Skywork-MoE

Model technical report: https://github.com/SkyworkAI/Skywork-MoE/blob/main/skywork-moe-tech-report.pdf

Model inference code (supports 8-bit quantized loading and inference on a server with 8 RTX 4090 cards): https://github.com/SkyworkAI/vllm
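A rough back-of-the-envelope check shows why 8-bit quantization matters for an 8 x RTX 4090 server. The sketch assumes 24 GB of memory per RTX 4090 (a hardware spec, not stated in the article), counts only the weights, and ignores activations, KV cache, and framework overhead, so real headroom is smaller than the numbers suggest.

```python
TOTAL_PARAMS = 146e9   # total parameters, from the article
NUM_GPUS = 8           # from the article
GPU_MEMORY_GB = 24     # assumption: RTX 4090 memory, not stated in the article

def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed for the weights alone, in decimal gigabytes."""
    return num_params * bits_per_param / 8 / 1e9

total_vram_gb = NUM_GPUS * GPU_MEMORY_GB

for bits in (16, 8):
    need = weight_memory_gb(TOTAL_PARAMS, bits)
    verdict = "fits" if need < total_vram_gb else "does not fit"
    print(f"{bits}-bit weights: {need:.0f} GB vs {total_vram_gb} GB available -> {verdict}")
```

At 16 bits the weights alone exceed the combined memory of the eight cards, while at 8 bits they fit with some room left for the runtime.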